SQL Azure Blob Auditing Basic Power BI Dashboard

Published Mar 13 2019 06:42 PM

First published on MSDN on May 26, 2017

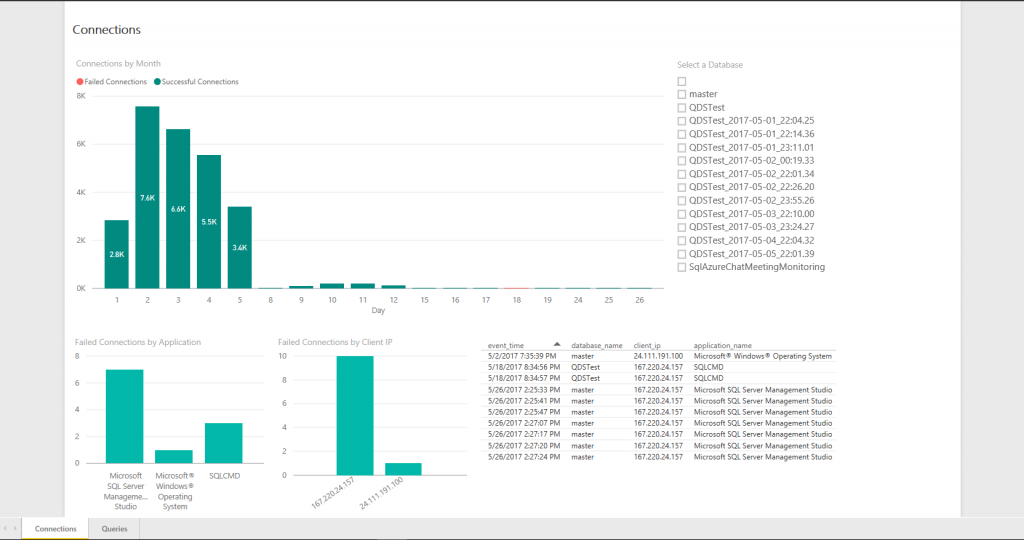

We have created a simple Power BI template for viewing Blob Auditing data.

You can download the template here.

This template is based on the query:

[code language="sql"]SELECT * FROM sys.fn_get_audit_file('https://<storageaccountname>.blob.core.windows.net/sqldbauditlogs/<servername>', default, default);[/code]

This function lets you read and process your blob auditing files directly from T-SQL.

To use the Power BI report, first download Power BI Desktop: https://powerbi.microsoft.com/en-us/desktop/

Once Power BI Desktop is installed, open the template file from above. You will first be prompted to fill in the parameters: enter your SQL Azure server name (without .database.windows.net) and your auditing storage account name.

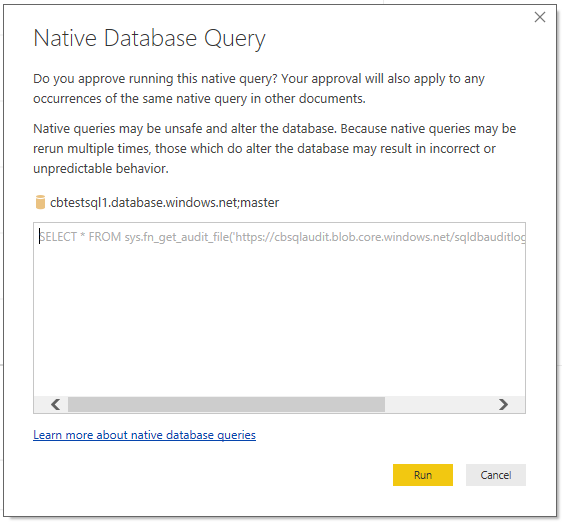

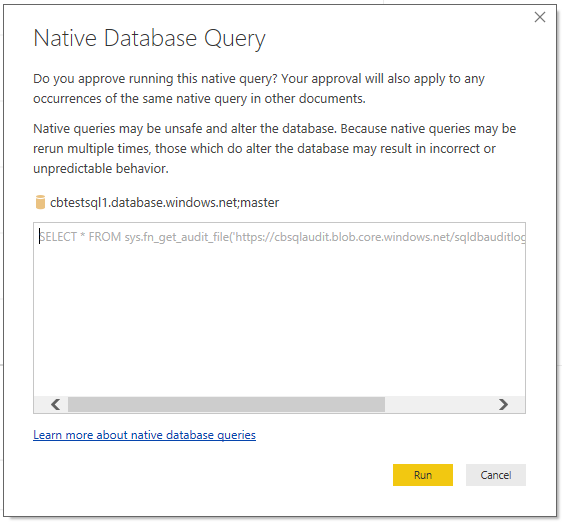
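As a rough illustration of how the two template parameters relate to the query shown earlier, the sketch below builds the same `sys.fn_get_audit_file` statement from a server name and a storage account name. The function names and the placeholder values `myserver` and `myauditstorage` are hypothetical and are not part of the template; the container name `sqldbauditlogs` is taken from the query in this post and may differ if your audit logs are stored elsewhere.

```python
# Minimal sketch: build the audit query from the two template parameters.
# Helper names and example values are illustrative assumptions.

AUDIT_CONTAINER = "sqldbauditlogs"  # container name used by the query in this post
SERVER_SUFFIX = ".database.windows.net"


def short_server_name(server_name: str) -> str:
    """Return the server name without the .database.windows.net suffix."""
    name = server_name.strip()
    if name.lower().endswith(SERVER_SUFFIX):
        name = name[: -len(SERVER_SUFFIX)]
    return name


def build_audit_query(server_name: str, storage_account: str) -> str:
    """Build the sys.fn_get_audit_file query for the given server and storage account."""
    url = (
        f"https://{storage_account.strip()}.blob.core.windows.net/"
        f"{AUDIT_CONTAINER}/{short_server_name(server_name)}"
    )
    return f"SELECT * FROM sys.fn_get_audit_file('{url}', default, default);"


if __name__ == "__main__":
    # Placeholder values; substitute your own server and storage account names.
    print(build_audit_query("myserver.database.windows.net", "myauditstorage"))
```

Stripping the suffix in code mirrors the instruction to enter the server name without .database.windows.net, so either form of the name produces the same URL.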

Next, you will need to grant permission to run the query against your database. Click Run to continue.

Finally, enter your database admin credentials (Azure Active Directory authentication is not currently supported). Make sure to select Database on the left-hand side before entering your credentials and clicking Connect.

Now you can use the report through Power BI Desktop. If you would like to use it through the Power BI service and enable automatic refresh, a few more steps are required. First, click the Publish button on the Home tab. Save the report; the name you choose is the name that will be published to Power BI.

Select the workspace you would like to publish to and click Select.

You can now click the link provided to open the report online.

To enable scheduled refresh of the data, go to Datasets and click the Scheduled Refresh button for the dataset.

You will see an error stating that your credentials are invalid. Click the Edit credentials link.

Change the authentication mode to Basic, enter your credentials, and then click the Sign in button.

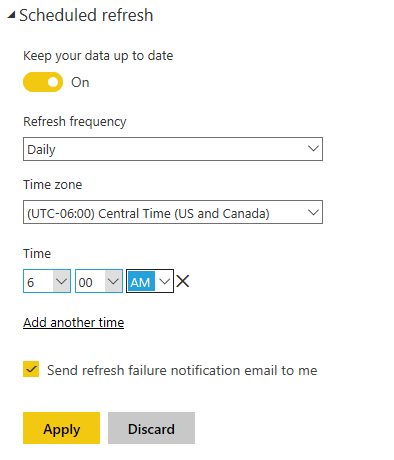

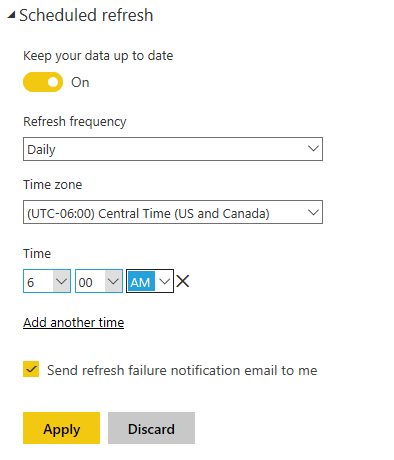

Expand the Scheduled refresh section and toggle Keep your data up to date to On. Click the Add another time link and select a time for the refresh, then click the Apply button.

The report is now set up to refresh its data automatically.
