Code Metrics – Maintainability Index

SKU: Premium, Ultimate

Versions: 2008, 2010

Code: vstipTool0134

At long last it is time to look at the final metric from the code metrics (see vstipTool0129, “Code Metrics - Calculating Metrics”): maintainability index. As with the other metrics, it is best to start with a definition, this one from the main MSDN documentation:

“[Maintainability Index c]alculates an index value between 0 and 100 that represents the relative ease of maintaining the code. A high value means better maintainability. Color coded ratings can be used to quickly identify trouble spots in your code. A green rating is between 20 and 100 and indicates that the code has good maintainability. A yellow rating is between 10 and 19 and indicates that the code is moderately maintainable. A red rating is a rating between 0 and 9 and indicates low maintainability.”

https://msdn.microsoft.com/en-us/library/bb385914.aspx

(emphasis added)

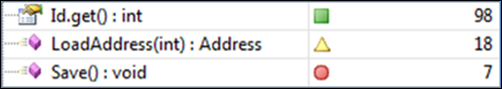

Unlike other metrics, there is virtually no ambiguity in reading this value. David Kean on the code analysis team blog provides a view into the values, icons, and colors you could see:

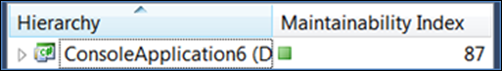
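As a minimal sketch, the ranges quoted from MSDN above can be written as a small lookup. The function name `maintainability_rating` is hypothetical and is not part of Visual Studio; only the numeric ranges come from the documentation.

```python
def maintainability_rating(index: int) -> str:
    """Map a maintainability index (0-100) to the color rating quoted from MSDN."""
    if not 0 <= index <= 100:
        raise ValueError("maintainability index must be between 0 and 100")
    if index >= 20:
        return "green"   # 20-100: good maintainability
    if index >= 10:
        return "yellow"  # 10-19: moderately maintainable
    return "red"         # 0-9: low maintainability


for value in (100, 20, 19, 10, 9, 0):
    print(value, maintainability_rating(value))
```

Note how wide the green band is: anything from 20 up is rated the same, which is one reason the color alone can hide trouble.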

Using this information you can easily see areas of code that may need attention. To show what this looks like in actual use, I took some sample code and created extreme conditions. If we just look at maintainability index, everything looks fine:

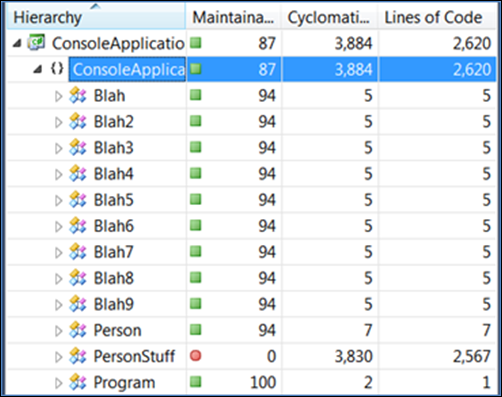

Be careful! This is not the whole picture. Maintainability index is actually a calculation using three metrics. Two of the metrics used are lines of code (see vstipTool0130, “Code Metrics - Lines of Code”) and cyclomatic complexity (see vstipTool0131, “Code Metrics - Cyclomatic Complexity”). Without looking at these you will miss something. Let’s add them to our code metrics:

We have a problem. The lines of code don’t really seem excessive, but the cyclomatic complexity is very, very high, and that is a red flag. We need to dig into the hierarchy to see what is going on. I’ll go two levels down:

Now we see a major problem. Just looking at the maintainability index alone wouldn’t have revealed the issue at a high level but paying attention to the cyclomatic complexity definitely revealed a code smell (https://en.wikipedia.org/wiki/Code_smell) that needed further investigation. At this point we would take action to correct for high cyclomatic complexity.
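To make the effect concrete, here is a rough sketch of how one bad method can hide at a higher level. It assumes that the maintainability index is averaged across members while cyclomatic complexity is summed; that roll-up behavior is an assumption for illustration, and every name and number below is made up.

```python
from statistics import mean

# Hypothetical per-method metrics: nine trivial methods and one tangled one.
methods = [
    {"name": f"Simple{i}", "maintainability_index": 95, "cyclomatic_complexity": 1}
    for i in range(1, 10)
]
methods.append(
    {"name": "Tangled", "maintainability_index": 12, "cyclomatic_complexity": 80}
)


def roll_up(members):
    """Assumed roll-up: average the index, sum the complexity."""
    return {
        "maintainability_index": mean(m["maintainability_index"] for m in members),
        "cyclomatic_complexity": sum(m["cyclomatic_complexity"] for m in members),
    }


type_level = roll_up(methods)
worst = min(methods, key=lambda m: m["maintainability_index"])

print(type_level)     # the averaged index still sits well inside the green range
print(worst["name"])  # drilling down exposes the method that needs attention
```

Under these assumptions the averaged index stays comfortably green while the summed complexity stands out, which mirrors what the screenshots show.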

The moral of this story is to pay attention to your metrics. At this point you should be well armed to use maintainability index for your code. If you want to get into the details then read on…

Digging Deeper

When dealing with metrics the details can be important. It’s good to see how they are calculated. Fortunately, the Code Analysis Team blog has provided the calculation used for maintainability index:

Maintainability Index = MAX(0,(171 - 5.2 * log(Halstead Volume) - 0.23 * (Cyclomatic Complexity) - 16.2 * log(Lines of Code))*100 / 171)
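The formula can be tried out with a few lines of code. This is only a sketch of the arithmetic, not Visual Studio's implementation: it assumes `log` means the natural logarithm, it does no rounding, and the function name `maintainability_index` is hypothetical.

```python
import math


def maintainability_index(halstead_volume: float,
                          cyclomatic_complexity: int,
                          lines_of_code: int) -> float:
    """Evaluate the formula above; log is ASSUMED to be the natural logarithm."""
    if halstead_volume <= 0 or lines_of_code <= 0:
        raise ValueError("Halstead volume and lines of code must be positive")
    raw = (171
           - 5.2 * math.log(halstead_volume)
           - 0.23 * cyclomatic_complexity
           - 16.2 * math.log(lines_of_code))
    # Rescale from the 0-171 range to 0-100 and clamp negative results to 0.
    return max(0.0, raw * 100 / 171)


# Hypothetical inputs: the same method measured before and after it grows.
print(maintainability_index(halstead_volume=250.0, cyclomatic_complexity=3, lines_of_code=12))
print(maintainability_index(halstead_volume=9000.0, cyclomatic_complexity=45, lines_of_code=300))
```

Because two of the three terms are logarithmic, the index drops quickly for small members and then flattens out, so very different pieces of code can land on similar values.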

This is just one of many ways to calculate maintainability index (https://www.virtualmachinery.com/sidebar4.htm) and there are calculation variants that take into account additional items such as comments. I’ve already covered lines of code (see vstipTool0130, “Code Metrics - Lines of Code”) and cyclomatic complexity (see vstipTool0131, “Code Metrics - Cyclomatic Complexity”). Halstead Volume, on the other hand, is new to our discussions.

The Halstead metrics were created in 1977 by Maurice Halstead to provide empirical methods for measuring software development effort. He sought to identify and measure software properties as well as show relationships between them. (https://en.wikipedia.org/wiki/Halstead_complexity_measures) You can find a great description of how to calculate Halstead metrics at https://www.virtualmachinery.com/sidebar2.htm.

Halstead Volume can be defined as “[…] the size of the implementation of an algorithm. The computation […] is based on the number of operations performed and operands handled in the algorithm. Therefore [it] is less sensitive to code layout than the lines-of-code measures.”

https://www.verifysoft.com/en_halstead_metrics.html
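For reference, the standard textbook definition is Volume = N * log2(n), where N is the total number of operators and operands and n is the number of distinct operators and operands. A minimal sketch follows; how Visual Studio actually counts operators and operands is not covered here, and the function name `halstead_volume` is hypothetical.

```python
import math


def halstead_volume(distinct_operators: int, distinct_operands: int,
                    total_operators: int, total_operands: int) -> float:
    """Textbook Halstead volume: program length times log2 of the vocabulary."""
    vocabulary = distinct_operators + distinct_operands  # n
    length = total_operators + total_operands            # N
    if vocabulary <= 0:
        raise ValueError("at least one operator or operand is required")
    return length * math.log2(vocabulary)


# Hypothetical counts for a tiny method: 2 distinct operators used 4 times,
# 2 distinct operands used 4 times.
print(halstead_volume(2, 2, 4, 4))
```

Since only counts of operators and operands go in, reformatting the same code across more or fewer lines leaves the volume unchanged, which is the point the quote above makes.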

Code Analysis

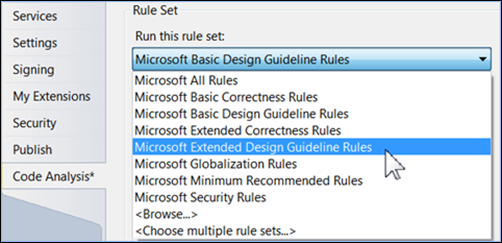

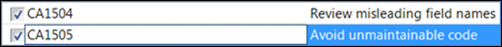

When using code analysis, the Extended Design Guideline rule set contains a maintainability area:

Inside the maintainability area is a rule for maintainability index:

This rule is triggered when the maintainability index is low. You can learn more about the rule here:

https://msdn.microsoft.com/en-us/library/bb386043.aspx

Unfortunately, the documentation doesn’t talk about the thresholds set for this rule, but I ran my own experiments and found that at a maintainability index of 19 or below this rule will issue a warning by default.