Comparing the Performance of .NET Serializers

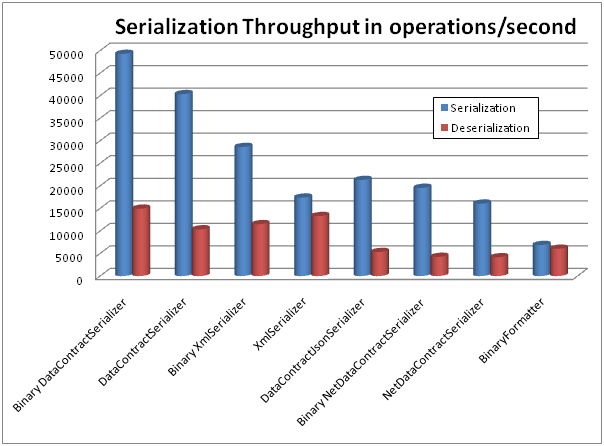

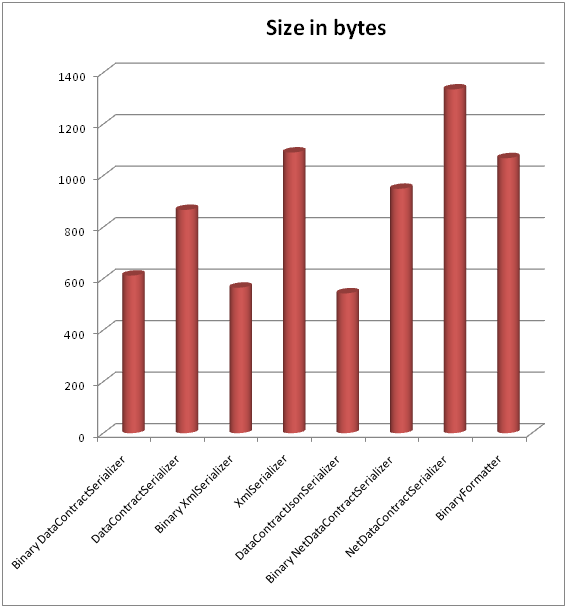

The .NET framework comes with a variety of different serializers. Hopefully, my overview of these serializers will have provided some insight into the differences between them and the various advantages and disadvantages of using different serializers. But there are not only great differences in functionality between the serializers, but there are also vast differences in performance. This is an attempt to provide a rough idea of how serializers stack up against each other as far as performance (speed) is concerned and as far as the size of the serialized instances.

Disclaimer

Serialization performance can vary a lot between different machines, and depends heavily on the object being serialized, the serializer features being used, and the stream or writer to which the serialized instance is being written to. This is only meant to give you a glimpse into how fast or slow serializers might be. If you’re concerned with serialization performance in your scenarios, the best thing you can do is run your own performance tests with the types and functionality you care about.

Setup

I decided to serialize out and deserialize the following object:

object o = new Library()

{

Name = "Library Of Congress",

Books = new List<Book>() { new Book() { Author = new Person(){ FirstName = "Barack", LastName = "Obama"}, Title = "Dreams from My Father: A Story of Race and Inheritance", Year = 1995},

new Book() { Author = new Person(){ FirstName = "Lewis", LastName = "Caroll"}, Title = "Alice's Adventures in Wonderland", Year = 1865},

new Book() { Author = new Person(){ FirstName = "Kurt", LastName = "Vonnegut"}, Title = "Welcome to the Monkey House", Year = 1968},

new Book() { Author = new Person(){ FirstName = "Orson", MiddleName = "Scott", LastName = "Card"}, Title = "Ender's Game", Year = 1985}}

};

Using each of the serializers. This object contains lists, strings, ints, and several classes. It is meant to represent a “typical” object. I used a MemoryStream to write to and read from for all five serializers, and then used XmlDictionaryWriter/Reader.CreateBinaryWriter/Reader to create binary writers for testing the Binary XML performance for DataContractSerializer, NetDataContractSerializer, and XmlSerializer. All of these serializers were used without any particular switches, and no customizations were made to the format of the serialized string. Only parameterless DataContract, DataMember, and Serializable attributes were added. Serialization and deserialization throughputs were measured directly, while roundtrip throughput was calculated using the formula: roundtrip throughput = 1/((1/serialization throughput) + (1/deserialization throughput)).

Results

There are several things we can infer from the data:

· The serializers seem to perform in roughly the following order: DataContractSerializer, XmlSerializer, DataContractJsonSerializer, NetDataContractSerializer, BinaryFormatter

· Using binary XML writers and readers seems to improve speed by ~5-40% and decreases message size by ~30-50% depending on the serializer

· DataContractJsonSerializer produces the smallest serialized instances, followed by DataContractSerializer, BinaryFormatter, XmlSerializer, and NetDataContractSerializer

Overall, DataContractSerializer comes out as the winner of this perf-off with the fastest speed and the second smallest serialized instance size.