Using Speech Synthesis in a WPF Application

WinFX contains the System.Speech.Synthesis and System.Speech.Recognition namespaces, which make it easy for developers to add speech synthesis or speech recognition to a Windows application. The following diagram illustrates how both managed and unmanaged Windows applications interact with the underlying speech synthesis and speech recognition engines:

Application interaction with speech engines

Managed applications access the speech synthesis engine directly through the classes in System.Speech.Synthesis. Unmanaged applications use the Speech API (SAPI) instead. For more info on the breadth of features of the WinFX speech technology, see the MSDN article Exploring New Speech Recognition and Synthesis APIs in Windows Vista. The rest of this blog posting looks at how to enable speech synthesis in a WPF application.
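If you want to try the API outside of WPF first, here is a minimal sketch. It assumes the released .NET Framework 3.0 version of System.Speech (Beta 2 builds may differ slightly) and a project reference to System.Speech.dll; on newer .NET versions, the System.Speech NuGet package provides the same namespace on Windows. The class name TtsToWave is made up for this example. It renders text to a .wav file, so you can check the output even on a machine without speakers:

```csharp
using System;
using System.IO;
using System.Speech.Synthesis;

// Hypothetical helper class for illustration; only SpeechSynthesizer and its
// methods come from System.Speech.Synthesis.
public static class TtsToWave
{
    // Speaks the given text into a .wav file instead of the speakers.
    public static void SpeakToFile(string text, string wavPath)
    {
        // SpeechSynthesizer implements IDisposable, so release the engine when done.
        using (var synth = new SpeechSynthesizer())
        {
            synth.SetOutputToWaveFile(wavPath);
            synth.Speak(text); // Speak blocks until all of the text has been rendered.
        }
    }
}
```

Calling synth.SetOutputToDefaultAudioDevice() instead of SetOutputToWaveFile sends the audio to the speakers. A new SpeechSynthesizer does that by default.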

Enabling speech synthesis

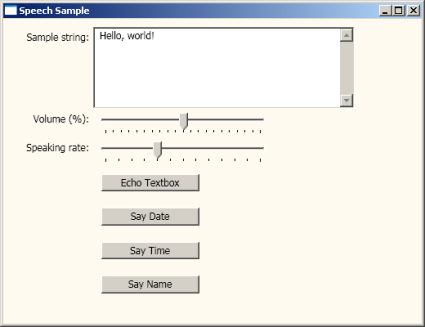

The Windows SDK Beta 2 contains a sample WPF application, Speech Sample, which provides a simple example of how to enable speech synthesis. BTW, speech synthesis is often referred to as TTS, or text-to-speech. When you build and run the sample application, the following window appears:

Click the Echo Textbox button to hear the displayed sample string, “Hello, world”. Try entering other text and hearing what it sounds like.

You can change the rate of speech by moving the slider control labeled Speaking rate. Depending on the direction, this slows down or speeds up the playback of speech. You can also change the volume by moving the slider control labeled Volume.

Clicking the Say Date and Say Time buttons causes the current date and time to be spoken. Clicking the Say Name button causes the name of the current speech persona to be spoken. For Windows XP, the default speech persona is Microsoft Sam. For Vista, you can choose Sam, Lili, or Anna as the default speech persona.
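Which personas are available depends on the machine, so code that switches voices should check first. The following sketch selects a voice by name only if that voice is installed and enabled. The PersonaSelector class is hypothetical. GetInstalledVoices and SelectVoice are SpeechSynthesizer methods in the released .NET Framework 3.0 API:

```csharp
using System;
using System.Linq;
using System.Speech.Synthesis;

// Hypothetical helper: switch to a named persona only when it exists here.
public static class PersonaSelector
{
    public static bool TrySelectVoice(SpeechSynthesizer synth, string voiceName)
    {
        // Look for an enabled installed voice whose name matches (ignoring case).
        InstalledVoice match = synth.GetInstalledVoices()
            .FirstOrDefault(v => v.Enabled &&
                string.Equals(v.VoiceInfo.Name, voiceName, StringComparison.OrdinalIgnoreCase));

        if (match == null)
        {
            return false; // keep the current voice; SelectVoice would throw here
        }

        // Pass the exact installed name so the lookup cannot fail on casing.
        synth.SelectVoice(match.VoiceInfo.Name);
        return true;
    }
}
```

For example, TrySelectVoice(synth, "Microsoft Anna") returns false on a machine where Anna is not installed, and the current voice stays in place.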

A closer look at the XAML file

The user interface for the sample is pretty minimal. A TextBox control is defined in the MyWindow.xaml file to provide the text that is spoken:

```xml
<!-- Text to display -->
<Label Grid.Column="0" Grid.Row="0">Sample string:</Label>
<TextBox
    Grid.Column="1" Grid.Row="0"
    HorizontalAlignment="Left"
    TextWrapping="Wrap"
    AcceptsReturn="True"
    VerticalScrollBarVisibility="Visible"
    FontSize="12"
    Height="100"
    Width="400"
    Name="TextToDisplay">Hello, world!</TextBox>
```

A Slider control is used to control the volume of the spoken text:

```xml
<!-- Volume property: 0 to 100 -->
<Label Grid.Column="0" Grid.Row="1">Volume (%):</Label>
<Slider Name="VolumeSlider" Grid.Column="1" Grid.Row="1" Value="50" Minimum="0" Maximum="100" TickFrequency="5" ValueChanged="VolumeChanged"/>
```

A second Slider control is used to control the rate of speaking for the spoken text:

```xml
<!-- Rate property: -10 to 10 (adjust to reasonable range of -5 to 7) -->
<Label Grid.Column="0" Grid.Row="2">Speaking rate:</Label>
<Slider Name="RateSlider" Grid.Column="1" Grid.Row="2" Value="-1" Minimum="-5" Maximum="7" TickFrequency="1" ValueChanged="RateChanged"/>
```
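The sliders already keep their values inside the ranges noted in the XAML comments. If you widen Minimum or Maximum, though, the synthesizer rejects values outside Volume 0 to 100 and Rate -10 to 10. The sketch below is a hypothetical SpeechRanges class, not part of the sample. It rounds a slider's double value and clamps it before the value reaches the synthesizer:

```csharp
using System;

// Hypothetical helpers that turn a Slider's double Value into the integer
// ranges the synthesizer accepts (per the XAML comments above).
public static class SpeechRanges
{
    public static int ToVolume(double sliderValue)
    {
        return Clamp(sliderValue, 0, 100);
    }

    public static int ToRate(double sliderValue)
    {
        return Clamp(sliderValue, -10, 10);
    }

    // Rounds to the nearest integer (halves away from zero), then pins the result to [min, max].
    private static int Clamp(double value, int min, int max)
    {
        int rounded = (int)Math.Round(value, MidpointRounding.AwayFromZero);
        return Math.Max(min, Math.Min(max, rounded));
    }
}
```

In the code-behind you could then write, for example, _speechSynthesizer.Rate = SpeechRanges.ToRate(RateSlider.Value). Because the helper rounds instead of truncating, a slider resting at 2.6 gives rate 3 rather than 2.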

Four Button controls start playback. Echo Textbox speaks the text entered in the TextBox, and the other three speak the current date, the current time, and the name of the speech persona:

```xml
<!-- Buttons -->
<Button Grid.Column="1" Grid.Row="3" Click="ButtonEchoOnClick">Echo Textbox</Button>
<Button Grid.Column="1" Grid.Row="4" Click="ButtonDateOnClick">Say Date</Button>
<Button Grid.Column="1" Grid.Row="5" Click="ButtonTimeOnClick">Say Time</Button>
<Button Grid.Column="1" Grid.Row="6" Click="ButtonNameOnClick">Say Name</Button>
```

A closer look at the C# code-behind file

The C# code-behind file, MyWindow.xaml.cs, imports the following namespaces, including the speech synthesis namespace, System.Speech.Synthesis:

```csharp
using System;
using System.Windows;
using System.Windows.Controls;
using System.Speech.Synthesis;
```

The MyWindow class creates an instance of the SpeechSynthesizer class, which is required to access the speech synthesis engine:

```csharp
public partial class MyWindow : Window
{
    // One synthesizer per window, shared by all of the button and slider handlers.
    SpeechSynthesizer _speechSynthesizer = new SpeechSynthesizer();
```

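To convert the text in the TextBox to speech, call the SpeakAsync method of the SpeechSynthesizer object. SpeakAsync returns immediately and speaks in the background, so the window stays responsive while the text is read. Really...that's all there is!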

```csharp
void ButtonEchoOnClick(object sender, RoutedEventArgs args)
{
    // Speak whatever is currently in the TextBox without blocking the UI thread.
    _speechSynthesizer.SpeakAsync(TextToDisplay.Text);
}
```
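One thing to be aware of is that SpeakAsync queues prompts, so clicking Echo Textbox several times in a row makes the synthesizer read the text several times. The sketch below is a hypothetical EchoSpeaker class. It cancels anything still pending with SpeakAsyncCancelAll before speaking again, and it reports completion through the SpeakCompleted event. It also disposes the synthesizer, because SpeechSynthesizer implements IDisposable:

```csharp
using System;
using System.Speech.Synthesis;

// Hypothetical wrapper around the sample's SpeakAsync call.
public sealed class EchoSpeaker : IDisposable
{
    private readonly SpeechSynthesizer _synth = new SpeechSynthesizer();

    // Raised when a prompt finishes or is cancelled (check e.Cancelled).
    public event EventHandler<SpeakCompletedEventArgs> Finished;

    public EchoSpeaker()
    {
        _synth.SpeakCompleted += (s, e) =>
        {
            var handler = Finished;
            if (handler != null) handler(this, e);
        };
    }

    public Prompt Echo(string text)
    {
        _synth.SpeakAsyncCancelAll();   // drop anything still queued or playing
        return _synth.SpeakAsync(text); // returns at once; audio plays in the background
    }

    public void Dispose()
    {
        _synth.SpeakAsyncCancelAll();
        _synth.Dispose();
    }
}
```

A window could hold one EchoSpeaker and dispose it when the window closes, for example from an override of OnClosed.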

Creating speech output for the current date and time is just a matter of converting the DateTime object to the desired strings, and then calling the SpeakAsync method:

```csharp
void ButtonDateOnClick(object sender, RoutedEventArgs args)
{
    _speechSynthesizer.SpeakAsync("Today is " + DateTime.Now.ToShortDateString());
}

void ButtonTimeOnClick(object sender, RoutedEventArgs args)
{
    _speechSynthesizer.SpeakAsync("The time is " + DateTime.Now.ToShortTimeString());
}
```
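A short date string such as "6/13/2006" may sound less natural than a date with the day and month spelled out. The following sketch uses a hypothetical SpokenDateTime helper to build the two sentences with explicit format strings. InvariantCulture keeps the output predictable for this example. A real application would probably use CultureInfo.CurrentCulture so the wording matches the user's language and voice:

```csharp
using System;
using System.Globalization;

// Hypothetical helper that builds the sentences for the Say Date and Say Time buttons.
public static class SpokenDateTime
{
    // e.g. "Today is Tuesday, June 13, 2006"
    public static string DateSentence(DateTime when)
    {
        return "Today is " + when.ToString("dddd, MMMM d, yyyy", CultureInfo.InvariantCulture);
    }

    // e.g. "The time is 3:05 PM"
    public static string TimeSentence(DateTime when)
    {
        return "The time is " + when.ToString("h:mm tt", CultureInfo.InvariantCulture);
    }
}
```

The button handlers would then call, for example, _speechSynthesizer.SpeakAsync(SpokenDateTime.DateSentence(DateTime.Now)).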

You can also identify the currently selected speech persona by reading the synthesizer's Voice property, a VoiceInfo object, and then its Name property:

```csharp
void ButtonNameOnClick(object sender, RoutedEventArgs args)
{
    _speechSynthesizer.SpeakAsync("My name is " + _speechSynthesizer.Voice.Name);
}
```
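To let users choose a persona instead of just hearing the current one, call GetInstalledVoices, which returns every voice installed on the machine. The following sketch uses a hypothetical VoiceCatalog class to build one descriptive line per voice. You could bind the resulting list to a ComboBox:

```csharp
using System.Collections.Generic;
using System.Speech.Synthesis;

// Hypothetical helper that describes each installed voice.
public static class VoiceCatalog
{
    // Returns lines such as "Name (culture, gender, age)" for every installed voice.
    public static List<string> Describe(SpeechSynthesizer synth)
    {
        var lines = new List<string>();
        foreach (InstalledVoice voice in synth.GetInstalledVoices())
        {
            VoiceInfo info = voice.VoiceInfo;
            string line = string.Format("{0} ({1}, {2}, {3})",
                info.Name, info.Culture, info.Gender, info.Age);

            // Disabled voices are listed but cannot be selected until re-enabled.
            if (!voice.Enabled)
            {
                line += " [disabled]";
            }
            lines.Add(line);
        }
        return lines;
    }
}
```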

Recall that two Slider controls were defined in XAML to control the volume and playback rate. The two event handlers below set the Volume and Rate properties for the SpeechSynthesizer object:

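```csharp
// Slider.ValueChanged supplies the new value in RoutedPropertyChangedEventArgs<double>.
void VolumeChanged(object sender, RoutedPropertyChangedEventArgs<double> args)
{
    // Volume accepts 0 to 100, matching the slider's Minimum and Maximum.
    _speechSynthesizer.Volume = (int)args.NewValue;
}

void RateChanged(object sender, RoutedPropertyChangedEventArgs<double> args)
{
    // Rate accepts -10 to 10; the slider limits it to -5 to 7.
    _speechSynthesizer.Rate = (int)args.NewValue;
}
```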
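The sliders change Rate and Volume for everything the synthesizer says. To vary the rate for only part of a single utterance, you can use a PromptBuilder to wrap some of the text in a PromptStyle. PromptBuilder, PromptStyle, and PromptRate are part of System.Speech.Synthesis. The StyledPrompts helper and its sentences are made up for this sketch:

```csharp
using System.Speech.Synthesis;

// Hypothetical helper showing a per-utterance rate change.
public static class StyledPrompts
{
    public static PromptBuilder SlowMiddle()
    {
        var builder = new PromptBuilder();
        builder.AppendText("Please note:");

        // Everything between StartStyle and EndStyle is spoken slowly.
        builder.StartStyle(new PromptStyle(PromptRate.Slow));
        builder.AppendText("this part is spoken slowly,");
        builder.EndStyle();

        builder.AppendText("and this part at the synthesizer's current rate.");
        return builder;
    }
}
```

Passing the result to _speechSynthesizer.SpeakAsync(StyledPrompts.SlowMiddle()) speaks it. Calling ToXml() on the builder shows the SSML that it generates.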

Creating a text-to-speech reading application

With a little more effort, you could create a text-to-speech reading application that reads ASCII text files aloud. You would probably want to add functionality that lets you pause, play, and go back a certain interval.
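Here is one way such a reader could be sketched, assuming plain-text files whose paragraphs are separated by blank lines. TextFileReader and its members are hypothetical. SpeakAsync, SpeakAsyncCancelAll, Pause, Resume, State, and the SpeakStarted event are part of SpeechSynthesizer. SpeechSynthesizer has no seek or rewind method, so "going back" here means jumping back a number of paragraphs and re-queuing them:

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Speech.Synthesis;

// Hypothetical paragraph-by-paragraph reader with play, pause, resume, and go back.
public sealed class TextFileReader : IDisposable
{
    private readonly SpeechSynthesizer _synth = new SpeechSynthesizer();
    private readonly Dictionary<Prompt, int> _promptIndex = new Dictionary<Prompt, int>();
    private readonly List<string> _paragraphs;
    private volatile int _current; // index of the paragraph being spoken

    public TextFileReader(string path)
    {
        _paragraphs = SplitParagraphs(File.ReadAllText(path));

        // SpeakStarted fires as each queued prompt begins; use it to track position.
        _synth.SpeakStarted += (s, e) =>
        {
            lock (_promptIndex)
            {
                int index;
                if (_promptIndex.TryGetValue(e.Prompt, out index))
                {
                    _current = index;
                }
            }
        };
    }

    public int CurrentParagraph { get { return _current; } }

    // Splits text on blank lines, trimming each chunk and dropping empty ones.
    public static List<string> SplitParagraphs(string text)
    {
        return text.Replace("\r\n", "\n")
                   .Split(new[] { "\n\n" }, StringSplitOptions.RemoveEmptyEntries)
                   .Select(p => p.Trim())
                   .Where(p => p.Length > 0)
                   .ToList();
    }

    // Queues every paragraph from the current position to the end of the file.
    public void Play()
    {
        // Precaution: resume first so cancellation is not held up by a paused queue.
        if (_synth.State == SynthesizerState.Paused)
        {
            _synth.Resume();
        }
        _synth.SpeakAsyncCancelAll();

        lock (_promptIndex)
        {
            _promptIndex.Clear();
            for (int i = _current; i < _paragraphs.Count; i++)
            {
                _promptIndex[_synth.SpeakAsync(_paragraphs[i])] = i;
            }
        }
    }

    public void Pause() { _synth.Pause(); }

    public void Resume() { _synth.Resume(); }

    // Jumps back the given number of paragraphs and restarts playback from there.
    public void GoBack(int paragraphs)
    {
        _current = Math.Max(0, _current - paragraphs);
        Play();
    }

    public void Dispose()
    {
        _synth.SpeakAsyncCancelAll();
        _synth.Dispose();
    }
}
```

For example, reader.GoBack(1) starts reading again from the previous paragraph. Splitting on paragraphs keeps each prompt short, so the position that SpeakStarted reports stays close to what the listener is hearing.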

A great resource for finding interesting text files to play back as speech is Project Gutenberg, which contains an enormous collection of public domain literature in electronic formats.

Enjoy,

Lorin

About Us

We are the Windows Presentation Foundation SDK writers and editors.