So how will you help people work with text? Part 1: Introduction

This series of posts describes how you can use UI Automation (UIA) as part of your solution to help people who find some aspect of working with text to be a challenge.

Introduction

Over the last couple of months I’ve had a few discussions relating to the programmatic accessibility of text. They’ve all been a reminder of how quick it can sometimes be to build an interesting assistive technology tool that helps people work with text. My main goal in writing this series of posts is to encourage you to consider whether it would be practical for you to build a helpful tool for someone you know.

What is UI Automation?

UI Automation (UIA) is an API that allows programmatic interaction between an app that presents UI (called “the provider”) and an app that consumes and controls that UI (called “the client”). Way back, Microsoft supported an API called Microsoft Active Accessibility (MSAA) to allow that programmatic interaction, but over time the constraints of that API proved too limiting. So a new API, UI Automation, was born, which allowed for more flexibility and better performance in that programmatic interaction. It also made much more possible when working with text in the provider app.

Why is it so very confusing to get started with UIA?

If you go to MSDN and look up UIA, you’ll find a ton of technical information. You’ll probably find that most of it doesn’t relate to you, and it’ll take a while to figure out exactly which bits you need to read. There’s a good chance that before you get to that point, you’ll have given up and gone outside to rake up the leaves. One day, hopefully, there’ll be an efficient way of getting all the information you need.

In the meantime, here are my thoughts on why things are so confusing.

If you’re working on the UIA client, (that is, the assistive technology tool that your customer’s using)…

So you’re building an app that’s going to help your customer work with text. This means you’ll be using the UIA client API to get that text from the provider app that’s also presenting the text visually. One big source of confusion is that there are two UIA client APIs, so you’ll want to know why that is and decide which one to use.

To give a little background on the UIA client API, the API originally shipped around the Windows Vista timeframe as a managed API included in the .NET Framework. A .NET UIA API is still available, as described at UI Automation Fundamentals. Importantly, the top of that documentation points the reader to "the latest information about UI Automation" at Windows Automation API: UI Automation. This is because in Windows 7, Microsoft shipped a native version of the UIA API in Windows. And so today we have two UIA client APIs: a managed .NET API and a native Windows API.

All investment in improving UIA over recent years has been in the Windows UIA API, but many people still use the .NET UIA API because it's easy to use with their C# code, and it often does what’s needed. However, personally, I only ever use the Windows UIA API. This is because the Windows team has made many improvements to the Windows UIA API since Windows 7, and will continue to improve the API in the future.

On occasion I’ve seen some unexpected behavior with the .NET UIA API (for example, delays beneath the client API calls) which doesn’t happen when using the Windows UIA API. So when people ask me about unexpected behavior using the .NET UIA API, I ask them whether it would be practical to check if the same behavior occurs with the Windows UIA API. If it doesn’t, I can encourage them to move their code to the Windows UIA API. If the unexpected behavior still occurs, the Windows team can investigate further.

However, asking someone to consider porting their C# code from the .NET UIA API to the Windows UIA API is not going to be what they want to hear. First, they’ll need a managed wrapper around the Windows UIA API to call from their C# code, and depending on which wrapper is used, the C# code may need to be updated to make the calls into the API.

To alleviate that, in 2010 Microsoft created a managed wrapper around the native Windows UIA API with the same interface as the .NET UIA API. This meant that C# app devs could move to using the Windows UIA API indirectly, with little work on their part. The managed wrapper is called "UIAComWrapper" (available at UI Automation COM-to-.NET Adapter – Home). Given that the wrapper isn’t supported today and, as far as I know, hasn’t had any attention for years, I don’t use it. (But for all I know, it works fine for some people.)

The only way I use the native Windows UIA API from C# is to manually generate a managed wrapper around it using the tlbimp.exe tool that's included in the Windows SDK. Most of my old C# samples at https://code.msdn.microsoft.com/windowsapps/site/search?f%5B0%5D.Type=User&f%5B0%5D.Value=Guy%20Barker%20MSFT use this approach. By doing this, I'm using the Windows UIA API without involving the legacy UIAComWrapper. I give more details about using the tlbimp.exe tool at So how will you help people work with text? Part 2: The UIA Client.

The upshot of all that is that it can be pretty confusing to get started with the UIA client API. For example, say you’ve heard of a function called “FindFirst” in UIA, which is a really handy API for finding UIA elements of interest. So you search MSDN for “windows ui automation findfirst”, intentionally including “windows” because you want to check out the Windows UIA API. When I just did that, I think everything on the first page of results related to the .NET UIA API. Only when I checked the “Windows Desktop Applications” checkbox did I start to see results for the Windows UIA API.

I've learned to be very aware of whether a UIA search result includes "(Windows)" in its title. If "(Windows)" is there, then it's probably what I'm looking for.

Figure 1: A UIA search result at MSDN which relates to the Windows UIA API.

You may think this is overly confusing, and it is. But once you’re aware of the existence of the two APIs, developing with UIA can be very efficient. And like I said, I only ever use the Windows UIA API, because I know that by using it, my customers will have the best UIA client API experience possible today. And for reference again, the Windows UIA API is described at Windows Automation API: UI Automation.

If you’re working on the UIA provider, (that is, the app that’s providing the text)…

Say you’re building an app which presents text visually, and you want to make sure that its text can be accessed through UIA. In most cases, this will happen automatically, because the UI framework will do the work for you. For example, say you’re building a XAML app and show a TextBlock containing the text (say) “My spaniel wants her breakfast”. The XAML framework will create a programmatically accessible element representing the TextBlock in UIA’s hierarchical tree of elements, and allow a UIA client to call through to access the text and navigate through it. That’s lots of great functionality for a customer, and you didn’t have to do anything in particular to enable that.

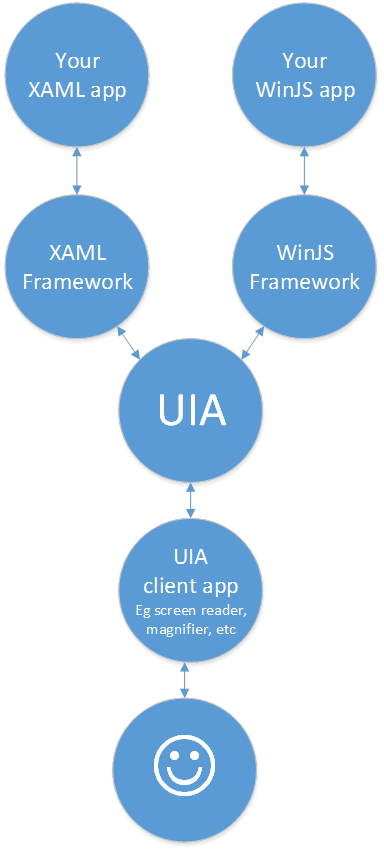

In fact, to borrow a picture from Building accessible Windows Universal apps: Programmatic accessibility, the picture below shows that XAML and WinJS apps don’t implement the UIA provider API directly. Instead, the UI framework exposes your data through the UIA provider API based on how you defined the UI.

Figure 2: Chart showing two-way communication between your app, the UI framework used by your app, UIA, the UIA client app such as a screen reader, and your customer.

However, some people will be working with apps or components which don’t get all this for free, and if they want to allow their customers to interact with their text in the powerful way that UIA allows, they’ll have to implement the UIA provider API themselves.

For example, say you’re responsible for the Windows RichEdit control. The RichEdit control has been a feature-rich control for many years, and there are loads of great things you can do with it programmatically. But a time came when it was important for it to expose its text in a way that could be accessed fully through UIA, particularly given that RichEdit’s used everywhere.

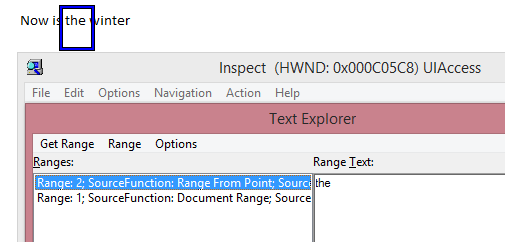

So RichEdit was updated to fully expose its text through UIA, and I can now do all sorts of interesting things programmatically with it. For example, I just pointed the Text Explorer SDK tool to text in WordPad, and I can find the word beneath the mouse. That works because WordPad hosts the RichEdit control, and the RichEdit control fully exposes its text through UIA. (The Text Explorer tool is accessed through the Inspect SDK tool's Action menu.)

Figure 3: The Text Explorer SDK tool accessing text in WordPad through the UIA API.
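To make "find the word beneath the mouse" a little more concrete: with the Windows UIA API, a client can get a degenerate (empty) text range near a screen point with IUIAutomationTextPattern::RangeFromPoint, expand it with IUIAutomationTextRange::ExpandToEnclosingUnit(TextUnit_Word), and read the result with GetText. The snippet below is not that API. It's a minimal pure-Python sketch of the provider-side idea, assuming a hypothetical function `expand_to_enclosing_word` and a simplified word rule (runs of letters, digits and apostrophes). Real providers such as RichEdit decide their own word boundaries, for example whether trailing spaces belong to a word.

```python
import re

# Hypothetical word rule for this sketch: a run of letters, digits or apostrophes.
# Real UIA text providers define their own word boundaries.
_WORD = re.compile(r"[A-Za-z0-9']+")


def expand_to_enclosing_word(text: str, offset: int) -> tuple[int, int]:
    """Return (start, end) of the word containing offset, or (offset, offset) if none."""
    if not 0 <= offset < len(text):
        raise IndexError("offset is outside the text")
    for match in _WORD.finditer(text):
        if match.start() <= offset < match.end():
            return match.start(), match.end()
    # The offset is on whitespace or punctuation, so leave the range empty.
    return offset, offset


text = "My spaniel wants her breakfast"
start, end = expand_to_enclosing_word(text, 5)  # offset 5 is inside "spaniel"
print(text[start:end])  # spaniel
```

Here a character offset stands in for the mouse position; mapping a screen point to a position in the text is the provider's job and is left out of the sketch.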

So when looking through MSDN for information on how to make your UI programmatically accessible, you may hit lots of results which don’t relate to your situation. The page at https://msdn.microsoft.com/enable was recently updated to make it quicker to reach information on platform-specific technologies, so that’s a very helpful step.

If you do need to directly implement the UIA provider API for exposing text, more details can be found at So how will you help people work with text? Part 3: The UIA Provider.

The SDK tools are essential

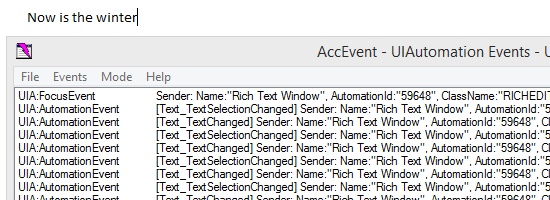

Whether you're working on the UIA client API or UIA provider API, a couple of SDK tools really are essential. These tools are Inspect and AccEvent. Inspect shows you what’s being exposed by UI through the UIA API, and allows you to programmatically control that UI. AccEvent shows you what UIA events are being raised by UI.

Figure 4: The AccEvent SDK tool reporting text-related events being raised by the WordPad app while typing in WordPad.

These SDK tools aren’t quite as polished as I’d like them to be, but once you know how to use them, they really are incredibly valuable. (In fact, I wouldn't be able to do my job without them.) Some notes on Inspect can be found in the “Six things to know about the Inspect SDK tool” section of Building accessible Windows Universal apps: Programmatic accessibility.

And here’s a note about AccEvent…

When AccEvent registers for events, it doesn’t ask for data about the element that raised the event to be cached with the event. So if AccEvent needs to show you the Name property of the element that raised an event, then once it’s received the event, it goes back to the provider and asks for the Name. Other UIA client apps may request that such data be cached with the event. In rare situations where the provider changes its data immediately after raising the event, this means AccEvent can report different data from what is reported by UIA clients that do request that element properties be cached with the event.
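The difference is easiest to see in a toy model. This is a minimal Python sketch, not UIA code: `ToyProvider`, `ToyElement` and `ToyEvent` are hypothetical, and, following the description above, the model assumes cached values are captured when the event is raised while events reach clients a little later. In the Windows UIA API, the equivalent choice is whether a client passes an IUIAutomationCacheRequest when registering for an event (for example, with IUIAutomation::AddAutomationEventHandler) and then reads values such as CachedName, rather than CurrentName, which goes back to the provider.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Toy model only: these classes are hypothetical and not part of any UIA API.


@dataclass
class ToyElement:
    name: str


@dataclass
class ToyEvent:
    source: ToyElement
    cached_name: Optional[str]  # None when the client did not ask for caching


Handler = Callable[[ToyEvent], None]


class ToyProvider:
    def __init__(self, name: str) -> None:
        self.element = ToyElement(name)
        self.registrations: List[Tuple[bool, Handler]] = []
        self.queue: List[Tuple[Handler, ToyEvent]] = []

    def add_handler(self, handler: Handler, cache_name: bool) -> None:
        # Each client states at registration time whether Name should be cached.
        self.registrations.append((cache_name, handler))

    def raise_event(self) -> None:
        # Cached values are captured now, at the moment the event is raised.
        for cache_name, handler in self.registrations:
            snapshot = self.element.name if cache_name else None
            self.queue.append((handler, ToyEvent(self.element, snapshot)))

    def deliver_events(self) -> None:
        # Events reach clients later, so a client that goes back to the
        # provider sees whatever the data is at delivery time.
        for handler, event in self.queue:
            handler(event)
        self.queue.clear()


seen = {}


def caching_client(event: ToyEvent) -> None:
    seen["caching"] = event.cached_name


def non_caching_client(event: ToyEvent) -> None:
    # Like AccEvent: ask the provider for the Name after receiving the event.
    seen["non_caching"] = event.source.name


provider = ToyProvider("Draft 1")
provider.add_handler(caching_client, cache_name=True)
provider.add_handler(non_caching_client, cache_name=False)
provider.raise_event()
provider.element.name = "Draft 2"  # the provider changes its data right after raising
provider.deliver_events()
print(seen)  # {'caching': 'Draft 1', 'non_caching': 'Draft 2'}
```

Neither client is wrong here; they simply looked at the element at different moments, which is why AccEvent's report can occasionally differ from what another client saw.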

And it’s important to note that Inspect and AccEvent are UIA client apps. So when I'm using these tools, they’re calling the same Windows UIA client API that my own UIA client apps do.

When I’m building an assistive technology tool using the UIA client API to interact with some provider UI, I never write code without pointing Inspect to the provider UI first. By using Inspect, I can learn the values of various UIA properties and behaviors in the UI that I’m interested in. For example, does it have an AutomationId, a ControlType, a Name, and can it be programmatically invoked? By understanding exactly what’s being exposed in the UI, I can feel confident that I can write code which will successfully interact with that UI.

Sometimes you might be surprised by what Inspect tells you about the provider UI. For example, someone recently asked at Odd context menu behavior whether the UIA element hierarchy associated with some context menus is expected. The answer really is that it’s unexpected because it’s not what you expect, but it’s expected because that’s how it is. When UIA client apps interact with UI, the client app has to take into account the way in which that UI is being exposed through UIA. If the provider UI is not exposing some really important information through UIA, it can’t hurt to make sure the people who built the UI are aware of that, but often the UIA client dev has to get creative and write the code in whatever way will achieve their goals for the customer.

And it’s also worth pointing out that UIA can’t make inaccessible UI accessible. UIA simply channels information between processes. It’s up to the provider to make sure all the expected data is being exposed.

Summary

UIA can be a confusing technology to begin with, due to the number of APIs and technologies involved. But UIA is a powerful thing, and worth getting to know if you want all your customers to benefit as much as possible from text shown in apps, regardless of how your customers interact with that text.

If you’re building an assistive technology tool to help someone you know, I’d recommend using the Windows UIA API. More details are at So how will you help people work with text? Part 2: The UIA Client.

If you’re directly implementing the UIA provider API for exposing text, a sample provider and more details are at So how will you help people work with text? Part 3: The UIA Provider.

So now how will you help people work with text?

Guy

Posts in this series:

So how will you help people work with text? Part 1: Introduction

So how will you help people work with text? Part 2: The UIA Client

So how will you help people work with text? Part 3: The UIA Provider