A recipe for an exciting assistive technology app: Throw three UIA samples together and stir vigorously!

Hi,

Last week I had an interesting discussion with someone who has experience in the Education space. She was telling me how valuable it can be for some students to hear a word spoken when it's shown visually in a document. This got me thinking back to one of my old UI Automation (UIA) samples, which spoke text shown in an e-mail window. This sample's on MSDN at Windows 7 UI Automation Client API C# sample (e-mail reader) Version 1.1. Perhaps I could take that sample and change it to speak a word shown in a Word 2013 document. And as usual, I couldn't resist trying this out. I downloaded the sample, renamed it to "Herbi Reads", and started on a new app that would speak a word from a Word 2013 document.

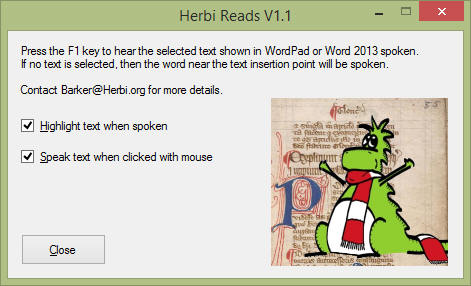

Figure 1: The Herbi Reads app.

Because I built the sample a couple of years ago, Visual Studio asked me whether I wanted to update the app's use of .NET 3.5 to a newer version of .NET, so I made that move. (I also found I needed to add a reference to Microsoft.CSharp in order to get the app to build.)

The next question was what would trigger the speaking of the word, and what word would be spoken? An app like this has all sorts of potential to interfere with the document app's own interaction model. For example, if Herbi Reads speaks a word when the word is clicked with the mouse, how does that impact what the document app would usually do when a word is clicked on? Or if a key press triggers the speaking of the word containing the caret, how does that impact what the document app would usually do when that key is pressed? For my first version of my app, I didn't worry about that too much. I knew what I was building wouldn't be ideal from a usability perspective, but if people were interested in using it, I could smarten things up based on how they'd really like to use it.

So I decided that I'd have the word containing the caret spoken in response to a press of the F1 key. In order to do that, I'd use a low-level keyboard hook to detect a press of a key, then have the hook post a message to the main Herbi Reads form, and finally get the word near the caret and speak it. And what's the quickest way for me to add a low-level keyboard hook to my C# app? Use another of my UIA samples! This sample's described at How UI Automation helped me build a tool for a user who’s blind and who has MND/ALS, and the source is available at https://herbi.org/WebKeys/WebKeysVSProject.htmI. I downloaded my HerbiWebKeys sample, and copied KeyboardHook.cs and related code into my new app. This made it quick 'n' easy for my app to know that the F1 key had been pressed, and that I should take the action to speak the word containing the caret. (I also decided that if there's currently a selection in the document, I'd speak the entire selection.)
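To make the hook-then-post flow described above a little more concrete, here's a minimal sketch of that pattern. This is not the KeyboardHook.cs from the HerbiWebKeys sample. The class name `F1KeyboardHook`, the custom message `WM_APP_SPEAK`, and the choice to let the F1 press carry on to the document app are all assumptions made for illustration. The Win32 functions it calls are standard ones.

```csharp
using System;
using System.ComponentModel;
using System.Runtime.InteropServices;

// Hypothetical helper for illustration; not the code from the HerbiWebKeys sample.
public sealed class F1KeyboardHook : IDisposable
{
    private const int WH_KEYBOARD_LL = 13;
    private const int WM_KEYDOWN = 0x0100;
    private const int VK_F1 = 0x70;

    // Assumed custom message. Any value in the WM_APP range (0x8000 to 0xBFFF) would do.
    public const int WM_APP_SPEAK = 0x8000 + 1;

    private delegate IntPtr LowLevelKeyboardProc(int nCode, IntPtr wParam, IntPtr lParam);

    [DllImport("user32.dll", SetLastError = true)]
    private static extern IntPtr SetWindowsHookEx(
        int idHook, LowLevelKeyboardProc lpfn, IntPtr hMod, uint dwThreadId);

    [DllImport("user32.dll", SetLastError = true)]
    private static extern bool UnhookWindowsHookEx(IntPtr hhk);

    [DllImport("user32.dll")]
    private static extern IntPtr CallNextHookEx(
        IntPtr hhk, int nCode, IntPtr wParam, IntPtr lParam);

    [DllImport("user32.dll", SetLastError = true)]
    private static extern bool PostMessage(IntPtr hWnd, int msg, IntPtr wParam, IntPtr lParam);

    [DllImport("kernel32.dll", CharSet = CharSet.Unicode)]
    private static extern IntPtr GetModuleHandle(string lpModuleName);

    // Hold a reference to the delegate so it isn't garbage collected while hooked.
    private readonly LowLevelKeyboardProc _proc;
    private readonly IntPtr _targetWindow;
    private IntPtr _hook;

    // Call this on a thread with a message loop, such as the main form's thread.
    public F1KeyboardHook(IntPtr targetWindow)
    {
        _targetWindow = targetWindow;
        _proc = HookCallback;
        _hook = SetWindowsHookEx(WH_KEYBOARD_LL, _proc, GetModuleHandle(null), 0);
        if (_hook == IntPtr.Zero)
        {
            throw new Win32Exception(Marshal.GetLastWin32Error());
        }
    }

    // True when the hook data describes a key-down of F1.
    public static bool IsTrigger(int nCode, int message, int vkCode)
    {
        return nCode >= 0 && message == WM_KEYDOWN && vkCode == VK_F1;
    }

    private IntPtr HookCallback(int nCode, IntPtr wParam, IntPtr lParam)
    {
        if (nCode >= 0)
        {
            // The first field of KBDLLHOOKSTRUCT is the virtual-key code.
            int vkCode = Marshal.ReadInt32(lParam);
            if (IsTrigger(nCode, (int)wParam.ToInt64(), vkCode))
            {
                // Do as little as possible in the hook: just tell the form.
                PostMessage(_targetWindow, WM_APP_SPEAK, IntPtr.Zero, IntPtr.Zero);
            }
        }

        // Assumption: the key press is passed on, so the document app still sees F1.
        return CallNextHookEx(_hook, nCode, wParam, lParam);
    }

    public void Dispose()
    {
        if (_hook != IntPtr.Zero)
        {
            UnhookWindowsHookEx(_hook);
            _hook = IntPtr.Zero;
        }
    }
}
```

With something like this in place, the form would create the hook with its own `Handle`, override `WndProc`, and start gathering and speaking the text when `m.Msg` equals `F1KeyboardHook.WM_APP_SPEAK`. Posting a message, rather than doing the UIA work inside the hook procedure, keeps the hook quick to return.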

Using the keyboard to trigger the speech will be fine for some customers, but other customers will want to click on a word and have it spoken. For that, I decided I'd use a low-level mouse hook. And what's the quickest way for me to add a low-level mouse hook to my C# app? You guessed it! I downloaded my MSDN sample at Windows 7 UI Automation Client API C# sample – The Key Speaker, copied MouseHook.cs and related code into my app, and could now take the speech-related action I needed in response to a mouse click.
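One thing a mouse hook could also do is hand the click position up to the form, which the function at the end of this post lists as a future improvement. Here's a rough sketch of how that might look. It is not the MouseHook.cs from the Key Speaker sample. The names `ClickPointMessage`, `WM_APP_CLICK`, `Pack`, `Unpack` and `OnMouseHook` are hypothetical, and reacting to the left button being released is an assumption.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical helper for illustration; not the code from the Key Speaker sample.
public static class ClickPointMessage
{
    public const int WM_LBUTTONUP = 0x0202;

    // Assumed custom message in the WM_APP range (0x8000 to 0xBFFF).
    public const int WM_APP_CLICK = 0x8000 + 2;

    [DllImport("user32.dll")]
    private static extern IntPtr CallNextHookEx(
        IntPtr hhk, int nCode, IntPtr wParam, IntPtr lParam);

    [DllImport("user32.dll", SetLastError = true)]
    private static extern bool PostMessage(IntPtr hWnd, int msg, IntPtr wParam, IntPtr lParam);

    // Pack screen coordinates into one value: x in the low 16 bits, y in the high 16 bits.
    // Coordinates outside the signed 16-bit range would be truncated.
    public static IntPtr Pack(int x, int y)
    {
        return new IntPtr(unchecked((y << 16) | (x & 0xFFFF)));
    }

    // Recover signed coordinates, so points on monitors left of or above
    // the primary monitor (negative values) survive the round trip.
    public static void Unpack(IntPtr lParam, out int x, out int y)
    {
        int packed = unchecked((int)lParam.ToInt64());
        x = unchecked((short)(packed & 0xFFFF));
        y = unchecked((short)((packed >> 16) & 0xFFFF));
    }

    // Intended to be called from a WH_MOUSE_LL hook procedure.
    public static IntPtr OnMouseHook(
        IntPtr hook, IntPtr targetWindow, int nCode, IntPtr wParam, IntPtr lParam)
    {
        if (nCode >= 0 && wParam.ToInt64() == WM_LBUTTONUP)
        {
            // MSLLHOOKSTRUCT starts with a POINT: x at offset 0, y at offset 4.
            int x = Marshal.ReadInt32(lParam, 0);
            int y = Marshal.ReadInt32(lParam, 4);
            PostMessage(targetWindow, WM_APP_CLICK, IntPtr.Zero, Pack(x, y));
        }

        // Pass the click on so the document app still handles it as usual.
        return CallNextHookEx(hook, nCode, wParam, lParam);
    }
}
```

The form could then call `Unpack` on the message's `LParam` and pass that point to `ElementFromPoint`, rather than asking where the cursor is by the time the message arrives.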

Having done that, I needed to update the code that gathers the text to be spoken from the document app. The sample code I had in the Herbi Reads app looked for an e-mail client window and got text from that. For my new app, I needed new rules about which UI element to get text from, and what text to get. Interestingly, I decided not to make this specific to Word 2013, so the app will speak anywhere the code works. This has the fun side effect that the app speaks the source code shown in Visual Studio while I'm debugging the app. It also has the not-so-fun side effect of the app saying my default "I'm sorry. I couldn't find something to read." as I click in lots of other apps. When I next update the app, I really should limit it to only try to get text from apps that I'm interested in.
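As a sketch of what that future limiting might look like, the app could check which process owns the window before trying to get text from it. Everything here is an assumption rather than something the app does today: the `AppFilter` class, its method names, and the particular list of process names ("WINWORD" for Word, "wordpad" for WordPad).

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Runtime.InteropServices;

// Hypothetical filter for illustration; the current Herbi Reads app has no such check.
public static class AppFilter
{
    // Assumed list of process names (without ".exe") that the app should read from.
    private static readonly HashSet<string> s_appsOfInterest =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase) { "WINWORD", "wordpad" };

    [DllImport("user32.dll")]
    private static extern uint GetWindowThreadProcessId(IntPtr hWnd, out uint lpdwProcessId);

    public static bool IsAppOfInterest(string processName)
    {
        return !string.IsNullOrEmpty(processName) && s_appsOfInterest.Contains(processName);
    }

    // True if the window belongs to one of the processes listed above.
    public static bool IsWindowOfInterest(IntPtr hwnd)
    {
        if (hwnd == IntPtr.Zero)
        {
            return false;
        }

        uint processId;
        GetWindowThreadProcessId(hwnd, out processId);
        if (processId == 0)
        {
            return false;
        }

        try
        {
            using (Process process = Process.GetProcessById((int)processId))
            {
                return IsAppOfInterest(process.ProcessName);
            }
        }
        catch (ArgumentException)
        {
            // The process exited before we could look at it.
            return false;
        }
        catch (InvalidOperationException)
        {
            return false;
        }
    }
}
```

For the keyboard trigger, the window handle would be the foreground window. For the mouse trigger, the UIA element found beneath the cursor exposes a `CurrentProcessId` property which could feed the same name check.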

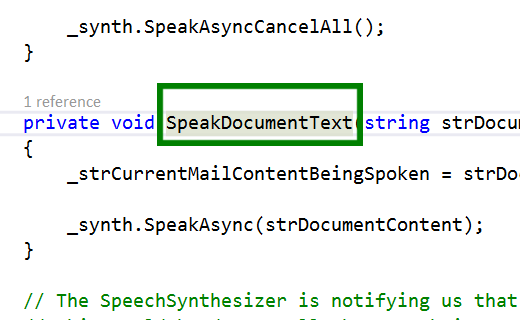

The function included at the end of this post is the one I ended up with for gathering the text to be spoken in response to a mouse click or a press of a key.

One more feature request I got for the app was to have the text being spoken highlighted. As it happens, the sample I based the new app on already highlights the text by showing a magnification window and magnifying the text being spoken. While it was very interesting for me when I wrote the sample to get the Windows Magnification API working in a C# app, I didn't really fancy using that type of highlighting in my new app. So I kept the logic in place for when the highlighting appears and disappears (it disappears shortly after the text has finished being spoken), and replaced the magnification window with a new topmost Windows Form. I made that form transparent except for a green border, and sized and positioned the form to lie over the text being spoken. I'd say this is good enough for generating feedback from people potentially interested in using the app.
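For anyone curious how a form like that might be put together, here's a minimal sketch. It isn't the form from the Herbi Reads app. The `HighlightForm` name, the border thickness, the magenta transparency key, and the decision to stop the form taking focus away from the document are all assumptions.

```csharp
using System;
using System.Drawing;
using System.Windows.Forms;

// Hypothetical highlight form for illustration; not the one in the Herbi Reads app.
public sealed class HighlightForm : Form
{
    private const int BorderThickness = 3;
    private const int WS_EX_TOPMOST = 0x00000008;
    private const int WS_EX_NOACTIVATE = 0x08000000;

    // Any pixel painted in this color is treated as transparent.
    private static readonly Color s_keyColor = Color.Magenta;

    public HighlightForm()
    {
        FormBorderStyle = FormBorderStyle.None;
        ShowInTaskbar = false;
        StartPosition = FormStartPosition.Manual;
        BackColor = s_keyColor;
        TransparencyKey = s_keyColor;
    }

    // Assumption: the highlight should sit above other windows but never take
    // focus, so the caret and selection in the document are left alone.
    protected override CreateParams CreateParams
    {
        get
        {
            CreateParams cp = base.CreateParams;
            cp.ExStyle |= WS_EX_TOPMOST | WS_EX_NOACTIVATE;
            return cp;
        }
    }

    protected override bool ShowWithoutActivation
    {
        get { return true; }
    }

    protected override void OnPaint(PaintEventArgs e)
    {
        base.OnPaint(e);

        // Fill everything green, then punch out the middle with the key color,
        // leaving only a green border visible.
        e.Graphics.FillRectangle(Brushes.Green, ClientRectangle);
        Rectangle inner = Rectangle.Inflate(ClientRectangle, -BorderThickness, -BorderThickness);
        using (SolidBrush keyBrush = new SolidBrush(s_keyColor))
        {
            e.Graphics.FillRectangle(keyBrush, inner);
        }
    }

    // Grow the text rectangle so the border surrounds the text rather than covering it.
    public static Rectangle BoundsForText(Rectangle textBounds)
    {
        return Rectangle.Inflate(textBounds, BorderThickness + 1, BorderThickness + 1);
    }

    // textBounds is in screen coordinates.
    public void ShowOver(Rectangle textBounds)
    {
        Bounds = BoundsForText(textBounds);
        if (!Visible)
        {
            Show();
        }
        Invalidate();
    }
}
```

The rectangle passed to `ShowOver` would presumably come from the UIA text range being spoken, for example from its `GetBoundingRectangles()` method, and the existing timing logic would call `Hide()` shortly after the speech finishes.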

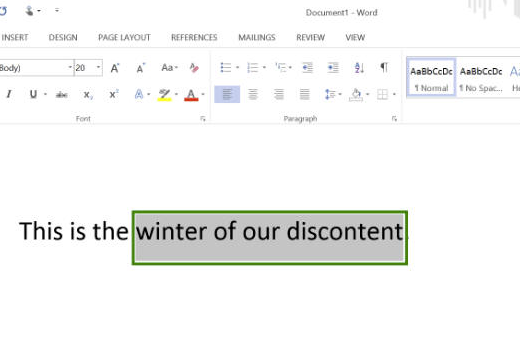

The end result of all this is that I can now have text of interest in a Word 2013 document spoken and highlighted following a click of the mouse or a press of a function key.

Figure 2: The Herbi Reads app speaking and highlighting text shown in Word 2013.

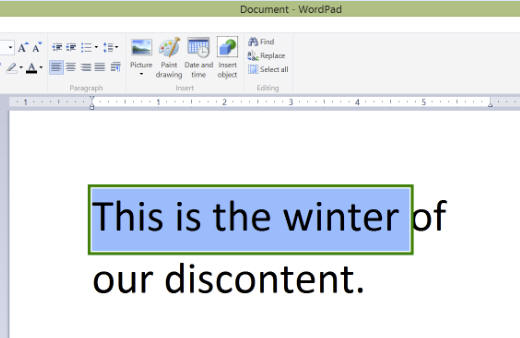

Because of the way my new Herbi Reads app finds the text to speak, it also works in WordPad on my Windows 8.1 machine.

Figure 3: The Herbi Reads app speaking and highlighting text shown in WordPad.

And here's the app's source code being spoken and highlighted while I was debugging the app!

Figure 4: The Herbi Reads app speaking and highlighting text shown in Visual Studio Ultimate 2013.

All in all, it only took me a few hours to build a new app from my three UIA samples, such that the app met the basic requirements that I was discussing with my colleague last week. (And it probably would have taken me less time if I hadn't been watching the USA v Portugal game while building the app.) Hopefully I'll get some feedback from educators which will help me turn the app into a useful tool in practice for students.

Guy

P.S. If you’re interested in seeing the app in action, there’s a short demo video up at https://herbi.org/HerbiReads/HerbiReads.htm.

    public string GetDocumentText(bool triggerIsMouse)
    {
        string strDocumentContent = "I'm sorry. I couldn't find something to read.";

        // Use a cache request to get the Text Pattern. This means that once we have the
        // element of interest which supports the pattern, we don't need to make another
        // cross-process call to actually get the pattern.
        IUIAutomationCacheRequest cacheRequest = _automation.CreateCacheRequest();
        cacheRequest.AddPattern(_patternIdTextPattern);

        IUIAutomationElement elementMain = null;
        IUIAutomationElement elementTextContent = null;

        bool fTextPatternSupported = true;
        IUIAutomationCondition conditionTextPattern = _automation.CreatePropertyCondition(
            _propertyIdIsTextPatternAvailable,
            fTextPatternSupported);

        if (triggerIsMouse)
        {
            // First get the UIA element where the cursor is.

            // FUTURE: Update the app to use the cursor position when the click occurred.
            // This means updating the mouse hook to pass the point up to the app form.
            Point cursorPoint = System.Windows.Forms.Cursor.Position;

            tagPOINT pt;
            pt.x = cursorPoint.X;
            pt.y = cursorPoint.Y;

            elementMain = _automation.ElementFromPoint(pt);
            if (elementMain != null)
            {
                // Get the nearest element we can which supports the Text Pattern.
                elementTextContent = elementMain.FindFirstBuildCache(
                    TreeScope.TreeScope_Element | TreeScope.TreeScope_Descendants,
                    conditionTextPattern,
                    cacheRequest);
            }
        }
        else
        {
            // The customer's pressed a key to trigger the speaking of the word of interest.

            // Assume we're interested in the foreground window.
            IntPtr hwnd = Win32Interop.GetForegroundWindow();
            if (hwnd != IntPtr.Zero)
            {
                // We found a window, so get the UIA element associated with the window.
                elementMain = _automation.ElementFromHandle(hwnd);
                if (elementMain != null)
                {
                    // Get the first UIA element in the window that supports the Text Pattern and
                    // which declares itself to be a Document control. If we don't limit ourselves
                    // to looking at Document Controls, then when we're working with WordPad, the
                    // app can speak the content of the font name control shown on the ribbon.

                    // FUTURE: These basic rules are fine for this version of the Herbi Reads app,
                    // but will want to be made more robust once people really start using the app.
                    IUIAutomationCondition conditionDocumentControl =
                        _automation.CreatePropertyCondition(_propertyIdControlType, _controlTypeIdDocument);

                    IUIAutomationCondition conditionDocumentTextPattern =
                        _automation.CreateAndCondition(conditionTextPattern, conditionDocumentControl);

                    elementTextContent = elementMain.FindFirstBuildCache(
                        TreeScope.TreeScope_Element | TreeScope.TreeScope_Descendants,
                        conditionDocumentTextPattern,
                        cacheRequest);
                }
            }
        }

        if (elementTextContent != null)
        {
            // Because the Text Pattern object is cached, we don't have to make a cross-process
            // call here to get object.
            IUIAutomationTextPattern textPattern = (IUIAutomationTextPattern)
                elementTextContent.GetCachedPattern(
                    _patternIdTextPattern);

            IUIAutomationTextRange rangeText = null;

            // Get all the ranges that currently make up the selection in the document.
            IUIAutomationTextRangeArray rangeArrayDocument = textPattern.GetSelection();
            if ((rangeArrayDocument != null) && (rangeArrayDocument.Length > 0))
            {
                // The Herbi Reads app is only interested in the first selection.
                rangeText = rangeArrayDocument.GetElement(0);
            }

            if (rangeText != null)
            {
                // Get the text from the range. By passing in -1 here, we're saying
                // that we're not interested in limiting the amount of text we gather.
                strDocumentContent = rangeText.GetText(-1);

                // If the range has no text in it, then expand the range to include the
                // word around the caret.
                if (strDocumentContent.Length == 0)
                {
                    rangeText.ExpandToEnclosingUnit(TextUnit.TextUnit_Word);

                    strDocumentContent = rangeText.GetText(-1);
                }
            }
        }

        return strDocumentContent;
    }