Let us talk about “Quality Bars”

When we talk about quality bars, we are not talking about the phenomenal gems such as the Kalahari Oasis in the Mabalingwe game reserve, which I visited and thoroughly enjoyed on more than a couple of hot days under the sun.

Instead we are starting a discussion on the quality bars we are using and considering for ALM Ranger projects, with the hope that you (ALM community) will give candid feedback and share your experience.

Why do we need quality bars?

We strive to ship quality solutions and experience which:

- Accelerate the adoption of Visual Studio with out-of-band solutions for feature gaps and value-add guidance

- Create raving and unblocked fans

- Meet regulatory and legal requirements

The first has been our mission from the start, and the second will always fuel (inspire) us and the community. The third is the one that has introduced, and will continue to introduce, challenges and friction in the ALM community, because it requires bandwidth (which we have little of) and razor-sharp focus.

What are the challenges with quality bars?

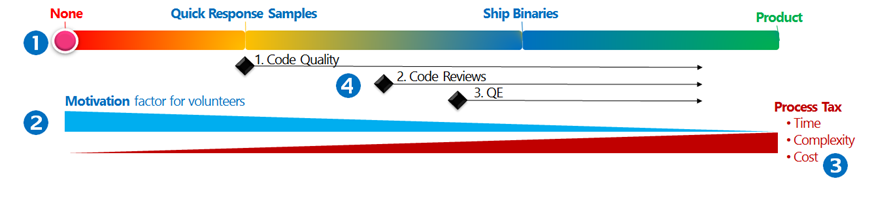

Let’s start by looking at the quality bar slider diagram we created in an attempt to highlight the challenges when striving for full compliance with industry guidelines and standards, and for a quality user experience. At this point we assume that the ALM Rangers are well known to you, as well as their geographically dispersed, virtual, part-time and volunteer-based community.

We deliver a menu of solution types, such as guidance, supporting whitepapers and samples, quick response tooling samples, tools or a combination thereof. Raising the Quality Bar for Documentation gives us an overview of the documentation quality bar, while Raising the Quality Bar for Tooling is the starting point for the quality bar discussed herein.

When we talk about tooling we can move the slider from none (pure guidance) to a fully supported “shrink-wrapped” product. The compliance and quality bar checks and balances take a huge jump when we reach and especially cross the “ship binaries” marker.

As mentioned, the ALM Rangers community is based on volunteers who invest their passion for technology, their real-world experience and their personal time on a part-time basis. What motivates the ALM Rangers? A great question, which will probably never have a definitive answer. Here are a few replies from a recent survey in which we asked ALM Rangers why they do what they do:

The challenge is that the motivation factor starts declining as we move the quality bar slider to the right as shown.


As we move the quality bar slider to the right we get closer to the product group quality bars, but also start inheriting more and more “process tax”. Quality solutions, quality experience and in particular regulatory and legal requirements introduce stringent compliance testing and validation. We refer to this as the “process tax”, which requires time and razor-sharp focus, and which inadvertently chisels away at the motivation factor for volunteers, who want to build solutions in their personal time and not be bogged down with compliance red tape. Our current strategy is for the Program Manager Product Owner and Dev Leads to shield the team from as much of the quality processes as possible, keeping the team focused on building and testing the bits and continuing to have “fun” with what motivates them.

We have broken down the quality bar into three main checklists:


What is the base quality bar we recommend?

The further you move the quality dial from left to right, the more quality and compliance checks you need to consider as part of your quality bar bucket.

Looking at the Ruck Guides, we note the following suggested list:

- Minimum

  - Sprint Objectives

  - Project principles

  - Code Reviews

  - Definition of Done

  - Automated Unit Testing

  - Manual Test Cases

- When shipping binaries

  - Dogfooding (early adopters)

  - Telemetry

  - Quality Essentials (PoliCheck, ApiScan, Signing, …)

  - License agreement(s)
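
To make the "the further right, the more checks" idea concrete, here is a minimal Python sketch of the list above. It is only an illustration, not something the Ruck Guides prescribe: the names `SliderPosition`, `CHECKS_ADDED_AT`, `required_checks` and `outstanding_checks` are hypothetical, and we assume the checks are cumulative, so a project that ships binaries still owes everything in the minimum bucket.

```python
from enum import IntEnum


class SliderPosition(IntEnum):
    """Hypothetical slider positions; only the two buckets from the list are modelled."""
    MINIMUM = 0
    SHIP_BINARIES = 1


# Checks introduced at each position, copied from the suggested list.
CHECKS_ADDED_AT = {
    SliderPosition.MINIMUM: [
        "Sprint Objectives",
        "Project principles",
        "Code Reviews",
        "Definition of Done",
        "Automated Unit Testing",
        "Manual Test Cases",
    ],
    SliderPosition.SHIP_BINARIES: [
        "Dogfooding (early adopters)",
        "Telemetry",
        "Quality Essentials (PoliCheck, ApiScan, Signing, …)",
        "License agreement(s)",
    ],
}


def required_checks(position):
    """Return every check at or to the left of the given slider position.

    Assumption: moving the slider right adds checks without removing any.
    """
    checks = []
    for level in SliderPosition:
        if level <= position:
            checks.extend(CHECKS_ADDED_AT[level])
    return checks


def outstanding_checks(position, completed):
    """Return the required checks that are not yet in `completed`, in list order."""
    done = set(completed)
    return [check for check in required_checks(position) if check not in done]
```

A team could call `outstanding_checks(SliderPosition.SHIP_BINARIES, completed)` before a release to see what is still open. The point of the sketch is simply that crossing the "ship binaries" marker grows the list rather than replacing it.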

I will let you dig into the Ruck Guides; Quality Bar; Definition of “DONE” and knowing when it is safe to sleep peacefully; and Code Review and Build process for more details and examples of the ingredients, such as “definition of done” and “code review”.

But, what about testing in the context of the ALM Rangers?

- Automated Unit Testing … typically includes a mix of unit, system, integration and regression testing that is automated and performed as part of the build.

- Manual Testing … typically includes a mix of manual user, exploratory and user acceptance testing to validate features and edge cases, using Microsoft Test Manager.

- Dogfooding … typically includes a mix of manual, exploratory and on-the-job testing with no predefined tests. While we have dogfooded the use of automated Coded UI testing, the preferred trend is to use humanoids who are passionate about the technology, who test edge cases we would never have considered and who are willing to give candid feedback.

Your candid feedback and food for thought would really be appreciated! Rangers, comments?