UNISA Chatter – Operating System Concepts: Part 9 … Virtual Memory

See UNISA – Summary of 2010 Posts for a list of related UNISA posts. this post we briefly investigate and summarize virtual memory management. Last time we looked at memory … today we virtualize and take a sneak peek at virtual memory.

Why do we need virtual memory?

Thinking back to the 80’s we wrote programs that had to operate within segments of 64KB … today the “B..kilo” has been replaced with the “G…giga” and programs are executing on processes whose logical memory space far exceeds the available physical memory. Virtual memory is a strategy that allows us to map large logical (virtual) memory to smaller and physically constrained memory, thereby increasing processor utilization, multiprogramming and reduces the complexity of programming by removing the need to worry about memory availability.

Thinking back to the 80’s we wrote programs that had to operate within segments of 64KB … today the “B..kilo” has been replaced with the “G…giga” and programs are executing on processes whose logical memory space far exceeds the available physical memory. Virtual memory is a strategy that allows us to map large logical (virtual) memory to smaller and physically constrained memory, thereby increasing processor utilization, multiprogramming and reduces the complexity of programming by removing the need to worry about memory availability.

Allocation of Frames

The first question we need to ask is how should the operating system allocate the fixed amount of memory amongst processes?

In a single user system this is probably not too difficult, because the user process would get the (number of frames – what the operating system needs).

In reality, however, we have multi-user, multi-process and multi-threading environments … all competing for the (number of frames – what the operating system needs).

Some of the strategies include:

- Minimum Frames

- Allocate the bare minimum number of pages needed, which is defined by the computer architecture.

- Maximum Frames

- Allocate the maximum number of pages needed, which is defined by the amount of available physical memory.

- Equal Allocation Algorithm

- Allocate available frames m amongst n processes.

- Allocation = m/n.

- Example:

- 1000 frames available and 2 processes. Each gets 1000/2 = 500.

- Proportional Allocation

- Allocate available frames proportionally to processes based on their size.

- Allocation = virtual size for process / sum of virtual process space * available frames.

- Example:

- 1000 frames available

- Process A has size of 10 pages … frames = 10/110*1000 = 90

- Process B has size of 100 pages … frames = 100/110*1000 = 909

- Working Set

- Working set model is based on the assumption that each process executes in a locality and that the working set is the sum of pages in the locality. In plain English … the working set is the number of frames most recently used and needed by the process.

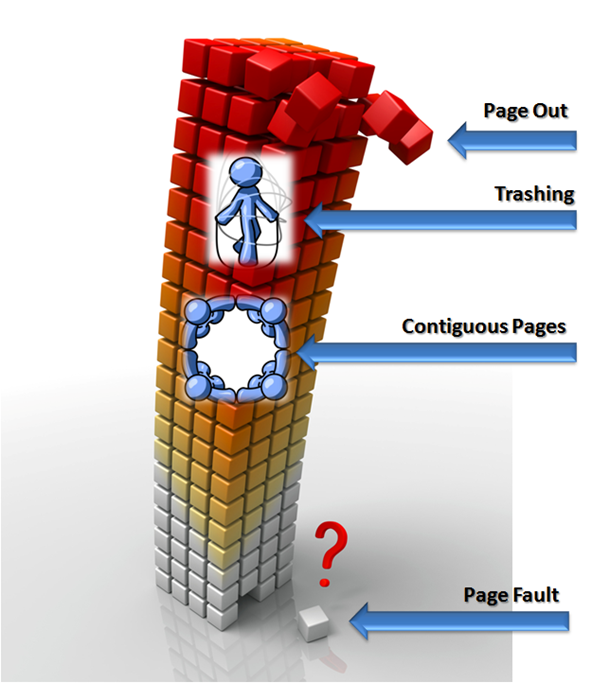

- If a process has less pages than needed for its working set, trashing will occur, as the process will be the cause of many page faults.

Looking at demand paging

Now that we know how many pages we get, we can look at how these pages are loaded. Does it make sense to load all the process pages at load time? If we had the physical memory yes, because memory is fast, but then the reason for this post is that we are using virtual memory, which is typically a lot greater than physical memory.

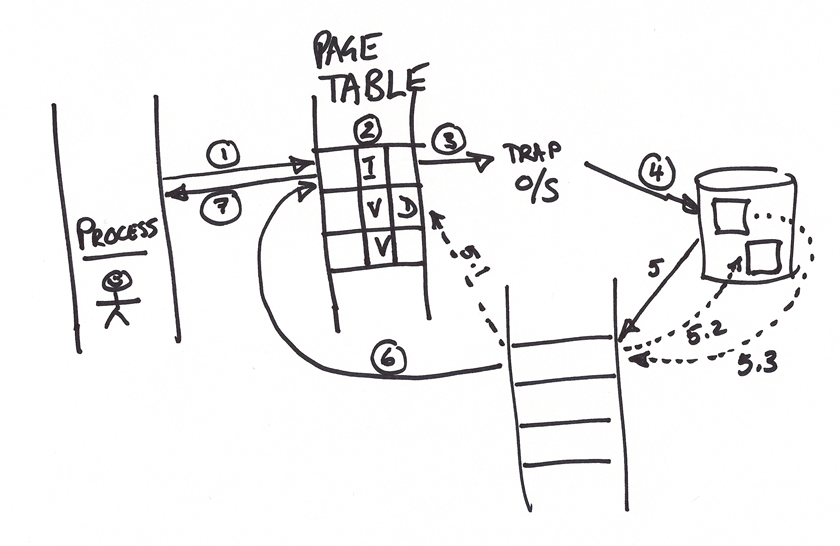

Demand paging is a technique that loads pages on demand, as needed. Looking at the diagram below we have a page table, with an indicator for each page which defines whether the page is valid or invalid, or rather in-memory (valid) or paged-out (invalid). The pages that are valid are boring to look at, so let us rather step through the invalid scenario:

- Process references what it believes to be memory.

- The page table defines it as invalid … not available.

- A page fault is created.

- The page is located on disk.

- The page is loaded from disk into physical memory.

- The page table is updated to reflect a valid page.

- The memory reference is returned to the process, which was oblivious to steps 2-6.

Pure demand paging only brings pages into memory as needed, which can be inefficient. Most operating systems look at processes having a locality of reference, which defines the minimum working set of pages needed to execute effectively.

Another important responsibility of the operating system is to manage the restart of instructions after a page fault. A common strategy is to use temporary registers to hold values of modified locations and to restore the memory to its state before the instruction was started, allowing for a repeat or re-run after addressing the page fault.

Page Replacement Strategies

The common strategies, which can grab pages from the local process (local replacement) and/or from any process (global replacement) include …

- Basic Page Replacement

- If a page is free use it, else find a victim and free it.

- Looking at the illustration above, we notice steps 5.2 and 5.3. If we cannot perform step 5 due to no available free pages, a victim is identified. The victim’s page is paged to disk (5.2) and the newly needed page is paged in (5.3).

- The page table typically has another bit per page, which marks it as dirty (modified) or not. If the page is dirty, we have to perform step 5.2, else we can skip it and improve overall performance.

- Obviously the victims page must be marked as invalid to indicate that it has been paged out.

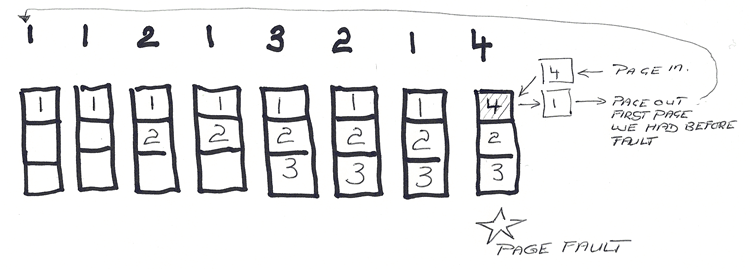

- FIFO

- Optimal

- Belady’s anomaly defines an unexpected anomaly in that some page replacement algorithms cause greater page faults as allocated frames increase.

- The optimal strategy is looking for the optimal page-replacement algorithm … which works well on the whiteboard, but in practise is difficult to implement as the operating system has no crystal ball to determine future memory references.

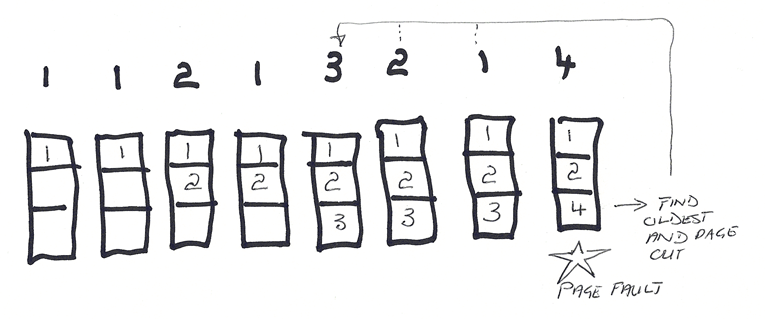

- LRU

- Least-recently-used … replace pages that have not been used for the longest period of time, which changes the above illustration of usage as follows:

- Uses counters or a stack concept. The latter always removes a page that is used from the stack and places it at the top.

- There are special sub-strategies for LRU

- Second Chance … add an indicator to each page which is set when used. When we need to find a victim, those with a set indicator are not considered, but we then clear their indicator … they are scheduled to be a victim the second time around.

- Enhanced Second Chance … add another indicator (A,B), where A = used and B = clean.

- Least-recently-used … replace pages that have not been used for the longest period of time, which changes the above illustration of usage as follows:

- MFU

- Most frequently used strategy assumes that the page with the lowest count of usage is probably new and has not yet been used.

Some trivia …

The following programming techniques can be used for demand paging, whereby they are split up into a good and bad category.

- Good

- Stack

- Sequential search

- Vectors

- Bad

- Hashed symbol table

- Binary search

- Indirection

The replacement strategies, ranked from best to worse in terms of the Belady’s anomaly are:

- Optimal

- LRU

- Second-Chance

- FIFO

Kernel

- Buddy allocation system, allocates and split memory on the basis of power 2 … 256 –> 2x 128 –> 4x 64

- Slab allocation system, allocates kernel data structures to caches, which in turn are associated with slabs of physically contiguous pages.

… these are my notes for this topic … next time the schedule has storage management in line for us :)