Asynchronous Solution Load Performance Improvements in Visual Studio 2013

Improving Solution Loads

Over the past few years, the Visual Studio team has been working hard to improve Visual Studio performance and scalability when working with large solutions. One area of particular interest to many of you has been our initial solution load times. For Visual Studio 2012, we implemented changes that enabled large solutions to load asynchronously, which results in substantially faster load times for many of our users. For Visual Studio 2013, we’ve continued to improve solution load performance by deferring the initialization of document tabs until they are needed. In this post, we’ll walk you through the changes you can expect to see in the latest release, and some of the early performance data we’re seeing from customers using pre-release builds.

The Big Load

Prior to VS 2012, solutions were loaded all at once. During the load, the entire IDE was blocked while all the necessary preparation and initialization was done to prepare the solution for use. Users working with larger solutions are likely familiar with this dialog:

For a small solution with only a couple of projects, loading usually doesn’t take too much time. But as the size and complexity of solutions grow, so does the time it takes to load the solution, and thus the time spent waiting for this dialog to disappear. So while working on VS 2012, we asked ourselves, “What would happen if we only loaded the projects a user needs to get back to work?”

Going Async

We reasoned that at any given moment, a developer is only actively working on a couple of projects in the solution. If we could determine which projects were the most important to a developer when the solution was closed, we could then load those projects up front on the next solution load. The remaining projects could then be loaded at some point down the road when they wouldn’t interfere with developer productivity.

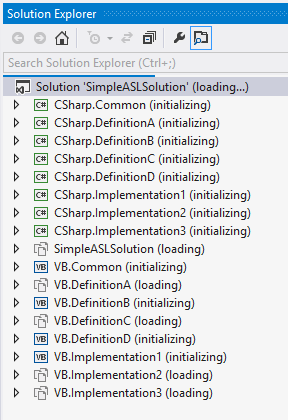

A previous post on this blog covers asynchronous solution loading in detail, so I won’t repeat it here. For this conversation, the key concept is that we use open documents to determine which projects should be loaded up front on the next solution load. Using the concept of ‘locality of reference’, we infer that if a document was open when the solution was closed, it is likely to be needed again soon after the solution is reopened. To ensure the document is usable after the solution loads, we must load the project that owns it (and any projects that project depends on) so that editing and navigation work as expected. Because we block the UI with a modal dialog until this is complete, we refer to this as the modal phase of solution load. Any remaining projects are loaded asynchronously later, when they won’t interfere with a developer’s productivity (referred to, unsurprisingly, as the async phase).
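To make the modal/async split concrete, here is a minimal Python sketch of how the set of modally loaded projects could be computed: start from the owners of the documents open at close time and walk their dependencies. The function names and the dictionary-based solution model are hypothetical illustrations, not Visual Studio’s actual implementation.

```python
from collections import deque


def modal_projects(open_docs, doc_owner, deps):
    """Return the projects that must load during the modal phase.

    open_docs: documents that were open when the solution was closed
    doc_owner: dict mapping document -> owning project (hypothetical model)
    deps:      dict mapping project -> list of projects it depends on
    """
    needed = set()
    queue = deque(doc_owner[d] for d in open_docs if d in doc_owner)
    while queue:
        project = queue.popleft()
        if project in needed:
            continue
        needed.add(project)
        # Dependencies must be loaded too, so editing and navigation
        # work correctly inside the open document.
        queue.extend(deps.get(project, []))
    return needed


def plan_load(all_projects, open_docs, doc_owner, deps):
    """Split projects into a modal set and an async (deferred) list."""
    modal = modal_projects(open_docs, doc_owner, deps)
    deferred = [p for p in all_projects if p not in modal]
    return sorted(modal), deferred


# Example: one document from "App" was open when the solution closed.
projects = ["App", "Core", "Utils", "Tests", "Tools"]
deps = {"App": ["Core"], "Core": ["Utils"], "Tests": ["App"], "Tools": []}
doc_owner = {"App/Main.cs": "App", "Tests/AppTests.cs": "Tests"}
modal, deferred = plan_load(projects, ["App/Main.cs"], doc_owner, deps)
print(modal, deferred)  # ['App', 'Core', 'Utils'] ['Tests', 'Tools']
```

Everything not reachable from an open document’s owner ends up in the deferred list, which corresponds to the async phase.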

Progress!

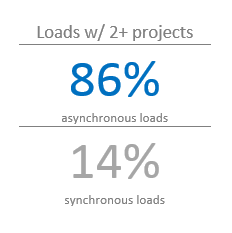

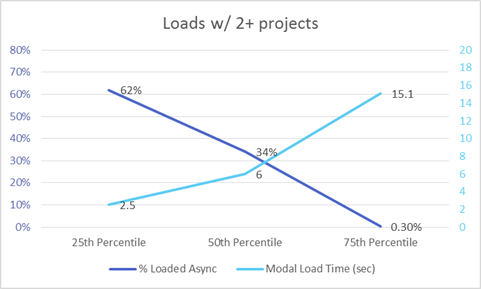

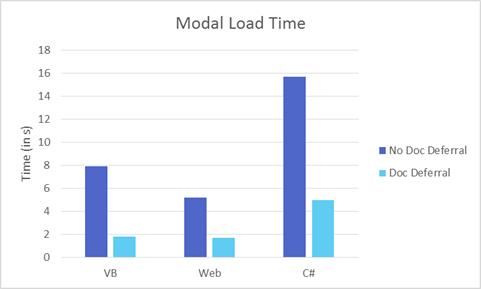

Our internal testing showed significant potential gains from loading solutions asynchronously, but what ultimately matters is how much users gain in practice. To measure this, we added new telemetry points that let us analyze how well asynchronous solution loading was working for our customers. Using those points, we can evaluate loads occurring in Visual Studio 2012 Update 3. While single-project solutions can be loaded asynchronously, they aren’t the primary scenario targeted by these improvements, so we’ll first look at loads containing at least 2 projects.

These numbers are pretty promising. We see that for a quarter of all solution loads, we defer loading over 60% of the solution and only block the IDE for around 2 seconds. As we move to higher percentiles, however, the impact of asynchronous solution load starts to diminish. By the 75th percentile, loads are bringing in almost the entire solution synchronously, and the time to responsive has grown considerably.

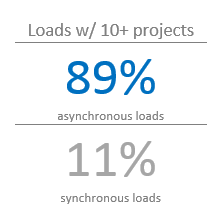

While even solutions with just 2 projects can benefit from asynchronous loading, we expected the impact to be much more noticeable for larger solutions. Focusing on solutions with 10 or more projects, we can indeed see a greater impact from asynchronous loading.

At the 75th percentile, the share of projects deferred rises from 0.3% to 18%. And though the modal load time has increased slightly, it would be much greater without deferred project loading.

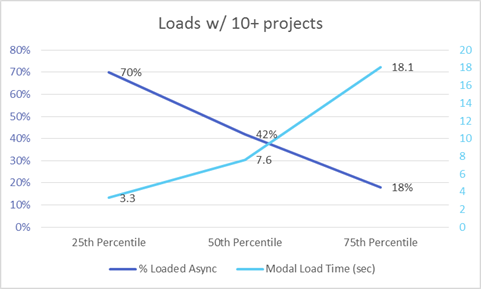

While this was encouraging progress, we wanted to know what else could be done to further improve the number of projects loaded asynchronously. Looking again at our telemetry, we found that one of the variables most strongly correlated with slower load times was the number of documents left open between sessions.

Looking at loads this way revealed that having even one project with an open document greatly increases the number of modally loaded projects. We still see noticeable improvements in the time it takes the IDE to become responsive, but there was clearly room for further improvement.

Based on this, for VS 2013 we asked ourselves a familiar question: “What would happen if we loaded only the documents a user was most likely to use?”

Getting Lazy. In a Good Way.

As a result of normal development activities (such as editing & debugging), a solution can often end up with many documents open in the IDE. The chart above indicates that as the number of projects with open documents increases, so too does the number of projects synchronously loaded. However, many of those documents aren’t actively being used and are effectively hidden behind other documents or off screen. Typically, only a small subset of documents is visible at any given time. These are most likely the last documents a developer used before closing the solution, and the first ones they will use on the next solution load.

Using the same logic that led us to only load the essential projects in the solution up front, we reasoned that if we only load projects for the visible documents, we could further reduce the number of projects that had to be loaded synchronously. The projects that owned the remaining, non-visible documents would now load asynchronously, just as if they didn’t own any open documents at all. Only in the event that one of the non-visible documents needs to become visible – such as when the document’s tab is clicked – do we incur the cost of loading the necessary projects.
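A rough Python model of this refinement, assuming the same kind of toy dictionaries for document ownership and project dependencies: only visible tabs drive the modal phase, and activating a hidden tab is what triggers loading its owning project and that project’s dependencies. The class and method names are hypothetical, and the model leaves out the async phase, which would eventually load the remaining projects anyway.

```python
class DeferredDocumentLoad:
    """Hypothetical model: visible tabs drive the modal phase; hidden tabs
    load their owning projects only when activated."""

    def __init__(self, doc_owner, deps):
        self.doc_owner = doc_owner  # document -> owning project
        self.deps = deps            # project -> list of dependencies
        self.loaded = set()
        self.pending_tabs = []      # open but not yet initialized

    def _load_with_dependencies(self, project):
        """Load a project and its dependencies; return what was newly loaded."""
        newly_loaded = []
        stack = [project]
        while stack:
            p = stack.pop()
            if p in self.loaded:
                continue
            self.loaded.add(p)
            newly_loaded.append(p)
            stack.extend(self.deps.get(p, []))
        return newly_loaded

    def open_solution(self, open_tabs, visible_tabs):
        # Modal phase: only projects behind visible tabs (plus dependencies).
        for doc in visible_tabs:
            self._load_with_dependencies(self.doc_owner[doc])
        self.pending_tabs = [d for d in open_tabs if d not in visible_tabs]
        return set(self.loaded)

    def activate_tab(self, doc):
        # Clicking a deferred tab is the point where the load cost is paid.
        if doc in self.pending_tabs:
            self.pending_tabs.remove(doc)
        return self._load_with_dependencies(self.doc_owner[doc])


doc_owner = {"Main.cs": "App", "Form1.cs": "Designer"}
deps = {"App": ["Utils"], "Designer": ["Core"]}
load = DeferredDocumentLoad(doc_owner, deps)
print(load.open_solution(["Main.cs", "Form1.cs"], visible_tabs=["Main.cs"]))
```

Activating "Form1.cs" afterwards loads "Designer" and "Core"; activating it a second time loads nothing, since both are already in memory.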

We also discovered another set of savings by deferring document loads. In addition to avoiding certain project loads, we also noticed that we could potentially defer loading entire components of the IDE. Each distinct type of document that is opened in the IDE has a corresponding set of assemblies and data structures that need to be loaded and initialized in order to properly render that document (designers, for example). For any of these document types that are open, but non-visible when the solution is loaded, we now also avoid paying the cost of bootstrapping those subsystems until they are required. In some cases, the time saved by not loading these components was actually greater than the time saved by not loading the projects!
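The same pay-on-first-use idea applies to per-document-type components. The sketch below uses a hypothetical EditorComponents registry keyed by document type: each type’s setup function runs only the first time a document of that type is actually needed. It illustrates lazy initialization in general, not how the IDE’s component loading is actually built.

```python
class EditorComponents:
    """Hypothetical registry that bootstraps a document type's components
    (editor, designer, etc.) only on first use."""

    def __init__(self, factories):
        self._factories = factories  # doc type -> zero-argument setup function
        self._ready = {}             # doc type -> initialized component
        self.init_log = []           # order in which types were bootstrapped

    def get(self, doc_type):
        if doc_type not in self._ready:
            # Expensive setup happens here, once per document type.
            self.init_log.append(doc_type)
            self._ready[doc_type] = self._factories[doc_type]()
        return self._ready[doc_type]


components = EditorComponents({
    "code": lambda: "code editor",
    "winforms": lambda: "WinForms designer",
})
components.get("code")
components.get("code")
print(components.init_log)  # ['code']; the designer was never bootstrapped
```

If the only designer document is open but hidden, its setup function never runs unless the user switches to that tab.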

What It Looks Like

Visually, the IDE looks the same as before. All the open document tabs from the previous session are displayed exactly as they were when the solution was closed, and they all appear to be loaded just as before. However, only the fully visible documents are loaded; the rest remain in an uninitialized state until needed.

For extenders who need to interact with the open documents programmatically, they will continue to find all the documents present in the Running Document Table (RDT). The normal methods of accessing the documents through the RDT have been updated to ensure that the necessary projects are loaded and the document is fully initialized before returning the desired content.

A note on best practices for VS extenders: To help ensure document deferral works to its full potential, it’s important that components and extensions access deferred documents only when truly necessary. Extensions can check for the following new properties to detect when a document is in the deferred state and wait until it is fully loaded before interacting with it. For IVsWindowFrame, check for VSFPROPID_PendingInitialization. For native code working with the running document table, check for the RDT_PendingInitialization flag being returned from IVsRunningDocumentTable.GetDocumentInfo(). For managed code, use IVsRunningDocumentTable4.GetDocumentFlags() to check for the same flag. When a deferred document is initialized, the RDTA_DocumentInitialized attribute will be announced by IVsRunningDocTableEvents3.OnAfterAttributeChangeEx.
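The pattern recommended above can be modeled in a few lines of Python. DocumentTable is a toy stand-in, not the VS SDK: is_pending() plays the role of checking RDT_PendingInitialization (or VSFPROPID_PendingInitialization on a window frame), get_content() mimics an RDT accessor that forces initialization before returning content, and subscribe_initialized() stands in for listening for RDTA_DocumentInitialized through IVsRunningDocTableEvents3.OnAfterAttributeChangeEx. WordCountExtension is a hypothetical extension that skips deferred documents and does its work once the initialization notification arrives.

```python
from typing import Callable, Dict, List


class DocumentTable:
    """Toy stand-in for the running document table (not the VS SDK API)."""

    def __init__(self, initialize: Callable[[str], str]):
        self._initialize = initialize  # moniker -> content (expensive step)
        self._content: Dict[str, str] = {}
        self._pending: Dict[str, bool] = {}
        self._listeners: List[Callable[[str], None]] = []

    def add_deferred(self, moniker: str) -> None:
        self._pending[moniker] = True

    def is_pending(self, moniker: str) -> bool:
        # Cheap check that never forces initialization.
        return self._pending[moniker]

    def subscribe_initialized(self, callback: Callable[[str], None]) -> None:
        self._listeners.append(callback)

    def get_content(self, moniker: str) -> str:
        # Like the updated RDT accessors: initialize first, then return.
        if self._pending[moniker]:
            self._content[moniker] = self._initialize(moniker)
            self._pending[moniker] = False
            for callback in self._listeners:
                callback(moniker)
        return self._content[moniker]


class WordCountExtension:
    """Hypothetical extension that never forces a deferred document to load."""

    def __init__(self, table: DocumentTable):
        self.table = table
        self.counts: Dict[str, int] = {}
        table.subscribe_initialized(self._on_initialized)

    def analyze(self, moniker: str) -> None:
        if self.table.is_pending(moniker):
            return  # wait for the initialized notification instead
        self._count(moniker)

    def _on_initialized(self, moniker: str) -> None:
        self._count(moniker)

    def _count(self, moniker: str) -> None:
        self.counts[moniker] = len(self.table.get_content(moniker).split())
```

The key design point is that the extension never calls get_content() on a pending document itself, so it doesn’t undo the savings from deferral. The cost is paid only when something that genuinely needs the document (in the IDE, typically the user clicking its tab) asks for its content, and the extension then catches up through the notification.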

Going Further

Based on our prototypes, we were pretty confident that delaying initialization of the non-visible documents would help shave time off of many solution loads. But we were left with the question of when these deferred documents should be loaded. After some discussion we ended up with two possibilities: either asynchronously at the end of the solution load, or on-demand as they are needed.

The first approach was a familiar one, since it’s the same strategy we use to load projects asynchronously. During the solution load, some projects and documents might briefly be in an uninitialized state, but eventually everything would reach the fully loaded state that most components of the IDE expect.

However, we found that in certain scenarios, initializing the documents asynchronously after the solution load is complete could lead to some very significant responsiveness issues. It’s important to remember that the IDE is in an interactive state at this point and we expect developers to be actively working on their solutions. Any noticeable delay in the UI could be an annoying disruption for someone trying to get work done. And while we saw that many documents could be initialized without causing perceptible UI hangs, some of the more complex documents would. Specifically, the first time we tried to initialize some designers or very large files, we could experience very noticeable delays as entire components were loaded and initialized for the first time. From the user’s viewpoint, this could happen unexpectedly and without warning. Definitely not a good user experience. So we opted for the second option of only loading documents on demand. Using this model, a user only ever pays the cost of loading a document if they absolutely need it.

Early Results

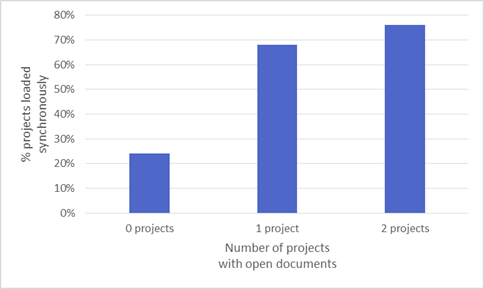

Using some of our internal benchmark solutions, we’ve been able to demonstrate just how much of an improvement deferring document initialization can provide with even a small number of open documents (in this case 5).

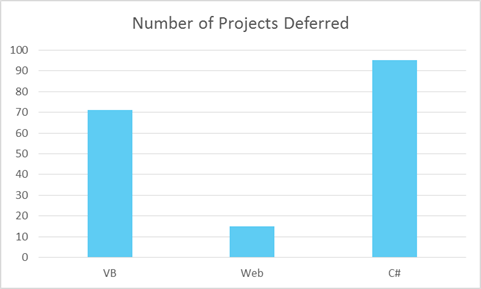

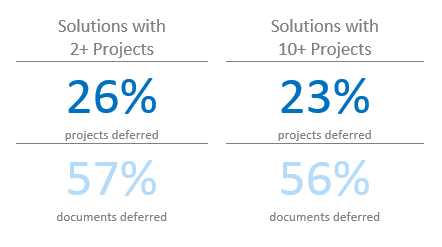

Of course, it’s more interesting to look at actual results users are experiencing from document deferral. To do that, we can take a look at some initial telemetry from the Preview and RC releases where delayed document initialization resulted in projects being deferred:

From this data, we can start to develop an early idea of how delaying document initialization is impacting solution loads of actual users. So far, we’re seeing about a quarter of the projects in a solution and over half the open documents being deferred from loading synchronously. And these project deferrals are in addition to any projects that would already have been loaded asynchronously!

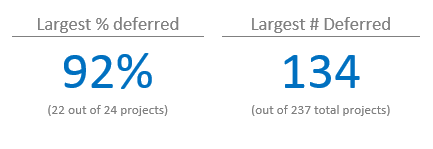

In addition to the general averages, we can also see some standout loads that really demonstrate what deferring document initialization is capable of:

Conclusion

In Visual Studio 2012, we took a significant first step toward improving the performance of loading solutions in the IDE. With deferred document initialization, we’ve taken another step to reduce the amount of time spent waiting for solutions to load and allow users to start being productive earlier than before.

In addition to showing off a new feature in the next release, we hope this post shows how important telemetry and your feedback are when we’re planning future features and improvements. We’ll continue to analyze the data we receive from the Customer Experience Improvement Program to evaluate solution load quality, in addition to other channels available to you to provide feedback. So grab the RC release and let us hear from you through Connect, UserVoice, the Send-A-Smile system in VS, or in the comments below!
