Concurrency Visualizer as a Microscope for Execution Dynamics

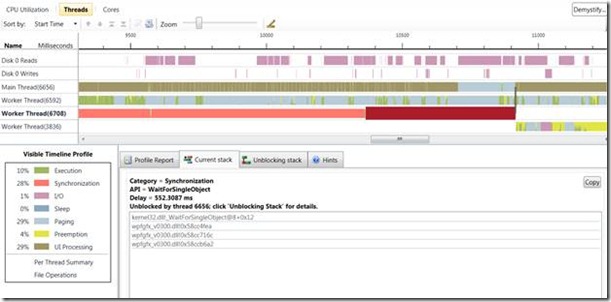

![clip_image002[4] clip_image002[4]](https://msdntnarchive.blob.core.windows.net/media/MSDNBlogsFS/prod.evol.blogs.msdn.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/01/30/71/metablogapi/8420.clip_image0024_thumb_491AB054.jpg) This is the picture that Concurrency Visualizer team used on the title page of internal specs. It actually reveals how most of us think about our product: not as a profiler (though you can get decent sample profile from it by clicking the green “Execution” category in the legend),

This is the picture that Concurrency Visualizer team used on the title page of internal specs. It actually reveals how most of us think about our product: not as a profiler (though you can get decent sample profile from it by clicking the green “Execution” category in the legend),

and not even as a performance analyzer (though it is the main usage of it today).

No, we feel it can be more important in a wider scenario – helping people understand what’s going on. In some sense the biggest practical problem of dealing with software is opaqueness: while shoemaker sees and touches the object of his labor, programmer can just talk about it. Doctors also used to talk about our guts - they built multiple diagnostic theories and looked for secondary signs to exclude/confirm them; now in many cases they just send us to some “Imaging Department” and immediately see what’s going on. For example, I did a root canal last year, and on the way back built the following literal analogy:

My tooth is in pain |

Program is painfully slow |

The dentist notices a bump on my gum |

Task manager icon does not look very green |

The dentist takes a jaw-wide panel X-Ray |

The programmer goes to CPU utilization view |

The dentist notices dark spot in the film |

The programmer sees a dip in the CPU utilization view |

The dentist takes digital X-ray of the dark area |

The programmer switches to thread blocking view |

The dentists traces the root |

The programmer traces blocking/unblocking chain |

The dentist sees an exact spot of inflammation |

The programmer finds a problem – too long wait for X |

I am sure before X-rays dentists would have less chances to find the actual problem… they probably just extracted the teeth (don’t want even to think how they did it 100 years ago… probably pulled the door?)

I remember my first computer in freshman year (it was long before they appeared in kindergartens), with a lamp indicating CPU activity. It was blinking slowly enough to visually differentiate execution of, say, SQRT, from sequences of MOV, ADD, etc. I looked at it and saw what my program was doing. Then they let us use a more serious computer and, to my horror, it happened to be quite opaque - I did not see anything there… note that it was before debuggers. You couldn’t even printf from assembly code, so for a few years I was busy building theories like “the result could be wrong because X may be not 1… or because Y happens before Z…” etc… I vividly remember these coffee-filled Sherlock Holmes-style nights.

Well, then they taught us higher level languages; there, I could easily printf anything I wanted to know. I could know what’s going on via these long logs, if I had invested sufficient effort into adding print statements. It was OK at the time, though the logs were loooooong… I color-coded lines and drew sketches on the side to re-express what I read in a more comprehensible and accessible way…

But then people started to write asynchronously – instead of the solid prose, programs now reminded short verses (chunks of code, reactively called by certain triggers), and these triggers were more and more independent (e.g. disk write finished, network packet arrived). Add to this multitasking (even though there was still only one CPU, it alternated tasks periodically)… and it was hard again. Lots of midnight oil (at this time I learned to add cognac to my coffee – this combination holds better through the night). E.g. to relay data between computers reliably and efficiently in my previous project, MSMQ, we simultaneously started disk and network operations. If network relay finished first, we canceled disk write; if one of them failed, did X, otherwise Y. Now imagine that some message went lost - go figure out where was the bug… again, logs and theories… and again, midnight oil.

And then computers became multicore; at first, people largely ignored it, but then they started to use multithreading for real. And this is when all hell broke loose. Concurrent programming is a minefield: you step too far left, you explode… too far right, explode… it reminds me of the old game of minesweeper:

What is so difficult in multithreaded programming? It is hard to keep track of the ordering variations when there are many independent actors (you need to keep in mind all possible event interleavings). Psychologists know about the “sequential nature of thinking” (half-joke is that it is an evolution step – e.g. pig already has it, but duck does not yet). Unfortunately, “visual” type people (usually more artistic ones) do it better than “logic types”, which most programmers tend to be. Education adds to the problem - programmers are educated to think sequentially (e.g. Turing machine, Von Neumann architecture), CS courses teach sequential programming first (and the first language leaves footprint forever). We interview a lot of people for our concurrency-related teams and we know from this experience that most people are concurrency-deaf, even if they are otherwise excellent programmers. It is terribly hard to find “naturally-parallel-ready people” – it is a rare and special talent.

My visualization for the concurrency problem is below: the degree of redness indicates the danger. Some areas of your project are more likely to have concurrency problems than others. And programmers of different skill levels will have different probabilities of mistakes in each of these areas. Concurrency programming project is actually a game – “use your available players to touch all cells in minimal time, avoiding explosion”:

The most blatant problem in concurrent programming is correctness – mostly inadequate shared state protection. Here is an obvious example: routines P {A; B; C;} and R {D; E; F;} both refer to the common data item S. Counterintuitive, P and R cannot assume anything easy about S: it could be suddenly changed asynchronously since “my last setting”. Both P and R should follow some global convention (e.g., taking locks); but who will enforce it? Alternatively, P and R could hand-craft the custom code that takes care of all possible interleavings of ABC and DEF (this is sometimes done for performance reasons in system software; very hard to do it right). Of course, there are other approaches – functional programming (like F#), message-passing (like Axum), transactional memory (like my previous project STM.NET), pre-packaged parallelism (concurrency runtimes and data structures), static analysis, debuggers, profilers, etc. But let’s be blunt – neither of these had solved all practical problems yet… the result is a lot of bugs – hangs, deadlocks, crashes - enough of them to inspire scientific papers like A Comprehensive Study on Real World Concurrency Bug Characteristics.

While the crisis in the correctness aspect of concurrent programming is obvious, people frequently miss that the problem is lot wider than just plain bugs (go fix them, after all). Another part is performance, which can be drastically dependent on your understanding of the temporal aspect (what happens when): but how can the programmer find opportunities or impediments for parallelism? Tools certainly emerge (e.g. this), but usually address a pretty narrow set of problems. So most practical programmers are back to vague theories… the debugger is of little helps since it smashes timing patterns… long logs and midnight oil again… Has anything changed in this industry? Oh yes, it is the next loop of the spiral - sequential programming is really much easier now than it was then.

But concurrency usage grows like mushrooms after the rain (How do programs become more concurrent? ). And above the debugging and performance aspects towers the most important problem of all: just understanding what’s going on. Everything changes around you all the time – code, people working on this code, project goals, data sets, hardware, environment – and the programmer constantly finds herself needing to learn how the program behaves in these new situations. What is the execution dynamics – when/where do the pieces work? What changed here since the previous version? What is so different in this data set? Why does it break on this hardware? The programmer needs to find out how his program executes in time and space. But what does it mean - “executes”? Well, Homo sapiens think top-down… first you need to get oriented at a highest level (“from 50000 feet”), then find an interesting area and dig into it (repeat recursively). But computers (and most programs) are black boxes – your stuff runs basically in the darkness.

And here is where the Concurrency Visualizer can help. It is an X-Ray, or microscope, showing you how the story unfolds during your program execution, in time and space. You can see the temporal history (“Does CPU do anything at all? When? Which code exactly is executed on this thread? Is it what I expected?”). Then you focus on some specific threads during some short sub-period… why is it blocked? “Ah, here is who is holding me! But I thought it would happen on a previous stage!” Bingo - you learned something. The behavior differs from your picture of the world. It may be not a correctness bug, or have no performance implications, but it is important anyway – you thought about this program incorrectly, your mental model of execution logic and dynamics was wrong. It is important by itself because you will base your other decisions on it. You cannot claim understanding your code without understanding its dynamics. And the best way to present the execution dynamics is an interactive visual representation with direct links to the code.

The Concurrency Visualizer is a microscope into the execution dynamics of a program. Hence the picture on our specs.

And you are likely to be surprised by what you see. All of us are – every time we look in the microscope; it inevitably shows that programs, runtimes or OS work slightly differently from what we thought… e.g. if I launch & profile the trivial MAIN with

Using (StreamWriter outfile = new StreamWriter("AAA.TXT")) { for (int i = 0; i<1000000; i++) outfile.Write("xxx"); }

I will be surprised to find no AAA.TXT in the file write channel (or file operations report). But if I expand profiling interval little bit beyond process boundaries, I would see that all file writing actually happens after my MAIN exited:

But I never thought about it while coding! And it may be important – e.g. I should not be sending any “mission accomplished” signal after the end of this program, since the computer still may crash before writing completes.

Don’t feel ashamed - even world class experts hit surprises (e.g. debugging guru John Robbins writes about it too). The first rule in software investigations is “it is probably not what you thought”. And we hope that the Concurrency Visualizer microscope gives you a way to find it out, top-down, visually. Use it for every concurrent program you touch – just to get or validate your understanding of execution dynamics; this knowledge will pay you back many times over. And good luck with concurrency!

Sasha Dadiomov – Parallel Computing Platform

![clip_image004[4] clip_image004[4]](https://msdntnarchive.blob.core.windows.net/media/MSDNBlogsFS/prod.evol.blogs.msdn.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/01/30/71/metablogapi/5756.clip_image0044_thumb_4D221075.jpg)

![clip_image006[4] clip_image006[4]](https://msdntnarchive.blob.core.windows.net/media/MSDNBlogsFS/prod.evol.blogs.msdn.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/01/30/71/metablogapi/4606.clip_image0064_thumb_5680C35A.jpg)

![clip_image008[4] clip_image008[4]](https://msdntnarchive.blob.core.windows.net/media/MSDNBlogsFS/prod.evol.blogs.msdn.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/01/30/71/metablogapi/8867.clip_image0084_thumb_4596A108.jpg)

![clip_image002[5] clip_image002[5]](https://msdntnarchive.blob.core.windows.net/media/MSDNBlogsFS/prod.evol.blogs.msdn.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/01/30/71/metablogapi/0728.clip_image0025_thumb_209BCE40.jpg)