How Hyper-V responds to disk failure

I have talked to a handful of people over the years who have had to deal with Hyper-V servers that have suffered disk failure. This can be quite problematic to diagnose and troubleshoot – so I wanted to spend some time digging into what happens and what you should look for.

To simulate this scenario, I created a virtual machine with two virtual hard disks. The first virtual hard disk was stored locally, while the second virtual hard disk was stored on a USB flash drive. I then put the operating system on the first virtual hard disk, and an application on the second virtual hard disk (yes – the application was Plants vs. Zombies). Once it was up and running, I pulled the USB flash drive out of the server and recorded what happened.

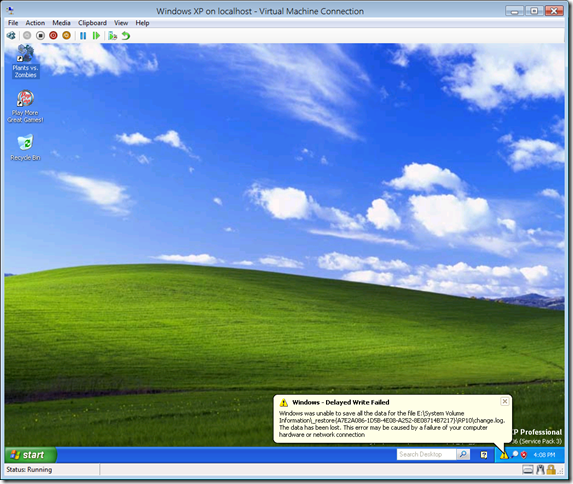

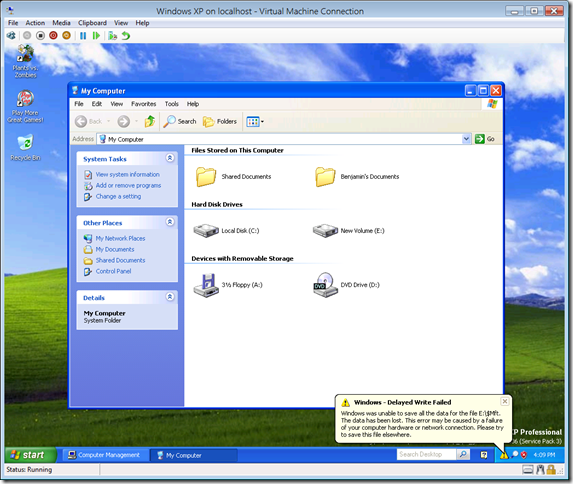

The first thing to note is – because it was not the operating system disk that disappeared – the virtual machine continued to run, and the guest operating system (Windows XP in this case) continued to run. Surprisingly the application also ran… for a while. After about 30 seconds the application silently exited and I started to see Windows alerts about write failures:

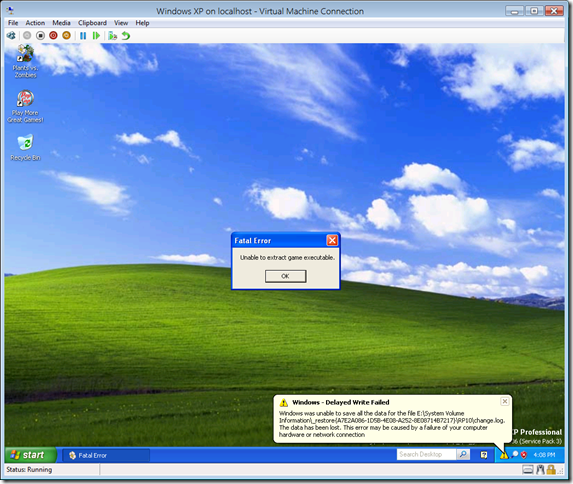

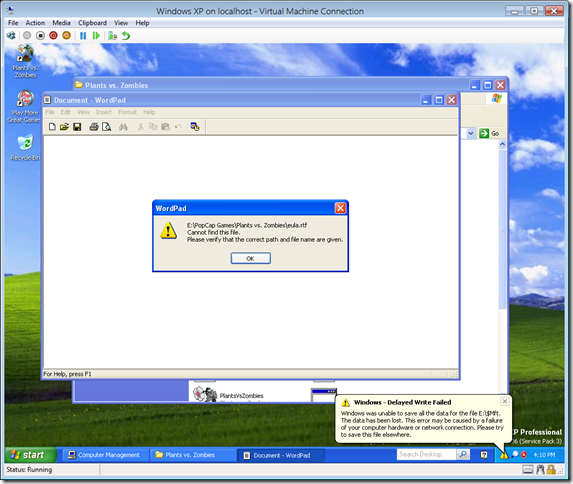

If I tried to start the application again – I would get a cryptic error message:

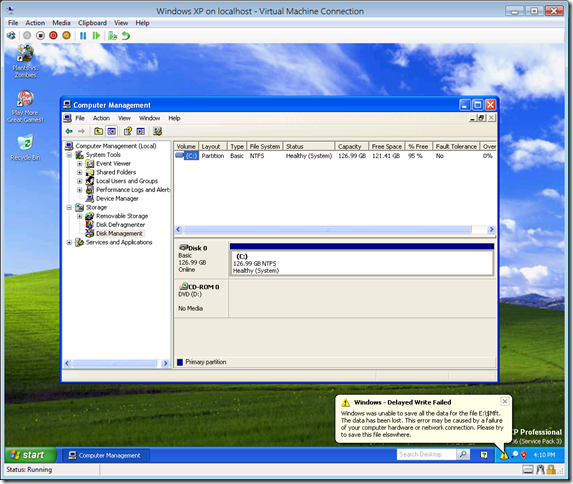

Exploring the guest operating system shows some significant confusion at this point. Disk Management believes that the disk (E:) has disappeared:

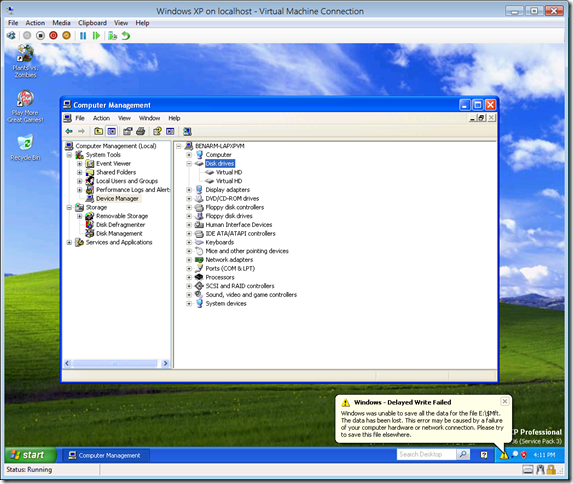

But Device Manager and Windows Explorer believe that the disk is still there:

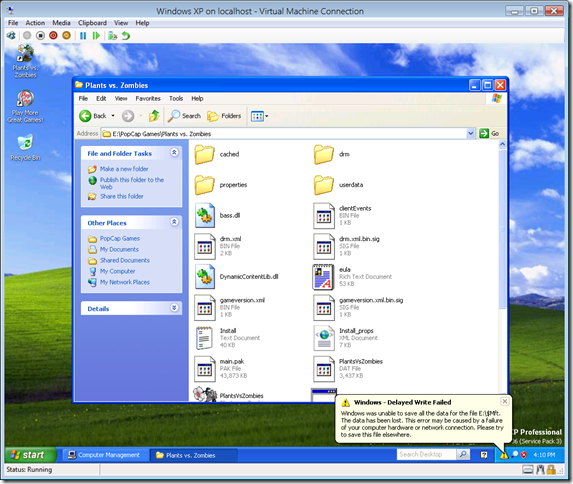

I can even browse the missing E: disk, but trying to open a file fails:

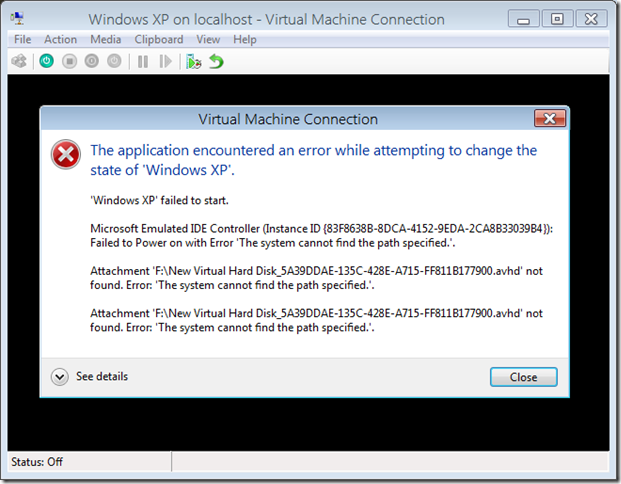

At this stage, the virtual machine will continue to run indefinitely in this mode. However, if the virtual machine tries to reboot, it will fail.

Given all of this – the real trap that I have seen people fall into is this: if the missing physical disk is reconnected while the virtual machine is still running, the virtual hard disk will *not* be reconnected to the virtual machine. Where I have seen this cause problems is in a scenario like this:

You have a bunch of virtual machines whose virtual hard disks are stored on remote network-based storage (e.g. iSCSI). Late one night there is a large glitch in the network (e.g. a switch reboots) and the remote disks disappear for a minute or two and then come back. This causes all of the virtual machines to lose their virtual hard disks.

When the server administrator comes in the next morning, they find that a number of their virtual machines are in a strange state. The virtual machines seem to be having problems accessing their disks – but the disks are not gone completely. Furthermore, if the administrator looks at the parent partition, the disks are there and everything looks fine.

Nine times out of ten, the server administrator decides to reboot the affected virtual machines – which does reconnect the missing virtual hard disks – and everything goes back to normal. However, the server administrator has no idea what happened.

So how do you figure out if this is what happened in your environment?

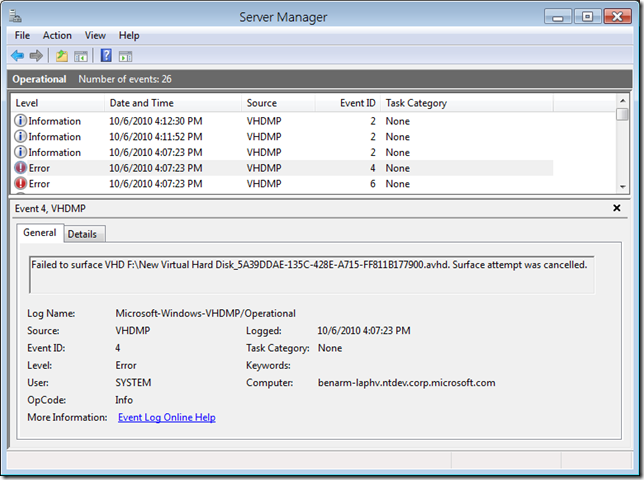

The first thing to do is to look at the Windows System event log. If there is any underlying hardware problem that is causing disks to come and go, there will be event log entries here to indicate that this is happening. However, there will be nothing there in the case of a disk removal (e.g. a USB disk getting pulled, or an iSCSI disk going away for a moment). For these scenarios you can look at the event log entries under Applications and Services Logs -> Microsoft -> Windows -> VHDMP -> Operational. If a virtual hard disk is ever removed from a virtual machine due to an underlying storage error, you will see an error event log entry here.
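If you would rather check this log from a script than click through Event Viewer, here is a minimal sketch in Python that shells out to the built-in `wevtutil` tool and pulls out the error-level entries. Treat it as a starting point rather than a finished tool. The channel name in `VHDMP_CHANNEL` is my assumption and it varies between Windows versions (run `wevtutil el` to see what your server calls it), and the function names and dictionary keys are just ones I made up for the example.

```python
import subprocess
import xml.etree.ElementTree as ET

# XML namespace used by Windows event log records.
NS_URI = "http://schemas.microsoft.com/win/2004/08/events/event"
EVENT_NS = {"e": NS_URI}

# ASSUMPTION: the channel name differs between Windows versions
# (some use "Microsoft-Windows-VHDMP/Operational"). Check `wevtutil el`.
VHDMP_CHANNEL = "Microsoft-Windows-VHDMP-Operational"


def build_query_command(channel=VHDMP_CHANNEL, max_events=50):
    """Build a wevtutil command for the newest error-level events, as XML."""
    return [
        "wevtutil", "qe", channel,
        "/q:*[System[(Level=2)]]",  # Level 2 = Error
        "/f:xml",                   # XML output
        "/rd:true",                 # newest events first
        f"/c:{max_events}",         # cap the number of events returned
    ]


def parse_error_events(xml_text):
    """Return a list of dicts (time, event_id, details) for error events."""
    # wevtutil prints a bare sequence of <Event> elements, so wrap them
    # in a single root element before parsing.
    root = ET.fromstring(f"<Events>{xml_text}</Events>")
    results = []
    for event in root.findall("e:Event", EVENT_NS):
        system = event.find("e:System", EVENT_NS)
        if system is None:
            continue
        if system.findtext("e:Level", namespaces=EVENT_NS) != "2":
            continue
        created = system.find("e:TimeCreated", EVENT_NS)
        # Gather any text outside <System> (EventData or UserData),
        # since the payload layout depends on the event provider.
        details = [
            text.strip()
            for child in event
            if child.tag != system.tag
            for text in child.itertext()
            if text.strip()
        ]
        results.append({
            "time": created.get("SystemTime") if created is not None else None,
            "event_id": system.findtext("e:EventID", namespaces=EVENT_NS),
            "details": details,
        })
    return results


def query_vhdmp_errors(channel=VHDMP_CHANNEL, max_events=50):
    """Run wevtutil (Windows only) and return the parsed error events."""
    completed = subprocess.run(
        build_query_command(channel, max_events),
        capture_output=True, text=True, check=True,
    )
    return parse_error_events(completed.stdout)
```

Run `query_vhdmp_errors()` from an elevated prompt on the Hyper-V server. If the timestamps on the entries it returns line up with the night the virtual machines started misbehaving, you have a strong hint that the storage went away underneath them.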

Of course – once you have confirmed that this is what is causing the problem – the next task is to go and fix the hardware.

Cheers,

Ben