How to Get Started with Multi-Core: Parallel Processing You Can Use

One of the great features of Windows 7 and Windows Server 2008 R2 is support for machines with many cores. Both Windows 7 and Windows Server 2008 R2 support up to 256 logical processors. Computers with that kind of processing power aren’t available at commodity prices. Yet you probably have a 64-bit computer with two cores, and many of you have four.

To take advantage of all those processors, you need the ability to write parallel code and to debug that code. And in the past, writing threaded code has been challenging.

Visual Studio 2010 Beta 2 brings it all to life. You can download the edition you want to try from Download Beta 2. You’ll have your choice of each edition in Visual Studio 2010 or .NET Framework 4, including the free Express versions.

This posting should help get you acquainted with the terms and concepts around parallel and concurrent processing on multi-core computers.

NUMA

For C++ programmers, you can use the NUMA methods and take full advantage of all those processors. NUMA stands for Non-Uniform Memory Access. Under NUMA, a processor can access its own local memory faster than non-local memory, that is, memory local to another processor or memory shared between processors. This means that you need some precise control of processors and memory.

You can do that using the Windows Software Development Kit (SDK) and the new APIs that support NUMA.

The illustration shows how a group is organized.

- Windows Server 2008 R2 supports 256 logical processors

- Machine has 1 to 64 Processor Groups

- Group has 1+ NUMA nodes (logical, cache, and memory in proximity)

- NUMA node has 1+ Sockets

- Socket has 1+ Cores

- Core has 1+ LP (>1 if hyper-threaded)

Windows 7 and Windows Server 2008 R2 introduce the concept of a processor group. This is a new system-level abstraction encapsulating and extending the notion of processor-affinity. A thread, process, or interrupt can indicate a preference for an operation on a particular processor, node, or group.

You can see how your own processors are organized. Get Coreinfo from Windows Sysinternals. Coreinfo is a free command-line utility that shows you the mapping between logical processors and the physical processor, NUMA node, and socket on which they reside, as well as the caches assigned to each logical processor. Under the hood, Coreinfo calls the GetLogicalProcessorInformation function, which returns how your processors are organized.
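From managed code, a quick first check is the logical processor count that the runtime reports. The sketch below is only an illustration (the class and method names are made up for this example), and it does not show the NUMA node, socket, or cache layout that Coreinfo reports.

```csharp
using System;

public static class ProcessorInfo
{
    // Returns the number of logical processors the runtime reports for this process.
    public static int LogicalProcessorCount()
    {
        return Environment.ProcessorCount;
    }

    public static void Main()
    {
        Console.WriteLine("Logical processors: " + LogicalProcessorCount());
        // IntPtr.Size is 8 in a 64-bit process and 4 in a 32-bit process.
        Console.WriteLine("64-bit process: " + (IntPtr.Size == 8));
    }
}
```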

To learn more about how you can support NUMA in unmanaged code, see the MSDN documentation at Process and Thread Functions. For more information, see NUMA Support Functions.

For more information, see New NUMA Support with Windows Server 2008 R2 and Windows 7.

You can learn more about NUMA processing from a series of Webcasts on Channel 9:

- Program Manager Discussion

- Topical Introduction

- Demo1 - System Topology API

- Demo2 - NUMA Device/Thread Locality

- Demo3 - NUMA Processor/Memory Locality

Threads

Just because you are using threads doesn’t mean you are doing multi-core programming. You would use threads when you want your user interface to stay responsive while it would otherwise be blocked waiting for a compute-heavy or I/O-heavy operation. Threads work even on a single-core machine.

But they have a cost. And that cost means that if you have thousands of methods that do computing, each on its own thread, you can bring a computer to its knees or crash your program.

Let’s take an example. We have a Tree object that we pass into a recursive method. The idea is to walk the nodes of a tree and do some work in ProcessItem.

private static void WalkTree(Tree tree)

{

if (tree == null) return;

WalkTree(tree.Left);

WalkTree(tree.Right);

ProcessItem(tree.Data);

}

This is how you write your code today. So if you rewrite that code to use threads, it might look like this:

private static void WalkTree(Tree tree)

{

if (tree == null) return;

Thread left = new Thread(o => WalkTree(tree.Left));

left.Start();

Thread right = new Thread(o => WalkTree(tree.Right));

right.Start();

left.Join();

right.Join();

ProcessItem(tree.Data);

}

But if you run this on an x86 machine against a tree with more than a thousand nodes, the code does not speed up. In fact, it takes a lot longer to run because of the overhead of the threads. Each thread takes time to create and destroy, and each one reserves memory for its stack. In a 32-bit address space you can create only approximately 1,360 threads per process, so with enough nodes the program will crash. The code also doesn’t really take advantage of having multiple processors, and it’s hard to get a result back from a thread.

Using System.Threading.Tasks

The CLR uses only processor group 0 and doesn’t call any of the new Windows NUMA APIs. If you are a C# or VB developer, you’ll be able to use up to 64 processors. This provides plenty of processing power for the foreseeable future on commodity hardware. But rather than making you embed NUMA calls in your code, .NET Framework 4 provides a new namespace that lets you take advantage of the parallel processing power in your PC.

In .NET Framework 4, you can use the Task class in the System.Threading.Tasks namespace. Task, the main class in the namespace, represents an asynchronous operation and helps you write concurrent and asynchronous code. Where Thread is expensive, Task is relatively cheap.

Here’s the example that rewrites WalkTree to work with Task.

using System.Threading.Tasks;

private static void WalkTree(Tree tree)

{

if (tree == null) return;

Task left = new Task(() => WalkTree(tree.Left));

left.Start();

Task right = new Task(() => WalkTree(tree.Right));

right.Start();

left.Wait();

right.Wait();

ProcessItem(tree.Data);

}

When you run this on your dual-core computer, you can get nearly twice the speed because Task fills the available logical processors with its tasks. On a quad-core computer, you can get nearly four times the speed. It also won’t crash when confronted with thousands of Tasks. There is some overhead, so you want to use Task only when you have lots of items to process and when your processing is not bound by I/O. It depends on what you are trying to accomplish, and you should time each operation to be sure you’re getting the kind of improvement you expect.
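One way to time an operation is with System.Diagnostics.Stopwatch. The following is a minimal sketch, not a rigorous benchmark: Work is a hypothetical CPU-bound method invented for this example, and the item count and loop size are arbitrary. The numbers you see depend on your hardware and on what else the machine is doing.

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;

public static class TimingSketch
{
    // Hypothetical CPU-bound work item, used only for illustration.
    public static double Work(int n)
    {
        double sum = 0;
        for (int i = 1; i <= n; i++) sum += Math.Sqrt(i);
        return sum;
    }

    // Runs Work once per item on the calling thread.
    public static double[] RunSequential(int items, int n)
    {
        var results = new double[items];
        for (int i = 0; i < items; i++) results[i] = Work(n);
        return results;
    }

    // Starts one Task per item, waits for all of them, then collects the results.
    public static double[] RunWithTasks(int items, int n)
    {
        var tasks = new Task<double>[items];
        for (int i = 0; i < items; i++)
            tasks[i] = Task.Factory.StartNew(() => Work(n));
        Task.WaitAll(tasks);

        var results = new double[items];
        for (int i = 0; i < items; i++) results[i] = tasks[i].Result;
        return results;
    }

    public static void Main()
    {
        const int items = 64;     // arbitrary number of work items
        const int n = 2000000;    // arbitrary amount of work per item

        var sw = Stopwatch.StartNew();
        RunSequential(items, n);
        sw.Stop();
        Console.WriteLine("Sequential: " + sw.ElapsedMilliseconds + " ms");

        sw = Stopwatch.StartNew();
        RunWithTasks(items, n);
        sw.Stop();
        Console.WriteLine("Tasks:      " + sw.ElapsedMilliseconds + " ms");
    }
}
```

Run a measurement like this several times and against realistic data before deciding whether the parallel version is worth keeping.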

Imperative Task Parallelism Using System.Threading.Tasks.Task class

.NET Framework 4 has several ways for you to use Task. We have some big names for them. But the first is called Imperative Task Parallelism. And what that means to programmers is that you use the System.Threading.Tasks.Task class.

You can create a Task in several ways. You can new up a Task and pass in a delegate or lambda function as in the preceding code. Or you can use the Task factory.

The most common approach is by using the Task type's Factory property to retrieve a TaskFactory instance that can be used to create tasks for several purposes. For example, to create a Task that runs an action, the factory's StartNew method may be used:

var t = Task.Factory.StartNew(() => DoAction());

By calling StartNew, you both create the Task and start it up. Or you can create a Task and delay starting it up until needed:

var t2 = new Task<DateTime>(() => DateTime.Now);

t2.Start();

t2.Wait();

Console.WriteLine(t2.Result);

You’re also able to get the result of the task and do something with it. Wait synchronizes your code with the task. In addition, if you have created many tasks, you can put them in an array and wait until all of the tasks complete (Task.WaitAll) or until any one of them completes (Task.WaitAny). Tasks also support cancellation, which threads do not.

In the example, I call the Wait method. This is not always preferable because Wait blocks the calling thread until the task completes. When you can, use ContinueWith. It creates a continuation that executes when the target Task completes.

var t4 = Task.Factory.StartNew(() => DateTime.Now);

var t5 = t4.ContinueWith(task =>

Console.WriteLine(task.Result));
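Waiting on an array of tasks and cancelling a task, both mentioned earlier, can be sketched as follows. This is only an illustration: Square and the class name are hypothetical, and the cancellation case shows the simplest situation, where the token is already cancelled before the task gets a chance to run.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class WaitSketch
{
    // Hypothetical work item, used only for illustration.
    public static int Square(int x) { return x * x; }

    // Starts one task per value 1..count and waits for all of them.
    public static int SumOfSquares(int count)
    {
        var tasks = new Task<int>[count];
        for (int i = 0; i < count; i++)
        {
            int value = i + 1; // copy so each lambda captures its own value
            tasks[i] = Task.Factory.StartNew(() => Square(value));
        }

        Task.WaitAll(tasks); // blocks until every task has completed

        int sum = 0;
        foreach (var t in tasks) sum += t.Result;
        return sum;
    }

    // Returns true if a task started with an already-cancelled token ends up cancelled.
    public static bool CancelBeforeStart()
    {
        var cts = new CancellationTokenSource();
        cts.Cancel();
        var task = Task.Factory.StartNew(() => Square(2), cts.Token);
        try { task.Wait(); }
        catch (AggregateException) { } // Wait reports cancellation as an exception
        return task.IsCanceled;
    }

    public static void Main()
    {
        Console.WriteLine(SumOfSquares(3));

        var tasks = new Task[]
        {
            Task.Factory.StartNew(() => Square(3)),
            Task.Factory.StartNew(() => Square(4))
        };
        int first = Task.WaitAny(tasks); // index of a task that has completed
        Console.WriteLine("A completed task has index " + first);

        Console.WriteLine("Cancelled: " + CancelBeforeStart());
    }
}
```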

In addition, tasks can have parent-child relationships. In this example, a Task is created and started that contains a second task that is a child of the first task.

var parent = Task.Factory.StartNew(() => {

Task.Factory.StartNew( () => DateTime.Now,

TaskCreationOptions.AttachedToParent); // Child task

});

parent.Wait(); // Wait for parent & all children

The Task class offers you a lot of flexibility and manages the underlying threads.

If you are looking at using Task for I/O-bound work, like user interface input, file input/output, or even retrieving the results of a database query, you might still be able to use the Task class. Check out how Jeffrey Richter uses his AsyncEnumerator with Task in his blog posting, Using .NET 4.0 Tasks with the AsyncEnumerator, or see it in action at Jeffrey Richter and his AsyncEnumerator on Channel 9.

Imperative Data Parallelism Using Parallel For, ForEach, Invoke

The .NET Framework 4 has added some features that make it easy for developers to use parallel processing in their applications. When you use Parallel.For, Parallel.ForEach, or Parallel.Invoke, the Framework uses the Task class under the covers. It gives you another way to simplify your code.

This offers you a simple API for some very common scenarios. You should use these carefully. Don’t go through your code and replace every for loop with Parallel.For. These methods work only when the operations you are doing are independent; the iterations must not mutate shared state.

In addition, these methods don’t return until all operations complete. So your code will be waiting for completion. This may or may not be helpful to your particular code. This strategy can work well if you have either of these:

- Lots of items

- Lots of work for each item

Overhead is too great for few items with little work.

That said, this does work well and can speed up your code, if you are careful.

Using Parallel.For, you could change:

for (Int32 i = 0; i < 1000; i++)

DoWork(i);

to this:

Parallel.For(0, 1000, i => DoWork(i));
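The independence requirement matters as soon as a loop accumulates a result. Adding to a shared variable from every iteration would be a race. One possible way around it, sketched here, is the Parallel.For overload that gives each worker its own local value and merges the values at the end. The class and method names are hypothetical and exist only for this example.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class ParallelSumSketch
{
    // Sums the integers 1..n without letting iterations write to shared state directly.
    public static long SumTo(int n)
    {
        long total = 0;
        Parallel.For<long>(1, n + 1,
            () => 0L,                                      // each worker starts with its own subtotal
            (i, loopState, local) => local + i,            // iterations touch only the local subtotal
            local => Interlocked.Add(ref total, local));   // merge each subtotal once, atomically
        return total;
    }

    public static void Main()
    {
        Console.WriteLine(SumTo(1000));
    }
}
```

For a loop this small the parallel overhead likely outweighs any gain; the point is only the shape of the code.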

Using Parallel.ForEach, you can change:

foreach (var item in collection)

DoWork(item);

into:

Parallel.ForEach(collection, item => DoWork(item));

You can also use Parallel.Invoke to run several methods, potentially in parallel, by changing:

DoWork(1);

DoWork(2);

DoMoreWork(3);

into:

Parallel.Invoke(() => DoWork(1), () => DoWork(2), () => DoMoreWork(3));

For more information, see Task Parallel Library Overview.

Declarative Data Parallelism Using Parallel Linq

You can use Parallel LINQ (PLINQ) on any IEnumerable<T> object to process a collection of objects in parallel.

Where you would have

IEnumerable<T> data = ...;

var q = data.Where(

x => p(x)).OrderBy(x => k(x)).Select(x => f(x));

foreach (var e in q) a(e);

You would change your code into:

IEnumerable<T> data = ...;

var q = data.AsParallel().Where(

x => p(x)).OrderBy(x => k(x)).Select(x => f(x));

foreach (var e in q) a(e);

Aside from writing LINQ queries the same way you're used to writing them, there are two extra steps needed to make use of PLINQ:

- Reference the System.Core.dll assembly during compilation.

- Wrap your data source in a ParallelQuery<T> with a call to the System.Linq.ParallelEnumerable.AsParallel extension method.

Calling the AsParallel extension method in step 2 ensures that the C# or Visual Basic compiler binds to the System.Linq.ParallelEnumerable version of the standard query operators instead of System.Linq.Enumerable. This gives PLINQ a chance to take over control and to execute the query in parallel. AsParallel accepts any IEnumerable<T>.

For more information, see Running Queries On Multi-Core Processors and PLINQ changes since the MSDN Magazine article.
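As a concrete version of the Where, OrderBy, Select pattern shown earlier, here is a small runnable sketch. The predicate, sort key, and projection are placeholders chosen for this example, not anything prescribed by PLINQ.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class PlinqSketch
{
    // Keeps the even values, sorts them from largest to smallest, and squares them.
    public static List<int> EvenSquaresDescending(IEnumerable<int> data)
    {
        return data.AsParallel()
                   .Where(x => x % 2 == 0)
                   .OrderByDescending(x => x)
                   .Select(x => x * x)
                   .ToList();
    }

    public static void Main()
    {
        foreach (var e in EvenSquaresDescending(Enumerable.Range(1, 6)))
            Console.WriteLine(e);
    }
}
```

As with Parallel.For, a query over a handful of cheap items is unlikely to run faster in parallel; measure with your own data.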

Visual Studio 2010 Visualization

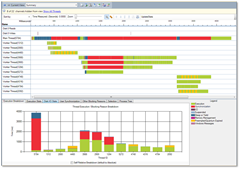

All this is well and good. But how do you profile and debug? Visual Studio 2010 provides parallelism and concurrency profiling tools so you can see the behavior of a multithreaded application on multi-cores. You can collect resource contention data.

For example, here is the Thread Blocking View. The top half of the screen shows thread details, swim-lane fashion. Each thread gets a swim lane. We only color in the swim lane while the thread exists, but we place it accurately in time. The bottom half of this screen shows a bar chart of the blocking reason breakdown for each thread shown in the top half of the screen.

The goal is to help you understand the causes of thread blocking events so you have actionable information. You can also see the aggregate costs of blocking call stacks.

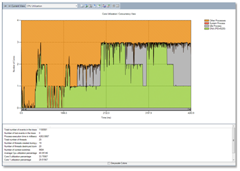

The core utilization view helps you learn or confirm the degree of concurrency in a particular scenario. You can use it to tune your code or to find opportunities for parallelism. It also helps you understand the degree of CPU contention with other processes.

The y-axis indicates the number of cores; in this case, the system has 4 cores. The x-axis indicates time in milliseconds. The green area shows the number of cores used by your process over time. The grey area shows the number of idle cores over time. The orange area shows the number of cores used by processes other than your own.

Gaston Hillar has put together an article for Dr. Dobbs entitled Visualizing Parallelism and Concurrency in Visual Studio 2010 Beta 2. Hillar walks you through how to profile your application.

Resources

Blogs

- Parallel Programming in Native Code

- Parallel Programming with .NET

- Windows Server Performance Team Blog

- Daniel Moth

Video

Parallel Programming for Managed Developers with the Next Version of Microsoft Visual Studio video on Channel 9 from PDC 2008.

See Rob Bagby and others discuss Develop with New Parallel Computing Technologies as part of The New Efficiency launch events live and on demand.

Developer Training Kit

Windows Server 2008 R2 Developer Training Kit - July 2009

Bruce D. Kyle

ISV Architect Evangelist | Microsoft Corporation

My special thanks to Jeffrey Richter at Wintellect and Rob Bagby for contributing to this article.