Smart Acc.: a web application based on the Faster-RCNN architecture that makes it easier to find accommodation in London.

Guest post by the Imperial College London / Microsoft Industry Exchange Project Group. The project was developed by Nattapat Chaimanowong, Suampa Ketpreechasawat, Tomasz Bartkowiak, Lin Li, Yini Fang and Danlin Peng, a group of MSc CS students from Imperial College London.

The visual impression is undoubtedly the most important factor influencing people’s decision concerning the choice of accommodation. An accommodation platform should aim to organize visual information so that it is simple and intuitive for the user to browse through. The user may also be interested in features that are available in a room. From the perspective of the accommodation provider, the process of updating such information can be tedious and time-consuming. A smart platform should be able to understand and adapt the search results to the user’s needs, as well as to automate and simplify the accommodation providers’ actions. Development of a product to address these issues provides a good opportunity for the application of machine learning algorithms, specifically classification and object detection models.

Our product, the Smart Acc web application, targets room owners who have a room to rent and anyone looking for accommodation in London. Its main functionality is to automatically classify the room type and identify all the features of the room. The automated feature tagging makes room searching more flexible and reduces the time homeowners spend manually filling in room descriptions. Another feature of the web app, Find me a Similar Room, allows users to search for rooms whose features are similar to those in an image they upload.
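The post does not say how "similar" is measured. As one plausible approach, assuming each room is stored with the set of feature tags detected in its photos, rooms could be ranked by the Jaccard similarity between their tag set and the tags detected in the uploaded image. The function names and data layout below are hypothetical, not the project's implementation.

```python
def jaccard(a, b):
    """Jaccard similarity |A ∩ B| / |A ∪ B| of two tag collections (0.0 if both are empty)."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def find_similar_rooms(query_tags, rooms, top_k=3):
    """Hypothetical ranking: `rooms` maps a room id to the list of feature tags detected for it."""
    ranked = sorted(rooms.items(), key=lambda item: jaccard(query_tags, item[1]), reverse=True)
    return [room_id for room_id, _ in ranked[:top_k]]


rooms = {
    "r1": ["bed", "pillow", "picture"],
    "r2": ["toilet", "sink"],
    "r3": ["bed", "table"],
}
print(find_similar_rooms(["bed", "pillow"], rooms, top_k=2))  # ['r1', 'r3']
```

Set overlap ignores object positions and counts, which matches the way the room classifier described later also ignores positions; a weighted or embedding-based similarity would be an alternative.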

Product Design

The design of the system involves four distinct modules: the website (also referred to as the web app), the back-end server, the object detector and the room classifier. The website provides a basic interface between the user and the server and supports services such as registering, logging in and room searching; requests are handled by the server and the results are sent back to the client. The specific functionalities, such as detecting objects in a particular image and classifying the room type based on those detections, are implemented behind the scenes on the server side, using the object detector module and the room classifier module (which are essentially the model files created during training). The figures below show high-level views of the overall architecture and of the room classification architecture.
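To make the data flow between the modules concrete, here is a minimal sketch of the server-side handling of an uploaded photo. The detector and classifier are hypothetical stubs standing in for the trained model files; the detection fields (`label`, `score`, `box`), the score threshold and the response layout are assumptions, not the project's actual API.

```python
import json


def stub_detector(image_bytes):
    """Hypothetical stand-in for the Faster-RCNN model: returns label, score and box per object."""
    return [
        {"label": "bed", "score": 0.97, "box": [40, 60, 400, 300]},
        {"label": "pillow", "score": 0.88, "box": [60, 70, 140, 110]},
        {"label": "towel", "score": 0.20, "box": [10, 10, 30, 50]},
    ]


def stub_room_classifier(labels):
    """Hypothetical stand-in for the trained room classifier (positions are not used)."""
    return "bedroom" if "bed" in labels else "unknown"


def handle_image_upload(image_bytes, detector=stub_detector,
                        room_classifier=stub_room_classifier, min_score=0.5):
    """Detect objects, classify the room from the detected labels, and reply with JSON."""
    detections = [d for d in detector(image_bytes) if d["score"] >= min_score]
    labels = [d["label"] for d in detections]
    return json.dumps({"room_type": room_classifier(labels), "objects": detections})


print(handle_image_upload(b"..."))
```

Passing the detector and classifier in as arguments keeps the request handler independent of how the models are loaded, so the stubs can be swapped for the real model files.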

Figure 1 High-level system architecture

Figure 2 High-level view of the detection/classification system

Object Detector

As in the baseline project, this module uses a state-of-the-art object detection model, Faster-RCNN, to locate objects and predict their classes.

Faster-RCNN consists of three components: a feature extractor, a Region Proposal Network (RPN) and a region-based classifier, Fast-RCNN. The feature extractor computes convolutional features from the raw input image; it is usually taken from another successful deep CNN, such as AlexNet or VGG-16. The feature map it outputs is shared by the RPN and Fast-RCNN as part of their inputs.

Figure 3 The architecture of Faster-RCNN (source: Faster-RCNN)

The RPN slides a small convolutional layer (a 3 × 3 window in the original paper) over the feature map, followed by two sibling 1 × 1 convolutional layers for box classification and bounding-box regression respectively. At each sliding-window position, several anchor boxes are centered on that position, each with a different scale and aspect ratio; they represent the candidate boxes for detection at that location. The features at each position are fed into the box-regression layer, which refines the coordinates of each anchor, and into the box-classification layer, which predicts its objectness, i.e. whether the anchor contains an object or only background.
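The anchor mechanism can be sketched in NumPy. Following the defaults of the original Faster-RCNN paper, this assumes 3 scales (box areas of 128², 256² and 512² pixels), 3 aspect ratios (1:2, 1:1, 2:1), hence k = 9 anchors per position, and a total feature-extractor stride of 16; these values are assumptions, not this project's configuration. `decode_boxes` applies the paper's box-regression parametrization to turn the regression layer's outputs into refined boxes.

```python
import numpy as np


def generate_anchors(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return a (len(scales) * len(ratios), 4) array of [x1, y1, x2, y2] anchors centred at (cx, cy).

    Each anchor has area scale**2 and a height/width ratio equal to `ratio`.
    """
    anchors = []
    for scale in scales:
        for ratio in ratios:
            w = scale / np.sqrt(ratio)
            h = scale * np.sqrt(ratio)
            anchors.append([cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2])
    return np.array(anchors)


def anchors_for_feature_map(feat_h, feat_w, stride=16):
    """k anchors for every feature-map cell, centred on the cell's position in the input image."""
    per_cell = [generate_anchors((j + 0.5) * stride, (i + 0.5) * stride)
                for i in range(feat_h) for j in range(feat_w)]
    return np.concatenate(per_cell, axis=0)


def decode_boxes(anchors, deltas):
    """Apply regression outputs (tx, ty, tw, th) to anchors:
    x = xa + tx * wa, y = ya + ty * ha, w = wa * exp(tw), h = ha * exp(th)."""
    wa = anchors[:, 2] - anchors[:, 0]
    ha = anchors[:, 3] - anchors[:, 1]
    xa = anchors[:, 0] + wa / 2
    ya = anchors[:, 1] + ha / 2
    tx, ty, tw, th = deltas.T
    x, y = xa + tx * wa, ya + ty * ha
    w, h = wa * np.exp(tw), ha * np.exp(th)
    return np.stack([x - w / 2, y - h / 2, x + w / 2, y + h / 2], axis=1)


print(anchors_for_feature_map(38, 50).shape)  # (17100, 4)
```

For example, a 38 × 50 feature map (roughly what a 600 × 800 image gives at stride 16) yields 38 × 50 × 9 = 17,100 anchors, which the objectness scores and non-maximum suppression then reduce to a few hundred proposals.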

Figure 4 Illustration of anchor system (source: Faster-RCNN)

After the region proposal network, the convolutional feature map from the feature extractor and the regions of interest (RoIs) proposed by the RPN are both fed into an RoI pooling layer [4], which extracts the features belonging to each RoI and pools them to a fixed size so that all RoIs can be classified in the same way. Then, analogously to the two sibling layers in the RPN, the Fast-RCNN classifier takes the pooled features of each RoI as input and outputs a more specific class prediction (which class the object belongs to) and further refined box coordinates.
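RoI max pooling can be illustrated with a naive NumPy loop: the RoI is projected onto the feature map, divided into a fixed grid of bins, and each bin is max-pooled, so RoIs of any size produce an output of the same shape. The 7 × 7 output grid and the 1/16 spatial scale are assumptions (common choices with VGG-16), and real implementations are vectorized and may round bin boundaries differently.

```python
import numpy as np


def roi_max_pool(feature_map, roi, output_size=(7, 7), spatial_scale=1.0 / 16):
    """Max-pool one RoI to a fixed grid.

    feature_map: (C, H, W) array; roi: (x1, y1, x2, y2) in input-image coordinates.
    Returns a (C, out_h, out_w) array.
    """
    C, H, W = feature_map.shape
    out_h, out_w = output_size
    # Project the RoI from image coordinates onto the feature map and clip it to the map.
    x1, y1, x2, y2 = (int(round(v * spatial_scale)) for v in roi)
    x1, x2 = np.clip([x1, x2], 0, W - 1)
    y1, y2 = np.clip([y1, y2], 0, H - 1)
    roi_w = max(x2 - x1 + 1, 1)
    roi_h = max(y2 - y1 + 1, 1)
    out = np.zeros((C, out_h, out_w), dtype=feature_map.dtype)
    for i in range(out_h):
        # Row range of this bin (always at least one cell).
        hs = y1 + int(np.floor(i * roi_h / out_h))
        he = y1 + int(np.ceil((i + 1) * roi_h / out_h))
        for j in range(out_w):
            ws = x1 + int(np.floor(j * roi_w / out_w))
            we = x1 + int(np.ceil((j + 1) * roi_w / out_w))
            out[:, i, j] = feature_map[:, hs:he, ws:we].max(axis=(1, 2))
    return out


fm = np.arange(16, dtype=float).reshape(1, 4, 4)
print(roi_max_pool(fm, (0, 0, 3, 3), output_size=(2, 2), spatial_scale=1.0))
# [[[ 5.  7.]
#   [13. 15.]]]
```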

Room Classifier

For each object it recognizes, the CNN outputs a label and the object's position in the image; the server, using the pre-trained CNN model, returns this information in JSON format. However, the project also required recognizing the room type, not only the objects and their locations. We therefore needed a classifier that assigns a room type to a list of detected labels (the positions of the objects are not really important here). The high-level architecture can be seen below:
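Because positions are ignored, one natural input for the room classifier is a fixed-length "bag of objects" vector counting how often each category was detected. The sketch below assumes a hypothetical JSON layout (`{"objects": [{"label": ..., "box": ...}]}`) and a hypothetical category list made up of object names mentioned in this post; the actual 19-category list and JSON schema of the project are not reproduced here.

```python
import json
from collections import Counter

# Hypothetical vocabulary: object names mentioned in this post, not the project's real category list.
CATEGORIES = ["bed", "pillow", "picture", "table", "toilet", "bathtub", "sink", "tap", "towel"]


def detections_to_features(detections_json, categories=CATEGORIES):
    """Turn detector output into per-category counts, discarding the bounding boxes."""
    detections = json.loads(detections_json)
    counts = Counter(d["label"] for d in detections["objects"])
    return [counts[c] for c in categories]


example = ('{"objects": [{"label": "bed", "box": [40, 60, 400, 300]},'
           ' {"label": "pillow", "box": [60, 70, 140, 110]},'
           ' {"label": "pillow", "box": [200, 70, 280, 110]}]}')
print(detections_to_features(example))  # [1, 2, 0, 0, 0, 0, 0, 0, 0]
```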

Figure 5 The architecture of room classifier

There are many candidates for a good classifier: Support Vector Machines, neural networks, tree ensembles (Random Forests, which are built with bagging, or boosted trees) and others. We, however, decided to test just two models: Feed Forward Neural Networks (FFNNs) and Random Forests (RF). After optimizing the topology of the neural network, we settled on a simple FFNN with two hidden layers of 10 neurons each. For the Random Forests model, we trained a set of decision trees using the ID3 algorithm and obtained the final prediction by combining the predictions of all the trees.
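The FFNN topology described above (two hidden layers of 10 neurons) can be written as a NumPy forward pass. This is a minimal sketch, not the project's CNTK model (which is in the FFNN repository linked below): the ReLU activations, He-style initialization, softmax output, 19 inputs and 5 output classes are assumptions, and training (e.g. cross-entropy loss with gradient descent) is omitted.

```python
import numpy as np


def init_ffnn(n_inputs, n_classes, hidden=(10, 10), seed=0):
    """Random weights and zero biases for an MLP with the given hidden-layer sizes."""
    rng = np.random.default_rng(seed)
    sizes = [n_inputs, *hidden, n_classes]
    return [(rng.normal(0.0, np.sqrt(2.0 / m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def forward(params, x):
    """Map a (batch, n_inputs) array to (batch, n_classes) room-type probabilities."""
    h = x
    for W, b in params[:-1]:
        h = np.maximum(h @ W + b, 0.0)  # assumed ReLU hidden layers
    W, b = params[-1]
    logits = h @ W + b
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability for softmax
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)


# e.g. 19 object-count features in, 5 room types out
params = init_ffnn(19, 5)
probs = forward(params, np.ones((4, 19)))
print(probs.shape)  # (4, 5)
```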

We trained both models on the same dataset and compared their performance. Based on this comparison and other factors, we finally decided to use the Random Forests model as the classifier.
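Since Random Forests became the final classifier, here is a small from-scratch sketch of an ID3-based random forest in pure Python. It is not the project's code (see the Random_Forest repository linked below): each tree is grown with ID3 (information-gain splits on discrete feature values, here the per-category object counts), each tree sees a bootstrap sample (bagging), only a random subset of √(number of features) features is considered at each split, and the forest predicts by majority vote. The tree count, subset size and toy data are assumptions.

```python
import math
import random
from collections import Counter


def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())


def id3(rows, labels, features, rng=None, n_candidates=None):
    """Grow an ID3 tree. Nodes are (feature, {value: subtree}, majority); leaves are labels.
    If `rng` is given, only `n_candidates` random features are considered per split."""
    majority = Counter(labels).most_common(1)[0][0]
    if len(set(labels)) == 1 or not features:
        return majority
    candidates = features
    if rng is not None:
        candidates = rng.sample(features, min(n_candidates, len(features)))

    def info_gain(f):
        remainder = 0.0
        for value in set(r[f] for r in rows):
            subset = [lab for r, lab in zip(rows, labels) if r[f] == value]
            remainder += len(subset) / len(labels) * entropy(subset)
        return entropy(labels) - remainder

    best = max(candidates, key=info_gain)
    remaining = [f for f in features if f != best]
    branches = {}
    for value in set(r[best] for r in rows):
        idx = [i for i, r in enumerate(rows) if r[best] == value]
        branches[value] = id3([rows[i] for i in idx], [labels[i] for i in idx],
                              remaining, rng, n_candidates)
    return (best, branches, majority)


def predict_tree(tree, row):
    while isinstance(tree, tuple):
        feature, branches, majority = tree
        if row[feature] not in branches:
            return majority  # value unseen in training: fall back to the node's majority class
        tree = branches[row[feature]]
    return tree


def train_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    n_features = len(rows[0])
    n_candidates = max(1, int(math.sqrt(n_features)))
    forest = []
    for _ in range(n_trees):
        # Bagging: each tree is trained on a bootstrap sample drawn with replacement.
        idx = [rng.randrange(len(rows)) for _ in range(len(rows))]
        forest.append(id3([rows[i] for i in idx], [labels[i] for i in idx],
                          list(range(n_features)), rng, n_candidates))
    return forest


def predict_forest(forest, row):
    """Combine the trees by majority vote."""
    return Counter(predict_tree(tree, row) for tree in forest).most_common(1)[0][0]


# Toy rows of (bed, toilet, sink) counts; labels are room types.
rows = [(1, 0, 0), (2, 0, 0), (0, 1, 1), (0, 1, 2)]
labels = ["bedroom", "bedroom", "bathroom", "bathroom"]
forest = train_forest(rows * 5, labels * 5, n_trees=15)
print(predict_forest(forest, (0, 1, 1)))
```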

Datasets

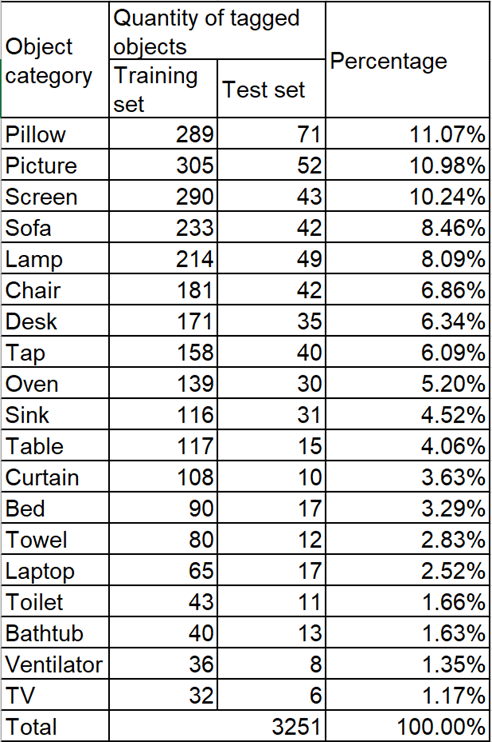

Two datasets were used in the project. The HotailorPOC2 dataset, provided together with the baseline project, contains only 113 images covering 8 object categories from two room types (bathroom and bedroom); it was used during the early stage of the project. Our custom dataset extends HotailorPOC2 with many more images and classes: it contains 516 images and 19 object categories from five room types (bathroom, bedroom, living room, kitchen and office).

Figure 6 The distribution of categories in the custom dataset

Evaluation

The overall mean average precision (mAP) exceeds 50% on both datasets, with the performance on HotailorPOC2 (57.32%) somewhat better than on the custom dataset (50.59%). This is because the custom dataset is far more complex than HotailorPOC2: it contains many more object categories (19 versus 8).
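For reference, the sketch below shows one common way per-class average precision (AP) and mAP are computed: PASCAL VOC-style all-point interpolation, i.e. the area under the precision-recall curve after making precision monotonically non-increasing. The project's evaluation code may differ in details (such as 11-point interpolation). It assumes each detection has already been marked as a true or false positive, typically by requiring an IoU of at least 0.5 with an unmatched ground-truth box of the same class, and that each class has at least one ground-truth object.

```python
import numpy as np


def iou(a, b):
    """Intersection over union of two [x1, y1, x2, y2] boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(ix2 - ix1, 0) * max(iy2 - iy1, 0)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(scores, is_true_positive, n_ground_truth):
    """All-point interpolated AP for one class from scored, already-matched detections."""
    order = np.argsort(-np.asarray(scores, dtype=float))
    tp = np.asarray(is_true_positive, dtype=float)[order]
    tp_cum, fp_cum = np.cumsum(tp), np.cumsum(1.0 - tp)
    recall = tp_cum / n_ground_truth
    precision = tp_cum / (tp_cum + fp_cum)
    # Pad the curve, make precision non-increasing, then sum the area over recall steps.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


def mean_average_precision(per_class_ap):
    """mAP: the unweighted mean of the per-class APs."""
    return sum(per_class_ap.values()) / len(per_class_ap)


# Three detections (true, false, true positive) for a class with two ground-truth objects.
print(round(average_precision([0.9, 0.8, 0.7], [1, 0, 1], 2), 4))  # 0.8333
```

Because mAP is an unweighted mean over classes, a handful of poorly detected categories pulls it down regardless of how common they are, which is one reason a dataset with more categories can score lower overall.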

However, the distribution of average precision over the 8 categories common to both datasets is very similar. The model performs worse on small objects such as taps (22.22% and 37.96% respectively) and towels (28.57% and 16.67%), while it achieves much higher average precision on large objects such as beds (80.56% and 84.51%) and toilets (94.78% and 66.15%). The reason is that small objects usually carry relatively few features compared with large ones, and the information lost through the strides of the convolutional layers makes up a larger proportion of the total information they contain.
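A back-of-the-envelope calculation shows how strongly striding affects small objects. Assuming the typical total stride of 16 for the conv5 feature map of AlexNet or VGG-16, and purely hypothetical object sizes in pixels, a tap is represented by only a handful of feature-map cells while a bed covers hundreds.

```python
def feature_map_footprint(width_px, height_px, stride=16):
    """Approximate number of feature-map cells covered by an object of the given pixel size."""
    return (width_px / stride) * (height_px / stride)


# Hypothetical object sizes, not measurements from the dataset.
tap = feature_map_footprint(32, 32)    # 2 x 2 = 4.0 cells
bed = feature_map_footprint(400, 240)  # 25 x 15 = 375.0 cells
print(tap, bed)
```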

To understand the low average precision of some categories, we carried out a false-positive error analysis. Small objects such as taps and pillows are often localized at the wrong position or confused with the background. Some groups of objects, such as toilets, bathtubs and sinks, share a very similar shape, color and surface texture, so the model often mistakes one for another. For other objects, such as pictures and tables, appearance is not consistent across the images in the training set, which makes it hard for the model to learn a unified criterion for, e.g., what a picture looks like.
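The error types above can be assigned automatically. The following sketch is not the project's false-positive analysis tool (linked below) but one common way to bucket a detection already known to be a false positive: "localization" if it overlaps a ground-truth object of the same class, "similar" if it overlaps an object from a hypothetical group of look-alike classes, "other" if it overlaps an object of an unrelated class, and "background" otherwise. The 0.1 IoU threshold and the `SIMILAR_GROUPS` contents are assumptions.

```python
# Hypothetical look-alike groups, taken from the examples in the analysis above.
SIMILAR_GROUPS = [{"toilet", "bathtub", "sink"}]


def iou(a, b):
    """Intersection over union of two [x1, y1, x2, y2] boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(ix2 - ix1, 0) * max(iy2 - iy1, 0)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def classify_false_positive(det_label, det_box, ground_truth, min_overlap=0.1):
    """Bucket one false positive; `ground_truth` is a list of (label, box) pairs for the image."""
    same_class = [iou(det_box, box) for label, box in ground_truth if label == det_label]
    if same_class and max(same_class) >= min_overlap:
        return "localization"
    overlap, other_label = max(((iou(det_box, box), label)
                                for label, box in ground_truth if label != det_label),
                               default=(0.0, None))
    if overlap >= min_overlap:
        similar = any(det_label in g and other_label in g for g in SIMILAR_GROUPS)
        return "similar" if similar else "other"
    return "background"


gt = [("toilet", [0, 0, 10, 10]), ("bed", [100, 100, 200, 200])]
print(classify_false_positive("sink", [0, 0, 10, 10], gt))  # similar
```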

Figure 7 False-positive errors analysis

Product Demo

Web app video

The code for the Faster-RCNN model, as well as the false-positive analysis tool, can be found at https://github.com/TreeLLi/CNTK-Hotel-pictures-classificator.

The room classification algorithms can be found at:

https://github.com/bartkowiaktomasz/CNTK-FFNN-Room-classifier (Feed Forward Neural Network)

https://github.com/fangyini/Random_Forest (Random Forest)

Teamwork


To work efficiently within the time constraints of the project, the team was split into two sub-teams working in parallel: the Machine Learning (ML) team and the Web Development team.

The Web Development team focused on front-end development, including the design of the graphical user interface for the web service, and on back-end development, including database management and the deployment of the machine learning model on the web service. The ML team focused on extending the baseline model and improving its robustness and accuracy.

Acknowledgements

The code for the Faster-RCNN model and the back-end web application relies heavily on the baseline projects provided by Microsoft. Microsoft also provided all the cloud computing services we used: Azure DLVM (Deep Learning Virtual Machine) and Web App. Finally, we would like to thank our co-supervisors for their helpful advice: Anandha Gopalan (Imperial College London), Lee Stott (Microsoft), Tempest van Schaik (Microsoft) and Geoff Hughes (Microsoft).

![clip_image002[6] clip_image002[6]](https://msdntnarchive.blob.core.windows.net/media/2018/06/clip_image0026_thumb.gif)

![clip_image004[6] clip_image004[6]](https://msdntnarchive.blob.core.windows.net/media/2018/06/clip_image0046_thumb.gif)

![clip_image006[4] clip_image006[4]](https://msdntnarchive.blob.core.windows.net/media/2018/06/clip_image0064_thumb.gif)

![clip_image008[4] clip_image008[4]](https://msdntnarchive.blob.core.windows.net/media/2018/06/clip_image0084_thumb.gif)

![clip_image010[4] clip_image010[4]](https://msdntnarchive.blob.core.windows.net/media/2018/06/clip_image0104_thumb.gif)

![clip_image002[4] clip_image002[4]](https://msdntnarchive.blob.core.windows.net/media/2018/06/clip_image0024_thumb.gif)