Utilizing Custom Vision AI for creating Cognitive Services Projects

Guest post by Sidak Pasricha, Microsoft Student Partner at UCL

Personal Introduction:

Hello! My name is Sidak and I’m a Microsoft Student Partner currently pursuing Computer Science Engineering at UCL. My main areas of interest are Artificial Intelligence, Robotics and Video Game Development, but I am open to other technologies as I enjoy learning new skills and languages. One of my ambitions is to create video games that incorporate AI to provide a richer, more involving user experience.

Cognitive Services:

Initially called Project Oxford, Microsoft Cognitive Services is a suite of technologies (APIs and SDKs) that help developers integrate machine learning into their applications. “Democratising AI” is one of the core principles of the Microsoft Research foundation, and Cognitive Services showcase just that by providing simple solutions for complex technologies like image identification and moderation, language analytics, speech recognition and knowledge mapping. From fun apps that detect the age of an individual to tools that help people with disabilities understand the world around them, the applications of these services are countless and, quite honestly, empowering.

Custom Vision:

Vision is one of the products provided by Azure Cognitive Services (along with Speech, Language, Knowledge and Search). With this branch of Cognitive Services, you can not only detect emotions (use it on your girlfriend to save yourself), detect faces and expressions, or moderate media content, but also dissect videos for analysis. Of all these Vision offerings, the one I found most interesting was the Custom Vision Service.

While the Computer Vision API, Face API and Emotion API are useful in most cases, there are quite a few scenarios where the results they return are not what the final product needs. It is in this situation, where a custom computer vision model is required, that the Custom Vision Service comes in handy.

Using the Custom Vision Service, a user can train a custom model that detects and reports only the selected tags, literally in a few clicks.

How to make your own Custom Vision Project:

Making a Custom Vision AI project is all about finding the right images to train the model on. People have made fun models, such as one that differentiates between Chihuahuas and muffins (shown below). The photo set showcases the variety in the images used: they are barely alike in terms of dog expression or type of muffin.

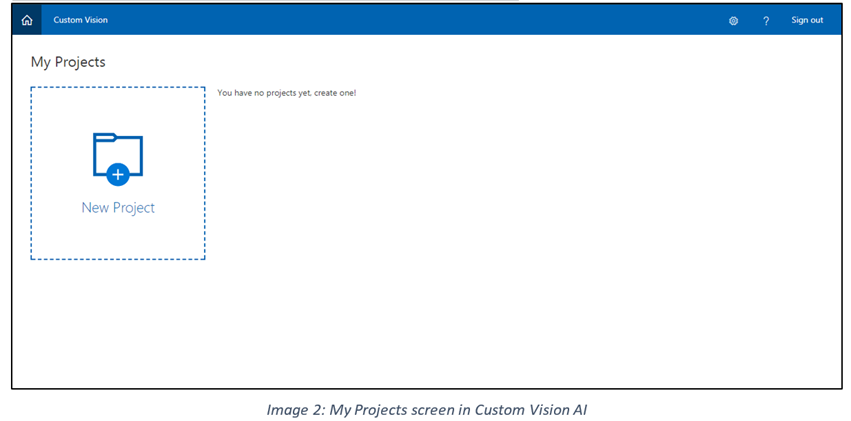

To make your own model (classifier), visit https://www.customvision.ai and sign in with your Microsoft account. Once logged in, the following screen will greet you:

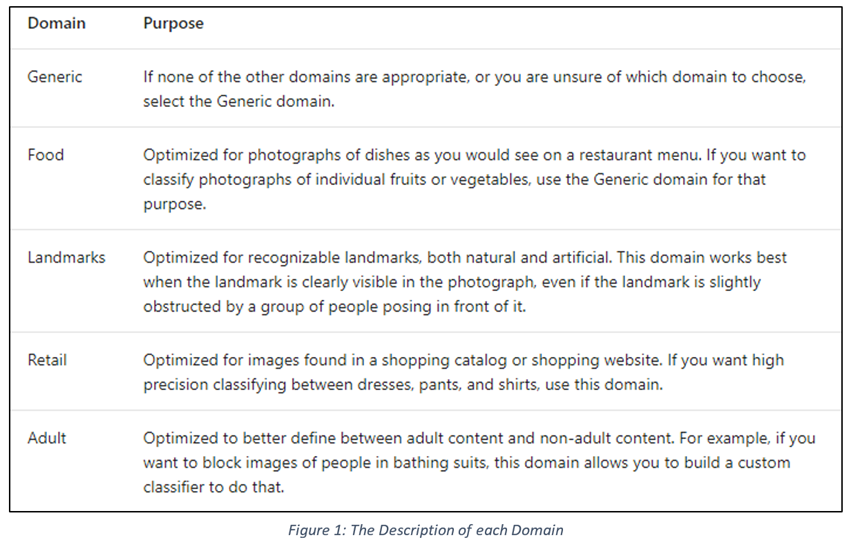

Create a New Project, then give it an appropriate name and description. The domain describes the scenario you plan to use the model in, and choosing one optimises the classifier for that type of image. The domain can be changed later. The Microsoft Cognitive Services documentation provides a brief description of each domain and its usage:

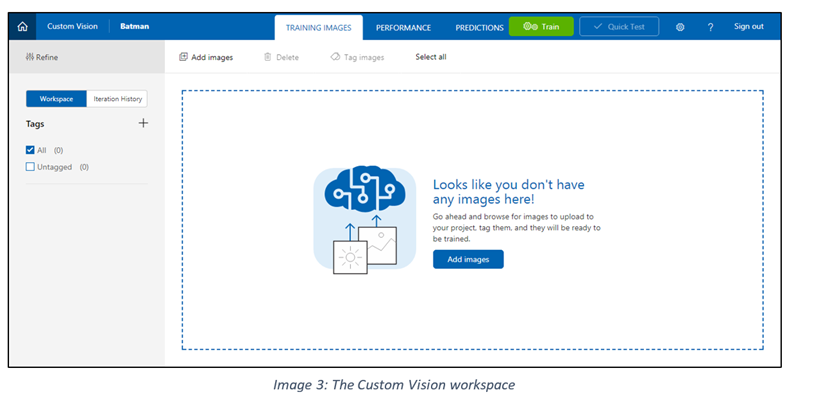

Usually, the General domain works best, and we will be using it in this sample. After this step, you will see a screen similar to the following:

Click on “Add Images” and select all the images that belong to the same tag. I’ll be demonstrating by making a model that differentiates between the different Batman actors: George Clooney, Christian Bale and Ben Affleck.

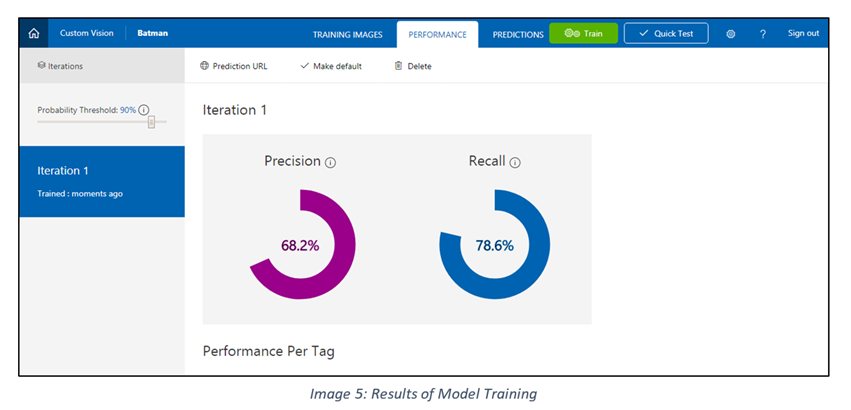

I uploaded all the images of Christian Bale as Batman and tagged them as “Christian Bale” as can be seen below:

Quick Notes about Uploading Images:

1. Make sure each image is clear.

2. Avoid images like the one in the red square, where there is another entity in the frame, as that can confuse the learning and reduce prediction accuracy.

3. Try different variants, angles and backgrounds. This allows the program to differentiate better and provides more accurate results.

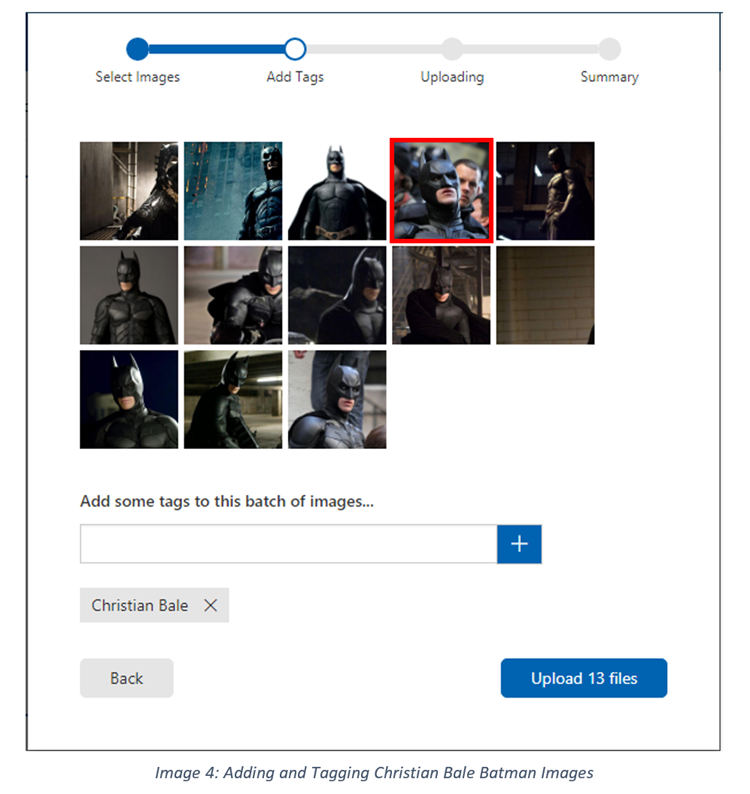
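As a companion to the notes on uploading images, here is a minimal Python sketch for spotting exact duplicate files in a folder of images for one tag before uploading them, since duplicates add nothing to the variety the notes ask for. The one-folder-per-tag layout and the function name `find_duplicate_images` are my own assumptions for illustration and are not part of the Custom Vision Service. Note that it only catches byte-for-byte copies, not resized or re-encoded versions of the same picture.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def find_duplicate_images(tag_folder):
    """Return groups of file names in tag_folder whose contents are identical.

    ASSUMPTION: each tag's images live in their own local folder; this
    layout is hypothetical and only used for illustration.
    """
    by_digest = defaultdict(list)
    for path in sorted(Path(tag_folder).iterdir()):
        if path.is_file():
            # Identical bytes produce an identical SHA-256 digest.
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            by_digest[digest].append(path.name)
    # Keep only digests shared by more than one file.
    return [names for names in by_digest.values() if len(names) > 1]
```

For example, calling `find_duplicate_images("images/christian_bale")` (a hypothetical path) returns a list of groups of file names, where each group holds files that are copies of each other.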

Upload the images and your workspace will be updated. Do the same for the other tags, in my case George Clooney and Ben Affleck. Once you’ve got a basic model ready, with all the tags you need and appropriate images in each, click “Train”. This creates an iteration of the model that can be tested and analysed for performance improvements, thanks to the inbuilt model precision report:

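Keep in mind that 100% precision is rarely achievable in practice. Precision is the share of the model’s predictions for a tag that were correct, and recall is the share of images that really carry that tag which the model found. Usually, any model scoring 75% or more on both Precision and Recall is effective, provided the image set is diverse.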
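To make the two numbers in the report more concrete, here is a small Python sketch of how precision and recall are calculated from counts of correct and incorrect predictions for one tag. This is the standard textbook definition, not the service’s own code, and the function name `precision_recall` is just an illustrative choice.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Compute (precision, recall) for a single tag.

    true_positives:  images predicted as the tag that really have it
    false_positives: images predicted as the tag that do not have it
    false_negatives: images that have the tag but were not predicted as it
    """
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    # Guard against division by zero when there is nothing to measure.
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall
```

With made-up counts of 9 correct predictions, 3 wrong predictions and 1 missed image, `precision_recall(9, 3, 1)` gives a precision of 0.75 and a recall of 0.9.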

You can “Quick Test” your model by uploading an image that is not part of your data set to see how effective the model is. Once you’ve tested the model, the image used is stored in the “Predictions” tab and, if the prediction was wrong, can be added directly to the Training Images with the appropriate tag.

Like children learning the difference between various fruits, or learning new names, Custom Vision, like any form of machine learning technology, requires continued training before it can differentiate to a given accuracy. Don’t worry: provided your training images are right, you won’t need to retrain again and again, only for extreme cases.

To use this model in an application, obtain the Training Key and Prediction Key from the Project settings tab, next to the “Quick Test” tab, and use the appropriate API calls.
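As a rough idea of what such an API call can look like, here is a minimal Python sketch that prepares a prediction request for an image file and reads the most likely tag out of the reply. Several things here are assumptions rather than facts from this post: the prediction URL must be copied from your own project (it is not hard-coded here), the key is assumed to travel in a `Prediction-Key` header, and the reply is assumed to be JSON with a `predictions` list of `tagName` and `probability` entries. Check the current API reference before relying on any of them. The function names are my own.

```python
import json
import urllib.request


def build_prediction_request(prediction_url, prediction_key, image_bytes):
    """Build (but do not send) a POST request carrying raw image bytes.

    ASSUMPTION: prediction_url is the image prediction URL shown for your
    project, and the key is sent in a "Prediction-Key" header.
    """
    return urllib.request.Request(
        prediction_url,
        data=image_bytes,
        method="POST",
        headers={
            "Prediction-Key": prediction_key,
            "Content-Type": "application/octet-stream",
        },
    )


def top_prediction(response_body):
    """Return (tag, probability) for the most likely tag in a reply.

    ASSUMPTION: the reply is JSON shaped like
    {"predictions": [{"tagName": "...", "probability": 0.9}, ...]}.
    """
    predictions = json.loads(response_body)["predictions"]
    best = max(predictions, key=lambda p: p["probability"])
    return best["tagName"], best["probability"]
```

To actually send the request, pass the object returned by `build_prediction_request` to `urllib.request.urlopen` and hand the bytes of the reply to `top_prediction`. Keeping the request building separate from the sending makes it easy to inspect the request without spending any prediction calls.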