Recognizing celebrities on pictures with Azure Computer Vision API and Azure Notebooks

Guest blog by Tomasz Czernuszenko, Microsoft Student Partner

About me

Hi there! My name is Tomasz and I study computer science at UCL. I’m the author of programming courses on YouTube covering Python, Visual Basic, and Android, whose reach has recently passed one million views. This summer, I worked at a fintech company on automating the creation of documentation for their API, and since then I have been a great enthusiast of APIs of all sorts. I’m in constant search of unique services that make developers’ work easier and ultimately help create better applications for end users.

LinkedIn profile: https://www.linkedin.com/in/tomaszczernuszenko/

What are we going to create?

In this article, I'll walk you through the process of creating an interactive Jupyter application where the user can paste a URL to a picture of a famous person and the program will say who is in that picture.

What will you learn?

- How to get started with Azure Notebooks

- What are REST APIs and how to use them in Python

- What are Azure Cognitive APIs and in particular the Computer Vision API

- How to parse JSON objects in Python

- How to create a simple interactive program in Jupyter

How is our application going to work?

1. The user will be presented with a text box and a button.

2. Once the user submits the URL of an image, our program will send this link through Azure Computer Vision API for the clever algorithms to analyze it.

3. We will then fetch the response from the API, transform it and present the result to the user.

What steps will we take to create the program?

1. In order to use the Computer Vision API, we first need to get an API key - sort of a signature or a password by which Azure determines who is using the service.

2. Then, we will create a Jupyter project in Azure Notebooks which will communicate with the API.

3. Finally, we will create a simple graphical interface to make the usage of the application nicer.

What is this API that you're constantly talking about?!

API stands for "Application Programming Interface" which in human language means a way for two computer programs to communicate with each other.

If that's still not quite clear, let me explain it in a different way: in software engineering there is a wide range of different interfaces - means of transferring information from one entity to another, for example between a human and a program. The most common one is the Graphical User Interface (GUI), a thing you use on a daily basis - a set of buttons, pointers, animations, images etc. presented in an organized layout. It lets a human put information in, e.g. enter their username and password into text fields, and take information out, e.g. read emails from a list of text excerpts and icons. There are also other interfaces, e.g. the Voice User Interface, which is applied in solutions like Cortana or smart home devices; in this case the user speaks a command (puts information in) and listens to the voice response (takes information out). Now, when you look at the name "Application Programming Interface", it should become clearer what it means - a way of transferring information between two pieces of software. A RESTful API is a special kind of API which operates through various types of requests, the most common ones being GET, POST, and DELETE.

What is a request then?

Think of a request as a text message that one system sends to another, asking it to do something. A GET request asks to be provided with information, e.g. to learn more about a given user; a POST request is used to send information to the server, e.g. when registering a new user; and a DELETE request, unsurprisingly, is used to delete information from the server, e.g. when deleting a user’s account.

While the type of request (GET, POST etc.) varies, all requests look pretty much the same - they consist of:

● An endpoint - the URL to which the request is being made together with the most important parameters

● Headers - “key-value” pairs, providing some more detailed information about the request we are making

● A body - the data that is being sent to the server. This part is not always present e.g. the GET and DELETE requests usually do not have a body.

While all RESTful APIs have the same structure for the most part, before using one you need its documentation - instructions on how to shape the requests and what data is sent back. All of this might be a little overwhelming, but once we go on and try the Azure Computer Vision API, you’ll discover that it is actually all quite simple.
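To make the three parts concrete, here is a minimal sketch that builds a POST request object without sending it, so the endpoint, headers, and body can be inspected. It uses `urllib.request.Request` from Python's standard library purely for illustration (the rest of this article uses the `requests` library), and the URL, header name, and key are hypothetical placeholders, not a real service.

```python
import json
from urllib.request import Request

# Hypothetical endpoint and key, used only to illustrate the structure.
endpoint = "https://api.example.com/users?verbose=true"
headers = {"Content-Type": "application/json", "X-Api-Key": "my-secret-key"}
body = json.dumps({"username": "tomasz"}).encode("utf-8")

# Build the request object; nothing is sent over the network here.
request = Request(endpoint, data=body, headers=headers, method="POST")

print(request.get_method())    # the request type
print(request.full_url)        # the endpoint, including its parameters
print(request.header_items())  # the key-value headers
print(request.data)            # the body, as bytes
```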

Let's get started!

Digging into the API Documentation

Before we start developing our little program, let’s go and see the Azure Computer Vision API’s documentation. The API is a part of Azure Cognitive Services. Azure Cognitive Services is a collection of APIs giving access to algorithms that analyze images or text, as well as performing a multitude of other complex analytics. Head here to discover what Azure has to offer: https://azure.microsoft.com/en-gb/services/cognitive-services/.

What we are interested in here is the Computer Vision API (https://azure.microsoft.com/en-gb/services/cognitive-services/computer-vision). Given a picture, it can describe its contents, read any text it includes, and recognize famous people.

Click “API” at the top of the page and we’ll land on this page: https://westus.dev.cognitive.microsoft.com/docs/services/56f91f2d778daf23d8ec6739/operations/56f91f2e778daf14a499e1fa. What we are looking at is the API documentation - all the information needed to properly use the given API.

Click “West US” and you’ll be redirected here:

In “Query parameters” section, from the drop down menu “details” select “celebrities”. Now, at the bottom of the page you’ll find the URL we’re looking for:

The page also tells us how the body and the headers should be formatted. You’ll notice that there is an empty field next to the Ocp-Apim-Subscription-Key parameter asking us to put some data in it. This tells us how Azure will authorize users - that is, check whether they have permission to use the API. At this point we have no permission to use it, so let’s go and get it.

Obtaining the API Key

In a new tab, navigate to https://azure.microsoft.com/ and click "PORTAL" in the top-right corner. Log in using your Microsoft account and you will be presented with a dashboard presenting your most recent projects and possible services that Azure is offering.

In this tutorial, we will focus on the Computer Vision API, which is a part of Cognitive Services. Therefore, the first thing we need to do is create a Cognitive Services project.

Click the "New" button on the left-hand side and in the search field put "Cognitive Services". You'll see a simple form where you put the API type (in our case “Computer Vision API”), the name of the project, the pricing tier you want to use (the F0 tier is free for up to 5,000 API calls per month), and the location (make sure that you set the location to West US regardless of where you are). You'll also be asked to choose a subscription, which is basically a way of billing users who choose to use the API professionally. You can set up a free subscription for the first month. Check "Pin to dashboard" for easier access later, and hit "Create".

After your project is set up, you'll be redirected to the Project Dashboard, or you might need to choose it manually from the list. You'll be presented with some basic information about the service that you just created, the pricing tier and so on. The only things we will really be interested in are the keys, which you can obtain by clicking “Show access keys…”.

Leave the page open for now, we’ll need it later. Let’s set up our program now.

Setting up Azure Notebooks

For the purpose of this tutorial, I will be using Azure Notebooks. It allows for easy hosting of Jupyter Notebooks, a great tool to write and share Python code: not only does the setup take seconds, unlike with traditional IDEs, but the programs can also be easily enriched with visuals and text, and can be accessed on any device. Also, developing applications on a nice and shiny website that can be shared with friends appeals to me more than writing code in a black command prompt :)

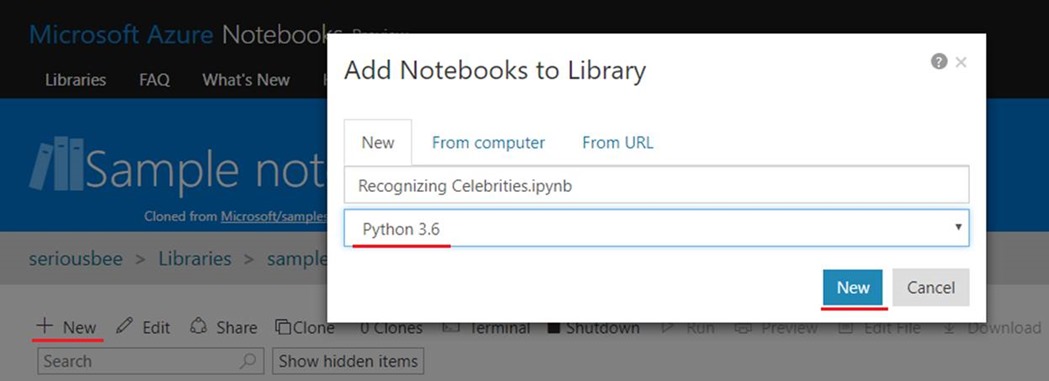

Navigate to https://notebooks.azure.com/ and sign in with your Microsoft credentials. Create a library (folder) or go into the sample one that is already there. Click new, set the name of the project and select Python 3.6.

You’ll see a website with an empty notebook. A Jupyter Notebook consists of three types of elements:

- code (supported languages include Python, R, F#) and its output (Code),

- text/articles that user can write to enrich the programs or provide some context to the output for the user (Markdown),

- and code that you do not want to be executed (Raw NBConvert).

This time we’re not writing any academic paper so only the first feature will be interesting for us.

Let’s code!

We want to make a POST request to the Computer Vision API. This simply means that we will send (POST) to Azure some information and then collect the response from it. The Python library “requests” allows us to do it very easily. Here’s the code for a generic post request:

import requests

url = "<API Endpoint URL>"

headers = {"<header 1 name>": "<header 1 value>"}

body = '<Body of request>'

r = requests.post(url, data=body, headers=headers)

We just need to adjust it to our needs. That’s how it looks after the changes:

import requests

url = "https://westus.api.cognitive.microsoft.com/vision/v1.0/analyze?visualFeatures=Categories&details=Celebrities&language=en"

headers = {"Ocp-Apim-Subscription-Key": "abcefghijklmnoopqrstuvwxyz123456789"}

body = '{"url":"https://upload.wikimedia.org/wikipedia/commons/1/19/Bill_Gates_June_2015.jpg"}'

r = requests.post(url, data=body, headers=headers)

print (r.text)

The URL, header’s name and body structure are taken from the Computer Vision API Documentation page. The header - “Ocp-Apim-Subscription-Key” – holds the key we obtained from the Azure dashboard, and the body is a JSON object with only one value: url of the picture to be analyzed.
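As a small sketch of how these pieces fit together, the endpoint URL and the JSON body can also be assembled from their parts instead of being typed as long strings. The variable names below (`base_url`, `query`, `image_url`) are my own and only illustrative; the parameter names and values are the ones used in the request above.

```python
import json
from urllib.parse import urlencode

# Endpoint = base address + query parameters chosen on the documentation page.
base_url = "https://westus.api.cognitive.microsoft.com/vision/v1.0/analyze"
query = {"visualFeatures": "Categories", "details": "Celebrities", "language": "en"}
url = base_url + "?" + urlencode(query)

# Body = a JSON object with a single property, the URL of the picture.
image_url = "https://upload.wikimedia.org/wikipedia/commons/1/19/Bill_Gates_June_2015.jpg"
body = json.dumps({"url": image_url})

print(url)
print(body)
```

The resulting `url` and `body` can be passed to `requests.post` exactly as before.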

Replace “abcefghijklmnoopqrstuvwxyz123456789” with your API key, and hit run (the triangle in the top navigation bar). If everything goes well, there should be no errors displayed and the response we get should look somewhat like this:

{"categories":[{"name":"people_portrait","score":0.98828125,"detail":{"celebrities":[{"name":"Bill Gates","faceRectangle":{"left":265,"top":308,"width":566,"height":566},"confidence":0.999535441}]}}],"requestId":"4350f15e-a8a6-4dce-b5f1-3e3e81d96897","metadata":{"width":996,"height":1408,"format":"Jpeg"}}

This is a nasty, unformatted JSON string. JSON is the most common way of transferring information through REST APIs. Here’s a nicer representation of the response I got:

{

  "categories":[

    {

      "name":"people_portrait",

      "score":0.98828125,

      "detail":{

        "celebrities":[

          {

            "name":"Bill Gates",

            "faceRectangle":{

              "left":265,

              "top":308,

              "width":566,

              "height":566

            },

            "confidence":0.999535441

          }

        ]

      }

    }

  ],

  "requestId":"4350f15e-a8a6-4dce-b5f1-3e3e81d96897",

  "metadata":{

    "width":996,

    "height":1408,

    "format":"Jpeg"

  }

}
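If you would like Python to produce such a formatted view for you, one possible approach is to parse the text and dump it again with indentation. This is only a sketch: the `raw` string below is a shortened copy of the response shown earlier, trimmed to keep the example small.

```python
import json

# Shortened copy of the API response (some fields trimmed for brevity).
raw = '{"categories":[{"name":"people_portrait","score":0.98828125,"detail":{"celebrities":[{"name":"Bill Gates","confidence":0.999535441}]}}],"metadata":{"width":996,"height":1408,"format":"Jpeg"}}'

parsed = json.loads(raw)               # text -> Python dictionaries and lists
pretty = json.dumps(parsed, indent=2)  # back to text, one property per line
print(pretty)
```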

JSON Decoding

While all these braces, colons etc. might look weird at first, this is actually a very convenient format that will allow us to extract just the right piece of information very easily. Inside the curly braces, JSON describes an object and its properties. In our case the object is a query response, which is described by categories, requestId, and metadata. Some other examples of objects that can be easily represented in JSON are users (with properties like username and email), messages (content, sent time) etc. In our case the object holds an array “categories” listing the categories that the API was able to match the picture to, a “requestId” by which this particular request can be referenced in the future, and “metadata” describing the input image file. In our program, the only thing we really care about is the “celebrities” array holding the name of the identified celebrity. In order to extract only this piece of information we could either search the string and do some nasty regex operations, or we can make it easier for ourselves and use this special JSON structure to our advantage.

To extract only the name of the celebrity, we will have to put in code the following commands:

- From the JSON Object, read the “categories” array

- From the “categories” array take the first object

- From the first object, extract the “detail” object.

- From the “detail” object, read the “celebrities” array

- From the “celebrities” array take the first object

- From the first object, extract the “name” property.

Using Python code it looks like this: (j is the JSON object)

j['categories'][0]['detail']['celebrities'][0]['name']
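To see that the one-liner really is just those six steps chained together, here is a small sketch that performs them one at a time. The `response_text` string is a shortened copy of the response shown earlier, and the intermediate variable names are only illustrative.

```python
import json

# Shortened copy of the API response (some fields trimmed for brevity).
response_text = '{"categories":[{"name":"people_portrait","score":0.98828125,"detail":{"celebrities":[{"name":"Bill Gates","confidence":0.999535441}]}}]}'

j = json.loads(response_text)
categories = j["categories"]          # read the "categories" array
first_category = categories[0]        # take the first object
detail = first_category["detail"]     # extract the "detail" object
celebrities = detail["celebrities"]   # read the "celebrities" array
first_celebrity = celebrities[0]      # take the first object
name = first_celebrity["name"]        # extract the "name" property

print(name)
```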

Let’s alter our code to reflect this change:

import requests, json, io

url = "https://westus.api.cognitive.microsoft.com/vision/v1.0/analyze?visualFeatures=Categories&details=Celebrities&language=en"

headers = {"Ocp-Apim-Subscription-Key": "abcdefghijklmnopqrst"}

body = '{"url":"https://upload.wikimedia.org/wikipedia/commons/1/19/Bill_Gates_June_2015.jpg"}'

r = requests.post(url, data=body, headers=headers)

j = json.loads(r.text) #transform the text into a JSON Object

print (j['categories'][0]['detail']['celebrities'][0]['name']) #extract the name of the celebrity

Note: make sure you press the Run button. Only after you press it will any changes you made to the code apply to the output!

As you can see, the only extra library we really need is json: its loads function converts the text of the response into a Python object that we can index into. The io import is not used for this and can safely be removed. You might have noticed that we already use JSON when creating the request - the body holds an object with only one parameter, url, whose value is the URL of the image to analyze.

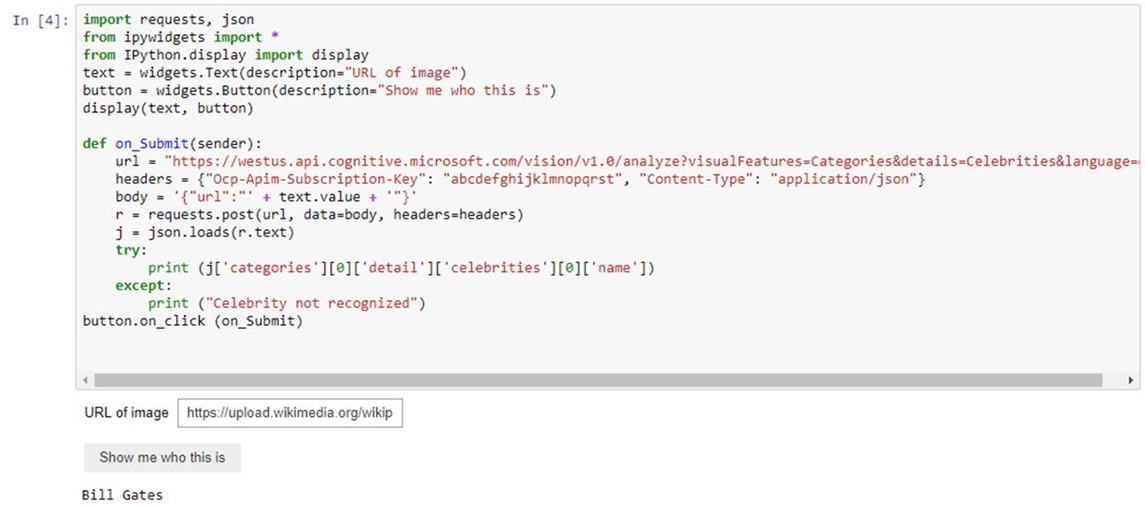

Creating the User Interface

Let’s say you want to make usage of the program more seamless - you want to often check different URLs. Of course you could just change the code and run the code again, but that’s not really a great UX. A good solution could be introducing a text field where you would paste the URL, after pressing a button the request to the API would be made, and the response displayed to the user.

What we are going to do is create a text field for input and a button to execute the recognition procedure. For this, we’ll need two other libraries - ipywidgets and IPython.display. Now, the code should look like this:

import requests, json

from ipywidgets import widgets

from IPython.display import display

text = widgets.Text(description="URL of image")

button = widgets.Button(description="Show me who this is")

display(text, button)

def on_Submit(sender):

    url = "https://westus.api.cognitive.microsoft.com/vision/v1.0/analyze?visualFeatures=Categories&details=Celebrities&language=en"

    headers = {"Ocp-Apim-Subscription-Key": "abcdefghijklmnopqrst", "Content-Type": "application/json"}

    body = '{"url":"' + text.value + '"}'

    r = requests.post(url, data=body, headers=headers)

    j = json.loads(r.text) #turn String into a JSON Object

    print(j['categories'][0]['detail']['celebrities'][0]['name']) #this line can potentially cause problems if the celebrity is not recognized

button.on_click(on_Submit)

A few words of explanation - we created two variables: one holding the text field and the other holding the button. We use the display function to show them in the notebook. We put all our previous code into a function, which we pass as a parameter to the button's on_click method to describe what should happen when the button is pressed. Finally, within the on_Submit function we retrieve the content of the text field and insert it into the body using string concatenation (attaching pieces of text to each other).
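String concatenation works for ordinary links, but it can produce invalid JSON if the pasted text contains a quote character or stray spaces. One possible alternative, shown here only as a sketch, is to let the json library build the body. The helper name `build_body` is hypothetical and not part of the original program.

```python
import json

def build_body(image_url):
    """Return the JSON request body for the given image URL (hypothetical helper)."""
    # strip() removes accidental spaces around a pasted link;
    # json.dumps takes care of quoting and escaping the value.
    return json.dumps({"url": image_url.strip()})

print(build_body("  https://example.com/photo.jpg "))
```

Inside the function handling the button press, `body = build_body(text.value)` could then replace the concatenation.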

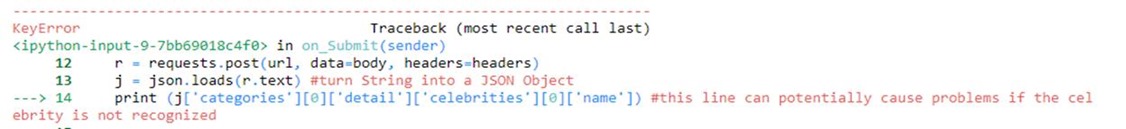

The program is almost ready. It has one major flaw, though - if the user puts in the URL of a picture in which the API does not recognize any celebrity, the program will break with an error.

To account for this, we will add a try - except block around the JSON object decomposition:

import requests, json

from ipywidgets import widgets

from IPython.display import display

text = widgets.Text(description="URL of image")

button = widgets.Button(description="Show me who this is")

display(text, button)

def on_Submit(sender):

    url = "https://westus.api.cognitive.microsoft.com/vision/v1.0/analyze?visualFeatures=Categories&details=Celebrities&language=en"

    headers = {"Ocp-Apim-Subscription-Key": "abcdefghijklmnopqrst", "Content-Type": "application/json"}

    body = '{"url":"' + text.value + '"}'

    r = requests.post(url, data=body, headers=headers)

    j = json.loads(r.text)

    try:

        print(j['categories'][0]['detail']['celebrities'][0]['name']) #this line may cause errors

    except Exception: #in case of any errors, the code in here will run

        print("Celebrity not recognized")

button.on_click(on_Submit)

The way it works is very simple. Python will attempt to execute whatever commands it is given inside “try” and if any errors arise it will execute the commands from the except block. This way, if during JSON decomposition Python discovers that the array of recognized celebrities is empty, extracting the name of the first element will raise an IndexError which will then launch the except block and as a result “Celebrity not recognized” will be printed.
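As a variation, the lookup can be moved into a small function that catches only the two errors the decomposition itself can raise, so that unrelated bugs are not silently hidden. This is a sketch: the function name `celebrity_name` is hypothetical, and the sample dictionaries are hand-written guesses at what an unsuccessful response might look like, not real API output.

```python
def celebrity_name(response):
    """Return the first recognized celebrity's name, or None if there is none."""
    try:
        return response["categories"][0]["detail"]["celebrities"][0]["name"]
    except (KeyError, IndexError):
        # KeyError: a key such as "detail" is missing;
        # IndexError: "categories" or "celebrities" is an empty list.
        return None

# Hand-written samples (assumed shapes, for illustration only).
recognized = {"categories": [{"detail": {"celebrities": [{"name": "Bill Gates"}]}}]}
empty_list = {"categories": [{"detail": {"celebrities": []}}]}
no_detail = {"categories": [{"name": "outdoor_"}]}

for sample in (recognized, empty_list, no_detail):
    print(celebrity_name(sample) or "Celebrity not recognized")
```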

That’s it! The very basic application is ready. You can further develop the program by:

● catching different errors on different stages of execution of the program (think about what happens if user does not put any URL in or if the user provides a link to a website and not an image …),

● adding another text box for the user to enter their own API key,

● handling of having multiple celebrities in one picture,

● or anything else!
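As a starting point for the multiple-celebrities idea, here is one possible sketch that collects every name found in the response instead of only the first one. The function name `all_celebrity_names` is hypothetical, and the sample response is hand-written, assuming that several celebrities simply appear as several entries in the “celebrities” array.

```python
def all_celebrity_names(response):
    """Collect the names of all celebrities in a parsed response, without duplicates."""
    names = []
    for category in response.get("categories", []):
        # "detail" may be missing for categories that contain no celebrities.
        for celebrity in category.get("detail", {}).get("celebrities", []):
            if celebrity["name"] not in names:
                names.append(celebrity["name"])
    return names

# Hand-written sample with two people in one picture (assumed shape).
sample = {
    "categories": [
        {"name": "people_group", "detail": {"celebrities": [
            {"name": "Bill Gates"}, {"name": "Satya Nadella"}]}},
        {"name": "outdoor_"},
    ]
}

print(", ".join(all_celebrity_names(sample)) or "Celebrity not recognized")
```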

In software development the sky is the limit but with Azure Cognitive Services you can reach even for the stars!

Enjoy your further adventure with Azure APIs and don’t hesitate to ask questions in the