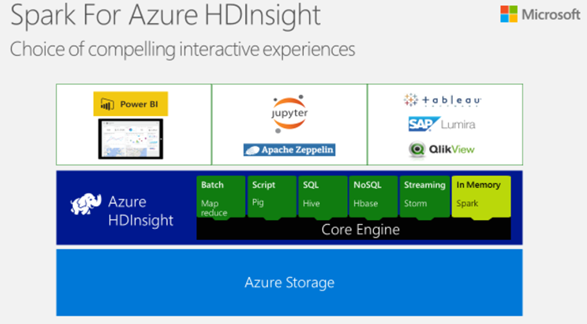

Spark for Azure HDInsight

Guest blog from Alberto De Marco, Technology Solutions Professional – Big Data

This week we launched the Azure Data Lake service in Europe: Azure Data Lake Analytics and Azure Data Lake Store are now available in the North Europe region.

Data Lake Analytics is a cloud analytics service for developing and running massively parallel data transformation and processing programs in U-SQL, R, Python, and .NET over petabytes of data. Data Lake Store is a no-limit cloud data lake built so enterprises can unlock value from unstructured, semi-structured, and structured data.

Typically, what you get out of the box on Azure for this task is a Spark HDInsight cluster (i.e. Hadoop on Azure in Platform as a Service mode) connected to Azure Blob Storage (where the data is stored) and running PySpark Jupyter notebooks.

Azure HDInsight

It’s a fully managed cluster that you can start in a few clicks, and it gives you all the Big Data power you need to crunch billions of rows of data. Cluster node configuration, libraries, networking and so on are all set up automatically, so you can focus on solving your business problems without worrying about IT tasks such as checking whether the cluster is alive and healthy; Microsoft does this for you.

Now, one key ask that data scientists have is “freedom!”. In other words, they want to install and update libraries and try new open source packages, but at the same time they don’t want to manage a cluster the way an IT department would. To satisfy these two requirements we need some extra pieces in our playground, and one key component is the Azure Linux Data Science Virtual Machine.

The Linux Data Science Virtual Machine is the Swiss Army knife for all data science needs; you can find out more here.

Data Science Virtual Machine & Apache Spark

The Data Science VM allows engineers and data scientists to add and update all the libraries they need. Jupyter and Spark are already installed on it, so data scientists can use it to play locally and experiment on small data before going “Fully Implemented” on HDInsight.

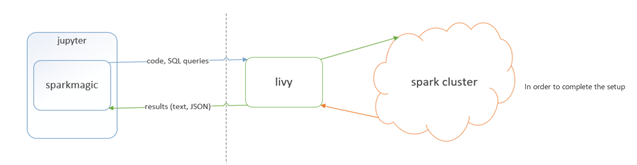

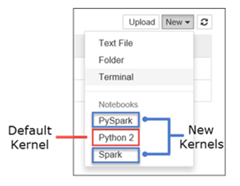

In fact, we can use the local Jupyter and Spark environment by default and, when we need the power of the cluster, use sparkmagic to run the same code on the cluster simply by changing the kernel of the notebook!

Background: Since December 2016, the Linux DSVM has come with a standalone instance of Apache Spark built in to help local development before deploying to large Spark clusters on Azure HDInsight or on-premises. HDInsight Spark by default uses Azure Blob storage (or Azure Data Lake Store) as the backing store, so it is convenient to be able to develop on the Linux DSVM with your data in Azure Blob storage and verify your code fully before deploying it to large Spark clusters on Azure HDInsight.

Here are the one-time setup steps to start using Azure Blob storage from your Spark program (ensure you run these commands as root):

cd $SPARK_HOME/conf

cp spark-defaults.conf.template spark-defaults.conf

cat >> spark-defaults.conf <<EOF

spark.jars /dsvm/tools/spark/current/jars/azure-storage-4.4.0.jar,/dsvm/tools/spark/current/jars/hadoop-azure-2.7.3.jar

EOF

If you don’t have a core-site.xml in the $SPARK_HOME/conf directory, run the following:

cat >> core-site.xml <<EOF

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>fs.AbstractFileSystem.wasb.impl</name>

<value>org.apache.hadoop.fs.azure.Wasb</value>

</property>

<property>

<name>fs.azure.account.key.YOURSTORAGEACCOUNT.blob.core.windows.net</name>

<value>YOURSTORAGEACCOUNTKEY</value>

</property>

</configuration>

EOF

Otherwise, just copy and paste the two <property> sections above into your existing core-site.xml file. Replace YOURSTORAGEACCOUNT and YOURSTORAGEACCOUNTKEY with the actual name of your Azure storage account and its storage account key.
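If you prefer to generate the file instead of typing it by hand, the two properties can be rendered from Python. This is only a minimal sketch: the function name `build_core_site` is hypothetical, the account name and key are placeholders, and the first property uses the lowercase `impl` spelling found in the Hadoop Azure documentation.

```python
import xml.etree.ElementTree as ET


def build_core_site(storage_account: str, account_key: str) -> str:
    """Return core-site.xml text holding the two WASB properties."""
    properties = {
        # Hadoop's documented key ends in lowercase "impl".
        "fs.AbstractFileSystem.wasb.impl": "org.apache.hadoop.fs.azure.Wasb",
        f"fs.azure.account.key.{storage_account}.blob.core.windows.net": account_key,
    }
    configuration = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(configuration, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    body = ET.tostring(configuration, encoding="unicode")
    # The XML declaration must be the very first thing in the file.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body + "\n"


if __name__ == "__main__":
    # Placeholder values; redirect the output to $SPARK_HOME/conf/core-site.xml.
    print(build_core_site("YOURSTORAGEACCOUNT", "YOURSTORAGEACCOUNTKEY"))
```

Building the XML with a library rather than string concatenation means a key containing characters such as `&` or `<` is escaped correctly.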

Once you do these steps, you should be able to access the blob from your Spark program with the wasb://YourContainer@YOURSTORAGEACCOUNT.blob.core.windows.net/YourBlob URL in the read API.
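As a quick smoke test, something like the following could be run in a local PySpark notebook. It is a sketch under assumptions: the helper names `wasb_url` and `count_lines` are made up for illustration, the container, account and blob names are placeholders, and it assumes the blob is a plain text file.

```python
def wasb_url(container: str, storage_account: str, blob_path: str) -> str:
    """Build a wasb:// URL in the form described above."""
    return (
        f"wasb://{container}@{storage_account}.blob.core.windows.net/"
        f"{blob_path.lstrip('/')}"
    )


def count_lines(url: str) -> int:
    """Read a text blob with the local Spark install and count its lines."""
    # Imported lazily so the URL helper works even where Spark is not installed.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("wasb-smoke-test").getOrCreate()
    return spark.read.text(url).count()


if __name__ == "__main__":
    # Placeholder names; replace them with your own container, account and blob.
    url = wasb_url("YourContainer", "YOURSTORAGEACCOUNT", "YourBlob")
    print(url)
    # Uncomment once the jars and core-site.xml are in place:
    # print(count_lines(url))
```

If the read fails with a "No FileSystem for scheme: wasb" style error, the jars listed in spark-defaults.conf are the first thing to check.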

If you’re not running the Linux DSVM, we need to do the following to complete the setup:

Add sparkmagic to the Linux VM (adding libraries, conf files and settings) to connect from the local Jupyter notebook to the HDInsight cluster using Livy.

Step-by-step instructions:

Step 1: Enable local Spark to access Azure Blob Storage:

Download azure-storage-2.0.0.jar and hadoop-azure-2.7.3.jar from here (they are inside the Hadoop 2.7.3 libs)

Copy these libraries here “/home/{YourLinuxUsername}/Desktop/DSVM tools/spark/spark-2.0.2/jars/”

Open spark-defaults.conf in this folder “/home/{YourLinuxUsername}/Desktop/DSVM tools/spark/spark-2.0.2/conf/”

Write into it : spark.jars /home/{YourLinuxUsername}/Desktop/DSVM tools/spark/spark-2.0.2/jars/hadoop-azure-2.7.3.jar,/home/{YourLinuxUsername}/Desktop/DSVM tools/spark/spark-2.0.2/jars/azure-storage-2.0.0.jar

Open core-site.xml in this folder “/home/{YourLinuxUsername}/Desktop/DSVM tools/spark/spark-2.0.2/conf/”

Write into it:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>fs.azure.account.key.{HERE STORAGE ACCOUNT}.blob.core.windows.net</name>

<value>{PUT HERE THE storage key}</value>

</property>

</configuration>

Step 2: Enable the local Jupyter notebook with remote Spark execution on HDInsight (assuming that the default Python is 3.5, as it comes with the Linux DSVM):

sudo /anaconda/envs/py35/bin/pip install sparkmagic

cd /anaconda/envs/py35/lib/python3.5/site-packages

sudo /anaconda/envs/py35/bin/jupyter-kernelspec install sparkmagic/kernels/pyspark3kernel

sudo /anaconda/envs/py35/bin/jupyter-kernelspec install sparkmagic/kernels/sparkkernel

sudo /anaconda/envs/py35/bin/jupyter-kernelspec install sparkmagic/kernels/sparkrkernel

In your /home/{YourLinuxUsername}/ folder, create a folder called .sparkmagic and, inside it, create a file called config.json.

Write the HDInsight configuration values (Livy endpoints and auth) into the file, as described here.

At this point, going back to Jupyter should allow you to run your notebook against the HDInsight cluster using the PySpark3, Spark and SparkR kernels, and you can switch from local kernel to remote kernel execution with one click!
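To give an idea of what ends up in that file, here is a small sketch that writes a minimal config.json. Treat the details as assumptions: the key names (`kernel_python_credentials`, `kernel_scala_credentials`) follow the example configuration shipped with sparkmagic and may differ between versions, the function names are hypothetical, and the cluster name and credentials are placeholders. The Livy endpoint of an HDInsight cluster is normally exposed under `/livy` on the cluster URL.

```python
import json
from pathlib import Path


def build_sparkmagic_config(cluster_name: str, username: str, password: str) -> dict:
    """Build a minimal sparkmagic config pointing at an HDInsight Livy endpoint."""
    livy_url = f"https://{cluster_name}.azurehdinsight.net/livy"
    credentials = {"username": username, "password": password, "url": livy_url}
    # Assumed key names; compare with the example_config.json of your version.
    return {
        "kernel_python_credentials": dict(credentials),
        "kernel_scala_credentials": dict(credentials),
    }


def write_sparkmagic_config(config: dict, home: Path) -> Path:
    """Write the config to <home>/.sparkmagic/config.json and return its path."""
    config_dir = home / ".sparkmagic"
    config_dir.mkdir(parents=True, exist_ok=True)
    config_path = config_dir / "config.json"
    config_path.write_text(json.dumps(config, indent=2))
    return config_path


if __name__ == "__main__":
    # Placeholder cluster name and credentials.
    cfg = build_sparkmagic_config("YOURCLUSTER", "admin", "YOURPASSWORD")
    print(write_sparkmagic_config(cfg, Path.home()))
```

Note that the password is stored in clear text in this file, so keep its permissions tight.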

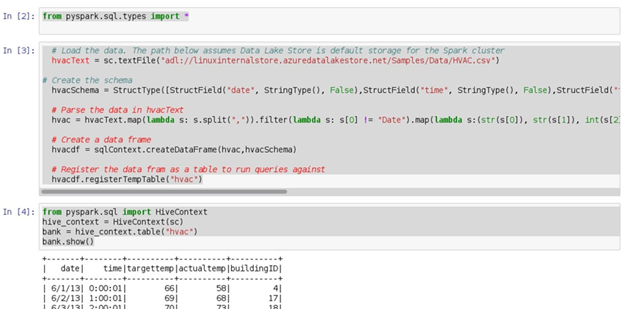

Running this against Azure Data Lake instead of a simple Storage Account

Follow these guidelines to enable Spark access from the Linux DSVM to your Data Lake Store account.

- Edit the core-site.xml with the Active Directory service-to-service (S2S) credentials that you will create as described here, and assign that account permissions on your Data Lake Store account.

- Execute the following commands from that folder (replace {YOURUSERNAME} with your username)

sudo cp azure-data-lake-store-sdk-2.0.11.jar "/home/{YOURUSERNAME}/Desktop/DSVM tools/spark/spark-2.0.2/jars/"

sudo cp hadoop-azure-datalake-3.0.0-alpha2.jar "/home/{YOURUSERNAME}/Desktop/DSVM tools/spark/spark-2.0.2/jars/"

sudo cp core-site.xml "/home/{YOURUSERNAME}/Desktop/DSVM tools/spark/spark-2.0.2/conf/"

sudo cp spark-defaults.conf "/home/{YOURUSERNAME}/Desktop/DSVM tools/spark/spark-2.0.2/conf/"

Once done, you can test the setup from Jupyter with the PySpark kernel.

PS: If you want to avoid having clear-text credentials in core-site.xml, you can use the following commands:
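A test along these lines could look like the sketch below. It is not the original notebook cell: the helper names `adl_url` and `preview` are hypothetical, the account name and file path are placeholders, and it assumes a plain text file already exists in the store.

```python
def adl_url(account: str, path: str) -> str:
    """Build an adl:// URL for a file in a Data Lake Store account."""
    return f"adl://{account}.azuredatalakestore.net/{path.lstrip('/')}"


def preview(url: str, rows: int = 5) -> None:
    """Show the first few lines of a text file read through Spark."""
    # Imported lazily so the URL helper works even where Spark is not installed.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("adl-smoke-test").getOrCreate()
    spark.read.text(url).show(rows, truncate=False)


if __name__ == "__main__":
    # Placeholder account and path; replace them with your own.
    url = adl_url("YOURDATALAKESTORE", "/path/to/file.txt")
    print(url)
    # Uncomment once the jars and core-site.xml are in place:
    # preview(url)
```

An authentication error at this point usually means the S2S credentials in core-site.xml, or the permissions granted to that account, need another look.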

Provisioning

hadoop credential create dfs.adls.oauth2.refresh.token -value 123 -provider localjceks://file/home/foo/adls.jceks

hadoop credential create dfs.adls.oauth2.credential -value 123 -provider localjceks://file/home/foo/adls.jceks

Configuring core-site.xml or command line property

<property>

<name>hadoop.security.credential.provider.path</name>

<value>localjceks://file/home/foo/adls.jceks</value>

<description>Path to interrogate for protected credentials.</description>

</property>

Of course, some security features have to be improved (passwords in clear text!), but the community is already working on this (see here: support for base64 encoding) and, of course, you can get the sparkmagic code from Git, add the encryption support you need and bring it back to the community!

Resources

To learn more about these services, please visit the Data Lake Analytics and Data Lake Store webpages.

For more information about pricing, please visit the Data Lake Analytics Pricing and Data Lake Store Pricing webpages.