Hijacking faces with Deep Learning techniques and Microsoft Azure

Guest Post by Team FaceJack

Catalina Cangea, Laurynas Karazija, Edgar Liberis, Petar Veličković

Team FaceJack working hard at Hack Cambridge ’17.

Hijacking Faces

Machine learning with deep neural networks (commonly dubbed "deep learning") has taken the world by storm, smashing record after record in a wide variety of difficult tasks from different fields, including computer vision, speech recognition and natural language processing. One such computer vision task is facial recognition—identifying a person using a picture of their face. Many social networks and Computer Vision API services in this area are built using neural networks.

Neural networks may be used in security-sensitive applications, for example to authenticate a person from a picture of their face in a smart home system. Despite the seemingly superb accuracy of such models, it is fairly easy to construct inputs that trick the network into authenticating a stranger. These are known as “adversarial examples”.

We are a team of students from the University of Cambridge who built an application to demonstrate this concept at the Hack Cambridge hackathon this January. We aim to highlight:

- The simplicity of procedurally generating adversarial examples, given access to the neural network (either directly or through an API);

- How adversarial examples can appear imperceptibly similar to legitimate inputs, tricking humans watching the camera feed;

- How this attack can be executed in real-time, even without a very powerful GPU. We used an Azure NC6 GPU machine for our demo.

What are adversarial inputs?

Adversarial inputs are inherent to neural networks. Broadly speaking, a network is a sequence of transformations (layers) parametrised by weights, which are applied to the input to obtain a prediction. These transformations are designed to be differentiable, so that a network can be efficiently trained by:

- Feeding an input to the network to obtain a prediction;

- Computing an error of the prediction with respect to the expected output using a loss (error) function;

- Propagating the error backwards through the network, updating the weights as we go (aka backpropagation).
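The three steps above can be sketched with a deliberately tiny model. This is a minimal illustration, assuming a single sigmoid unit with a cross-entropy loss in place of a deep network; the names `predict`, `cross_entropy` and `training_step`, the learning rate and the data are all hypothetical and not taken from FaceJack.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Forward pass: one differentiable layer followed by a sigmoid."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def cross_entropy(p, y):
    """Loss between a prediction p in (0, 1) and a label y in {0, 1}."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def training_step(weights, bias, x, y, lr=0.5):
    """One training step: forward pass, loss, gradient update of the weights."""
    p = predict(weights, bias, x)      # 1. feed the input to get a prediction
    loss = cross_entropy(p, y)         # 2. compute the error of the prediction
    error = p - y                      # dLoss/dz for sigmoid + cross-entropy
    # 3. propagate the error back to the parameters and update them
    new_weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    new_bias = bias - lr * error
    return new_weights, new_bias, loss

# Toy data (assumption): one two-dimensional input labelled as class 1.
weights, bias = [0.0, 0.0], 0.0
x, y = [1.0, -2.0], 1
losses = []
for _ in range(20):
    weights, bias, loss = training_step(weights, bias, x, y)
    losses.append(loss)
```

Note that the input `x` and label `y` never change inside the loop; only `weights` and `bias` do.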

In a conventional training setting, we consider inputs and outputs of the network to be fixed and modify the weights to minimise the loss on these inputs. Here, however, we consider a fixed choice of weights and modify the input to produce a desirable output:

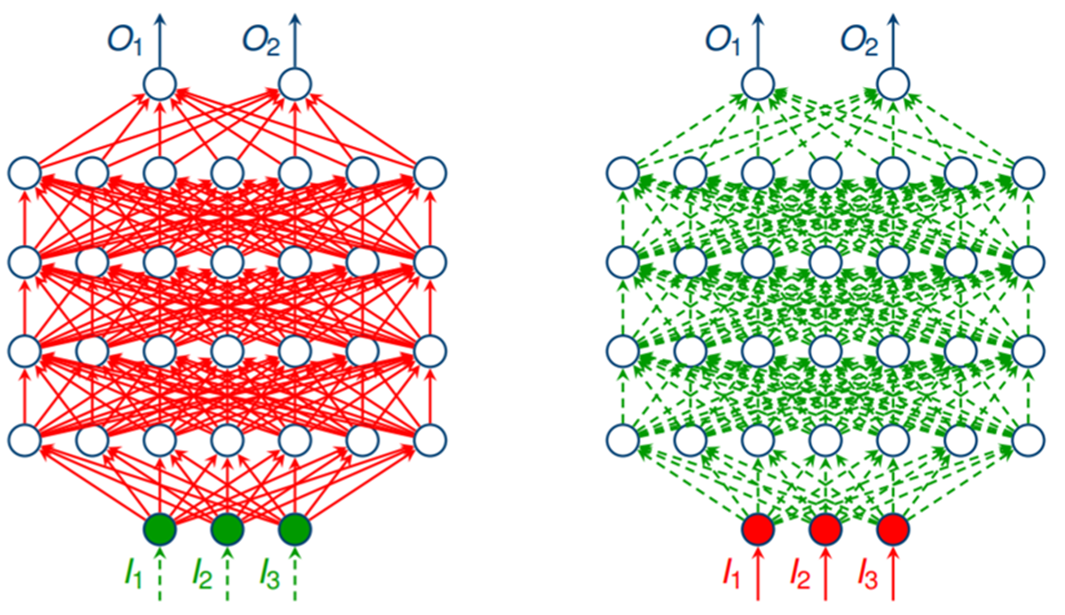

Figure 1. On the left: adversarial setting, where network parameters (weights) are fixed and inputs are adjustable. On the right: conventional training setting, where inputs and outputs are fixed, and parameters are adjustable.

To obtain an adversarial example, we set the network’s optimisation target (the loss function) to maximise the probability of the desired output class, e.g. that a face does belong to an administrator. With this set-up, we run the training process to “optimise” the input image for misclassification. As a result, feeding this modified input into the original face recognition network causes an avalanche of errors across the layers, which produces a wrong output.
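The difference between the two settings can be written as a pair of gradient updates. The notation here is ours rather than the original post's: $f_{\theta}$ is the network with weights $\theta$, $\mathcal{L}$ is the loss, $\eta$ is a step size, $y$ is the true label and $y_{\text{target}}$ is the class the attacker wants (for instance "administrator").

$$
\text{training:}\quad \theta \leftarrow \theta - \eta \, \nabla_{\theta}\, \mathcal{L}\big(f_{\theta}(x),\, y\big)
\qquad\qquad
\text{adversarial:}\quad x \leftarrow x - \eta \, \nabla_{x}\, \mathcal{L}\big(f_{\theta}(x),\, y_{\text{target}}\big)
$$

When $\mathcal{L}$ is the cross-entropy loss, lowering it with respect to $y_{\text{target}}$ is the same as raising the probability the network assigns to the target class. The only changes from training are which quantity is differentiated and updated, and which label the loss is measured against.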

Deep neural networks are particularly vulnerable to this for three main reasons:

- Computing an adversarial example usually only requires a crude approximation of the derivative (gradient) of the desired output with respect to the input image. Often the sign of the gradient at each input pixel is sufficient.

- Adversarial examples are often imperceptibly similar to the original input. In fact, Szegedy et al. (2013) demonstrated there is an entire space of adversarial inputs surrounding any correctly classified image.

- Even worse, an input that is adversarial for one network architecture will very often be adversarial for a completely different network as well, because the networks are often trained on the same datasets.
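The first two points can be illustrated with a sign-only gradient step. This is a sketch under strong assumptions: a single sigmoid unit over 100 "pixels" stands in for a real network, and the weights, bias, `epsilon` and function names are hypothetical. It shows how a change of at most `epsilon` per pixel can add up to a large change in the output when there are many pixels.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def admin_probability(weights, bias, x):
    """Probability the toy model assigns to the target (administrator) class."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(v):
    return (v > 0) - (v < 0)

def sign_gradient_step(weights, bias, x, epsilon):
    """Shift each input value by +/- epsilon, using only the gradient's sign."""
    p = admin_probability(weights, bias, x)
    # d p / d x_i = p * (1 - p) * w_i for this model.
    grad = [p * (1 - p) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical fixed model: 100 input pixels with small alternating weights.
weights = [0.5 if i % 2 == 0 else -0.5 for i in range(100)]
bias = -3.0
x = [0.5] * 100  # a flat grey "image"

before = admin_probability(weights, bias, x)
x_adv = sign_gradient_step(weights, bias, x, epsilon=0.1)
after = admin_probability(weights, bias, x_adv)
max_change = max(abs(a - b) for a, b in zip(x_adv, x))
```

Here no pixel moves by more than `epsilon`, yet the model's confidence in the target class rises sharply, because the small per-pixel contributions all push in the same direction.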

This shows that using neural networks in secure contexts requires particular care, because adversarial inputs can be used as an exploit.

Hackathon progress

During the hackathon we built an app (called FaceJack) to demonstrate this weakness of neural networks. The system consists of multiple components:

- The face recognition sub-system. We began with an existing state-of-the-art face recognition network “VGG-face” to extract features from a picture of a face. Deep convolutional networks at this scale take several days to train even on the most powerful machines, so we took a pre-trained version of VGG-face and ported it to the Keras deep learning framework.

Extracted features are subsequently fed into a simple classifier (a two-layer perceptron) which we trained to recognise one of our team members as an administrator of a secure system. We did this by taking around 50 pictures of our faces from different angles. Pictures of Laurynas were used as positive examples, and the rest were treated as non-administrators. We found that this didn’t give sufficient discrimination, so we also added faces from the Labeled Faces in the Wild (LFW) dataset as negative examples.

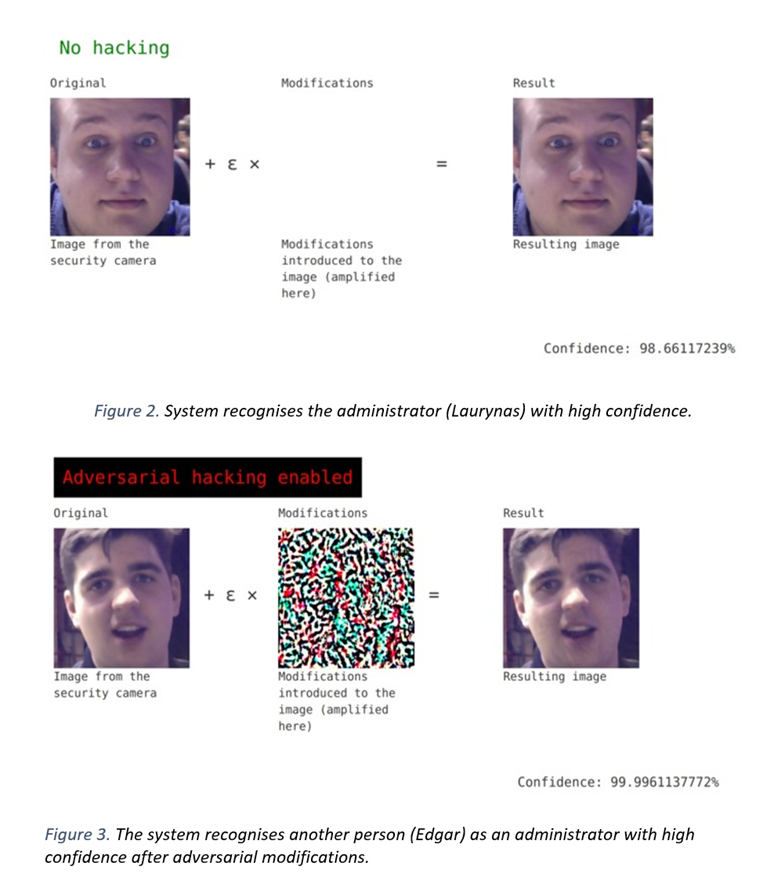

To generate adversarial inputs, we set the network’s training objective to maximise the probability (confidence) of the administrator class. The input was modified in small steps in the direction of the gradient, and it took around 6-10 iterations to generate an adversarial image that is misclassified with over 99% confidence.

- The authentication system front-end, which uses a laptop webcam and the Haar cascade algorithm from OpenCV to detect faces in the camera's view, then submits them to the network for classification.

We planted a "hack switch" that intercepts the input and performs adversarial “hacking” on it before submitting it for classification. This resulted in a 100% success rate for authenticating as an administrator, regardless of the person’s facial features. In fact, it also worked on random objects in the background that were erroneously detected as faces!

- The visualisation sub-system, which displays the adversarial modifications and the classification confidence of the network.

The visualisation system runs on a Flask web server, which receives the data from the classification process and displays it as a web page.
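The iterative generation step and the "hack switch" described in the components above can be sketched roughly as follows. This is not the FaceJack code: a toy sigmoid unit replaces VGG-face and the perceptron, and the names `classify`, `hijack` and `authenticate`, the step size, the 0.5 acceptance threshold and the iteration cap are all assumptions made for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def classify(weights, bias, x):
    """Stand-in classifier (assumption): returns administrator confidence."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def hijack(weights, bias, x, step=0.02, target=0.99, max_iters=50):
    """Take small steps along the input gradient until confidence > target.

    Returns the modified input, the final confidence and the steps taken.
    """
    x = list(x)
    for iterations in range(max_iters):
        p = classify(weights, bias, x)
        if p > target:
            return x, p, iterations
        grad = [p * (1 - p) * w for w in weights]   # d(confidence) / d(x_i)
        scale = max(abs(g) for g in grad) or 1.0    # normalise; guard against 0
        # Step along the gradient and keep pixel values inside [0, 1].
        x = [min(1.0, max(0.0, xi + step * g / scale))
             for xi, g in zip(x, grad)]
    return x, classify(weights, bias, x), max_iters

def authenticate(weights, bias, x, hack_switch=False, threshold=0.5):
    """Front-end decision; the 0.5 threshold is an assumption of this sketch."""
    if hack_switch:
        x, _, _ = hijack(weights, bias, x)  # intercept and modify the input
    return classify(weights, bias, x) > threshold

# Hypothetical fixed model and a "stranger" input it initially rejects.
weights = [0.5 if i % 2 == 0 else -0.5 for i in range(100)]
bias = -3.0
stranger = [0.5] * 100

adversarial, confidence, steps = hijack(weights, bias, stranger)
largest_change = max(abs(a - b) for a, b in zip(adversarial, stranger))
```

With the switch off, `authenticate(weights, bias, stranger)` rejects the input; with `hack_switch=True` the same input is modified first and then accepted. In the real system the gradient would come from the deep learning framework rather than a hand-written formula.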

Using Azure services for deployment was very quick and convenient. We created the virtual machine using azure-cli, the Azure command-line interface, and, after installing CUDA and cuDNN, we deployed our software and went live within minutes.

FaceJack was a success during the hackathon and impressed members of the audience with its novelty, as many participants were not familiar with adversarial examples. It achieved its objective of highlighting the security issue in a clear and concise fashion. As one of the judges jokingly put it: “Now I’ll be afraid to set up face unlock on my phone”.