Kinect & Cognitive with Kinecting the World - Guest blog from ICHack17 Microsoft Challenge Winners

This is a guest blog by Shiny Ranjan and Benedikt Lorch from the IC Hack 17 team Kinecting the World.

One weekend in early February, we set off as individuals for IC Hack 17, a hackathon organised by the Department of Computing at Imperial College London, with Microsoft as the gold sponsor. None of us knew what to expect. In this article we want to share how our initial anxiety turned into a great surprise.

Animated by an exuberant opening ceremony, everyone rushed past the sponsors’ free giveaways and got started with the hack straight away. Having arrived as individuals, the five of us found each other in the #teamfinding channel on Slack and soon formed a team.

We are:

· Joon Lee, 3rd year Philosophy and Economics, University College London

· Qingyue (Cheryl) Yan, 1st year Physics, Imperial College London

· James Knight, 2nd year Joint Mathematics and Computing, Imperial College London

· Shiny Ranjan, 1st year Computer Science, Queen Mary University of London

· Benedikt Lorch, 4th year Computer Science, Friedrich-Alexander-University Erlangen-Nuremberg

The idea

With each of us coming from a different discipline and background, we had no difficulty collecting ideas for a project. Even before everyone could vote for their favourite project on our list, we were intrigued by the idea of creating a Kinect-based language learning game with a training mode and a game mode. We decided to follow through with this “Kinecting the World” idea.

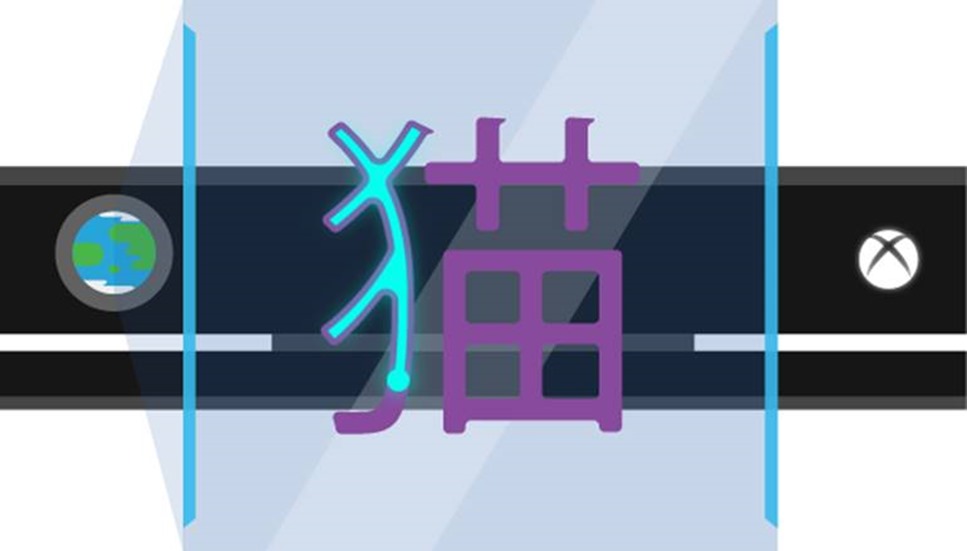

The “Kinecting the World” idea: learning a foreign language by tracing its symbols, wrapped in a colourful game.

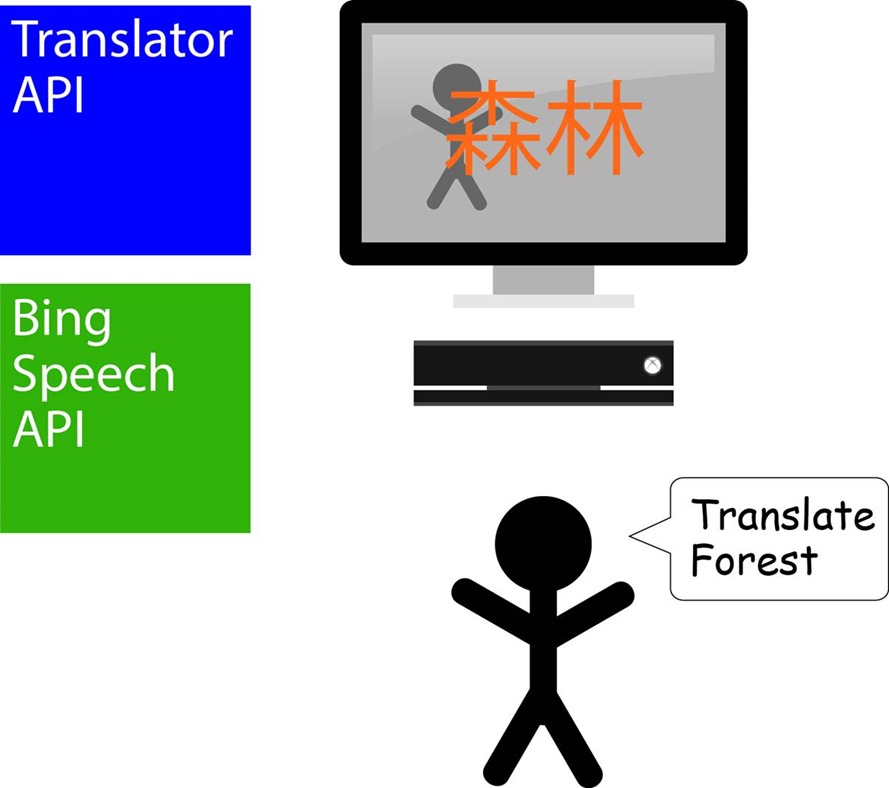

The basic principle of the training mode is that players can teach themselves Chinese by saying “Translate <English word/sentence>”, after which the recognised word is translated to Simplified Chinese. On the screen, players see themselves in the video stream from the Kinect with the translated Chinese characters overlaid on top. By extending a hand towards the screen, each player can trace the Chinese characters. The drawn strokes are continuously checked against the character to provide tips and accuracy measurements.

In the game mode, the player follows a story line that is both addictive and instructive. It teaches some basic Chinese characters while the player fights from level to level to find the evil monster that has taken over Queen’s Tower (an iconic structure at Imperial College London). The player fights the monsters by tracing Chinese characters, and all interaction with the game is done through the Kinect.

In terms of implementation, the speech recognition and translation could be put into practice using Microsoft’s Cognitive Services. Working with the Kinect gave us visualisation and interaction almost for free, two favourable properties for a hackathon project. In addition to a Full HD colour image, the Kinect camera provides real-time tracking of people. For each tracked person, the camera supplies the 3D positions of a number of joints, such as the head, the left and right shoulders, and the hands. Players would draw onto the image by extending their arm towards the screen. Our naïve way to decide whether a player is drawing was to apply a threshold to the difference between the z values of the player’s hand joint and shoulder joint, normalised by the player’s height. In other words, once the hand is a certain distance in front of the corresponding shoulder, the draw method is triggered; otherwise the hand is in the hover state. That was the agenda for the next 24 hours.
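The draw/hover rule described above can be sketched in a few lines. This is only an illustration in Python, not the code from the hack: the `Joint` type, the `is_drawing` function and the threshold value of 0.25 are all hypothetical, and the sketch assumes that z is the distance from the camera, so an extended hand has a smaller z than the shoulder.

```python
from dataclasses import dataclass


@dataclass
class Joint:
    """A tracked joint position in camera space (hypothetical type)."""
    x: float
    y: float
    z: float  # assumed to be the distance from the camera


# Hypothetical value; in practice it would be tuned by trial and error.
DRAW_THRESHOLD = 0.25


def is_drawing(hand: Joint, shoulder: Joint, player_height: float,
               threshold: float = DRAW_THRESHOLD) -> bool:
    """Return True if the hand is extended far enough to count as drawing."""
    if player_height <= 0:
        raise ValueError("player_height must be positive")
    # How far the hand is in front of the shoulder, relative to body size.
    reach = (shoulder.z - hand.z) / player_height
    return reach > threshold
```

Normalising by the player's height means that the same threshold can serve both tall and short players.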

Jump Start

Having been one of the last teams to assemble, we could not find a table with enough space and started working with laptops on our laps. However, the organisers were very helpful, even under the stress of feeding lunch to more than 350 students and sponsors, and after lunch we managed to move a table from the venue to a free location.

While Cheryl and Joon started creating artwork, video and storyline for the game, Shiny, James, and Benedikt focused primarily on the programming. As all three of us had prior experience with Java, we opted for the Java library J4K, which provides Java bindings to the Microsoft Kinect SDK. The vast number of samples got us started quickly. At the beginning, we had anticipated that mapping the 3D coordinates from the body tracking to pixel coordinates in the 2D colour image would be one of the technical challenges. Luckily, J4K already came with the necessary functionality. To draw strokes on top of the colour images, we only had to trace the path of one of the joints, such as the left or right hand.

Dead end

As easy as it sounds to draw some strokes, this is where we got stuck: all the visualisation in the J4K samples was done in OpenGL. Even though the GL object was accessible, we had to dig into the library to see how GL was set up internally.

When we also couldn’t find any option to record sound from the camera, which was definitely required for the project, we decided to discard everything we had done so far and switch from Java to C#. The people who implemented the Java bindings did a great job, but with the Xbox logo on it, the Kinect finds more support in the .NET community. By then we were 8 hours in, and installing and setting up Visual Studio and Xamarin Studio on our Windows machines and Macs respectively took some more time.

Fresh start

Starting with code from the colour basics sample shipped with the Kinect SDK, we quickly caught up to our previous state. Just as conveniently as in J4K, the mapping from 3D to 2D coordinates was already implemented. We started by drawing circles at the positions of the tracked hands to verify that both tracking and coordinate mapping were working accurately.

In the meantime, Cheryl and Joon got their first API request through to Cognitive Services, translating English words to Simplified Chinese. They started by prototyping with curl on the command line, which was tricky as it required a temporary, screen-filling access token that could be obtained using the subscription key from Azure cloud. This access token had to be included in the header of the actual translation request. As soon as Cheryl verified the correctness of the translated result, we quickly wrapped the API requests into C# code and included the translation feature into the project.
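The two-step flow described above (first exchange the subscription key for an access token, then send the token in the header of the translation request) might look roughly like the following. This is a sketch in Python rather than the C# we wrote, and it only builds the requests instead of sending them. The endpoint URLs are deliberately placeholders, and the header names, the `text` and `to` query parameters and the `zh-CHS` language code are assumptions that should be checked against the service documentation. All function names are hypothetical.

```python
from typing import Callable, Dict
from urllib.parse import urlencode

# Placeholder endpoints (hypothetical); use the URLs from the service docs.
TOKEN_URL = "https://example.invalid/issueToken"
TRANSLATE_URL = "https://example.invalid/translate"

Request = Dict[str, object]


def build_token_request(subscription_key: str) -> Request:
    """Step 1: ask for a temporary access token using the subscription key."""
    return {
        "method": "POST",
        "url": TOKEN_URL,
        # Assumed header name for the subscription key.
        "headers": {"Ocp-Apim-Subscription-Key": subscription_key},
    }


def build_translate_request(access_token: str, text: str,
                            target: str = "zh-CHS") -> Request:
    """Step 2: the translation request carries the token in its header."""
    query = urlencode({"text": text, "to": target})  # assumed parameter names
    return {
        "method": "GET",
        "url": f"{TRANSLATE_URL}?{query}",
        "headers": {"Authorization": f"Bearer {access_token}"},
    }


def translate(text: str, subscription_key: str,
              send: Callable[[Request], str]) -> str:
    """Run both steps; `send` performs the HTTP call and returns the body."""
    token = send(build_token_request(subscription_key))
    return send(build_translate_request(token, text))
```

Passing `send` in as a parameter keeps the sketch independent of any particular HTTP library and makes the flow easy to try out with a fake sender.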

While Benedikt was playing with the Speech Recognition clients Microsoft had introduced with Project Oxford (the beta name of their Cognitive Services), James taught the Speech Recognition engine to recognise words such as “translate” and “cancel” to introduce some flow into the game-to-be. At the same time, Shiny managed to visualise the trace of the tracked hand joint as polygon strokes onto a canvas object on top of the colour video stream.
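To give an idea of how a hand trace becomes a set of strokes, here is a small sketch in Python (not our C# code). It assumes that each frame yields the hand position in pixel coordinates together with a flag saying whether the player is currently drawing. The `split_into_strokes` function and the sample format are hypothetical.

```python
from typing import Iterable, List, Tuple

Point = Tuple[int, int]
Sample = Tuple[int, int, bool]  # (x, y, drawing) for one frame (hypothetical)


def split_into_strokes(samples: Iterable[Sample]) -> List[List[Point]]:
    """Group consecutive drawing samples into strokes (polylines).

    A stroke ends as soon as the hand returns to the hover state.
    """
    strokes: List[List[Point]] = []
    current: List[Point] = []
    for x, y, drawing in samples:
        if drawing:
            current.append((x, y))
        elif current:
            # The hand was pulled back: close the stroke in progress.
            strokes.append(current)
            current = []
    if current:
        strokes.append(current)
    return strokes
```

Each resulting list of points can then be rendered as one connected line on top of the video frame.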

A busy night

Apart from the spiritual sleeping class that was supposed to replace four hours of sleep in just 20 minutes, we all made it through the night. With constant activity at the tables all around us, the hours flew by until we finally put all the parts together as dawn approached.

In hindsight, had we spent those wasted hours on C# instead of Java, we would have had enough time to integrate the game mode with the current project. As a compromise, Cheryl and Joon used the artwork they had created earlier to present the storyline in a humorous video, which they assembled in PowerPoint.

The clock was ticking, and we still didn’t have the character recognition that would compare the player’s strokes to the correct character. Under time pressure, James managed to get a simple matching of strokes to an image mask working, while the others submitted our project to Devpost.
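One simple way to match strokes against an image mask is to count how many traced points land on the character and how much of the character has been traced. The Python sketch below illustrates that idea under our own assumptions; it is not James's actual implementation. The mask is assumed to be a grid of booleans indexed as `mask[y][x]`, and both function names are hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[int, int]
Mask = Sequence[Sequence[bool]]  # mask[y][x] is True on the character


def stroke_accuracy(points: Sequence[Point], mask: Mask) -> float:
    """Fraction of traced points that lie on the character mask."""
    if not points:
        return 0.0
    height = len(mask)
    width = len(mask[0]) if height else 0
    hits = sum(
        1 for x, y in points
        if 0 <= y < height and 0 <= x < width and mask[y][x]
    )
    return hits / len(points)


def mask_coverage(points: Sequence[Point], mask: Mask) -> float:
    """Fraction of mask pixels that the player has traced over."""
    target = {
        (x, y)
        for y, row in enumerate(mask)
        for x, cell in enumerate(row)
        if cell
    }
    if not target:
        return 0.0
    return len(target & set(points)) / len(target)
```

Accuracy on its own rewards a single well-placed dot, so combining it with coverage gives a fairer picture of how much of the character was actually drawn.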

After the hack officially ended, we happily presented our result to the jurors. A few other hackers stopped by our table to check out our hack, curious about all the arm waving but also surprised to see themselves in the live video from the Kinect. During the expo, we took turns to check out the other projects. There were some really impressive hacks, ranging from games that would adapt to your level of anxiety to VR stuff. It was just a joy to walk around and get inspired. Many of the hacks relied on Cognitive Services, extracted data from Twitter posts, or included some reference to US politics.

In the end, we were happy with our result, returned the Kinect and the other things we had borrowed, and sat down to relax before the upcoming ceremony.

Surprising turn of events

The closing ceremony was just as glamorous as the opening one. Over the first half an hour, both sponsors and audience were entertained by some really creative pitches from other teams. Unfortunately, mid-way through the ceremony, it was also time to say goodbye to our friend Benedikt, who had travelled from Germany to attend the hackathon. What we hadn’t anticipated was being called down for the finals presentation shortly after Benedikt left. Once it had finally sunk in, we realised that all the hardware had already been given back! Frantically, James made a dash back to the stalls to fetch the Kinect and wires. In the meantime, the rest of us waited and talked amongst ourselves about the exhilarating turn of events. We were surprised yet again, once our presentation and those of the remaining groups had finished, to learn that we had won Microsoft’s prize for best use of its Cognitive Services! The Microsoft sponsors also gave us a huge box of tech goodies to share amongst ourselves. This hackathon has been a highlight for us all… and we want to thank DocSoc and the sponsors for an unforgettable weekend!

Takeaways

· We will never forget the Chinese characters for “forest”, “cat”, and “dog”, which we extensively used for testing.

· We gained a lot of experience in using Cognitive Services and the Kinect SDK, and learned how pair programming can improve work efficiency.

· Hackathons are a great opportunity to meet like-minded people.