Recommendation Engines in Azure Machine Learning

I am working with a team of students from Imperial University on a project. One of the things they want to implement is a recommendation engine for their new Cortana Intelligence Service.

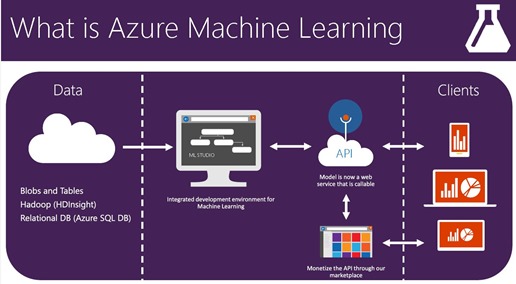

We had an interesting call today about some of their initial ideas and suggestions, and I introduced them to the Azure Machine Learning Recommendations API.

The recommendation engine is used by Microsoft's Channel 9 online learning resource, so when you watch a video on Channel 9, the recommendations come from a model built by this service.

The model has been learning from Channel 9 usage data dating back to July 2014.

You can learn more in the Azure Machine Learning Marketplace video on Channel 9.

The Recommendations API is quite user friendly and well documented, and the sample app proved to be invaluable, especially the classes that handle all the XML parsing and XPath syntax.

Some other great sources of data to learn with are the Azure DataMarket https://datamarket.azure.com/home and the Cortana Intelligence Suite Gallery https://gallery.cortanaintelligence.com/

A typical machine learning process for a recommendation engine looks like this:

- Upload the latest catalog, meaning all published/viewed materials.

- Upload the latest usage data, meaning all anonymized playback info, that is, this unique user watched this unique video, visited this website, or read this article.

- Build and replace the model, meaning the service rebuilds the model with the latest catalog and usage data, and the old model is replaced with the new one.

- Rebuild the cache, meaning all the recommendations are cached on your own servers.
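The four steps above only make sense in that order, and a failed step should stop the run so that the cache is never rebuilt from a stale model. Here is a minimal Python sketch of that ordering. It is my own illustration rather than anything from the sample app: `run_refresh` and the step names are hypothetical, and the lambdas are placeholders for the real API calls.

```python
from typing import Callable, List, Sequence, Tuple

# Each step is a (name, action) pair. The actions are hypothetical stand-ins
# for calls into the Recommendations API.
Step = Tuple[str, Callable[[], None]]


def run_refresh(steps: Sequence[Step]) -> List[str]:
    """Run the steps in order and return the names of those that completed.

    An exception raised by any step propagates to the caller, so later steps
    (such as rebuilding the cache) never run after an earlier failure.
    """
    completed: List[str] = []
    for name, action in steps:
        action()
        completed.append(name)
    return completed


if __name__ == "__main__":
    # Placeholder actions; real ones would call the service.
    steps = [
        ("upload catalog", lambda: None),
        ("upload usage data", lambda: None),
        ("build and replace model", lambda: None),
        ("rebuild cache", lambda: None),
    ]
    print(run_refresh(steps))
```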

The following is the process the Channel 9 team has implemented to provide recommendations for viewers of Channel 9 content.

Uploading Catalog Data

Because new content is constantly being added to Channel 9, the model needs to be continually updated with the latest entries. Otherwise, the recommendation engine doesn't know about the new content and can't recommend it. Updating the catalog is pretty straightforward. They simply call the ImportCatalogFile API, passing the unique IDs of all the videos on Channel 9, and they pretty much used the code from the sample app as is for this.

The only gotcha with the ImportCatalogFile API is that it expects a comma-delimited file that doesn't support quoted fields and has no way of escaping commas, so watch out for commas in your item names.
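One way to stay clear of the comma problem is to clean every field before writing the catalog file. The Python sketch below shows the idea. The three-column layout (ID, name, category) and the `catalog_line` helper are assumptions for illustration, so check the API documentation for the exact columns your catalog needs.

```python
def clean_field(value: str) -> str:
    """Remove characters the catalog format cannot represent.

    Commas become spaces, double quotes are dropped, and runs of whitespace
    (including newlines) collapse to a single space.
    """
    return " ".join(value.replace(",", " ").replace('"', "").split())


def catalog_line(item_id: str, name: str, category: str) -> str:
    """Build one comma-delimited catalog row (assumed layout: id,name,category)."""
    return ",".join(clean_field(f) for f in (item_id, name, category))


if __name__ == "__main__":
    print(catalog_line("42", 'Azure, ML "Intro"', "Video"))
```

Replacing commas is lossy, so keep the original titles in your own store and use the catalog names only for the model.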

Uploading Usage Data

The next step in using a recommendation engine is to provide usage data to inform the model. By usage data, I mean all the anonymous playback data from Channel 9 -- this unique user watched this unique video. We provide this data to the model as a bulk upload, using the ImportUsageFile API.

The one thing I had to customize with the ImportUsageFile API was a way to make sure that I wasn't uploading a usage file that had already been uploaded. Because I have an automated WebJob that does the bulk upload, I needed to diff between what had and hadn't been uploaded. The API has no built-in query for this, but one can be built on top of it. To get the usage files, you can call GetAllModels and then extract the UsageFileNames element, which returns a comma-delimited list of file names. Here's the code for that:

    // Returns the comma-delimited list of usage file names already uploaded
    // for the given model, or null if the request fails or the model is not found.
    public string GetRecommendationUsageFiles(string modelId)
    {
        string usageFiles = null;

        using (var request = new HttpRequestMessage(HttpMethod.Get, RecommendationUris.GetAllModels))
        using (var response = httpClient.SendAsync(request).Result)
        {
            if (!response.IsSuccessStatusCode)
            {
                Console.WriteLine(String.Format("Error {0}: Failed to get usage files for model {1}, \n reason {2}",
                    response.StatusCode, modelId, response.ReasonPhrase));
                return null;
            }

            // On success, pull the UsageFileNames element for this model out of the XML feed.
            var node = XmlUtils.ExtractXmlElement(
                response.Content.ReadAsStreamAsync().Result,
                string.Format("//a:entry/a:content/m:properties[d:Id='{0}']/d:UsageFileNames", modelId));

            if (node != null)
            {
                usageFiles = node.InnerText;
            }
        }

        return usageFiles;
    }
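Once you have that comma-delimited string, the diff itself is a small set operation. Here is a Python sketch of that step. The `files_to_upload` helper is hypothetical, and it assumes file names are compared exactly, ignoring only surrounding whitespace.

```python
from typing import Iterable, List, Optional


def files_to_upload(local_files: Iterable[str], uploaded_csv: Optional[str]) -> List[str]:
    """Return the local usage files that the service has not seen yet.

    `uploaded_csv` is the comma-delimited UsageFileNames value, or None when
    the model has no usage files (or the lookup failed).
    """
    uploaded = {
        name.strip()
        for name in (uploaded_csv or "").split(",")
        if name.strip()
    }
    # Preserve the caller's ordering so uploads happen oldest-first if sorted.
    return [name for name in local_files if name not in uploaded]


if __name__ == "__main__":
    local = ["usage-01.csv", "usage-02.csv", "usage-03.csv"]
    print(files_to_upload(local, "usage-01.csv, usage-03.csv"))
```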

Building And Replacing The Model

With the service having the latest catalog and usage data, the next step is to build a new model. This is done using the BuildModel API. This API returns immediately, so you have to poll the service using the GetModelBuildsStatus API until the build finishes.

The only gotcha here is that you can only kick off one build at a time. If you try to kick off a second build before the first one completes, you'll get a cryptic error back.

Once GetModelBuildsStatus returns a status of BuildStatus.Success, I call UpdateModel, passing the BuildId of the latest build.
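The polling step can be sketched as a simple loop with a timeout. This Python version is an illustration only: `wait_for_build` and the `get_status` callable are hypothetical, and apart from "Success" the status strings are assumptions rather than the service's documented values.

```python
import time
from typing import Callable

# Assumed names for terminal failure states; only "Success" comes from the post.
FAILED_STATES = ("Error", "Cancelled")


def wait_for_build(
    get_status: Callable[[str], str],
    build_id: str,
    poll_seconds: float = 30.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll until the build succeeds (True) or fails (False).

    Raises TimeoutError if the build is still running after `timeout_seconds`.
    `sleep` is injectable so the loop can be exercised without real waiting.
    """
    waited = 0.0
    while True:
        status = get_status(build_id)
        if status == "Success":
            return True
        if status in FAILED_STATES:
            return False
        if waited >= timeout_seconds:
            raise TimeoutError(f"build {build_id} still running after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds
```

Only when this returns True would you go on to call UpdateModel. Waiting for the result before starting anything else also keeps you clear of the one-build-at-a-time limit.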

Building A Cache

The final thing to do is build a cache of all the recommendations. You can query the API directly to get the recommendations for a given item in the catalog, so building the cache is simply a matter of walking through all of the Channel 9 video IDs and calling ItemRecommend, storing the results.

To speed this up (since there are quite a few videos on Channel 9!), they wrap the calls to ItemRecommend in a Parallel.ForEach, processing the IDs in batches of 100 and using a ConcurrentBag to store the results:

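    // Thread-safe collection for results gathered from the parallel calls.
    var resultCollection = new ConcurrentBag<string>();

    // i is the index of the current batch of 100 catalog IDs.
    Parallel.ForEach(catalogGuids.Skip(i * 100).Take(100), guid => { resultCollection.Add(GetRec(guid)); });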
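For students working in Python, the same batching idea can be sketched with a thread pool. This is an analogue of the approach, not the Channel 9 code: `build_cache` and `get_rec` are hypothetical names, and `get_rec` stands in for a call to ItemRecommend. Unlike a bag of bare results, this version keys each result by its item ID so the cache can be looked up later.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, TypeVar

K = TypeVar("K")
V = TypeVar("V")


def build_cache(
    item_ids: Iterable[K],
    get_rec: Callable[[K], V],
    batch_size: int = 100,
    workers: int = 8,
) -> Dict[K, V]:
    """Fetch recommendations for every item, one batch at a time, in parallel."""
    ids = list(item_ids)
    cache: Dict[K, V] = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for start in range(0, len(ids), batch_size):
            batch = ids[start:start + batch_size]
            # pool.map yields results in the same order as `batch`,
            # so zip pairs each ID with its own result.
            for item_id, rec in zip(batch, pool.map(get_rec, batch)):
                cache[item_id] = rec
    return cache


if __name__ == "__main__":
    # Placeholder lookup; a real one would call the service.
    print(len(build_cache(range(250), lambda i: f"recs-for-{i}")))
```

Threads suit this job because each call spends most of its time waiting on the network. If a single `get_rec` call raises, the exception surfaces when its result is read, so wrap the call if you would rather skip failed items.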

Final Thoughts

If you are in need of a powerful, smart recommendation service, I'd recommend using the Azure engine.

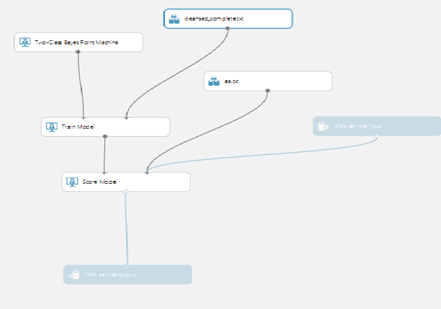

There are so many scenarios that it could be used for. Machine learning in the cloud! I am sure the students at Imperial will really enjoy using Azure Machine Learning Studio and Jupyter notebooks with Python or R.

Resources

Give Azure ML a try: https://Studio.azureml.net