Think SPF is widely deployed? Think again.

With all the hoopla surrounding DMARC, I thought I would take the time to see how SPF is functioning in real life, at least in our network.

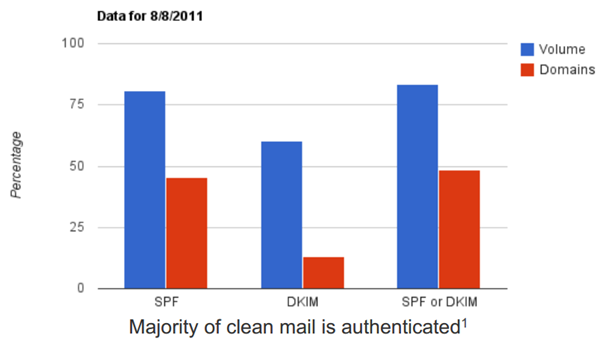

According to DMARC documentation, SPF and DKIM adoption is reaching critical mass (see page 5 of that link):

Over 75% of mail volume is SPF authenticated.

I decided to cross check this against our own email statistics. Unfortunately, my data is not as thorough as the above. Here is what I have:

My data measures how much email, after IP rejections, is authenticated with SPF.

My data is only at the envelope level. So, if we get a message with three recipients, we scan the message once and then bifurcate it into three messages. However, this counts as only one SPF check, not three. This is because scanning before bifurcating saves time in the spam filter, which means we only get one log line for the spam filter verdict (eventually we will adjust our stats to account for total recipients). This will understate SPF results.
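To make the counting difference concrete, here is a minimal sketch. The records are made up for illustration (they are not our data), and `spf_share` is a hypothetical helper, not part of our pipeline. It tallies SPF results once per scanned message and once per recipient:

```python
from collections import Counter

# Hypothetical log records: (spf_result, recipient_count) per scanned message.
# These values are invented for illustration only.
records = [
    ("pass", 3),
    ("none", 1),
    ("pass", 1),
    ("softfail", 2),
]

def spf_share(records, per_recipient=False):
    """Return each SPF result's share of the total, as a fraction.

    per_recipient=False counts one SPF check per scanned message
    (how the stats in this post were gathered); per_recipient=True
    weights each message by its number of recipients.
    """
    counts = Counter()
    for result, recipients in records:
        counts[result] += recipients if per_recipient else 1
    total = sum(counts.values())
    return {result: n / total for result, n in counts.items()}

by_message = spf_share(records)
by_recipient = spf_share(records, per_recipient=True)
```

In this toy data the multi-recipient messages happen to pass SPF, so the per-recipient share of "pass" comes out higher than the per-message share.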

It’s unfortunate that we don’t have better data, but it’s the best I have.

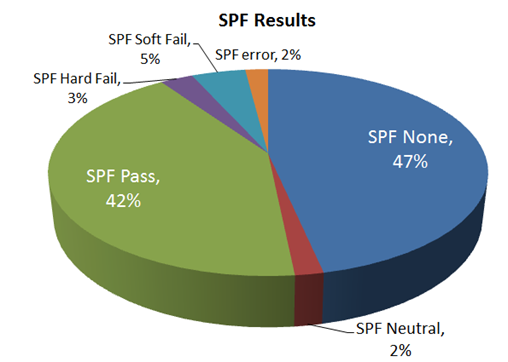

The results of messages going through the spam filter of our Forefront Online customer base, for the past 45 days (counts of several billion messages), are below:

You can see that, far from 75%+ of messages having SPF records, only a little over half of them do. Nearly half of messages have no SPF records. (I have no data on total domains with SPF records, so I cannot correlate sender size with SPF implementation.)

You may argue “Well, those messages that are all SPF None, those are spam messages. The majority of legitimate senders use SPF.”

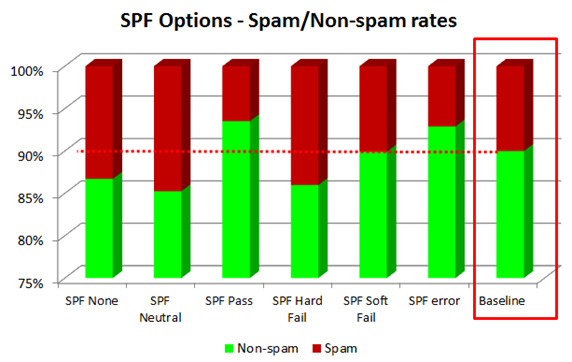

But that’s not true. I also have statistics on the spam and non-spam proportions of messages with each SPF disposition, compared against the baseline of all of our messages:

The baseline is the network-wide, after IP blocks, spam/non-spam ratio. Green is good mail, red is spam. 90% of our content filtered mail (before bifurcation) is non-spam (it looks so good because we reject so much mail in our IP filters, and bifurcation later on skews the ratio). Anything with green above 90% is better than average.

You can see above that SPF Pass is 94% good mail. That means that there are still 6% of messages that are marked as spam that pass an SPF check. Who are these senders? I don’t know, maybe spammy newsletters, maybe dirty jokes, maybe snowshoe spammers, and so forth.

But messages with SPF none are marked as non-spam 87% of the time. Either we have a very high false negative rate (which is unlikely because we monitor abuse inbox trends) or most mail without SPF records is legitimate. I’m not saying that all of it is, but rather that there are a lot of smaller senders out there that have not implemented SPF records and are sending good mail. It’s not as clean as messages that pass SPF checks, but it’s not horrible, either.

Also interesting are SPF soft fails. Their spam/non-spam rate is exactly the same as our baseline.
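As a quick cross-check of the figures quoted so far, the sketch below compares each disposition's non-spam rate with the 90% baseline. It uses only the percentages stated in this post; hard fails are left out because I have not quoted an exact figure for them. The function name is just for illustration:

```python
# Non-spam percentages as quoted in the text (post-IP-block mail).
BASELINE_NON_SPAM = 90
NON_SPAM_BY_SPF = {"pass": 94, "none": 87, "softfail": 90}

def compare_to_baseline(rates, baseline):
    """Return {disposition: (delta_in_points, label)} relative to baseline."""
    result = {}
    for disposition, rate in rates.items():
        delta = rate - baseline
        if delta > 0:
            label = "better"
        elif delta < 0:
            label = "worse"
        else:
            label = "same"
        result[disposition] = (delta, label)
    return result

comparison = compare_to_baseline(NON_SPAM_BY_SPF, BASELINE_NON_SPAM)
for disposition, (delta, label) in comparison.items():
    print(f"SPF {disposition}: {delta:+d} points vs baseline ({label})")
```

SPF pass sits four points above the baseline, SPF none three points below, and soft fail is level with it.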

Finally, even SPF hard fails are not marked as spam most of the time. Why is this? The most frequent example is people logging in from a remote location and sending mail from “the hotel” rather than their corporate mail server. Other times people just have their mail servers misconfigured, or their SPF records not fully populated, or something. In any case, SPF hard fails are not indicative of spam in our post-IP blocked mail.
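The hotel case is easy to illustrate. The sketch below is a deliberately simplified evaluator that understands only unqualified `ip4:` mechanisms and the trailing `all` term. Real SPF evaluation also involves `include`, `a`, `mx`, DNS lookups and more, so treat this as an illustration rather than a working SPF checker. The record and IP addresses are hypothetical, taken from documentation ranges:

```python
import ipaddress

def check_ip4_only(record, sender_ip):
    """Evaluate only ip4 mechanisms and the 'all' term of an SPF record.

    Simplified on purpose: no DNS, no include/a/mx, no qualifiers on ip4.
    """
    ip = ipaddress.ip_address(sender_ip)
    for term in record.split()[1:]:  # skip the "v=spf1" version tag
        if term.startswith("ip4:"):
            if ip in ipaddress.ip_network(term[4:], strict=False):
                return "pass"
        elif term.endswith("all"):
            # The qualifier in front of "all" sets the default result.
            qualifiers = {"-": "fail", "~": "softfail", "?": "neutral"}
            return qualifiers.get(term[0], "pass")
    return "neutral"

# Hypothetical record: only the corporate range may send for this domain.
RECORD = "v=spf1 ip4:192.0.2.0/24 -all"

office_result = check_ip4_only(RECORD, "192.0.2.10")    # corporate server
hotel_result = check_ip4_only(RECORD, "198.51.100.7")   # hotel network
```

The same legitimate user gets a pass from the office and a hard fail from the hotel, purely because the hotel's IP is not listed in the record.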

One caveat for this is that if we had statistics for pre-IP blocked mail, these values would change significantly.

My conclusion in all of this is that SPF checks alone are not that great for detecting spam and phishing; most of the spam is caught with other filtering techniques. Instead, they are better used for validation of the sending domain and then using that as a whitelist or fast-track lane for filtering for domains you want to receive mail from. Also, we still have a ways to go before everyone is using SPF.
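A minimal sketch of that fast-track idea, assuming you maintain your own list of domains you want mail from. The domain list, lane names, and function are all hypothetical and do not describe how our filter works:

```python
# Hypothetical allowlist of sending domains you want to receive mail from.
TRUSTED_DOMAINS = {"example.com", "example.org"}

def choose_lane(mail_from_domain, spf_result):
    """Pick a filtering lane for a message.

    SPF pass only proves the sending domain authorized the connecting IP;
    it becomes useful once combined with a list of domains you trust.
    Anything else, including fail and none, still gets full filtering
    instead of being rejected on the SPF result alone.
    """
    if spf_result == "pass" and mail_from_domain.lower() in TRUSTED_DOMAINS:
        return "fast-track"
    return "full-filter"

lanes = [
    choose_lane("example.com", "pass"),    # trusted and validated
    choose_lane("example.com", "fail"),    # trusted name, not validated
    choose_lane("unknown.test", "pass"),   # validated, but not on the list
]
```

Only the first message skips the heavier filtering; the other two fall through to the normal content filter.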