The pros and cons of graylisting

Graylisting is an antispam technique that works by taking advantage of sender reputation. Specifically, the lack of a good or bad reputation gives the sender a chance to prove themselves worthy of delivery. The basic idea is that a good sender will go to the trouble of demonstrating that they are legitimate, whereas a spammer will not.

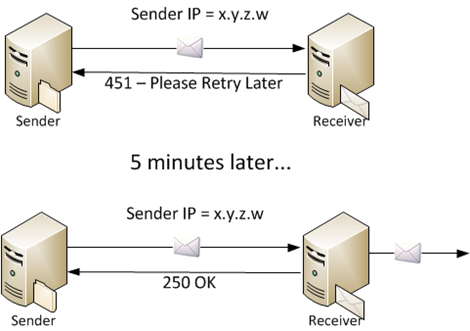

In graylisting, whenever an incoming email connection is received, the receiver takes a look to see if they have ever seen email from this IP address before. If not, it issues a 4xx (e.g., 451) level error in SMTP. This is a temporary rejection which tells the sender to retry later (as opposed to a 5xx response which is a permanent rejection). Email servers usually issue 4xx level errors when they are having temporary local problems, but spam fighters have discovered that this is a useful antispam technique.

Why?

The reason is that a legitimate mail server will interpret the 4xx error as a legitimate error. The receiver wants to accept the message but can’t. Therefore, since the email it wants to deliver is important, it will continue to attempt to deliver the message in a little while. How long is a little while? It depends on the software; some do it in 2 minutes, others in 5 minutes, still others in 30 minutes.

By contrast, a spammer sending mails from a botnet is concerned with sending as much mail as possible in as small a window as possible. He doesn’t have time to resend messages. He’s better off just spamming as much as he can and ignoring errors, based upon the belief that most will get through. Spammers don’t bother retrying because they don’t have time.

Most mail servers improve on this performance by implementing blacklists and whitelists. If the sending IP is on a blacklist they issue a 5xx SMTP response (permanent rejection). If it’s on a whitelist or I’ve-seen-mail-from-this-IP-before list, they accept the message. Then, for IPs with no previous sending history, they issue the 4xx response. If the IP tries again, it’s added to the I’ve-seen-mail-from-this-IP-before list and subsequent emails from this IP do not receive the temporary errors.
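To make the decision order concrete, here is a minimal sketch in Python. It is not any particular mail server's implementation: the class name `Graylister`, the `pending` set, and the specific reply codes 550 and 250 are my own choices for illustration (the text above only specifies 5xx, 4xx, and "accept"). It also leaves out things real deployments usually add, such as a minimum wait before a retry counts and expiry of old entries.

```python
from dataclasses import dataclass, field


@dataclass
class Graylister:
    """Hypothetical graylisting check keyed on the sending IP only."""
    blacklist: set = field(default_factory=set)
    whitelist: set = field(default_factory=set)
    pending: set = field(default_factory=set)  # tempfailed once, no retry yet
    seen: set = field(default_factory=set)     # the "seen mail from this IP" list

    def check(self, ip: str) -> int:
        """Return the SMTP reply code this sketch would issue for `ip`."""
        if ip in self.blacklist:
            return 550  # permanent rejection (5xx)
        if ip in self.whitelist or ip in self.seen:
            return 250  # accept
        if ip in self.pending:
            # The sender came back after a temporary rejection: remember it.
            self.pending.discard(ip)
            self.seen.add(ip)
            return 250
        # No sending history at all: ask the sender to try again later.
        self.pending.add(ip)
        return 451  # temporary rejection (4xx)
```

A new IP gets 451 on its first call to `check`, 250 on its retry, and 250 on everything after that, while a blacklisted IP gets 550 every time.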

People who have implemented graylisting (greylisting?) have had success with it. For the most part, spammers don’t retry and it cuts down most of the load from the downstream content filter. It works pretty well.

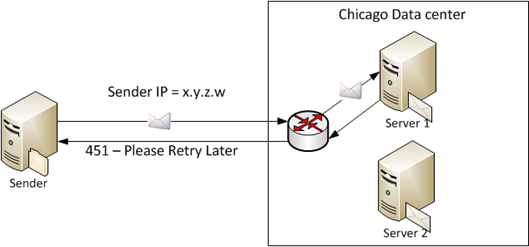

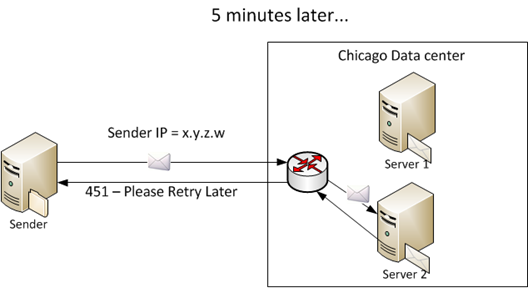

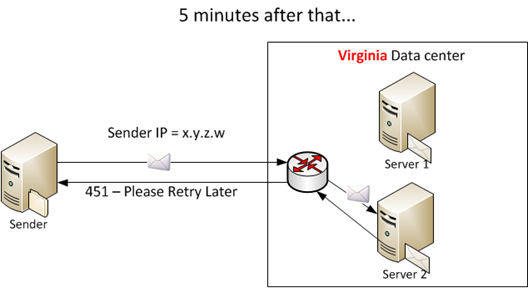

But graylisting has a serious drawback – it doesn’t work as well in distributed mail environments. For example, our own service is a geodistributed service. We have data centers (DCs) in Chicago, Texas, Virginia, Dublin and Amsterdam. If someone sends email to one of our clients, the email can be routed to any one of those depending on how much traffic we route through each DC. If we were to implement graylisting, the knowledge of who has sent to the server must be recorded and distributed across the rest of the network.

In the above example, the mail receiver keeps track of who has sent it mail by writing to a local file and then comparing each subsequent inbound email against this local list. In a geodistributed environment, just because a new IP has not been seen by that mail server does not mean it hasn’t been seen by a server somewhere else:

(Note: this example is hypothetical and is not necessarily representative of our own network; I merely refer to the use of “our” network for convenience.)

You can see in this example that each server in each data center may be keeping track of who has sent it mail before. But unless the sender’s retry happens, by luck, to land on the same server that handled the first attempt (and that is controlled by a load balancer, not by the sender), they will receive another Temporary Reject even though they have sent the message once before.
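A toy simulation shows how quickly this adds up. The sketch below assumes a strict round-robin load balancer and a sender that keeps retrying; both are simplifications I picked so the result is deterministic, and the function name `attempts_until_accepted` is made up for this post. The "shared" case pretends history is visible to every server instantly, which is exactly the part that is hard in practice.

```python
from itertools import cycle


def attempts_until_accepted(num_servers: int, shared_history: bool) -> int:
    """Count delivery attempts a brand-new IP needs before one is accepted.

    Each server tempfails an IP it has no record of and accepts one it has.
    With shared_history=True all servers consult one common set; otherwise
    each server only knows about the attempts it handled itself.
    """
    common = set()
    histories = [common if shared_history else set() for _ in range(num_servers)]
    ip = "192.0.2.7"  # documentation-range address, purely illustrative

    # Hypothetical round-robin balancer: attempt n goes to server n mod N.
    for attempt, history in enumerate(cycle(histories), start=1):
        if ip in history:
            return attempt  # this server has seen the IP: accepted
        history.add(ip)     # first sighting on this server: temporary reject
```

With five servers and purely local lists, `attempts_until_accepted(5, False)` returns 6: the sender is tempfailed once by every server before it finally reaches one that remembers it. With instantly shared history, `attempts_until_accepted(5, True)` returns 2, the same as a single standalone server.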

The solution for this is that all of the servers in the data center must share IP history with each other. And each data center must share IP history with the other data centers. In order for that to happen:

Each mail server must log its data.

Every once in a while, a process must collect these logs and pull them to a central repository for processing.

These logs must be parsed and compared against previous lists, and new additions updated to the central master list.

These lists must then be replicated back to the mail servers in every data center.
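The four steps above can be sketched as one sync cycle. This is only an illustration of the pull-process-push shape, not our actual pipeline: the function name `run_sync_cycle`, the server names, and the idea that each log is simply a list of IP strings are all assumptions, and the network transfers that make the real thing slow are reduced to in-memory copies.

```python
def run_sync_cycle(server_logs: dict, master: set) -> dict:
    """Run one hypothetical pull-process-push cycle.

    server_logs maps a server name to the list of sending IPs it logged.
    master is the central list of known IPs and is updated in place.
    Returns the replica each server would hold after the push.
    """
    # Pull: gather every server's logged IPs into the central repository.
    collected = [ip for log in server_logs.values() for ip in log]

    # Process: compare against the previous master list and add new IPs.
    new_ips = set(collected) - master
    master |= new_ips

    # Push: replicate an independent copy of the master list to each server.
    return {server: set(master) for server in server_logs}
```

For example, if `chicago-1` logged `192.0.2.7` and `dublin-1` logged `198.51.100.4`, then after one cycle both servers hold a list containing both IPs plus whatever was already on the master list.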

This process isn’t that complicated; it’s what we do to generate our internal IP blocklists (we have our own in addition to 3rd party ones). The difficulty is doing this in real time.

It takes time to pull hundreds of thousands of log lines and process them, and then push them back to the mail servers. Data transfer is often the chokepoint (not to mention for some reason this process breaks down from time to time).

What’s the big deal? Aren’t we already doing this with our IP blacklists?

Yes we are, but blacklists don’t have to be updated in super-fast real time. Even if the IP filter isn’t updated super fast, we still have the content filter as a backup plan. We also have 3rd party lists that catch these spamming IPs and serve as another redundant backup. Thus, to the end user, the spam stays out of his inbox either through an IP blocklist, or by the content filter (and it ends up in his spam folder in the quarantine).

With graylisting, this pull-process-push must occur within the retry window of the sending mail server. How long between retries do most mail servers take? I don’t know, but if it’s 2-5 minutes, that’s faster than we can pull, process and push data across a geodistributed network. Worse, there’s no backup for good mail the way there is for bad mail. While graylisting works for spam, a good mail server could end up retrying for a long time while the network catches up. This amounts to a whole lot of false positives because a good mail server will not be able to send mail through and it will not arrive at its correct destination.
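Here is the back-of-the-envelope arithmetic as a small sketch. It assumes the worst case, where every retry before the sync finishes lands on a server with no record of the sender, and it assumes the sender retries at a fixed interval (many servers back off instead). The function name `worst_case_delay` and the sync latencies in the example are hypothetical; I am not quoting real numbers from our network.

```python
import math


def worst_case_delay(retry_interval_min: int, sync_latency_min: int) -> tuple:
    """Return (retries needed, minutes until acceptance) in the worst case.

    retry_interval_min: fixed gap between the sender's delivery attempts.
    sync_latency_min: time for one pull-process-push cycle to reach every
    server after the first attempt was logged (a made-up input).
    """
    # The first retry that happens at or after the sync completes succeeds;
    # at least one retry is always needed because the first attempt is
    # always tempfailed.
    retries = max(1, math.ceil(sync_latency_min / retry_interval_min))
    return retries, retries * retry_interval_min
```

If a sender retries every 5 minutes and the sync takes a hypothetical 30 minutes, `worst_case_delay(5, 30)` gives `(6, 30)`: six retries and half an hour before good mail gets through. If the sync were faster than the retry interval, as in `worst_case_delay(30, 12)`, the answer is `(1, 30)` and the sender would never notice.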

Good IP reputation lists must be built faster than bad IP reputation lists.

Mail servers that continually reject good mail have bad reputations on the Internet. People on discussion lists say “Argh, what is <Company X’s> problem?” And then customers of Company X start complaining that their partners can’t consistently send mail to them. And if the lists are continually updated and removed, the whole cycle would start all over again.

You may say “So what? How many good mail servers are there? You’d have them sorted out in a week.”

Not so. That only works if we have one customer to worry about. But we don’t; we have tens (hundreds) of thousands and we don’t know who they all communicate with. There’s no way we can predict it, either. And unlike spam, there’s no workaround. A customer that doesn’t receive his email in a timely fashion because of a latent whitelist doesn’t get it via some other means.

Thus, while graylisting is a technique that works well on a small level, it is less workable on a large scale if a service is geodistributed and trying to build a reputation list on the fly.