Stating the obvious – human errors are the weak link in security

I frequently read publications like Bloomberg and Reuters, and when I come across an article about cybersecurity, I pay attention. Those outlets aren’t known for their expertise in this area, so what they write is a good reflection of what the general public believes about security and of how the security message is getting across.

This past week, Bloomberg published an article called “Human Errors Fuel Hacking as Test Shows Nothing Stops Idiocy.” Here’s an excerpt:

Staff secretly dropped computer discs and USB thumb drives in the parking lots of government buildings and private contractors. Of those who picked them up, 60 percent plugged the devices into office computers, curious to see what they contained. If the drive or CD case had an official logo, 90 percent were installed.

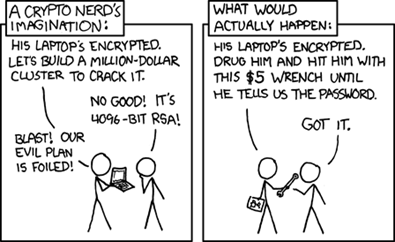

The test showed something computer security experts have long known: Humans are the weak link in the fight to secure networks against sophisticated hackers. The intruders’ ability to exploit people’s vulnerabilities has tilted the odds in their favor and led to a spurt in cyber crimes.

“There’s no device known to mankind that will prevent people from being idiots,” said Mark Rasch, director of network security and privacy consulting for Falls Church, Virginia-based Computer Sciences Corp.

This shouldn’t come as any surprise; humans have always been the weak link in any organization. That’s been true since day one.

The article goes on:

Hundreds of incidents likely go unreported, said Rasch, who previously headed the Justice Department’s computer crime unit. Corporate firewalls costing millions to erect often succeed in blocking viruses and other forms of malware that infect computers and steal data such as credit card information and passwords. Human error can quickly negate those defenses.

“Rule No. 1 is, don’t open suspicious links,” Rasch said. “Rule No. 2 is, see Rule No. 1. Rule No. 3 is, see Rules 1 and 2.”

Okay, so people click suspicious links in email that lead to a web page with a zero-day exploit, which infects the user’s system and subsequently allows a hacker to gain unauthorized access using stolen credentials (or through the compromised machine). While it is true that the user clicked on the link, they may also have been running software that is not up to date. Whose fault is that?

For example, the NHS in the UK has resisted upgrading from Internet Explorer 6 even though it is a very insecure browser with many known flaws and exploits. All of their existing applications work in IE6, and they worry that migrating to IE9 will break a lot of them. In effect, they have institutionalized their insecurity. Aren’t IT departments responsible for ensuring that their users are running secure software (i.e., the most up-to-date versions)?

Rasch’s point in the article is that people shouldn’t click on malicious links. RSA was compromised when a user retrieved a spear-phishing message from their spam folder, opened it, and executed the content. But who is asking why users do this? Why do users click unknown links? Why do users go into their junk folders, retrieve messages that were marked as spam, and then open them? I’ll get into that in a future post.

Continuing:

“The security industry is still stuck in infrastructure 1.0,” [CertiVox CEO Brian] Spector said. “As the Web 2.0 world started taking off, it wasn’t keeping up.”

Training may be the biggest key to stopping the attacks. Hudson Valley Credit Union in Poughkeepsie, New York, experienced a spear-phishing attack five years ago. Now, each of the company’s more than 800 employees takes an annual online security training course, said John Brozycki, the credit union’s information security officer.

Each year, the course expands to include new schemes and provides a refresher on long-time problems like phishing.

User education is probably the key to keeping security problems from affecting companies. However, the cybersecurity industry has not done a great job of getting its message across. Children are taught early on “not to talk to strangers.” They aren’t taught early on “not to click on strange links.”

The education message needs to change to tell users what they need to know.