Rethinking the term “false positive SLA”

This is a topic that I have written about before, but I will continue to write about it until I see a fundamental change in the industry.

One of my hobbies, and it has been a hobby for years, is stock trading. I like doing research on weekends and evenings and figuring out good stocks to buy. I’ve been doing this for 6 or 7 years now, and I am only marginally better now than I was when I started. That’s the frustrating part, but I have gotten better at money management, which limits how much I can lose on the downside. I classify myself as an “okay” to “slightly better than average” trader. One of the reasons I like it so much is that it is a brutally honest hobby. If you are a good trader, you will make money (or at least beat your benchmark). If you are a bad trader, you will lose money. There’s no place to hide; you can judge how well you are doing by looking at your equity curve. You cannot arbitrarily define success, because the bottom-line reality is money. If you are consistently losing money (and/or consistently underperforming your benchmark), then you are not a good trader. Period.

In the spam filtering industry, we are known for playing fast and loose with definitions, and I really think it is time for that to change. In statistics, a false positive is an incorrect test result that erroneously detects something when in fact it is not present (a type I error). With regard to spam filtering, a false positive is a legitimate message mistakenly rejected or filtered as spam, either by an ISP or by a recipient's anti-spam program.

In the industry, we all agree on what constitutes a false positive. However, we fudge the definition of our measured “false positive rate” by deliberately leaving it ambiguous. Ironport has a published FP SLA of 1 in a million messages. MessageLabs has 1 in 333,333 messages, as does Postini. Our own published FP SLA is 1 in 250,000 messages. But note that the definition is always 1 in xx messages, and the term “messages” is never defined. Is that 1 in xx legitimate messages, or 1 in xx total (spam + non-spam) messages?

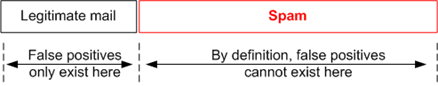

If you look at the statistical definition of the term, an FP is a test result that erroneously detects something when it is not present. For spam, that means a spam message can never be a false positive. If a message that really is spam gets marked as spam, that is a true positive: the filter cannot have detected something that is not present, because the spam really is present. Calling it a false positive would be a logical error, because it contradicts the definition of a false positive. Something cannot be A and not A at the same time.

Thus, when we talk about a false positive rate SLA, by the true definition of the term we should be referring to 1 message erroneously marked as spam in xx legitimate messages, not in xx total messages. A false positive cannot exist among spam messages, so spam does not belong in the denominator.
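Put in standard confusion-matrix notation (textbook statistics, not wording taken from any vendor's SLA), let $FP$ be the legitimate messages marked as spam, $TN$ the legitimate messages delivered correctly, and $N_{\text{spam}}$ the number of spam messages. Every legitimate message is either an $FP$ or a $TN$, so the textbook rate and the total-message rate relate like this:

$$
\mathrm{FPR}_{\text{legit}} = \frac{FP}{FP + TN} = \frac{FP}{N_{\text{legit}}},
\qquad
\mathrm{FPR}_{\text{total}} = \frac{FP}{N_{\text{legit}} + N_{\text{spam}}}
= \mathrm{FPR}_{\text{legit}} \cdot \frac{N_{\text{legit}}}{N_{\text{legit}} + N_{\text{spam}}}
$$

As spam volume grows, $\mathrm{FPR}_{\text{total}}$ shrinks even if the filter treats legitimate mail exactly the same way.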

This is important for the user experience. Suppose that you have a false positive SLA of 1 in 100 total messages (a 1% FP SLA), spam + non-spam, and this is explicitly called out. Suppose also that the user gets 10,000 messages, of which 50 are legitimate and 9,950 are spam. A spam filter could mark all 50 legitimate messages as spam and still be within the false positive SLA (50 / 10,000 = 0.5%), even though the end user's experience is a very negative one. Yet if the spammers sent only 2,010 messages, the user received 490 legitimate ones, and that same filter marked only 40 legitimate messages as spam, the FP rate is 40 / (490 legit + 2,010 spam) = 40 / 2,500 = 1.6%. The same filter is now outside the published SLA and therefore in violation of it. However, the total number of good messages marked as spam is lower than before! In other words, the user gets more legitimate mail into their inbox, but the FP rate is judged to be worse! Measuring it that way makes no sense, because the result depends not on the legitimate mail the user gets but on the amount of spam the user gets. A user doesn’t want to see spam in their inbox and would never flag a caught spam as a false positive; they only care about legitimate mail they wanted to receive being marked as spam. The results are also counterintuitive because a user might get more of the mail they want to see and fewer false positives, yet by this definition the filter is worse.
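The inversion is easy to check with a few lines of arithmetic. The sketch below computes both rates for the two scenarios: 50 legitimate and 9,950 spam messages (so the total is 10,000), then 490 legitimate and 2,010 spam messages (the legitimate count implied by the 2,500-message total). The function name `fp_rates` and the scenario labels are hypothetical, chosen only for illustration, and `Fraction` is used so the comparison against the 1% SLA is exact.

```python
from fractions import Fraction


def fp_rates(legit: int, spam: int, legit_flagged: int) -> tuple[Fraction, Fraction]:
    """Return (FP rate over legitimate mail, FP rate over all mail).

    legit_flagged is the number of legitimate messages the filter marked as
    spam; by definition every false positive is a legitimate message.
    """
    if not 0 <= legit_flagged <= legit:
        raise ValueError("false positives must come from legitimate messages")
    per_legit = Fraction(legit_flagged, legit)
    per_total = Fraction(legit_flagged, legit + spam)
    return per_legit, per_total


SLA = Fraction(1, 100)  # the hypothetical 1% total-message SLA from the example

scenarios = {
    "heavy spam": (50, 9_950, 50),   # every legitimate message filtered
    "light spam": (490, 2_010, 40),  # 40 of 490 legitimate messages filtered
}

for name, (legit, spam, flagged) in scenarios.items():
    per_legit, per_total = fp_rates(legit, spam, flagged)
    print(f"{name}: per-legit {float(per_legit):.1%}, "
          f"per-total {float(per_total):.1%}, "
          f"meets total-message SLA: {per_total <= SLA}")
```

Running it reports 100.0% per legitimate message but only 0.5% per total message for the heavy-spam case (which passes the SLA), and 8.2% versus 1.6% for the light-spam case (which fails it). The per-legitimate column ranks the two filters the way the user would.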

Why are FP SLAs implicitly defined over total messages instead of total legitimate messages? I think it’s because of marketing and achievability. 1 in 250 thousand messages is huge! 1 in a third of a million is even bigger! And one in a million? That’s astronomical! As humans, we cannot really comprehend numbers that large, so they are used to get us to think that false positives never occur. We all know that we will never win the lottery, because the odds are so heavily stacked against it that we cannot fathom winning. We also know that we don’t want false positives, so these huge numbers are given to make us think that while a false positive is possible, it is not probable. At such a small probability of missing a legitimate message, it will almost never happen, maybe once or twice per year.

But on the technical side, everyone who works with spam filters knows that false positive rates are always higher than we would like. No matter what we do, we always incur false positives. Make the filter more aggressive and FPs pile up like nobody’s business. We don’t really think that 1 false positive in a million legitimate messages is possible, but 1 in a million total messages is, because the volume of spam is so much higher than the volume of legitimate mail. If 90% of email is spam these days, then 1 false positive in a million total messages is really 1 false positive in 100,000 legitimate messages. That’s nowhere near as good as the advertised rate. It’s still a large number, but humans can fathom 100,000 a lot better than they can fathom a million. It just doesn’t sound anywhere near as impressive.
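The conversion from a “1 in N total messages” figure to a “1 in M legitimate messages” figure only needs an assumed spam share. Here is a minimal sketch, assuming (as the paragraph does) that 90% of mail is spam. The function name `total_to_legit_one_in` is hypothetical, and the spam share is an input you would replace with your own measured traffic mix.

```python
from fractions import Fraction


def total_to_legit_one_in(total_one_in: int, spam_share: Fraction) -> Fraction:
    """Re-express '1 FP in N total messages' as '1 FP in M legitimate messages'.

    spam_share is the assumed fraction of all mail that is spam. Every false
    positive is a legitimate message, so only the legitimate share of the
    N messages belongs in the denominator.
    """
    if not 0 <= spam_share < 1:
        raise ValueError("spam_share must be in [0, 1)")
    return total_one_in * (1 - spam_share)


# 90% spam, as in the text: 1 in a million total -> 1 in 100,000 legitimate.
print(total_to_legit_one_in(1_000_000, Fraction(9, 10)))
```

Under the same 90% assumption, a published 1 in 250,000 would correspond to 1 in 25,000 legitimate messages.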

Luckily, on the technical side, this lower rate is achievable. Unfortunately, the perception that we are achieving the higher rate (i.e., the fake rate) is not based on reality. We can fudge FP rates because we use a definition that lets us achieve them. And unlike in the stock market, we are allowed to get away with it, which I find personally frustrating. Pretty much no one is going to come back and ask us whether our FP rate is per legitimate message or per total message. In the markets, I can’t define success as picking stocks that went down but that I at least felt good about. If I’m losing money, then that is the reality. There’s nothing to hide behind.

If no one on the marketing side asks too many questions and the definition of an FP rate stays ambiguous, the industry can get away with publishing such impressive FP figures based on a faulty definition. We all do it, and nobody is going to be the first to step forward and cut their advertised figure by 90%. That would be a public relations nightmare.

However, in my view, this needs to stop. Industry needs to start using the true definition of false positive rates and start publishing those, or at least measure it internally and use that as a benchmark.