Optimizing ad-hoc workloads

Today's tip isn't necessarily low-level, but it is a setting that you should consider turning on for your SQL Server instances (especially if you've got vendor applications that generate TSQL statements on the fly). The setting, "optimize for ad-hoc workloads", is configured using sp_configure and when enabled will generate a very small execution plan stub when a statement is first compiled. This plan stub, which is many times smaller than what the execution plan would normally be, ensures that the plan cache doesn't get very large from dynamically executed SQL that isn't likely to be resused. Any memory allocated for execution plans comes from the buffer pool, so the more plans you have then the smaller your buffer pool will be for data and index pages. If the same plan is reused, the plan stub goes away and a full plan stays in the plan cache.

Let's take a look at how significant the memory consumption savings can be when using this option. First, let's clear out the plan cache (remember to never do this on a production system).

DBCC FREEPROCCACHE

Now, let's look at what is returned for our cached plans that reference the table SalesOrderHeader (which is in the AdventureWorks database). This should return NULL since we just cleared out the plan cache.

SELECT PlanSizeInMB = SUM(size_in_bytes)/1024.0/1024.0

FROM sys.dm_exec_cached_plans

CROSS APPLY sys.dm_exec_sql_text(plan_handle)

WHERE text LIKE '%SalesOrderHeader%' AND

text NOT LIKE '%dm_exec_cached_plans%'

I also want to make sure the "optimize for ad hoc workloads" option is turned off.

EXECUTE sp_configure 'optimize for ad hoc workloads', 0

RECONFIGURE

Now, let's run a load that is similar to an application that builds SQL strings on the fly and executes the statements in a non-parameterized fashion. This is a contrived example, but illustrates what can happen. I'm simply looping through the Sales.SalesOrderHeader table a record at a time and issuing a SELECT statement for each record encountered with an explicit value for the predicate. This will create an entry in the plan cache for every single SELECT statement.

DECLARE @SalesOrderID VARCHAR(20)

DECLARE @SQL NVARCHAR(MAX)

DECLARE @TableVar TABLE (SalesOrderID INT)

DECLARE CursorExample CURSOR FOR

SELECT SalesOrderID

FROM Sales.SalesOrderHeader

OPEN CursorExample

FETCH NEXT FROM CursorExample INTO @SalesOrderID

WHILE @@FETCH_STATUS = 0

BEGIN

SET @SQL = 'SELECT SalesOrderID

FROM Sales.SalesOrderHeader h

JOIN Sales.SalesPerson p on p.BusinessEntityID = h.SalesPersonID

WHERE SalesOrderID = ' + @SalesOrderID

INSERT INTO @TableVar(SalesOrderID)

EXECUTE (@SQL)

FETCH NEXT FROM CursorExample INTO @SalesOrderID

END

CLOSE CursorExample

DEALLOCATE CursorExample

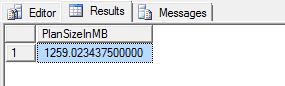

When the "optimize for ad hoc workloads" option is not on, a full plan is created for every single SELECT statement. In this case, around 1259 MB of plan cache was used to store these simple plans. That is just over 1.2GB of plan cache used!

SELECT PlanSizeInMB = SUM(size_in_bytes)/1024.0/1024.0

FROM sys.dm_exec_cached_plans

CROSS APPLY sys.dm_exec_sql_text(plan_handle)

WHERE text LIKE '%SalesOrderHeader%' AND

text NOT LIKE '%dm_exec_cached_plans%'

So, what happens if I enable the 'optimize for ad hoc workloads' setting and rerun the test? First, I'll want to enable the setting and then clear the procedure cache again.

EXECUTE sp_configure 'optimize for ad hoc workloads', 1

RECONFIGURE

DBCC FREEPROCCACHE

Rerun the same cursor code from earlier in this email. After you do, run the following statement to see the size of the plan cache:

SELECT PlanSizeInMB = SUM(size_in_bytes)/1024.0/1024.0

FROM sys.dm_exec_cached_plans

CROSS APPLY sys.dm_exec_sql_text(plan_handle)

WHERE text LIKE '%SalesOrderHeader%' AND

text NOT LIKE '%dm_exec_cached_plans%'

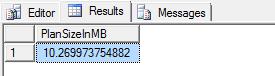

Using the 'optimize for ad hoc workloads' option results in a plan cache that is right at 10MB (compared to a bloated cache of 1.2GB). If these plans are never used again, that is an incredible amount of memory you're saving for the buffer pool (and for other plans). The good thing is, there is really no reason to not have this setting on – the downside is negligible.

HTH!

Tim