Connecting to Kinect from Sho

By now you’ve certainly heard of the Kinect sensor, and very likely about the freshly released Kinect SDK developed by some of our colleagues here at Microsoft Research. We wanted Sho users to be able to get in on the fun too, so we’ve worked out a bit of glue code (kinect.py, included later in this article) to make it easy for you to get at Kinect data from Sho. In the usual Sho way, you won’t need to compile any code; just a few lines of script will get you going. With only 70 or so lines of code you can create the sorts of fun animations you’ll see below!

As you may know, the Kinect is a pretty complex sensor, and the SDK adds even more layers of sophistication. The hardware itself consists of a color camera, a depth sensor, and a microphone array; the SDK extracts skeletons of multiple people in the scene, high-quality audio output, and sound source localization (estimates of where the sound is coming from). In this post, we’re only going to work with the skeleton data; if there’s demand (i.e., if we hear from you!), we can add more posts on getting at the other sorts of data as well.

What Can It Do?

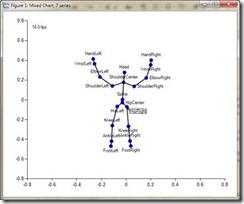

It’s often best to start with a demo, so let’s begin with something basic: we’ll use Sho’s built-in plotting functionality to plot all the available skeletons and label their individual joints. kinect.py has a KinectTracker class which uses a polling model to get skeleton information. The class’s skeletonFrame member contains the most recent set of skeletons (or None if no skeleton has been found yet), and skeletonUpdateTime is set to the last time the skeletons were updated. To make it a bit more fun, we’ll have the skeletons update in real time within the plot (see the video). The video shows Sho’s new double buffering feature for plots, which will appear in Sho 2.0.5 (to be released in the next few weeks); in the current version of Sho you’ll see some “flashing” when updating the plot.

The next question, of course, is how we did that and how much code it took. Fortunately it’s quite simple and not very much code at all:

    import System
    # assumes kinect.py (listed later in this post) is on the path; it pulls in
    # the sho plotting functions and the Kinect SDK types used below
    from kinect import *

    def bodyplot(kinecttracker):
        def plotjointseq(jointlist):
            # helper func to plot a set of lines through a series of jointnames
            x = [jointhash[jointname][0] for jointname in jointlist]
            y = [jointhash[jointname][1] for jointname in jointlist]
            plot(x,y,'k-')
        # set up plot
        clearplot()
        p = plot([1],[1])
        # double buffering mode - uncomment the below for Sho 2.0.5+
        # p.Freeze = True
        lastplottime = System.DateTime.Now
        jointhash = System.Collections.Hashtable()
        hold(True)
        # keep doing this until the user hits Ctrl-Shift-C in the console
        while True:
            System.Threading.Thread.Sleep(0)
            if kinecttracker.skeletonFrame:
                clearplot()
                p.HorizontalMajorGridlinesVisible = False
                axisranges(-.8,.8,-.8,.8)
                # iterate through all found skeletons
                for skeleton in kinecttracker.skeletonFrame.Skeletons:
                    # only draw valid skeletons
                    if skeleton.TrackingState == SkeletonTrackingState.Tracked:
                        x = []
                        y = []
                        labels = []
                        # iterate through joints and store their
                        # location in jointhash
                        for joint in skeleton.Joints:
                            # extract the jointname from the id, where the id is
                            # something like:
                            # Microsoft.Research.Kinect.Nui.JointID.FootRight
                            jointname = joint.ID.ToString().split(".")[-1]
                            # small offset avoids dividing by zero below
                            z = joint.Position.Z + 1e-10
                            # project x location into viewplane using z
                            currx = joint.Position.X/z
                            x.append(currx)
                            # project y location into viewplane using z
                            curry = joint.Position.Y/z
                            y.append(curry)
                            labels.append(jointname)
                            # store the projected locations and the original x,y,z
                            jointhash[jointname] = (currx, curry,
                                                    joint.Position.X,
                                                    joint.Position.Y, z)
                        # draw joints with joint labels
                        plot(x,y,'b.',size=10, labels=labels)
                        # draw lines connecting joints
                        plotjointseq(["HipCenter","Spine",
                                      "ShoulderCenter","Head"])
                        plotjointseq(["ShoulderCenter","ShoulderLeft",
                                      "ElbowLeft","WristLeft","HandLeft"])
                        plotjointseq(["ShoulderCenter","ShoulderRight",
                                      "ElbowRight","WristRight","HandRight"])
                        plotjointseq(["HipCenter","HipLeft","KneeLeft",
                                      "AnkleLeft","FootLeft"])
                        plotjointseq(["HipCenter","HipRight","KneeRight",
                                      "AnkleRight","FootRight"])
                # compute the framerate and use a label to display it
                # (TotalMilliseconds is the whole elapsed time; Milliseconds is
                # only the sub-second component of the TimeSpan)
                elapsedms = (System.DateTime.Now - lastplottime).TotalMilliseconds
                framerate = 1000.0/(elapsedms + 1e-10)
                plot([-.7],[.7],labels=[str(round(framerate))+" fps"])
                lastplottime = System.DateTime.Now
                # set the size of the graph
                axisranges(-.8,.8,-.8,.8)
                # in double buffering mode, update the view
                # (uncomment below for Sho 2.0.5+)
                # p.Render()
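
The only real math in bodyplot() is the projection step: each joint's X and Y are divided by its depth Z to place it on the 2D plot. Here is a minimal plain-Python sketch of just that step, runnable without Sho or a sensor. The project_joint helper and the joint positions are made up for illustration; they are not part of kinect.py or the SDK.

```python
def project_joint(x, y, z, eps=1e-10):
    """Project a 3D point onto the view plane by dividing by its depth."""
    z = z + eps  # same small offset the demo adds to avoid dividing by zero
    return (x / z, y / z)

# Made-up joint positions (x, y, z), not real sensor output.
joints = {
    "Head": (0.0, 0.6, 2.0),
    "HandLeft": (-0.5, 0.1, 1.0),
    "HandRight": (0.5, 0.1, 4.0),
}

# Project every joint, keyed by joint name (like jointhash in the demo).
projected = dict((name, project_joint(*pos)) for name, pos in joints.items())

for name in sorted(projected):
    px, py = projected[name]
    print("%-10s -> (%.3f, %.3f)" % (name, px, py))
```

Both hands have the same sideways offset from the sensor axis, but the one that is farther away lands closer to the center of the plot. That is what makes the stick figure shrink as you step back.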

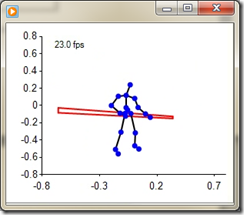

That example demonstrates all the important stuff: how to get at individual joints and their 3D locations, as well as how the joints are connected together. From there, it’s easy to add a few more lines of code and create simple virtual 3D objects. In the example below, I’ve created a simple staff object with a fixed 3D length that’s centered between the two hands. The code below can be inserted right before the “draw joints” section in the bodyplot() function above:

    # draw red staff
    # each jointhash entry is (projected x, projected y, X, Y, Z)
    handleftpt = DoubleArray.From(jointhash["HandLeft"])
    handrightpt = DoubleArray.From(jointhash["HandRight"])
    # compute center point between hands
    handcenterpt = (handleftpt+handrightpt)/2.0
    # compute vector along staff direction
    staffvec = handrightpt-handleftpt
    # normalize it by its 3D length (entries 2-4 hold X, Y, Z) so the
    # staff keeps a fixed 3D length
    staffvec = staffvec/(norm(staffvec[2:])+1e-10)
    # compute the left and right end of the staff
    # it's two units long and .05 units wide, centered between the hands
    staffleftpt = handcenterpt - staffvec*1.0
    staffrightpt = handcenterpt + staffvec*1.0
    # if the hands are close enough to each other, draw the staff
    if norm(handrightpt[2:]-handleftpt[2:]) < 0.7:
        # bottom edge
        plot([staffleftpt[2]/staffleftpt[4],staffrightpt[2]/staffrightpt[4]],
             [staffleftpt[3]/staffleftpt[4],staffrightpt[3]/staffrightpt[4]],'r-')
        # right end cap
        plot([staffrightpt[2]/staffrightpt[4],staffrightpt[2]/staffrightpt[4]],
             [staffrightpt[3]/staffrightpt[4],(staffrightpt[3]+.05)/staffrightpt[4]],'r-')
        # left end cap
        plot([staffleftpt[2]/staffleftpt[4],staffleftpt[2]/staffleftpt[4]],
             [staffleftpt[3]/staffleftpt[4],(staffleftpt[3]+.05)/staffleftpt[4]],'r-')
        # top edge
        plot([staffleftpt[2]/staffleftpt[4],staffrightpt[2]/staffrightpt[4]],
             [(staffleftpt[3]+.05)/staffleftpt[4],(staffrightpt[3]+.05)/staffrightpt[4]],'r-')

When the hands get within a certain distance of each other, the staff (magically) appears, and our little stick figure friend can do some fancy tricks. A screenshot accompanies this post, but you’ll want to watch the video to see it in motion.
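
If you'd like to check the staff geometry without a sensor, here is a rough plain-Python sketch of the same idea: find the midpoint between the hands, take the unit vector from one hand to the other, and step a fixed distance out in both directions. The staff_endpoints and project helpers and the hand positions are hypothetical. Unlike the snippet above, this sketch works on 3D positions only.

```python
import math

def staff_endpoints(hand_left, hand_right, half_length=1.0, eps=1e-10):
    """Return the two 3D ends of a staff centered between the hands."""
    # midpoint between the two hands
    center = [(a + b) / 2.0 for a, b in zip(hand_left, hand_right)]
    # vector from the left hand to the right hand
    direction = [b - a for a, b in zip(hand_left, hand_right)]
    # normalize to unit length (eps guards against coincident hands)
    length = math.sqrt(sum(d * d for d in direction)) + eps
    unit = [d / length for d in direction]
    left_end = [c - u * half_length for c, u in zip(center, unit)]
    right_end = [c + u * half_length for c, u in zip(center, unit)]
    return left_end, right_end

def project(point):
    """Project a 3D point onto the view plane by dividing by depth."""
    x, y, z = point
    return (x / z, y / z)

# Made-up hand positions: 0.4 units apart, 2 units from the sensor.
left_hand = (-0.2, 0.0, 2.0)
right_hand = (0.2, 0.0, 2.0)

left_end, right_end = staff_endpoints(left_hand, right_hand)
print("3D ends: %s %s" % (left_end, right_end))
print("2D ends: %s %s" % (project(left_end), project(right_end)))
```

Because the direction is normalized before scaling, the staff stays the same length in 3D no matter how far apart the hands are; only its projected size changes with depth.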

How It Works

The glue code that gets the SDK working in Sho, kinect.py, is also quite short, but what it does is a little complicated. Since the managed layer of the Kinect SDK depends on the WPF threading model and message pump, we have to create a shim WPF application and window that will get the events. That application has a callback that’s called every time it gets new skeleton data; inside that callback we save the skeletonFrame information and the skeletonUpdateTime in the KinectTracker object, which we can access outside of the shim application’s thread. The entirety of kinect.py is below; note that you may need to update the path passed to addpath to point to wherever you installed the SDK:

    # kinect.py
    from sho import *
    import System, clr
    clr.AddReference("PresentationFramework")
    clr.AddReference("PresentationCore")
    clr.AddReference("WindowsBase")
    clr.AddReference("System.Xaml")
    # raw string so the backslashes in the path are taken literally
    addpath(r"C:\Program Files (x86)\Microsoft Research KinectSDK")
    ShoLoadAssembly("Microsoft.Research.Kinect.dll")
    from Microsoft.Research.Kinect.Nui import *

    class KinectHelperWindow(System.Windows.Window):
        # hidden shim window; it exists only to receive the SDK's events
        def __init__(self):
            self.nui = None
            self.Width = 1
            self.Height = 1
            self.Visibility = System.Windows.Visibility.Hidden
            self.Loaded += System.Windows.RoutedEventHandler(self.Init)

        def SkeletonFrameReady(self, obj, skeletoneventargs):
            # called on the shim application's thread for each new frame
            self.kinectinfo.skeletonUpdateTime = System.DateTime.Now
            self.kinectinfo.skeletonFrame = skeletoneventargs.SkeletonFrame

        def Init(self, obj, rea):
            self.nui = Runtime()
            self.nui.Initialize(RuntimeOptions.UseDepthAndPlayerIndex |
                                RuntimeOptions.UseSkeletalTracking |
                                RuntimeOptions.UseColor)
            self.nui.VideoStream.Open(ImageStreamType.Video, 2,
                                      ImageResolution.Resolution640x480,
                                      ImageType.Color)
            self.nui.DepthStream.Open(ImageStreamType.Depth, 2,
                                      ImageResolution.Resolution320x240,
                                      ImageType.DepthAndPlayerIndex)
            self.nui.SkeletonFrameReady += \
                System.EventHandler[SkeletonFrameReadyEventArgs](
                    self.SkeletonFrameReady)

    class KinectTracker:
        def __init__(self):
            self.skeletonFrame = None
            self.skeletonUpdateTime = None

        def StartKinect(self):
            # runs the shim WPF application; blocks until the app exits
            self.app = System.Windows.Application()
            khw = KinectHelperWindow()
            khw.kinectinfo = self
            self.helperwindow = khw
            self.app.Run(khw)

    def startKinectTracker():
        # start the shim application on its own STA thread and
        # return the tracker object that the callback fills in
        kt = KinectTracker()
        kt.shothread = ShoThread(kt.StartKinect, "kinectapp",
                                 System.Threading.ApartmentState.STA)
        kt.shothread.Start()
        return kt
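
Stripped of the WPF and SDK details, the pattern in kinect.py is simple: a callback running on a background thread overwrites "the latest frame" and a timestamp on a shared object, and your own code reads them whenever it likes. Below is a minimal plain-Python analogy using the standard threading module. The LatestFrame class, the producer function, and the string "frames" are hypothetical stand-ins, not part of the glue code.

```python
import threading
import time

class LatestFrame(object):
    """Holds only the most recent frame, like KinectTracker does."""
    def __init__(self):
        self.frame = None
        self.update_time = None

    def on_frame_ready(self, frame):
        # plays the role of the SkeletonFrameReady callback
        self.update_time = time.time()
        self.frame = frame

def producer(holder, frames):
    # stands in for the shim application delivering sensor events
    for frame in frames:
        holder.on_frame_ready(frame)

holder = LatestFrame()
worker = threading.Thread(target=producer,
                          args=(holder, ["frame-1", "frame-2", "frame-3"]))
worker.start()
worker.join()  # a real consumer would poll instead of waiting for the end

print("latest frame: %s" % holder.frame)
```

As in kinect.py, there is no queue and no lock: older frames are simply overwritten. That suits a live display, where only the newest skeleton matters, but it means a slow consumer will skip frames.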

How To Get Started with Your Own Code

To develop your own Kinect demos, you’ll have to start by getting yourself a Kinect sensor. I’d recommend the standalone sensor, since it comes with a power supply; if you get the package with an Xbox 360, you’ll need to buy a separate power supply, as it only comes with a cable to connect to the 360 console. Once you have the device, you’ll need to install the Kinect SDK. After that, reboot your machine, plug in your Kinect, and you should be ready to go.

To use the sensor from Sho, you’ll have to use the 32-bit console, shoconsole32, even on a 64-bit machine: the drivers work on both 32-bit and 64-bit machines, but the managed SDK only supports calls from 32-bit contexts. You’ll also want to save the glue code above as kinect.py. Once in the console, import it and create a KinectTracker object with startKinectTracker:

    >>> import kinect
    >>> kt = kinect.startKinectTracker()

Once a skeleton is found in the sensor’s range, its data will be copied to kt.skeletonFrame and you can use it as in the demos above.
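
Since the tracker is polled, your loop may look at it many times between sensor updates. One way to react only to fresh data is to compare skeletonUpdateTime against the last value you handled. The sketch below shows the idea in plain Python. FakeTracker is a hypothetical stand-in exposing the same two attributes as KinectTracker, new_frame_since is a helper we made up, and we're assuming the timestamps can be compared with <=, as System.DateTime values can.

```python
class FakeTracker(object):
    """Hypothetical stand-in with the same attributes as KinectTracker."""
    def __init__(self):
        self.skeletonFrame = None
        self.skeletonUpdateTime = None

def new_frame_since(tracker, last_seen):
    """Return (frame, update_time) if the tracker has newer data, else None."""
    update_time = tracker.skeletonUpdateTime
    frame = tracker.skeletonFrame
    if frame is None or update_time is None:
        return None  # no skeleton has been found yet
    if last_seen is not None and update_time <= last_seen:
        return None  # we have already handled this update
    return frame, update_time

tracker = FakeTracker()
last_seen = None

# Simulate three polls: no data yet, a fresh frame, then the same frame again.
results = []
results.append(new_frame_since(tracker, last_seen))
tracker.skeletonFrame, tracker.skeletonUpdateTime = "frame-1", 10.0
fresh = new_frame_since(tracker, last_seen)
results.append(fresh)
last_seen = fresh[1]
results.append(new_frame_since(tracker, last_seen))

for r in results:
    print(r)
```

In a real loop you would pass in kt from startKinectTracker() and redraw only when the helper returns something, rather than on every pass.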

And You’re Off!

So there you have it; you now know how to talk to the Kinect SDK from Sho using the wrapper in kinect.py above. I’m sure you’ll do far more creative things than adding a red staff to a stick figure, and we’d love to hear about it. Please send us links to your own demo videos in the comments!