Microsoft’s MT-DNN Achieves Human Performance Estimate on General Language Understanding Evaluation (GLUE) Benchmark

Understanding natural language is one of the longest running goals of AI, which can trace back to 1950s when the Turing test defines an “intelligent” machine. In recent years, we have observed promising results in many Natural Language Understanding (NLU) tasks both in academia and industry, as the breakthroughs in deep learning are applied to NLU, such as the BERT model developed by Google in 2018.

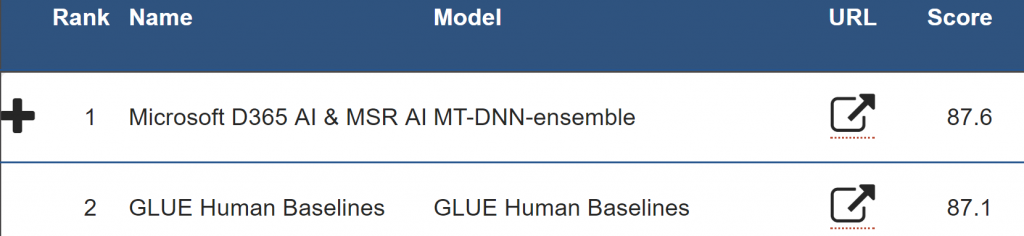

The General Language Understanding Evaluation (GLUE) is a well-known benchmark consisting of nine NLU tasks, including question answering, sentiment analysis, text similarity and textual entailment; it is considered well-designed for evaluating the generalization and robustness of NLU models. Since its release in early 2018, many (previous) state-of-the-art NLU models, including BERT, GPT, Stanford Snorkel, and MT-DNN, have been benchmarked on it, as shown in GLUE leaderboard. Top research teams in the world are collaboratively developing new models, approaching human performance on GLUE.

In the last few months, Microsoft has significantly improved the MT-DNN approach to NLU, and finally surpassed the estimate for human performance on the overall average score on GLUE (87.6 vs. 87.1) on June 6, 2019. The MT-DNN result is also substantially better than the second-best method (86.3) on the leaderboard.

The snapshot of the GLUE leaderboard on June 6, 2019

The latest improvement is primarily due to incorporating into MT-DNN a new method developed for the Winograd Natural Language Interface (WNLI) task in which an AI model must correctly identify the antecedent of an ambiguous pronoun in a sentence.

For example, the task provides the sentence: “The city councilmen refused the demonstrators a permit because they [feared/advocated] violence.”

If the word “feared” is selected, then “they” refers to the city council. If “advocated” is selected, then “they” presumably refers to the demonstrators.

Such tasks are considered intuitive for people due to their world knowledge, but difficult for machines. This task has proven the most challenging in GLUE, where previous state-of-the-art ML models can hardly outperform the naïve baseline of majority voting (scored at 65.1), including BERT.

Although the early versions of MT-DNN (documented in Liu et al. 2019a and Liu et al. 2019b) already achieve better scores than humans on several tasks including MRPC, QQP and QNI, they perform much worse than humans on WNLI (65.1 vs. 95.9). Thus, it is widely believed that improving the test score on WNLI is critical to reach human performance on the overall average score on GLUE. The Microsoft team approached WNLI by a new method based on a novel deep learning model that frames the pronoun-resolution problem as computing the semantic similarity between the pronoun and its antecedent candidates. The approach lifts the final test score to 89.0. Combined with other improvements, the overall average score is lifted to 87.6, which surpasses the conservative estimate for human performance on GLUE, marking a milestone toward the goal of understanding natural language.

The Microsoft team (from left to right): Weizhu Chen and Pengcheng He of Microsoft Dynamics 365 AI, and Xiaodong Liu and Jianfeng Gao of Microsoft Research AI.

Previous blogs on MT-DNN could be found at: MT-DNN-Blog and MT-DNN-KD-Blog.

Cheers,

Guggs