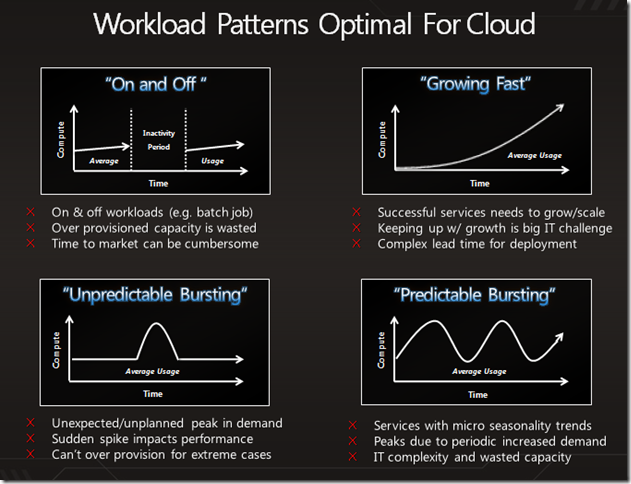

Optimal Workloads for the cloud

This is one of my favourite slides from the PDC last week. It comes from the session titled Windows Azure Platform Business Model “Transforming to a services business” by my friend Dianne O’Brien.

When talking to customers, colleagues and journalists I often talk about these models, and Dianne’s slide will help me leave people with a visual representation. It’s even more powerful if we add an example customer scenario to each of them. Allow me to do that with a few fictional scenarios (i.e. these are not customers using Azure, but they could be).

On and Off: a good example here would be a pharmaceuticals company like GlaxoSmithKline with drug trials data. Before a new drug is put on the market it undergoes rigorous testing that generates masses of data. At some point, it may be necessary to apply brute force analysis to that data, which requires a lot of computing power. Once the results are produced, though, you want to turn that computing power off until the next time you need it. Why pay for servers in your own datacenter when you can spin up thousands on demand and only pay for what you use?

Growing Fast: this is the Web 2.0 overnight success story scenario. You and two pals leave your jobs, build a killer Web 2.0 site (think Facebook or Twitter) and within days you have demand that you could never have expected. In the past, this was a nightmare scenario of having to overbuy capacity (even in the days of traditional hosting) just in case you were successful. If you were, you then had to scramble to add more servers, build the load balancing and keep monitoring as you scaled up and up (hopefully not down). The cloud, and Azure more specifically, allows you to scale as you need with minimal overhead. Note this is different from the EC2 approach from Amazon, where you need to do quite a bit more administration as your capacity grows, though companies like RightScale do a fine job of helping with this. The key is that Azure is designed to scale out using our Fabric Controller capability, which is subtly but crucially different from having lots of VMs or scaling up. As it happens, we have a great on-ramp here for Web 2.0 companies looking to lower their barriers to entry: we provide free access to not only our developer tools but also Azure.

Unpredictable Bursting: this could be applied to a number of sites, but let’s take something like a site that tracks news and may need to respond to huge bursts in demand when a global news story breaks. Let’s say Twitter (or Google :) ) when news of Michael Jackson’s death broke. That was totally unexpected, massive demand for the service, which can sometimes be interpreted as a denial of service attack (as happened to Google that day). With a cloud platform that can dynamically scale you get around this issue.

Predictable Bursting: this is probably my favourite as it’s a bit of a hobby horse. Why do sites like Ticketmaster drive us insane by falling over when tickets for our favourite band (U2 in my case) are announced? My guess is because they’re designed to support the middle of the curve, not the peaks. It’s expensive to buy hardware for the peaks when they maybe only happen a few times a year and the rest of the time your kit is idle. The scenario is similar to the first one (On and Off) but could be architecturally different, in that you may use something like Azure just for its worker role capability to handle massive volumes of web requests but still keep your core data within your own data center. This is where services like Project Sydney, which we announced this week at the PDC, come in handy by allowing you to have a secure, dedicated connection from the cloud back to your own data center.
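To put some rough numbers on the "expensive to buy hardware for the peaks" point, here is a back-of-the-envelope sketch in Python. Everything in it is an assumption made up for illustration: the server counts, the 48 peak hours a year, the $0.10 per server-hour rate, and the simplification that an owned server and a cloud server cost the same per hour. It is not Azure pricing, just the shape of the argument.

```python
# All figures below are hypothetical and chosen only to illustrate the argument.
HOURS_PER_YEAR = 24 * 365


def fixed_capacity_cost(peak_servers, cost_per_server_hour):
    """Cost of keeping enough servers for the peak running all year."""
    return peak_servers * cost_per_server_hour * HOURS_PER_YEAR


def on_demand_cost(baseline_servers, peak_servers, peak_hours, cost_per_server_hour):
    """Cost of a year-round baseline plus extra servers paid for only during peaks."""
    baseline = baseline_servers * cost_per_server_hour * HOURS_PER_YEAR
    burst = (peak_servers - baseline_servers) * cost_per_server_hour * peak_hours
    return baseline + burst


# Assumed workload: 10 servers handle a normal day, 100 are needed for
# roughly 48 hours a year when tickets go on sale.
fixed = fixed_capacity_cost(peak_servers=100, cost_per_server_hour=0.10)
elastic = on_demand_cost(
    baseline_servers=10, peak_servers=100, peak_hours=48, cost_per_server_hour=0.10
)

print(f"Provisioned for the peak: ${fixed:,.0f} per year")
print(f"Baseline plus bursts:     ${elastic:,.0f} per year")
```

Setting `baseline_servers` to 0 gives you the On and Off scenario, where you pay only while the analysis is actually running.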

So there you have it: the reasons to think about cloud as a new model for computing. Thanks Dianne!