Leveraging Windows Azure WCF Services to Connect BCS with SharePoint Online

Introduction

You might have read an earlier post of mine where I discussed the new Business Connectivity Services (BCS) functionality in SharePoint Online (SP-O) and walked through how you can leverage jQuery and JavaScript to interact with an external list in SharePoint Online. That post assumed that you had created an external list in the first place and provided you with some code snippets to create a view of an external list. Since then, a great post from Christian Glessner popped up that walks through creating an external list using SQL Azure. Using these two posts, you should now be able to get up and running and create a working external list that not only uses SQL Azure for your line-of-business (LOB) data, but also gives you a little jQuery veneer.

However, the question I’ve been wrangling with of late is how to leverage BCS in SP-O using a WCF service. Using a WCF service can be much more powerful than using the SQL Azure proxy because you can model many different types of data beyond SQL Azure: REST endpoints, in-memory data objects, entity-data model driven services, service bus-mediated connections to on-premises data, and so on.

I’m still working through a couple of more complex scenarios, but I wanted to get something out there as I’ve had a number of requests on this. Thus, in this post, I’ll show you a simple way to use WCF to create an external list with the two core operations: read list and read item. The reason you will want to use WCF is that you will at some point want to connect your own LOB back-end to the SP-O external list, and you’re more than likely going to want to mediate the loading of that data with a WCF service of some sort (otherwise, you’d just stick with a direct connection to SQL Azure). Now, if you’ve used the BDC Metadata Model templates in Visual Studio 2010, then you’ll be familiar with how you model the LOB data you’re trying to load into the external list by using the web methods that map to the CRUD operations. You will do the same in this post, though using a generic WCF service project template (or more precisely, a Windows Azure Cloud project with a WCF Service role). In a set of future posts, I’ll walk through more complex scenarios where you have full CRUD methods that are secured through certificates.

The high-level procedure to create an external list in SP-O that uses a cloud-based WCF service is as follows:

1. Create a back-end LOB data source that you’ll run your service against;

2. Create a WCF service and deploy to Windows Azure;

3. Assess the permissions in the Metadata Store; and

4. Create an external content type (ECT) in SharePoint Designer that digests your WCF service and creates the list for you.

LOB Data

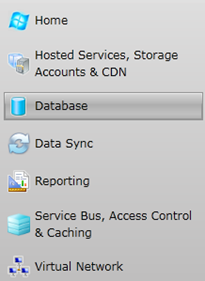

In this example, the LOB data is going to be SQL Azure. Yes, I know, but the back-end data source is less important than the connection to that data. That said, if you navigate to your Windows Azure portal (https://windows.azure.com/default.aspx) and sign in using your LiveID, you’ll see options similar to those below in your developer portal. (You need to have a developer account to use Windows Azure, and if you don’t you can get a free trial here: https://www.microsoft.com/windowsazure/free-trial/.)

Here are the general steps to create a new SQL Azure db:

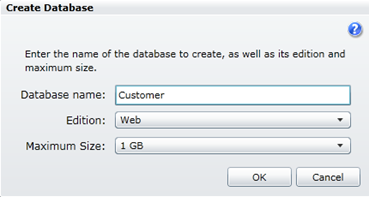

1. Click Database to display the database management capabilities in the Windows Azure portal.

2. Click Create to create a new database. Provide a name for the database and select Web edition and 1GB as the maximum size.

3. Click the Firewall Rules accordion control to manage your firewall rules. Note that you’ll need a rule here that covers the IP address of your machine so you can access the SQL Azure database.

4. After you create your SQL Azure database, you can navigate away from the portal and open SQL Server 2008 R2 Management Studio.

5. When prompted, provide the name of your server and enter the log-in information. Also, click the Options button to expose the Connection Properties tab and select Customers (or whatever you named your SQL Azure database) as the database to connect to. Click Connect. SQL Server Management Studio will connect to your new SQL Azure database.

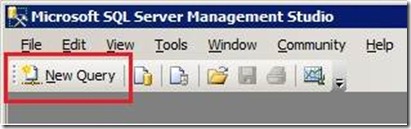

6. When SQL Server connects to your SQL Azure instance, click the New Query button as illustrated in the following image.

7. You now have a query window with an active connection to your account. Now that you have the Customers database, you need to create a table called CustomerData. To do this, type something similar to the following SQL script and click the Execute Query button:

    CREATE TABLE [CustomerData](
        [CustomerID] [int] IDENTITY(1,1) NOT NULL PRIMARY KEY CLUSTERED,
        [Title] [nvarchar](8) NULL,
        [FirstName] [nvarchar](50) NOT NULL,
        [LastName] [nvarchar](50) NOT NULL,
        [EmailAddress] [nvarchar](50) NULL,
        [Phone] [nvarchar](30) NULL,
        [Timestamp] [timestamp] NOT NULL
    )

8. You’ll now want to create a set of records for your new database table. To do this, type something similar to the following SQL script (adding different data in new records as many times as you’d like).

    INSERT INTO [CustomerData]
        ([Title], [FirstName], [LastName], [EmailAddress], [Phone])
    VALUES
        ('Dr', 'Ties', 'Arts', 'ties@fabrikam.com', '555-994-7711'),
        ('Mr', 'Rob', 'Barker', 'robb@fabrikam.com', '555-933-6514')

…

9. Eventually, you will have a number of records. To view all of the records you entered, type the following script and click the Execute Query button (where in this script Customers is the database name and CustomerData is the table name).

Select * from Customers.dbo.CustomerData

10. The picture below illustrates the type of results you would see upon entering this SQL script in the query window.

11. Close SQL Server 2008 R2 Management Studio, as you are now done adding records.

This gives you a pretty high-level view of how to create and populate the SQL Azure DB. You can get a ton more information from the Windows Azure Developer Training Kit, which can be found here: https://www.microsoft.com/download/en/details.aspx?displaylang=en&id=8396.

Now that you’re done with the SQL Azure DB, let’s move on to the cloud-based WCF service.

Creating the WCF Service

As I mentioned earlier, the WCF service needs to ‘model’ the data you’re pulling back from your LOB and exposing in your external list. Modeling the data means at a minimum creating a Read List method and Read Item method. Optional methods, and ones you’d arguably want to include, would be Create Method, Update Method and Delete Method. The WCF service you create will be using the Cloud template and be deployed to your Windows Azure account.
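
To make the idea of "modeling" a little more concrete, here is a rough sketch of what a contract covering all five operations might look like. Only GetCustomers and GetCustomer are actually built in this post; the interface name ICustomerCrudService and the CreateCustomer, UpdateCustomer, and DeleteCustomer signatures are hypothetical, shown purely for illustration. The CustomerRecord class mirrors the one created later in this walkthrough.

```csharp
using System.ServiceModel;

namespace SharePointCallingSvc
{
    // Hypothetical contract name; the post's real contract is ISharePointCallingService.
    [ServiceContract]
    public interface ICustomerCrudService
    {
        // Read List: returns every record (implemented in this post).
        [OperationContract]
        CustomerRecord[] GetCustomers();

        // Read Item: returns one record by its identifier (implemented in this post).
        [OperationContract]
        CustomerRecord GetCustomer(int CustomerID);

        // The three operations below are assumptions, not part of this post's service.
        [OperationContract]
        CustomerRecord CreateCustomer(CustomerRecord newCustomer);

        [OperationContract]
        void UpdateCustomer(CustomerRecord customer);

        [OperationContract]
        void DeleteCustomer(int CustomerID);
    }

    // The shape of the data handed back to the external list.
    public class CustomerRecord
    {
        public int objCustomerID { get; set; }
        public string objTitle { get; set; }
        public string objFirstName { get; set; }
        public string objLastName { get; set; }
        public string objEmailAddress { get; set; }
        public string objHomePhone { get; set; }
    }
}
```

Each operation in a contract like this would later be mapped to the matching ECT operation in SharePoint Designer, with the integer ID serving as the identifier.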

To create the WCF service:

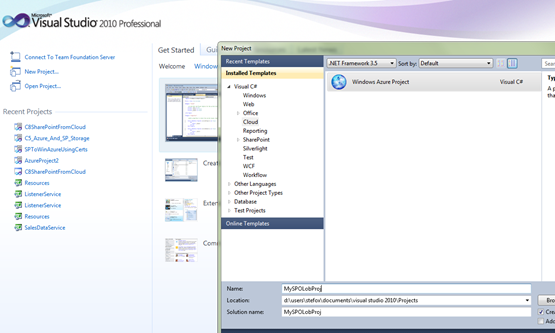

1. Open Visual Studio 2010 and click New Project. Select the Cloud option and provide a name for the project.

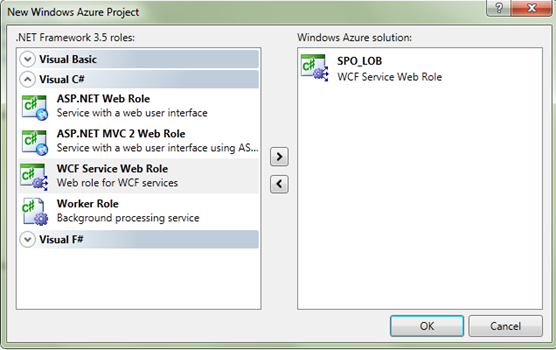

2. Select the WCF Service Web Role and click the small right-arrow to add the service to the cloud project. Click OK.

3. The cloud solution will create a new service project and the Windows Azure cloud project, which you use to deploy the service directly to your Windows Azure account.

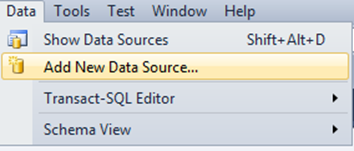

4. You’ll want to add the SQL Azure db to your project, so select Data on the file menu and select Add New Data Source.

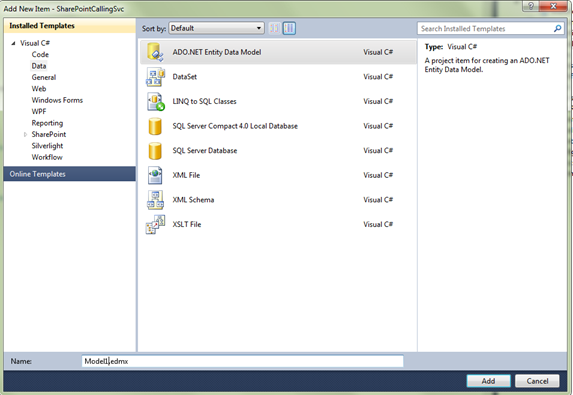

5. You can also right-click the main service project and select Add, New Item. Select Data and then select ADO.NET Entity Data Model.

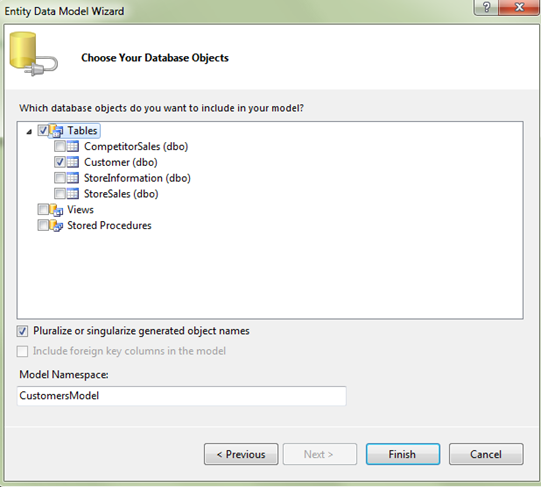

6. You’re then prompted to walk through a wizard to add a new database as an entity data model. In the first step, select Generate from Database. In the second, select New Connection and then connect to your SQL Azure instance. (You’ll need to either obfuscate the connection string or include it in your web.config before moving on. Given this is a sample, select Yes to include in your web.config and click Next.) Now, select the tables you want to include in your entity data model, and click Finish.

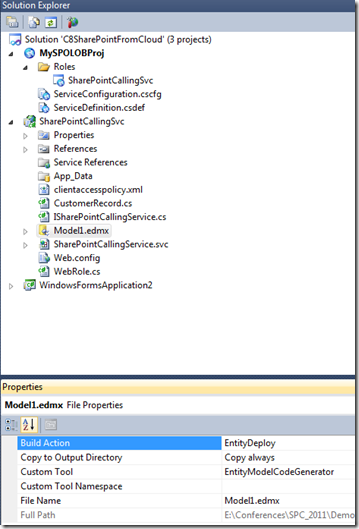

7. Make sure you set the Build Action of the entity data model to EntityDeploy and Copy to Output Directory to Copy always, else you’ll spend countless hours with delightfully vague errors to work through. This ensures your entity data model gets deployed to Windows Azure. (Note that you may also try setting Embedded Resource as the Build Action if you have deploy issues. I had an issue with this the other day and, when analyzing my assemblies using Reflector, noticed that some EDM resource files were missing. Setting Embedded Resource solved this problem.) You’ll also note that in the screenshot below, I renamed my services to be more intuitive than Service1. I also have a clientaccesspolicy.xml file, which is only necessary if you’re going to consume the service from, say, a Silverlight application.

8. Right-click the service project and select Add and then select Class. Provide a name for the class (CustomerRecord in this example), and then add the following properties to the class.

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Web;

    namespace SharePointCallingSvc
    {
        // The shape of each record returned to the external list.
        public class CustomerRecord
        {
            public int objCustomerID { get; set; }
            public string objTitle { get; set; }
            public string objFirstName { get; set; }
            public string objLastName { get; set; }
            public string objEmailAddress { get; set; }
            public string objHomePhone { get; set; }
        }
    }

9. In the service code, add a method that will read one item and a method that will retrieve all items from the SQL Azure (or our LOB) data store.

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Runtime.Serialization;
    using System.ServiceModel;
    using System.ServiceModel.Activation;

    namespace SharePointCallingSvc
    {
        [AspNetCompatibilityRequirements(RequirementsMode = AspNetCompatibilityRequirementsMode.Allowed)]
        public class SharePointCallingService : ISharePointCallingService
        {
            // Read List: returns every customer in the data store.
            public CustomerRecord[] GetCustomers()
            {
                // CustomersEntities, Customers, and Customer are the names generated
                // for my entity data model; adjust them to match your own model.
                using (CustomersEntities db = new CustomersEntities())
                {
                    // Run the query once, then map each entity to a CustomerRecord.
                    return db.Customers.ToList().Select(c => ToRecord(c)).ToArray();
                }
            }

            // Read Item: returns the customer with the given ID, or null if none exists.
            public CustomerRecord GetCustomer(int paramCustomerID)
            {
                using (CustomersEntities db = new CustomersEntities())
                {
                    Customer myItem = (from c in db.Customers
                                       where c.CustomerID == paramCustomerID
                                       select c).FirstOrDefault();

                    // FirstOrDefault returns null when no row matches the ID.
                    return myItem == null ? null : ToRecord(myItem);
                }
            }

            // Copies the entity's fields into the class exposed to the external list.
            private static CustomerRecord ToRecord(Customer item)
            {
                return new CustomerRecord
                {
                    objCustomerID = item.CustomerID,
                    objTitle = item.Title,
                    objFirstName = item.FirstName,
                    objLastName = item.LastName,
                    objEmailAddress = item.EmailAddress,
                    objHomePhone = item.Phone
                };
            }
        }
    }

10. Add the interface contract.

    using System;
    using System.Collections.Generic;
    using System.Runtime.Serialization;
    using System.ServiceModel;

    namespace SharePointCallingSvc
    {
        [ServiceContract]
        public interface ISharePointCallingService
        {
            // Maps to the Read List operation of the ECT.
            [OperationContract]
            CustomerRecord[] GetCustomers();

            // Maps to the Read Item operation of the ECT.
            [OperationContract]
            CustomerRecord GetCustomer(int CustomerID);
        }
    }

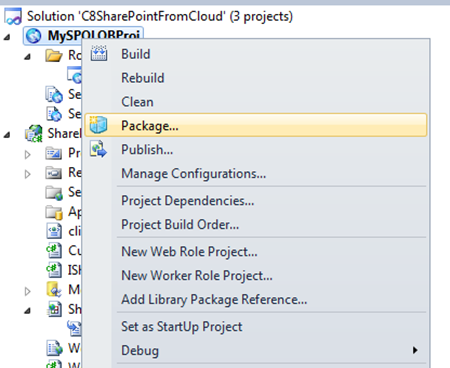

11. Right-click the Cloud project and select either Publish or Package. Publish is a more automated way of publishing your code to Windows Azure, but you need to configure it. Package is more manual. If you’ve never published an application to Windows Azure, select Package. Your solution will build and then Windows Explorer will open with the two built files—a configuration file and package.

12. Leave the Windows Explorer open for the time being and jump back to your Windows Azure portal.

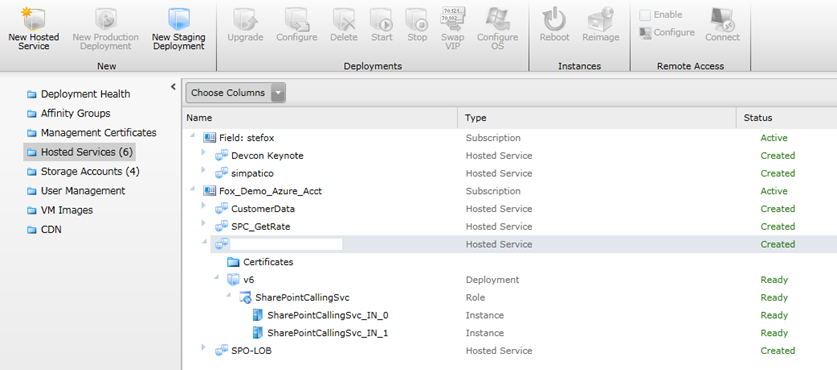

13. Above, you can see that I’ve got a new hosted service set up. If you don’t have one, click New Hosted Service in the ribbon and fill out the properties of the new hosted service (e.g. Name, URI prefix, deployment name, etc.). Click Deploy to production environment and then click Browse Locally to load the package and configuration files, which should still be open in Windows Explorer.

14. Click OK, and then go have a coffee; it’ll take a few minutes to fully deploy.

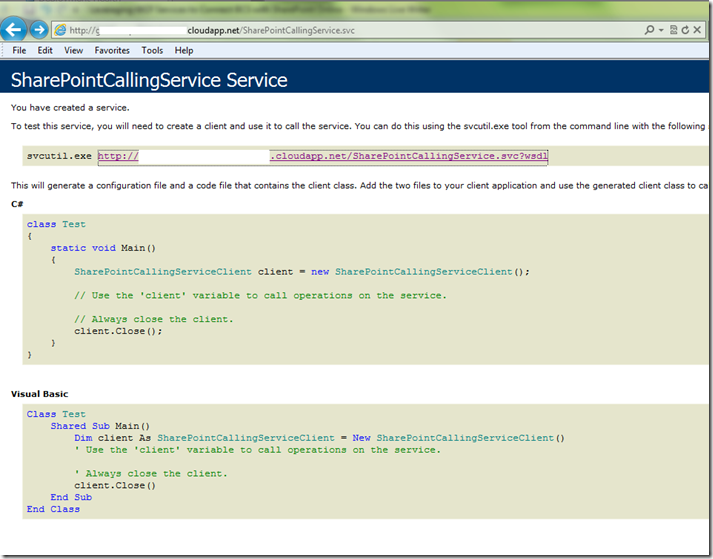

15. When it is deployed, you will be able to click on the service definition to retrieve the WSDL, which should reflect the two web methods you included earlier.

16. At this point, I would create a simple test app to make sure your service works as expected. If it does, your one method (GetCustomer) will take an ID and pass back a single record, and your other method (GetCustomers) will return all of the records in the LOB back-end.
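
As a starting point for that test app, here is a minimal console client sketch. It assumes the client carries its own copies of the contract and the CustomerRecord class (in the same SharePointCallingSvc namespace, so the serialized names line up), that the endpoint uses the binary-over-HTTP custom binding shown in the web.config later in this post, and that the service lives at the placeholder address http://myservice.cloudapp.net/myservice.svc. The ServiceSmokeTest class name is made up for this example; swap in your own address and names.

```csharp
using System;
using System.ServiceModel;
using System.ServiceModel.Channels;

namespace SharePointCallingSvc
{
    // Client-side copy of the service contract from this post.
    [ServiceContract]
    public interface ISharePointCallingService
    {
        [OperationContract]
        CustomerRecord[] GetCustomers();

        [OperationContract]
        CustomerRecord GetCustomer(int CustomerID);
    }

    // Client-side copy of the data class from this post.
    public class CustomerRecord
    {
        public int objCustomerID { get; set; }
        public string objTitle { get; set; }
        public string objFirstName { get; set; }
        public string objLastName { get; set; }
        public string objEmailAddress { get; set; }
        public string objHomePhone { get; set; }
    }

    // Hypothetical test harness; not part of the deployed service.
    public static class ServiceSmokeTest
    {
        public static void Main()
        {
            // Placeholder address (an assumption); httpTransport requires an http:// URL.
            var address = new EndpointAddress("http://myservice.cloudapp.net/myservice.svc");

            // Mirrors the customBinding in web.config: binary encoding over HTTP.
            var binding = new CustomBinding(
                new BinaryMessageEncodingBindingElement(),
                new HttpTransportBindingElement());

            var factory = new ChannelFactory<ISharePointCallingService>(binding, address);
            try
            {
                ISharePointCallingService client = factory.CreateChannel();

                // Read List: should return every record in the back-end.
                CustomerRecord[] all = client.GetCustomers();
                Console.WriteLine("GetCustomers returned {0} record(s).", all.Length);

                // Read Item: fetch the first record again by its ID.
                if (all.Length > 0)
                {
                    CustomerRecord one = client.GetCustomer(all[0].objCustomerID);
                    Console.WriteLine("GetCustomer({0}) returned {1} {2}.",
                        one.objCustomerID, one.objFirstName, one.objLastName);
                }

                factory.Close();
            }
            catch (Exception)
            {
                // Abort rather than Close when the channel may be faulted.
                factory.Abort();
                throw;
            }
        }
    }
}
```

You could just as easily add a service reference in Visual Studio and let it generate the proxy; building the channel by hand simply makes the binding assumptions explicit.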

Assuming your service works fine, you’re now ready to move on to the next step, which is making sure you’ve set the permissions for your ECT.

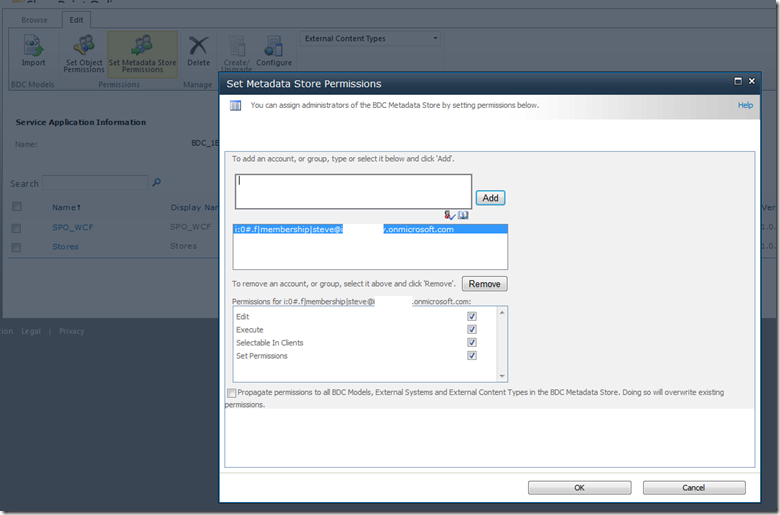

Setting the Permissions for the External Content Type

To set the permissions on the Metadata Store, where the ECTs are stored, navigate to the Business Data Connectivity option in your SP-O portal and select Set Metadata Store Permissions. Type the name of the user you want to have permissions for the ECT, click Add, and then set the explicit permissions. Click OK when done.

You’re now ready for the final step: creating the external content type using the simple service.

Creating the External Content Type

Creating the external content type is similar to how you did it using SharePoint Foundation and SharePoint Designer; you create a new ECT and SharePoint Designer saves it directly to the site for you.

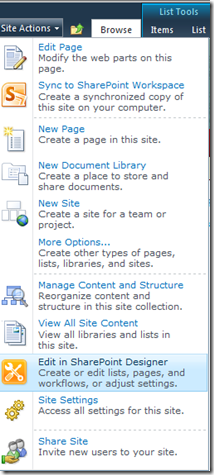

1. Navigate to your SP-O site and then click Site Actions and then select Edit in SharePoint Designer.

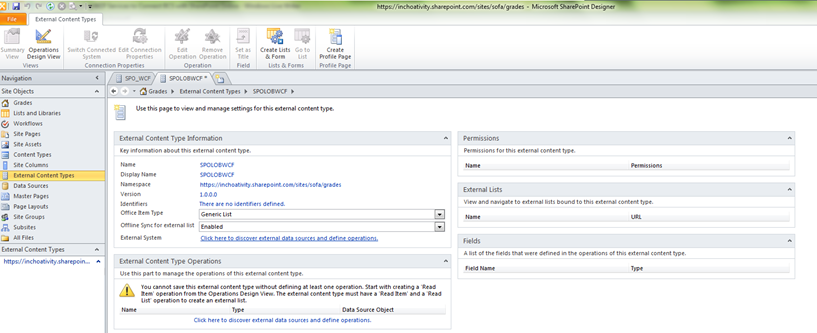

2. Click External Content Types in the left-hand navigation pane.

3. Click External Content Type in the ribbon. Add a Name and a Display Name and leave the other options defaulted. Click the ‘Click here to discover…’ link.

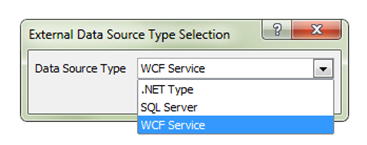

4. In the External Data Source Type Selection, select WCF Service from the drop-down and then click OK.

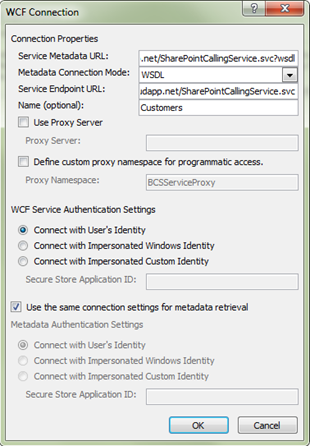

5. You now add metadata about the WCF service in the WCF Connection dialog. For example, add the service metadata URL (e.g. https://myservice.cloudapp.net/myservice.svc?wsdl), select WSDL as the metadata connection mode, and then add the service URL to the Service Endpoint URL (https://myservice.cloudapp.net/myservice.svc). Add an optional name. Click OK, and your service should resolve, and you’ll now be able to add the Read Item and Read List operations.

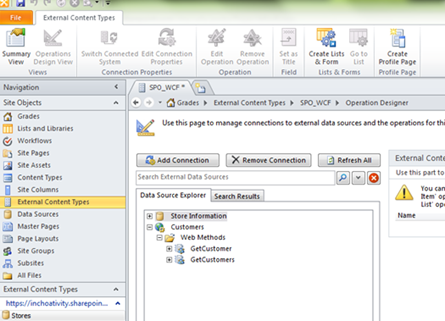

6. Below, you can see that I now have the two web methods that I created exposed in my data connection—so I can now create the ECT and save it to the Metadata Store.

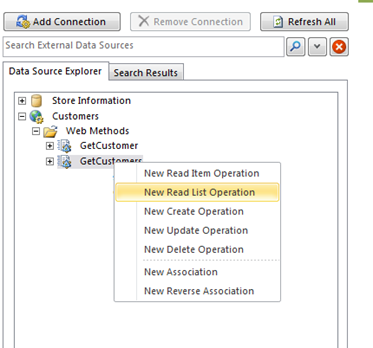

7. To do this, right-click on each of the methods in sequence. When right-clicking the GetCustomer method, make sure you select the Read Item operation and follow the wizard. When right-clicking the GetCustomers method, select Read List as the operation, as is shown below.

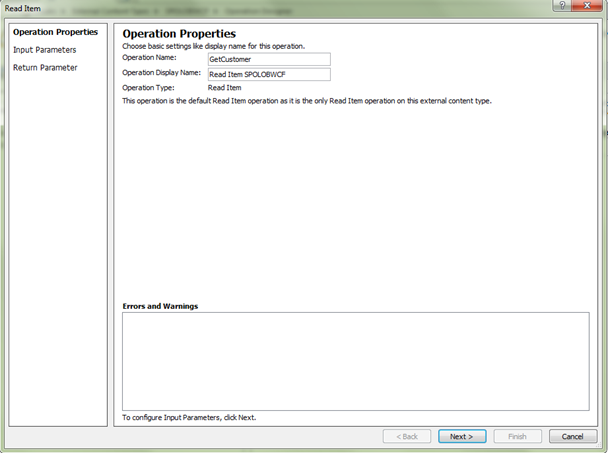

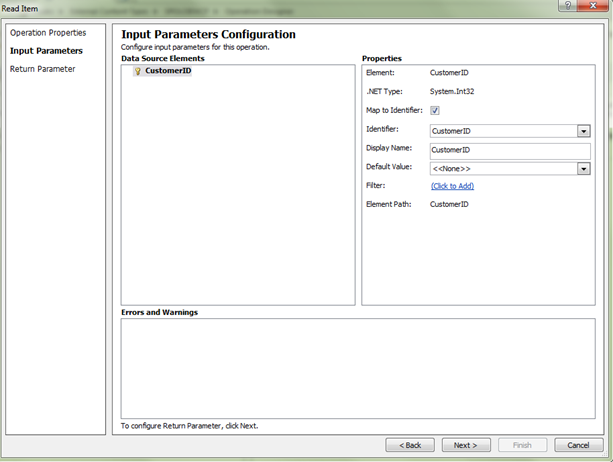

8. Both times you right-click and select an operation, a wizard will open to guide you through the process of creating that operation. For example, when you right-click and select New Read Item Operation, you’ll be prompted with a first step where you simply click Next. In the next step, you’ll then need to map the ID in your web method as the Identifier. You then click Next and then Finish.

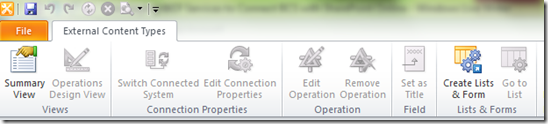

At this point, you’ve created both the Read Item and Read List operations and can click the Create Lists & Form button to create a new list using that ECT.

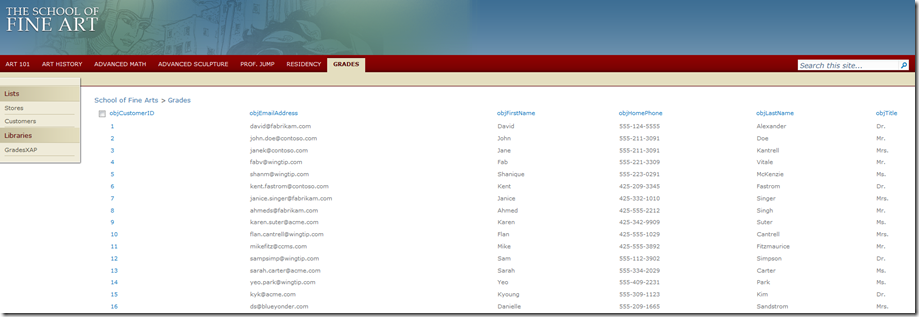

The result is a new external list whose column headers reflect the property names of the CustomerRecord class.

And voila, you’re now done. You’ve created an external list using BCS for SP-O using a WCF service talking to a SQL Azure back-end.

To reiterate, we used the WCF service because we wanted to model our own return data using the web methods in the service. On the other side of that service could be many different types of LOB data. While you have the Metadata Store permissions that you assessed and set, the service itself is unsecured: anyone who knows its URL can call it. That is fine for getting a service working to test out the modeling; for production code, though, you may want to provide a more secure channel that comes with username/password authentication and is protected using a certificate/key.

A Couple of Tips

When deploying services to Windows Azure, test often. I always test locally before I move to the cloud. You can build and test in the local emulator environment, or you can deploy the service to IIS to test it out there.

Also, I am not a bindings expert in WCF, so this is an area I’m looking into right now to try and understand the best binding method for the service. For example, the serviceModel elements from my web.config are below, and I’m in the process of trying out different bindings—to manage both secure and non-secure WCF-based web services.

…

    <system.serviceModel>
      <client />
      <bindings>
        <customBinding>
          <binding name="WCFServiceWebRole1.CloudToOnPremForwarder.customBinding0">
            <binaryMessageEncoding />
            <httpTransport />
          </binding>
        </customBinding>
      </bindings>
      <services>
        <service behaviorConfiguration="WCFServiceWebRole1.CloudWCFServiceBehavior" name="SharePointCallingSvc.SharePointCallingService">
          <endpoint address=""
                    binding="customBinding"
                    bindingConfiguration="WCFServiceWebRole1.CloudToOnPremForwarder.customBinding0"
                    contract="SharePointCallingSvc.ISharePointCallingService" />
          <endpoint address="mex" binding="mexHttpBinding" contract="IMetadataExchange" />
        </service>
      </services>
      <behaviors>
        <serviceBehaviors>
          <behavior name="WCFServiceWebRole1.CloudWCFServiceBehavior">
            <useRequestHeadersForMetadataAddress>
              <defaultPorts>
                <add scheme="http" port="81" />
                <add scheme="https" port="444" />
              </defaultPorts>
            </useRequestHeadersForMetadataAddress>
            <serviceMetadata httpGetEnabled="true" />
            <serviceDebug includeExceptionDetailInFaults="true" />
          </behavior>
        </serviceBehaviors>
      </behaviors>
      <serviceHostingEnvironment aspNetCompatibilityEnabled="true" multipleSiteBindingsEnabled="true" />
    </system.serviceModel>

…

Lastly, if you get any ‘metadata resource’ errors, always make sure you’ve set the right properties on the entity data model. The resource files for my entity data model were not being generated and deployed, so I got this error a couple of times.
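
If you’d rather not open Reflector to check this, a few lines of reflection can list what is embedded in an assembly. The sketch below is only an illustration: the EdmResourceCheck class and FindEdmResources method are names I made up, and it assumes your model is set to embed its .csdl, .ssdl, and .msl artifacts in the output assembly. It inspects the executing assembly as a stand-in; point it at the assembly that holds your entity data model instead.

```csharp
using System;
using System.Linq;
using System.Reflection;

// Hypothetical helper for checking whether EDM artifacts were embedded.
public static class EdmResourceCheck
{
    // Returns the embedded resource names that look like EDM artifacts.
    public static string[] FindEdmResources(Assembly assembly)
    {
        var extensions = new[] { ".csdl", ".ssdl", ".msl" };
        return assembly.GetManifestResourceNames()
            .Where(name => extensions.Any(
                ext => name.EndsWith(ext, StringComparison.OrdinalIgnoreCase)))
            .ToArray();
    }

    public static void Main()
    {
        // Stand-in target (an assumption): replace with the assembly containing your model,
        // for example typeof(CustomersEntities).Assembly.
        string[] found = FindEdmResources(Assembly.GetExecutingAssembly());

        if (found.Length == 0)
        {
            Console.WriteLine("No EDM resources are embedded in this assembly.");
        }
        else
        {
            foreach (string name in found)
            {
                Console.WriteLine(name);
            }
        }
    }
}
```

An empty result for the assembly that should contain the model would be consistent with the missing resource files I ran into.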

What’s Next?

As I mentioned earlier in the blog, I’ll next follow up with the other web methods/operations that you’d want to build into the cloud-based WCF service and will hopefully get a chance to discuss how to manage secure connections using certificates. It’d also be great to hear from other folks who are banging away at this. I know the MS writers are working hard to get some docs out on it, but it’s always good to test out different scenarios to take the BCS/SP-O functionality for a good test-run given the functionality is there now.

Happy coding.

Steve

@redmondhockey