Build Completely, Test Completely, Deploy Completely

There seems to be no clearer sign of a maturing project than the ability to build, test, and deploy daily. The daily build is as close to a universal cure as any process change I can bring to a troubled project. It helps developers by providing a trusted method for integration testing. It helps ensure the solution is stable and installable for automated feature, integration, and regression testing, and it baselines and versions the solution for long-running stress, load, and performance tests. Seems like a win for everyone. That said, I am surprised how often teams become focused on the "daily" part of daily builds and lose sight of the "completely" requirement.

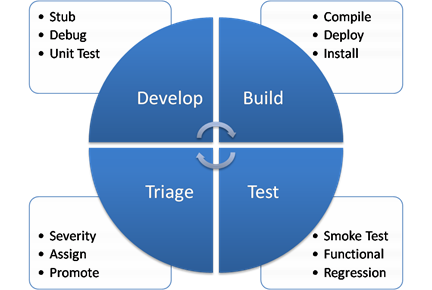

It takes a serious commitment for a program, and a huge commitment for a complex program, to get the daily build going smoothly. The diagram here is a high-level view of roles and activities. Every part of the team is involved. They need to synchronize and support each other. There are few clearer examples of software being a true team sport. It's clean. It's balanced. And done poorly, it will confound even the most seasoned managers, who miss the troubles it can easily hide.

In practice, the developers must check in working features, then compile and smoke test a deployable version of the solution every day. The key here is that the build need not be functionally complete, but each function included must, by the developers' standards, be ready for test. Not every build needs to be tested. Along the same lines, the testers take the most recent build and test it completely. If the testing process takes more than one day, and it is more likely than not, no problem. When they are done they grab the most recent build and let the builds in between hit the floor. Once a build passes feature and regression testing it becomes a candidate for the long-running stress, load, and performance tests. Again, not every build that passes test is used, but once a build clears stress, load, and performance it is a candidate for release to users.

Once a team matures to the point where the build cycle is running smoothly it should always have a build version ready to test, another ready for load, stress, and performance test, and a third ready for release.
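The promotion rules described above can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the `Stage` names, the `Build` record, and the helper functions are hypothetical and do not come from any particular build tool.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Stage(Enum):
    BUILT = "built"        # compiled and smoke tested by the developers
    TESTED = "tested"      # passed feature and regression testing
    HARDENED = "hardened"  # passed stress, load, and performance testing
    REJECTED = "rejected"  # failed at some stage


@dataclass
class Build:
    number: int
    stage: Stage = Stage.BUILT


def next_to_test(builds: List[Build], last_tested: int) -> Optional[Build]:
    """Pick the newest untested build; the builds in between are skipped."""
    newer = [b for b in builds
             if b.stage is Stage.BUILT and b.number > last_tested]
    return max(newer, key=lambda b: b.number, default=None)


def newest_at(builds: List[Build], stage: Stage) -> Optional[Build]:
    """Return the most recent build that has reached the given stage."""
    at_stage = [b for b in builds if b.stage is stage]
    return max(at_stage, key=lambda b: b.number, default=None)


# Day 1: the testers pick up build 1 and spend three days on it.
builds = [Build(1)]
current = next_to_test(builds, last_tested=0)
current.stage = Stage.TESTED

# Meanwhile builds 2, 3, and 4 arrive. The testers take 4 and skip 2 and 3.
builds += [Build(2), Build(3), Build(4)]
following = next_to_test(builds, last_tested=current.number)
print(following.number)
```

In a mature cycle, `newest_at` would return a build for each of `BUILT`, `TESTED`, and `HARDENED` at any given moment: one ready to test, one ready for stress and load, and one ready for release.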

If developers fail to complete a feature, or to unit test and integration test their code completely, assuming the build will find any issues, they are most regrettably right. The build will break. The entire team will jump like groundhogs from their holes waiting to hear who is responsible. The culpable coder or coders will be informed and will repair or remove their code so the build can continue. They win.

If you are a developer looking to hide the fact that you can't get your code to run, you have just been awarded more time, and as a bonus you are disconnected from the team. Now you can really go off on your own, work really hard, and magically come back with a fix. The buildmaster is taking most of the heat and the next day, if it still doesn't build... well... just do it again. Since the code isn't making it to test there are no defects to track and the dev is free and clear. For a while.

Partial builds drive great metrics: short build times, day-over-day deployment success, and quick response to developer requests. Partial builds promoted to test hide everything and make any metrics you thought you were managing with useless. Building a modern program completely frequently entails compiling code, building orchestrations, merging scripts, and meeting any number of platform- or server-based requirements. The buildmaster has to aggregate the output of the build and create installable media which, when executed, must move bits to their target servers, run installers, reset configuration files, and verify success. The build must complete in total. All or nothing. Finding a bad config during installation requires a new complete build. Oh sure, you could just go to the target machine and fix it. If you are building daily it will just be broken again tomorrow. Troubled projects routinely fail to recognize the breadth of the build activity and always fail to execute with any consistency.
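As a rough sketch of "all or nothing", the runner below treats the build as a single ordered list of steps and reports failure for the whole build as soon as any one step fails. The step names and the `run_complete_build` helper are hypothetical, chosen only to mirror the activities listed above.

```python
from typing import Callable, List, Tuple

# A step is a name plus a callable that returns True on success.
Step = Tuple[str, Callable[[], bool]]


def run_complete_build(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run every step in order; the first failure fails the whole build."""
    completed: List[str] = []
    for name, action in steps:
        try:
            ok = action()
        except Exception:
            ok = False  # a crashing step counts as a failed step
        if not ok:
            # Nothing is promoted: the caller must fix the source and rebuild.
            return False, completed
        completed.append(name)
    return True, completed


# Hypothetical steps; real ones would call the compiler, installers, and so on.
steps: List[Step] = [
    ("compile", lambda: True),
    ("package installable media", lambda: True),
    ("run installers", lambda: True),
    ("reset configuration files", lambda: False),  # simulated bad config
    ("verify success", lambda: True),
]

succeeded, completed = run_complete_build(steps)
print(succeeded, completed)
```

The design choice that matters is that a bad config is fixed at the source and followed by a fresh complete build, not patched on the target machine.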

Testing completely is a touchy subject. Many an agilist will say test as a separate role or activity is bad. To some degree I agree. Testing should happen early and often. The team owns the code, so everyone can and should test everyone's code. Test first drives scope control. All good things. Unfortunately they are not always enough. Programs require integration and regression testing, which address conditions not defined in test plans, such as mixing letters and numbers in a date field (a simplistic example, I know). Also, long-running tests such as stress and load need to happen in parallel on build versions that pass all of the predecessor tests. The point is that test takes a lot of resources, both human and machine. Troubled projects frequently look to cut corners. They may choose to test only the most recent change sets, or just the patch but not the full version of a product. They may assume the test plans are always complete and skip exploratory testing. No matter what form the poison takes, anything less than complete testing is poison. It destroys any value testing measures may have, it deludes the team by reporting quality incorrectly, and it eventually erodes the confidence of stakeholders and managers.
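The date field example above is easy to turn into a check. The sketch below is illustrative only: `parse_date_field` is a hypothetical stand-in for whatever the application under test actually does, and the `MM/DD/YYYY` format is my assumption.

```python
from datetime import date, datetime
from typing import Optional


def parse_date_field(text: str) -> Optional[date]:
    """Return a date for well-formed MM/DD/YYYY input, otherwise None."""
    try:
        return datetime.strptime(text.strip(), "%m/%d/%Y").date()
    except ValueError:
        return None


# Inputs a test plan would likely cover, and ones it might not.
planned = ["01/15/2024", "12/31/1999"]
unplanned = ["1a/15/2024", "01/xx/2024", "Jan 15 2024", "", "13/01/2024"]

for text in planned:
    assert parse_date_field(text) is not None

for text in unplanned:
    assert parse_date_field(text) is None
```

A handful of cases like these is cheap to keep in the regression suite once exploratory testing has found them.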