Presentation on Visual Studio Database Projects Integration with Visual Studio Team Foundation Server

This post contains the slide deck from a recent presentation on how to integrate Visual Studio Database Projects with Visual Studio Team Foundation Server. We have also recently published an accompanying blog with extended content, details and scenarios: https://blogs.msdn.com/b/ssdt/archive/2014/07/24/sql-server-database-projects-and-team-foundation-build.aspx.

(Note: A link to the complete slide deck can be found at the bottom of the post)

SQL Server tooling inside Visual Studio is a broad topic covering plenty of great functionality. In this presentation we will focus on integrating database projects with TFS and using Database Unit Testing. There are plenty of existing presentations covering the other parts of the SQL tooling experience. |

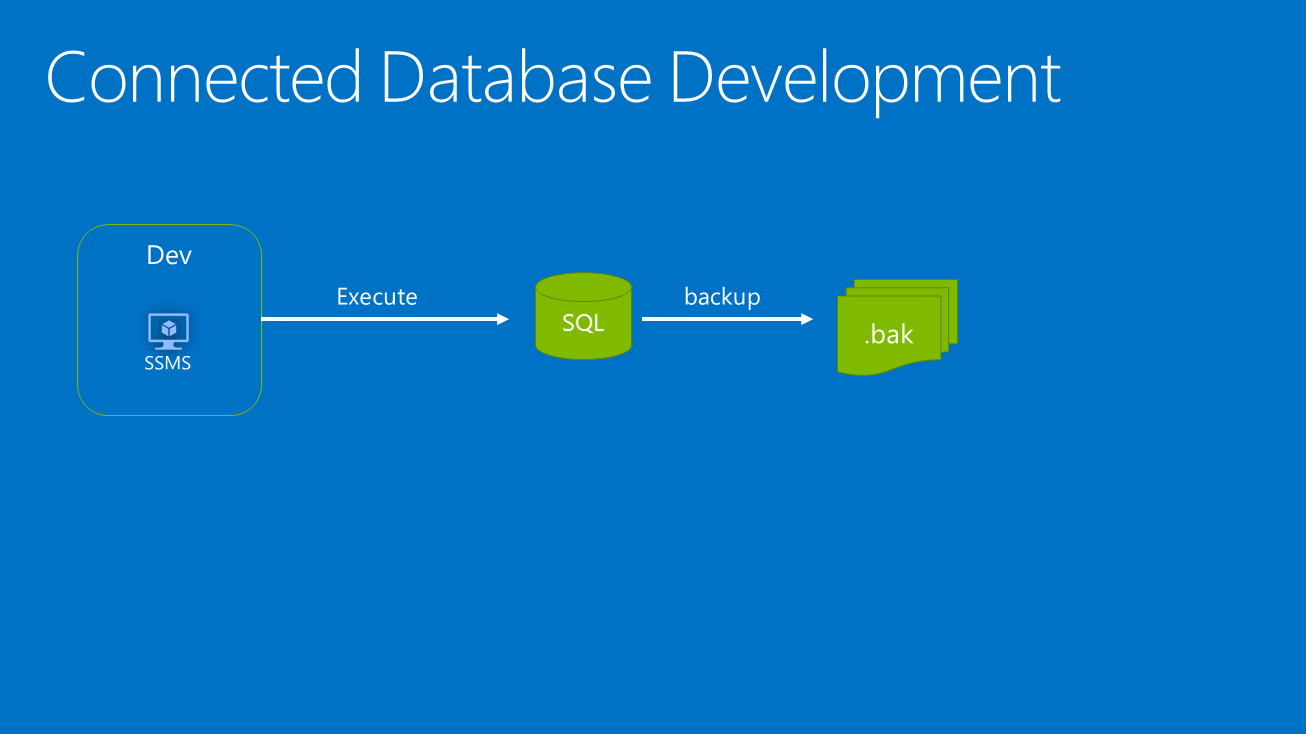

To understand the benefits of using project-based development it helps to examine common development practices and explain the benefits and drawbacks of each one. Let’s start with simple, connected based development experience using SSMS with database changes occurring directly against the database. In this case the database is the source of truth and periodic backups are used to restore the DB if there’s a mistake / an error occurs. This is popular due to the speed of development (make a change and it is instantly applied), but has a number of drawbacks. For instance it’s hard to understand what changes have occurred, why they were applied, or even what the overall structure is. One developer might have removed the View or Table a stored procedure calls but this won’t be noticed until you call into it. There’s also a lack of separation between development and deployment, which impacts the ability to migrate the DB to other servers among other things. So this has some benefits and lots of drawbacks. |

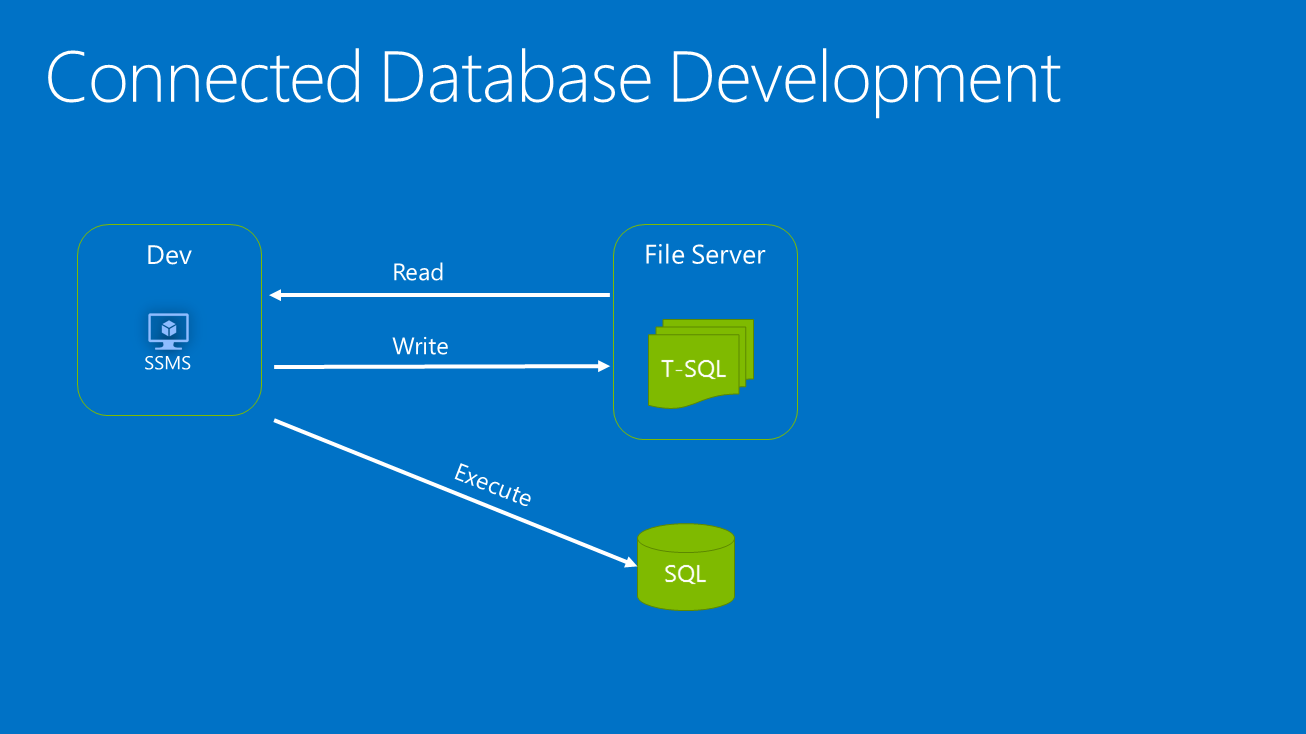

Moving to a more advanced, and likely more common scenario in many development environments, a lot of developers continue to use connected development in SSMS but with the use of a set of scripts that represent the database creation and modification steps needed to get it into its current state. In this example, it’s common to define the initial state (v1) of the DB, then have a set of ALTER statements that move that to v2, another for v3, etc. This supports developing against one DB and then deploying changes to a staging server before deployment. It’s a definite step up from the more basic version of connected development and many teams use this today. It does have drawbacks too:

These drawbacks are always going to be there when using pure connected development. It’s a reason we feel there are strong benefits to using an offline, project-based development pattern just like in other languages. |

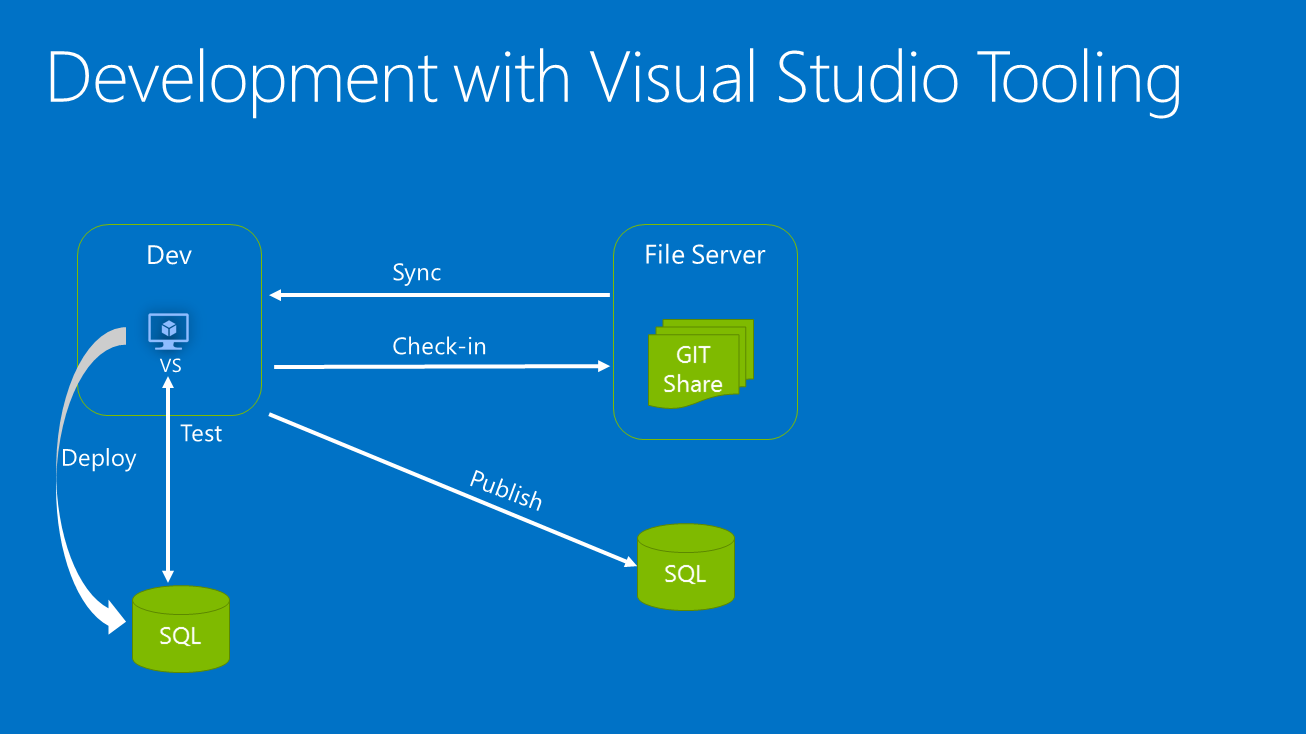

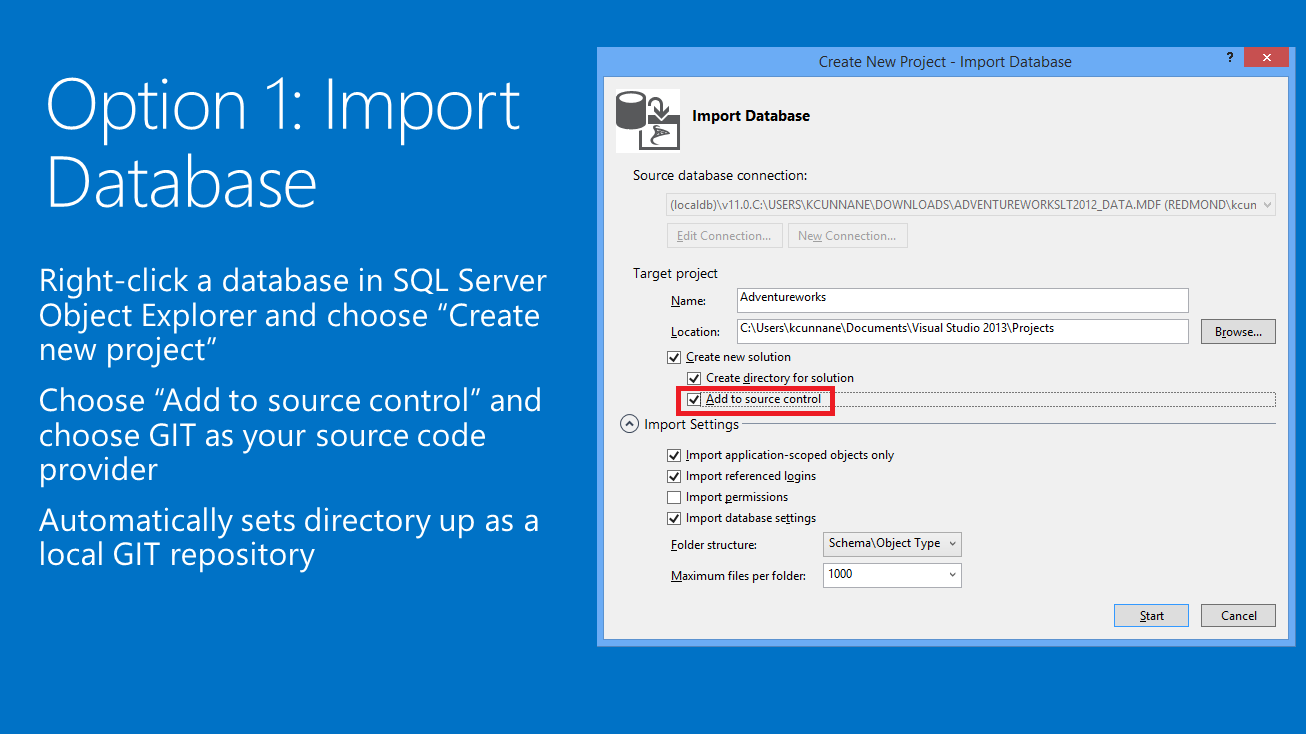

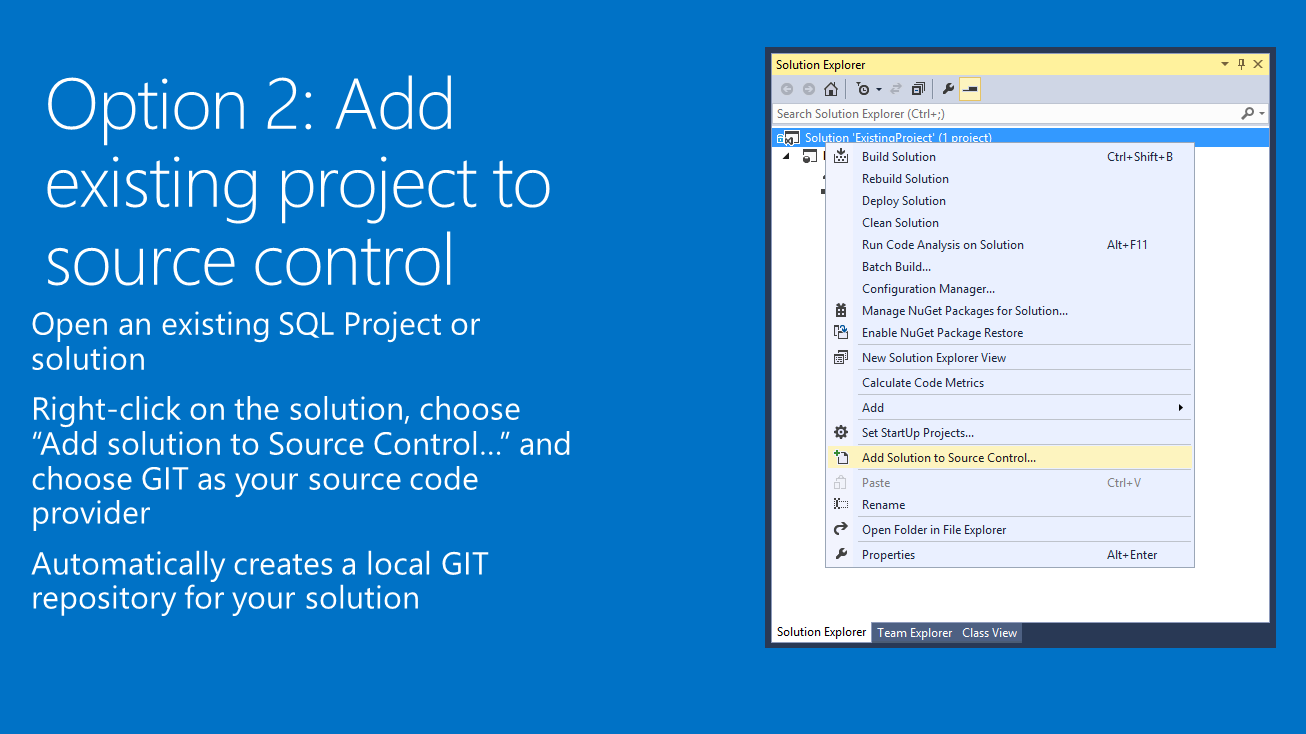

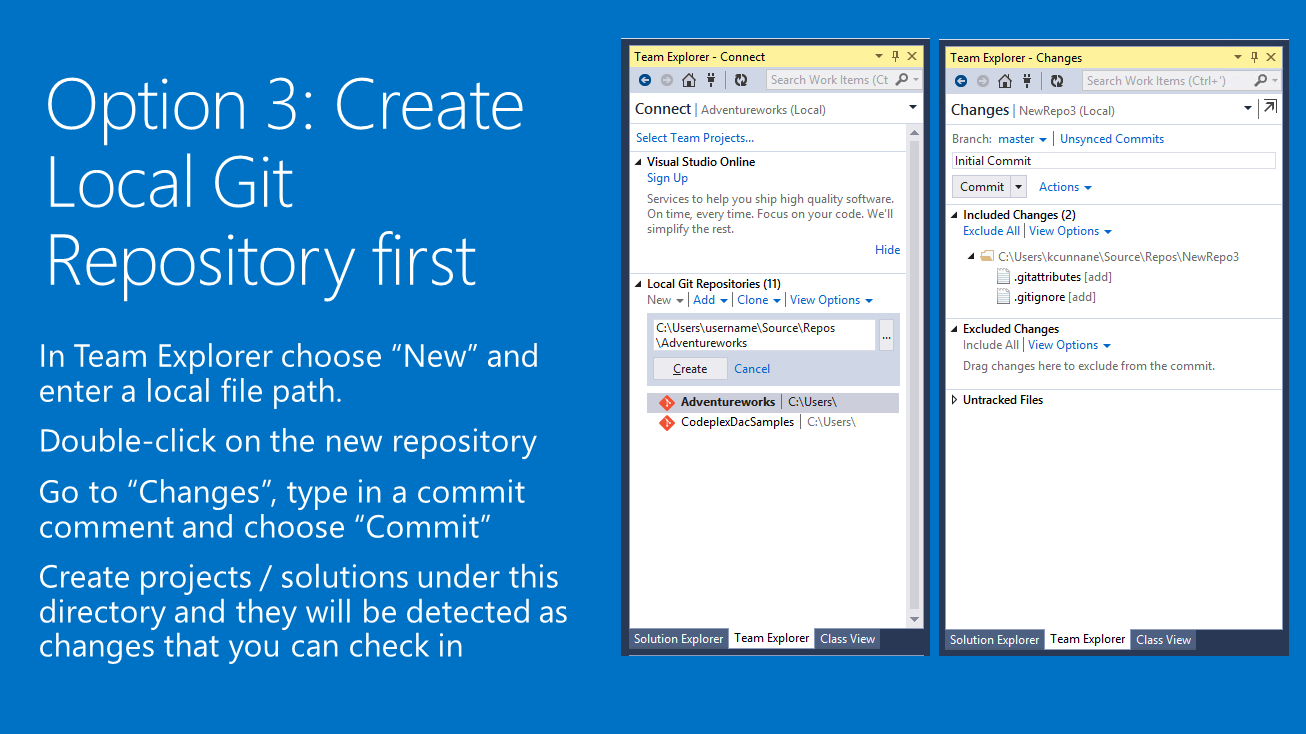

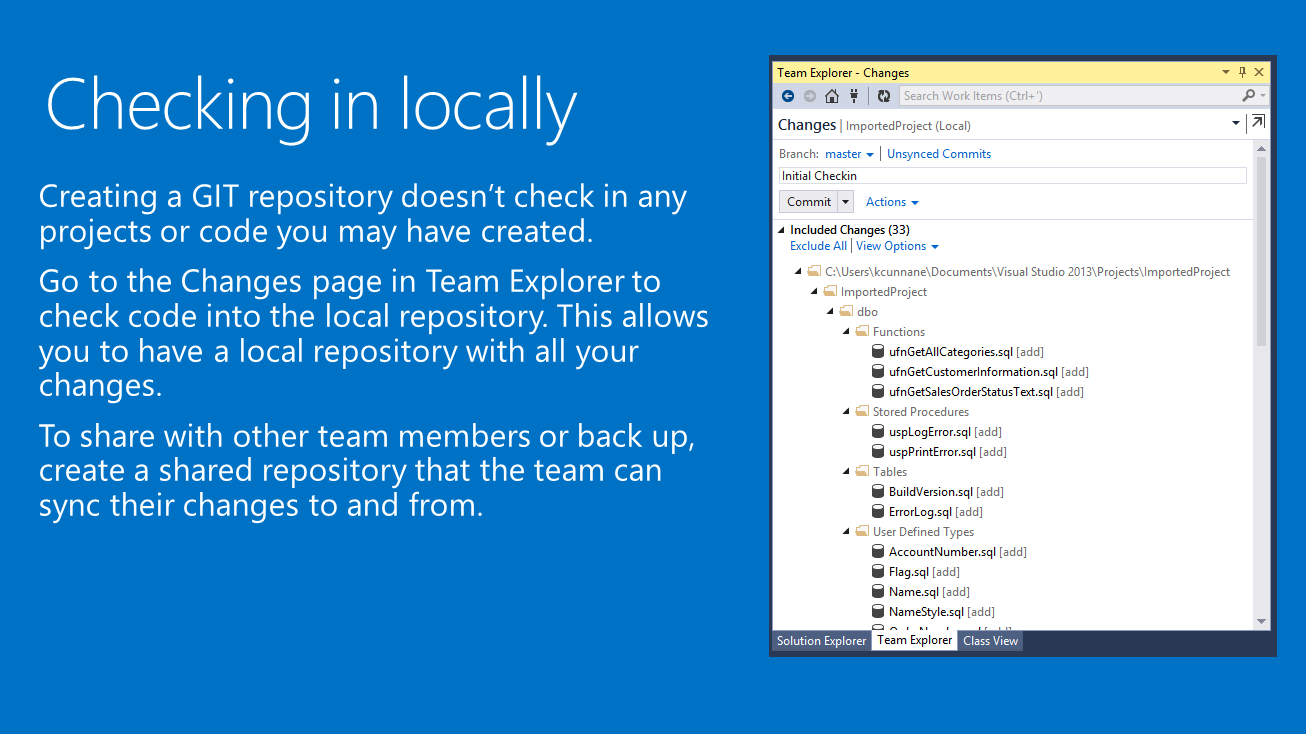

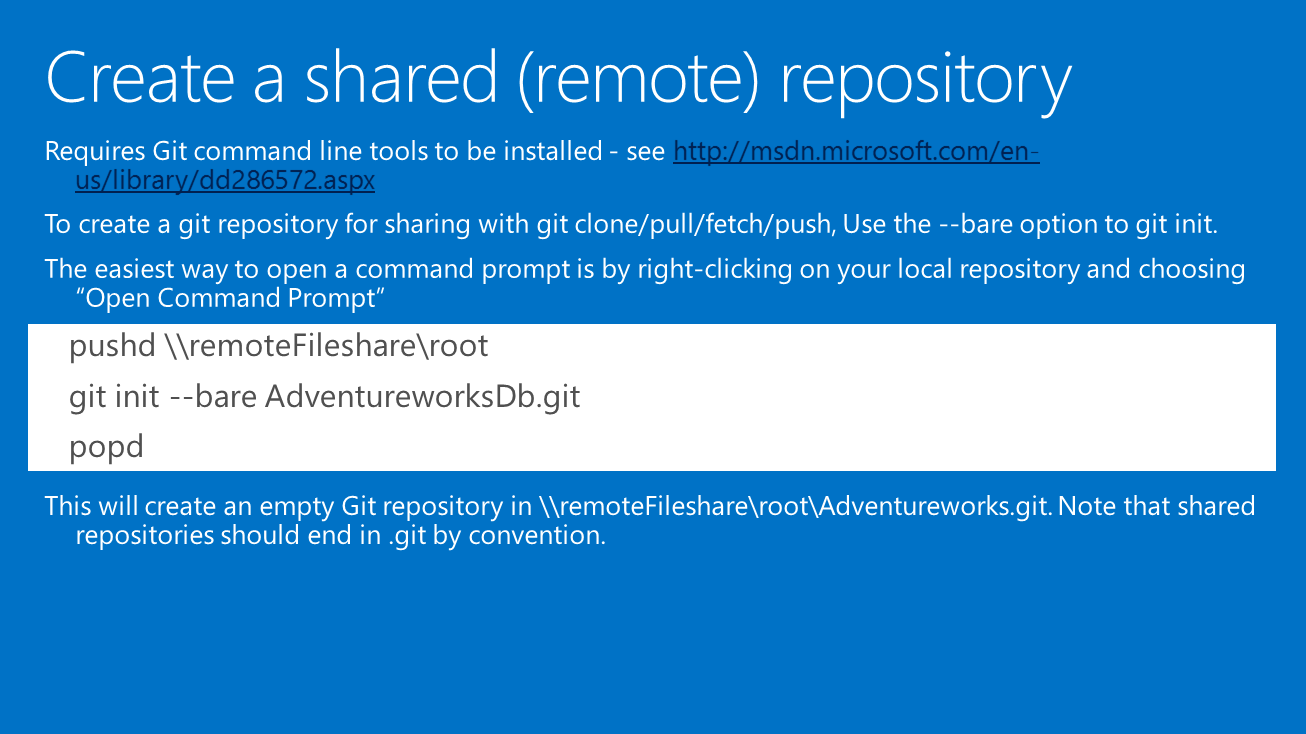

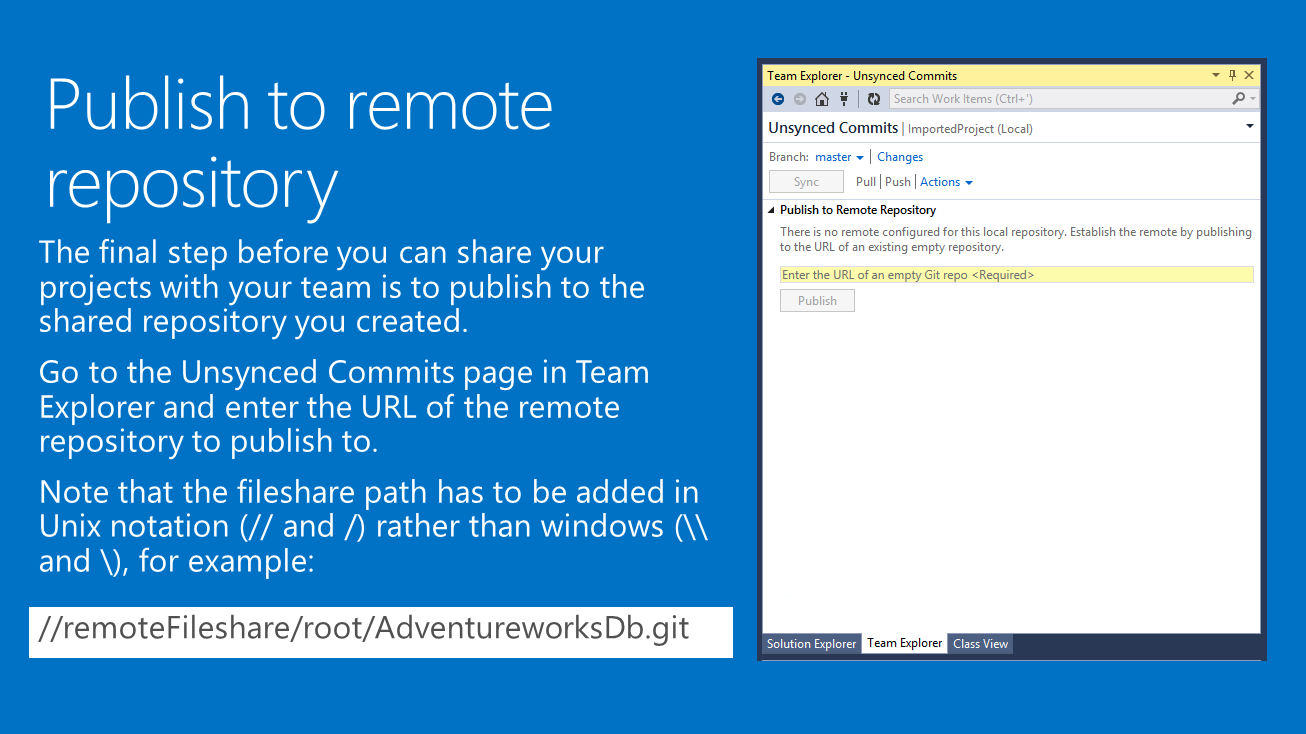

Here’s what a simple project-based development scenario looks like in Visual Studio, suitable for 3-5 developers. The previous set of incremental deployment scripts on a file share somewhere are replaced with only using pure Data Definition Language (DDL) describing how a table / view etc. should look. This has a big immediate benefit since you can see exactly how a given table should look for any version of your database. The changes (why they changed, what the change was) are stored by use of source control – Git in this example. That has built-in functionality for showing what changed, and commit comments explain why. You may be wondering how this works moving from v1 -> v2 -> v3? Before you needed incremental scripts, how does that work here? The answer is that the DacFx deployment technology used by our SQL tooling automatically generates those scripts for you. When developing and testing locally you’ll be making lots of small changes which are automatically applied (try hitting F5 on a database project to see your latest changes pushed to a local development DB, for instance). When publishing full changes to a staging server, you can choose to script out those changes for validation by a DBA or directly publish the changes using Visual Studio / our SqlPackage command line tools. In addition, now that the entire DB structure is in a project you get build-time validation to detect issues such as the broken stored procedure we would’ve missed in the connected world. This is really invaluable as a tool and can significantly improve long term maintainability. The big benefits here are therefore that versioning becomes much less of a concern, you get lots of extra validation (build time, using the local deployment loop, and via validation of the scripts generated for full publish), and your change information is documented and stored in a consistent manner. |

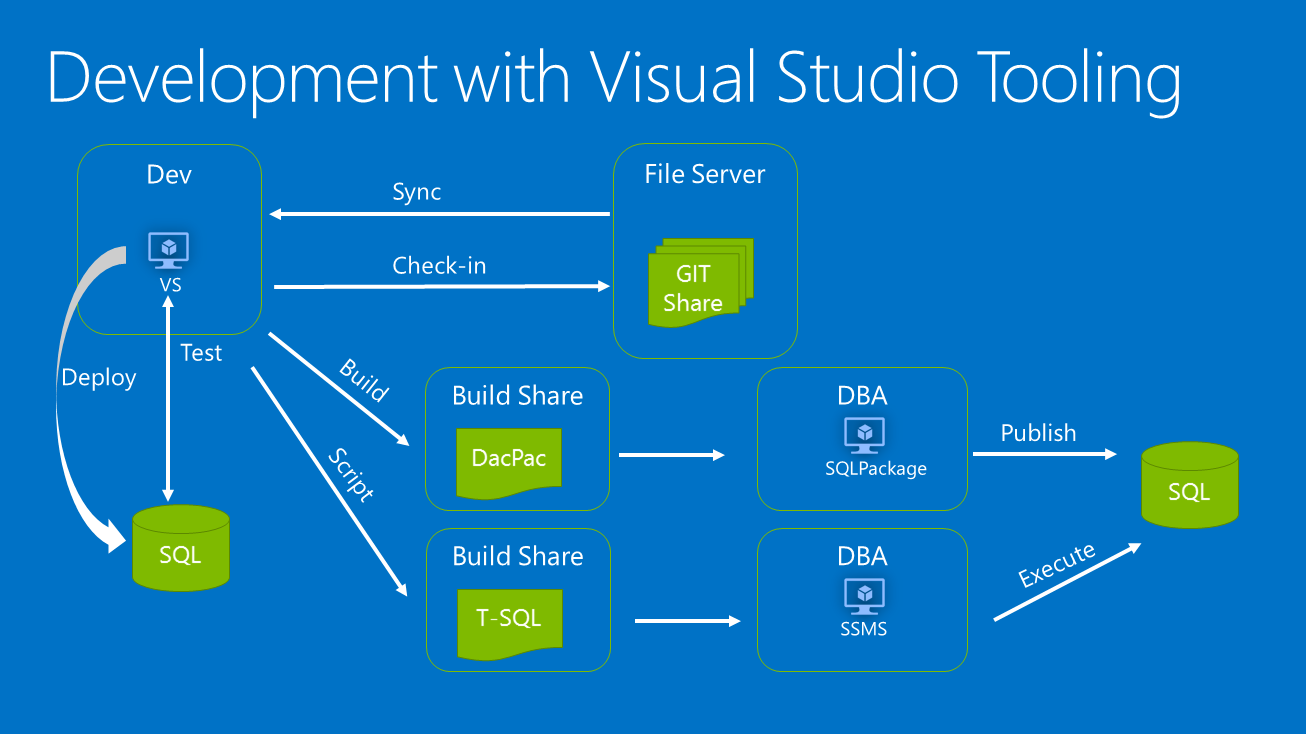

Getting to a more fully-fledged development pipeline you may wish to separate out the Developer and DBA roles. Here we go through an example of that, the only real change being that the developer outputs a Dacpac (a single file representing the expected database state, usable to deploy across Visual Studio, SSMS and our SqlPackage command line tooling) or a set of pre-build scripts build against the expected target server state, then hands these off to a DBA for deployment to the staging server. This is a common scenario in many companies where devs are not given access to staging / production servers. The tooling handles this well and readily supports scenarios such as this. |

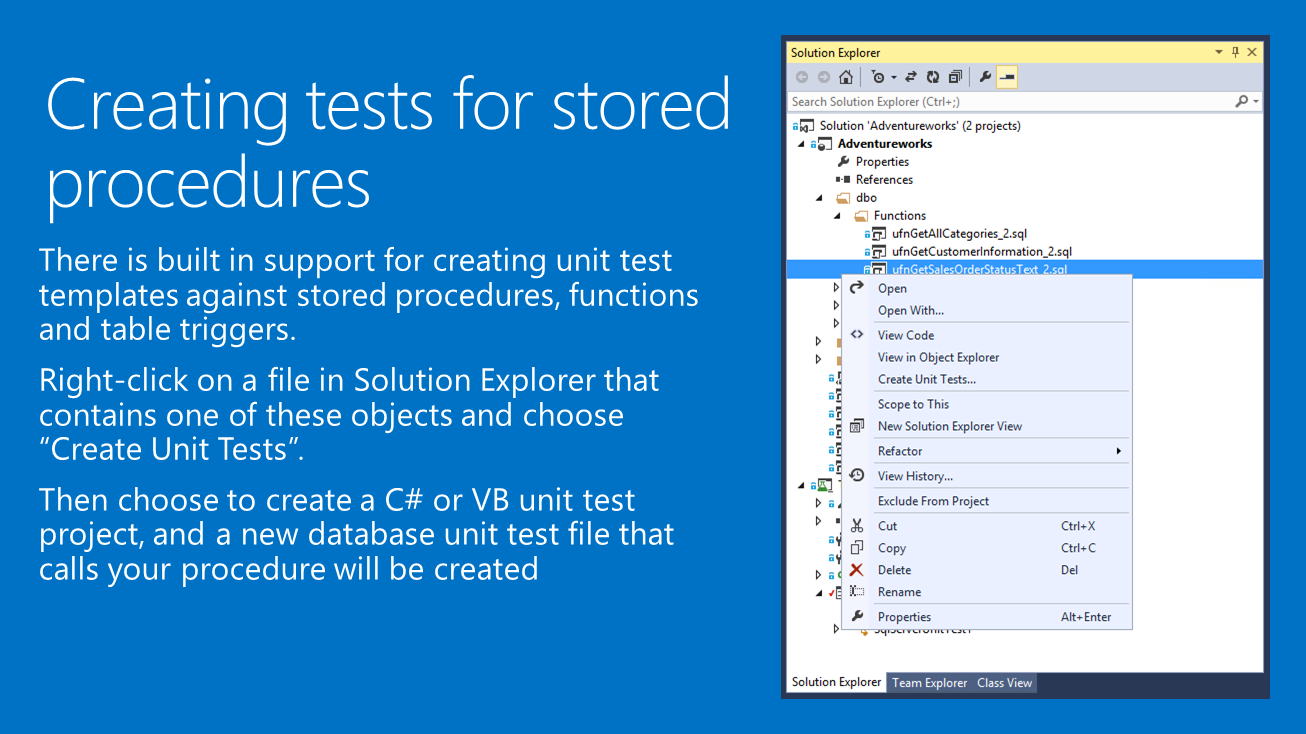

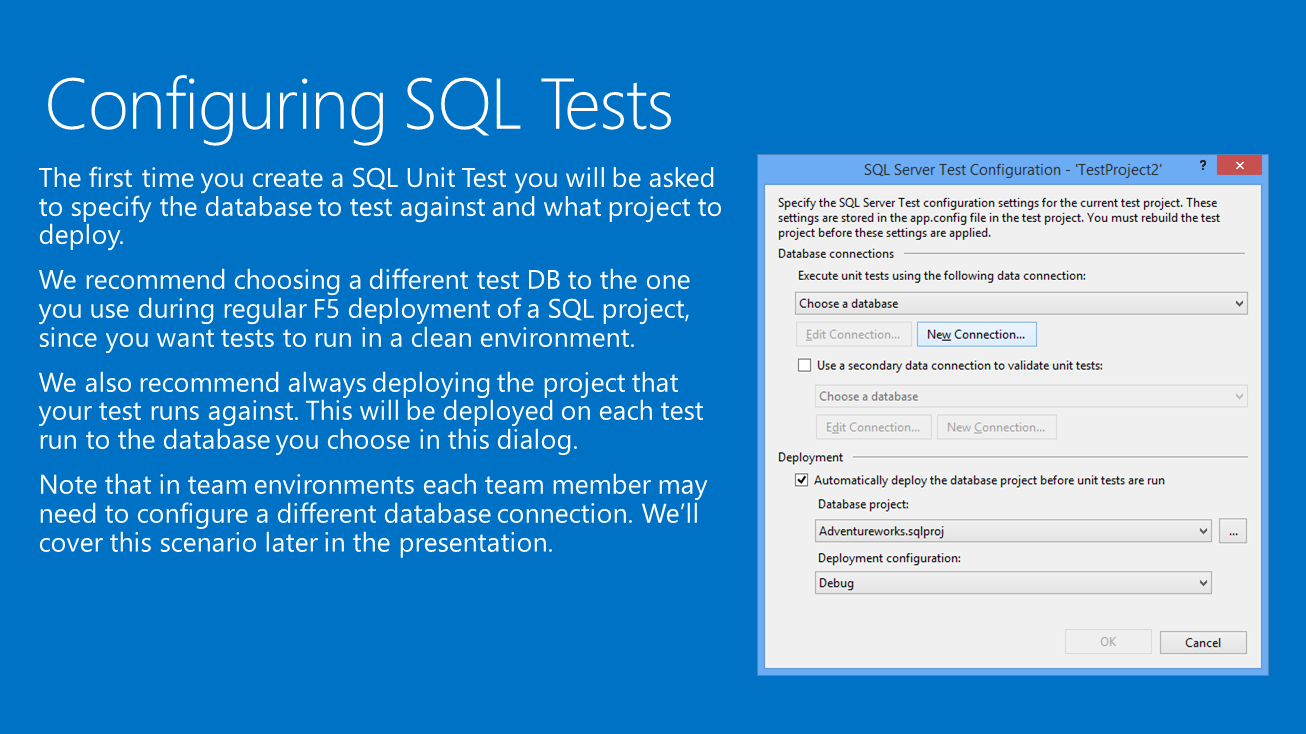

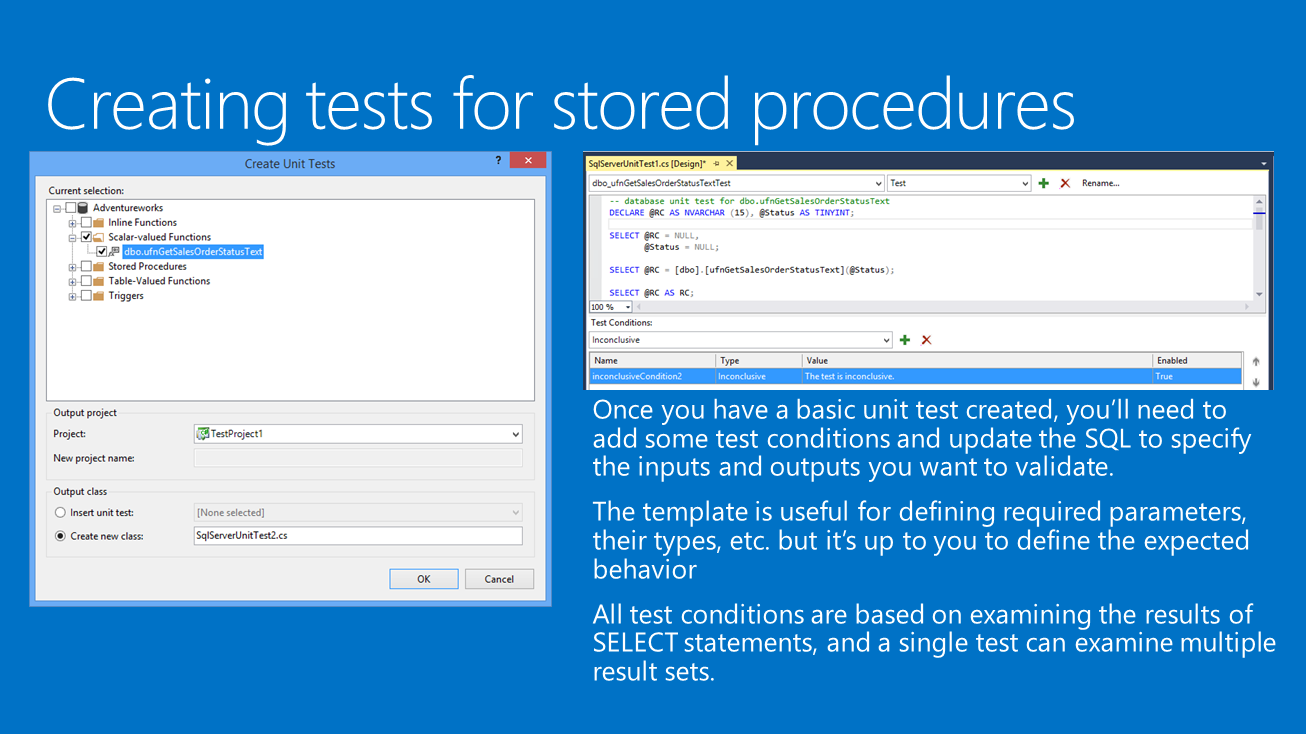

Let’s show how all this works in Visual Studio, moving from the connected world to a project-based system with source control integration and some basic unit tests to validate your functions or procedures work as expected. |

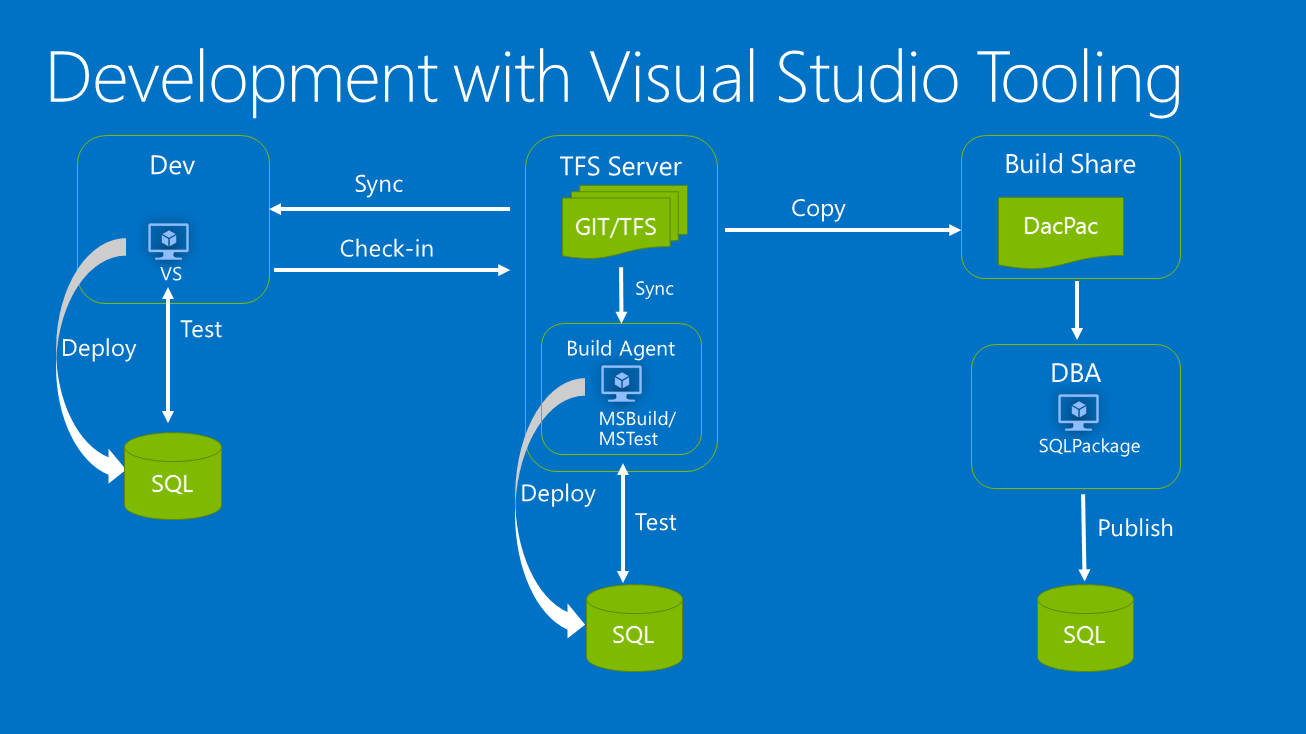

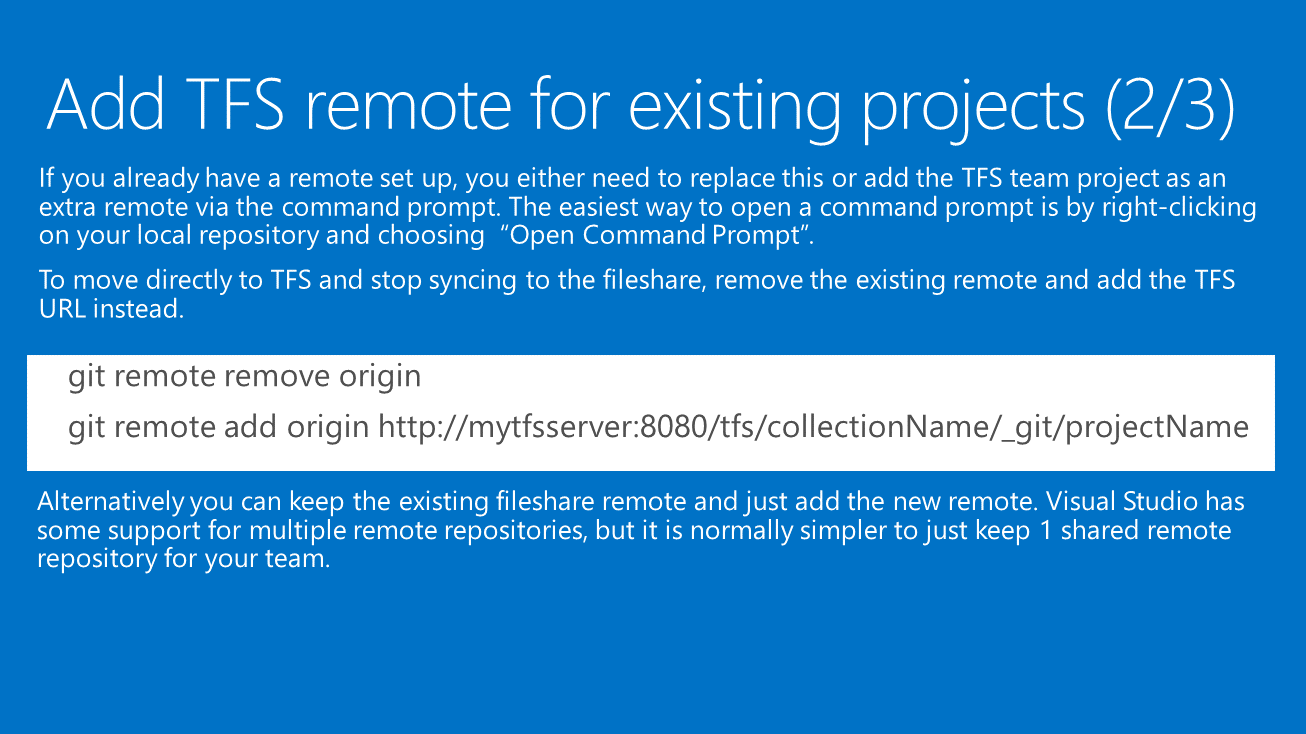

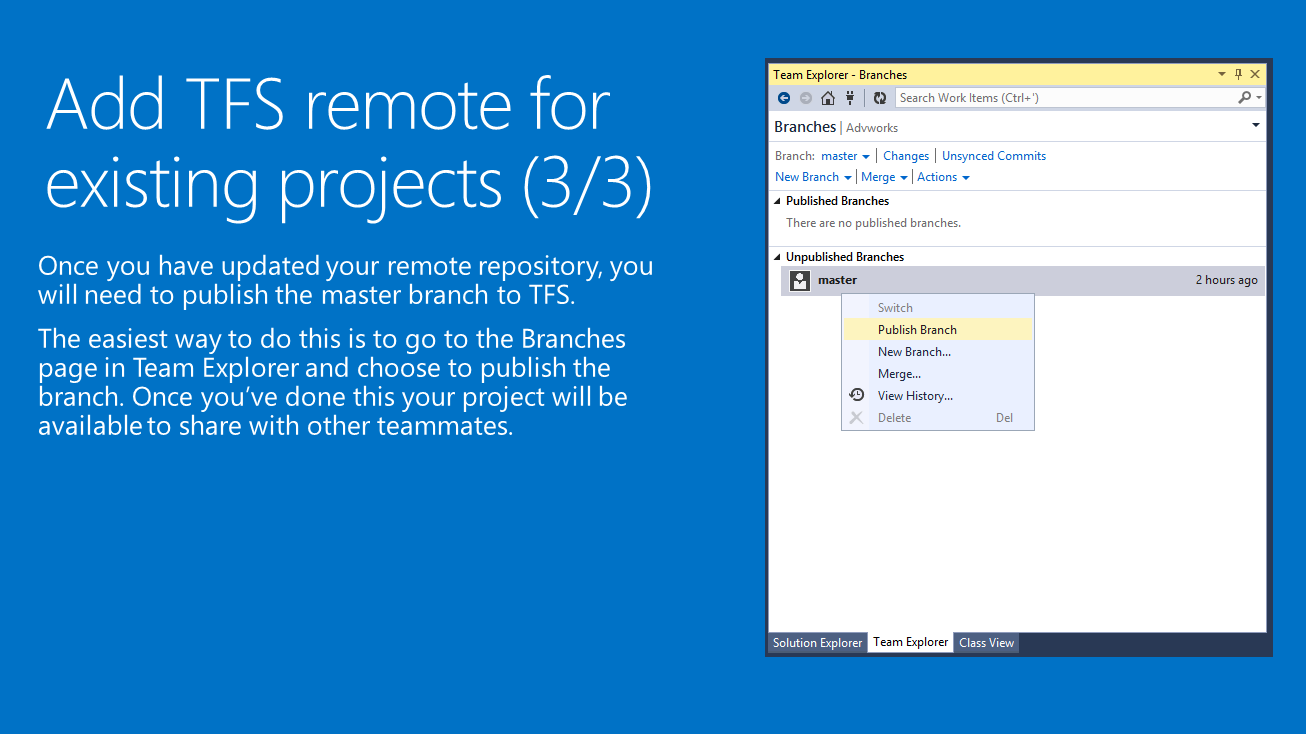

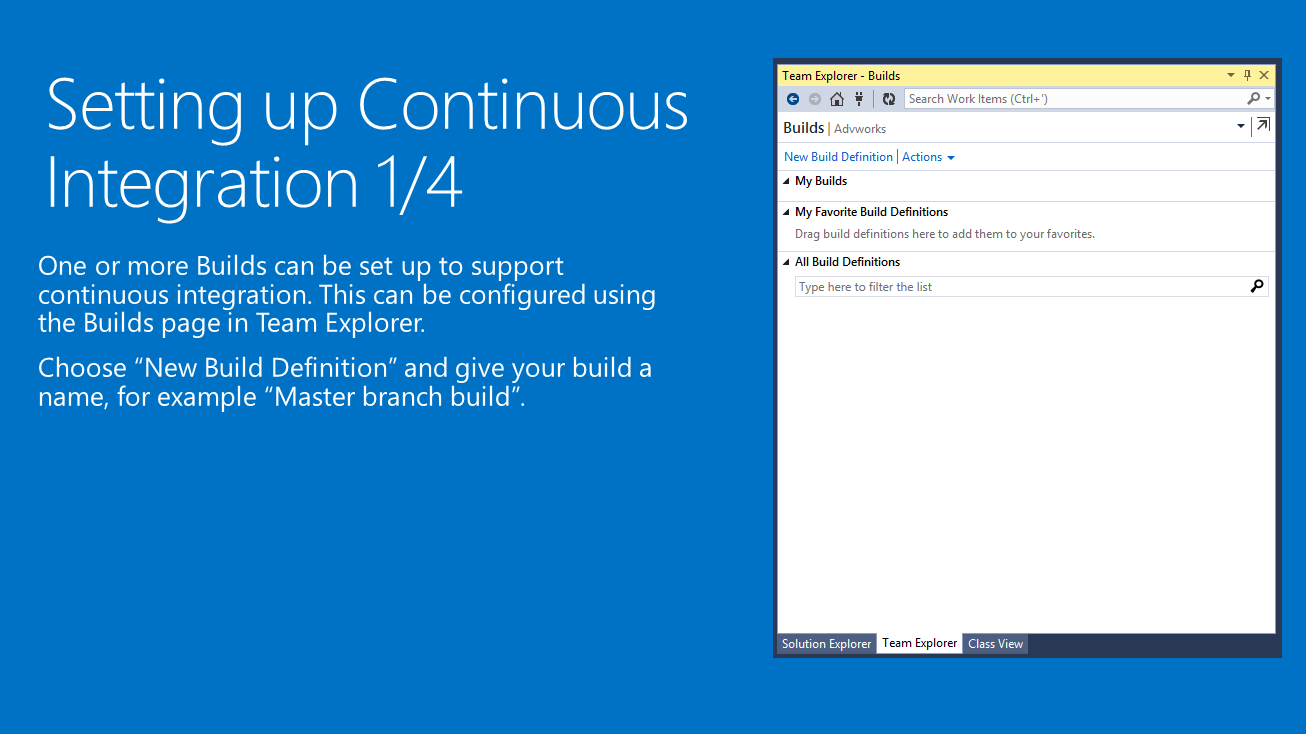

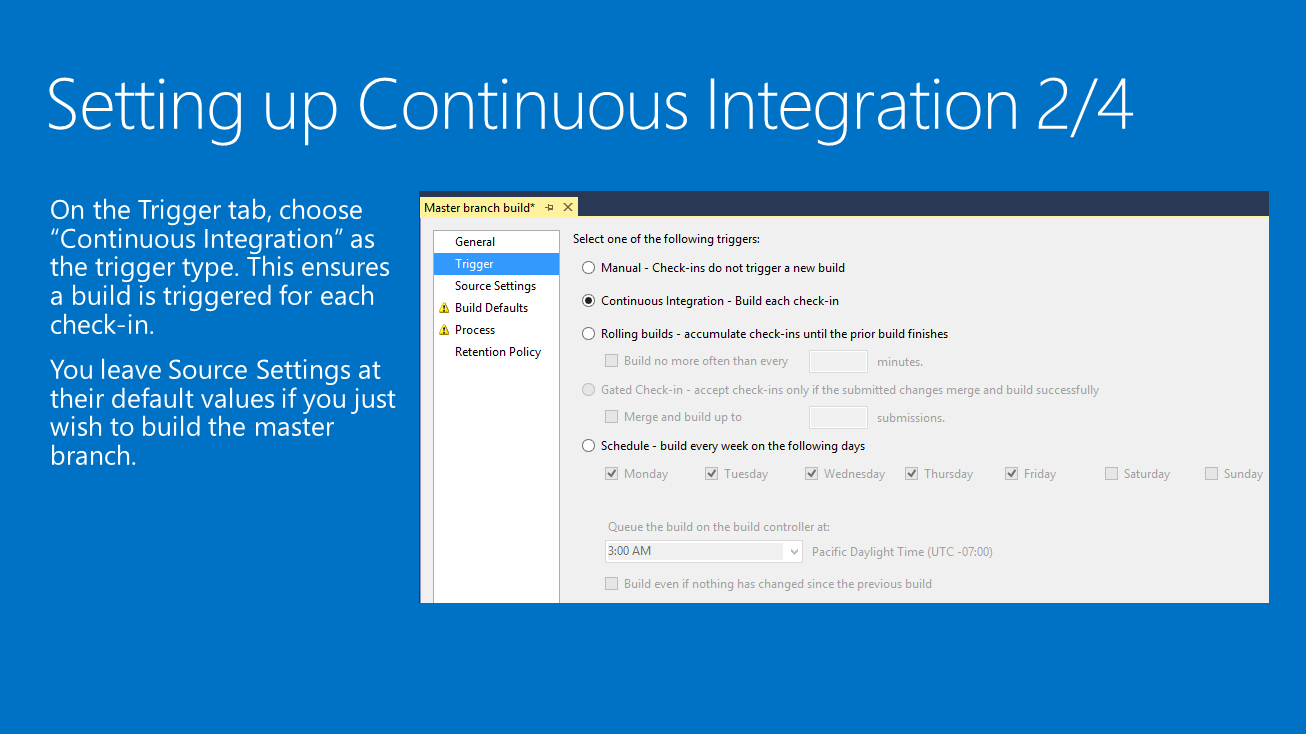

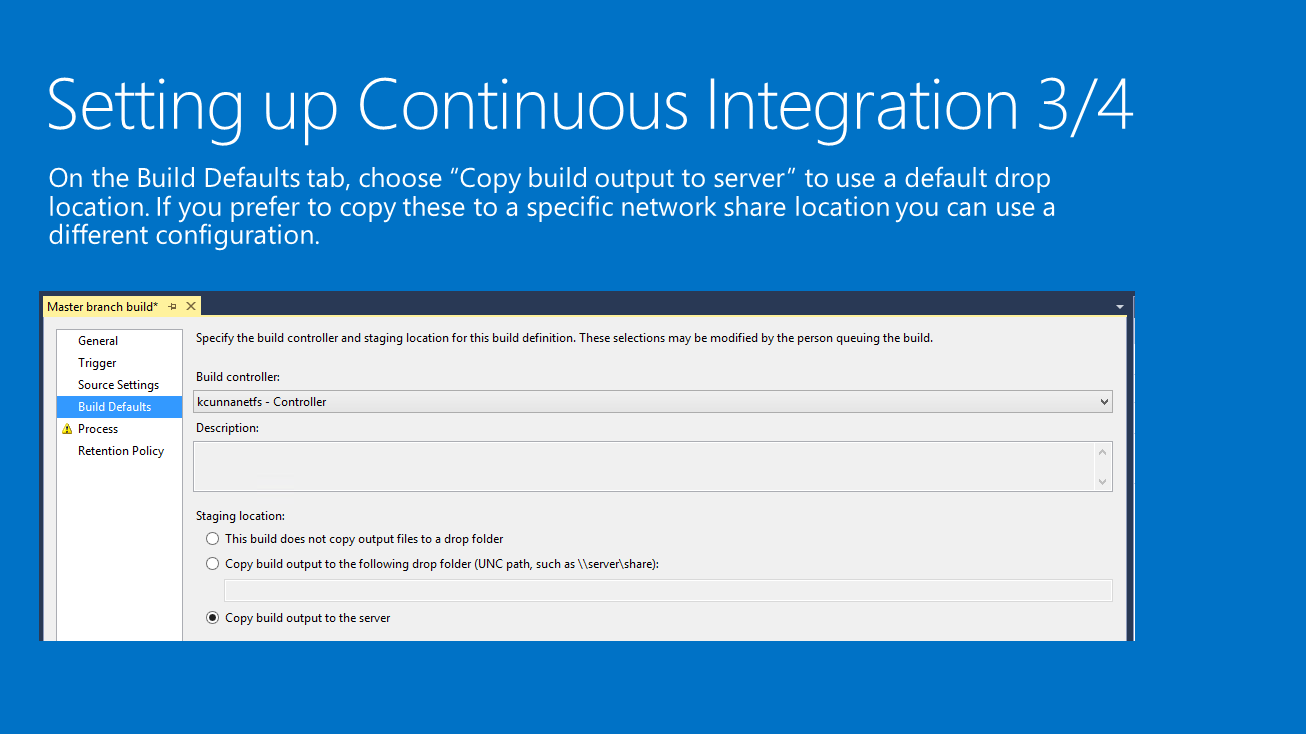

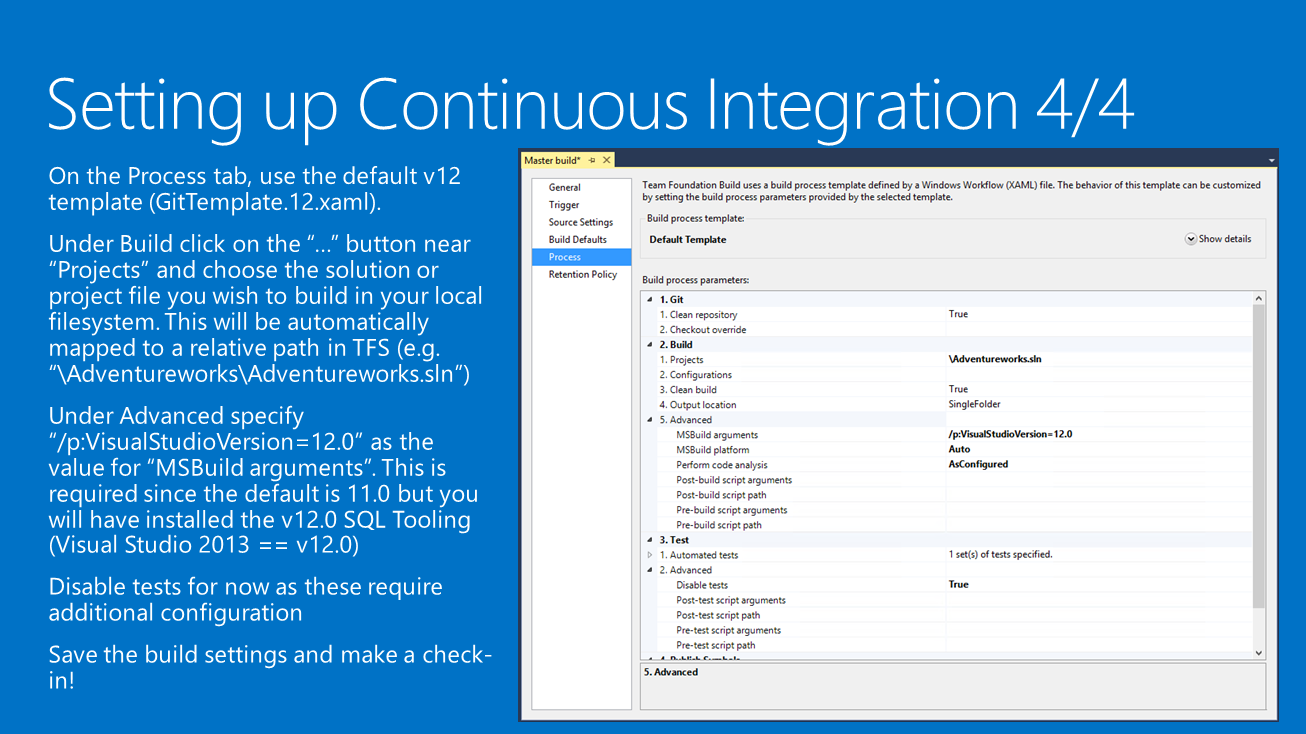

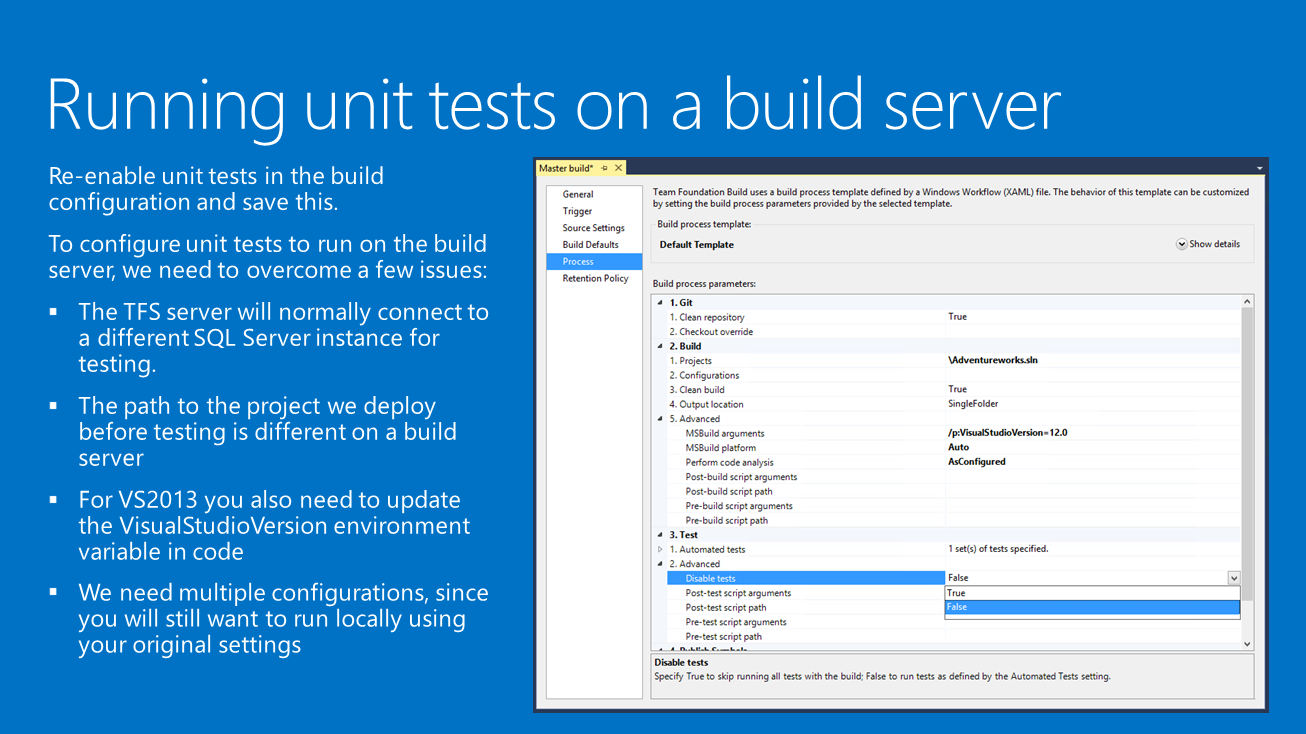

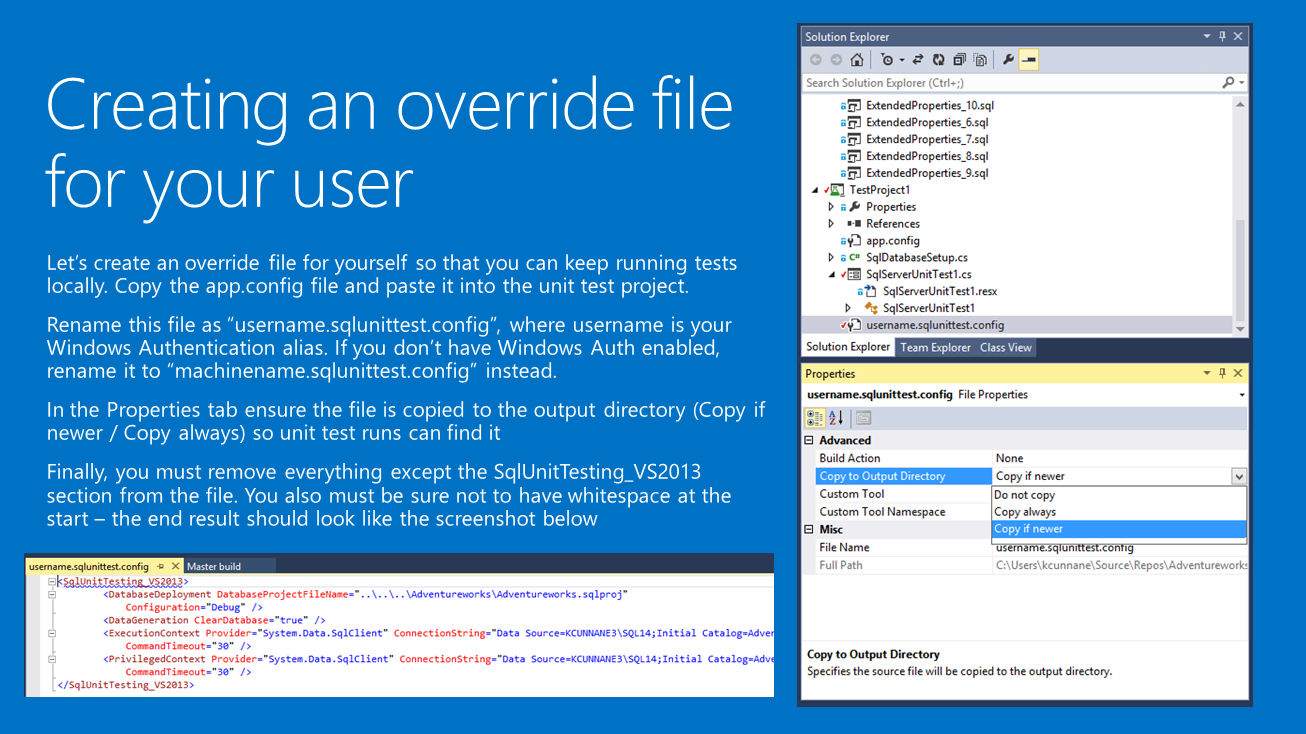

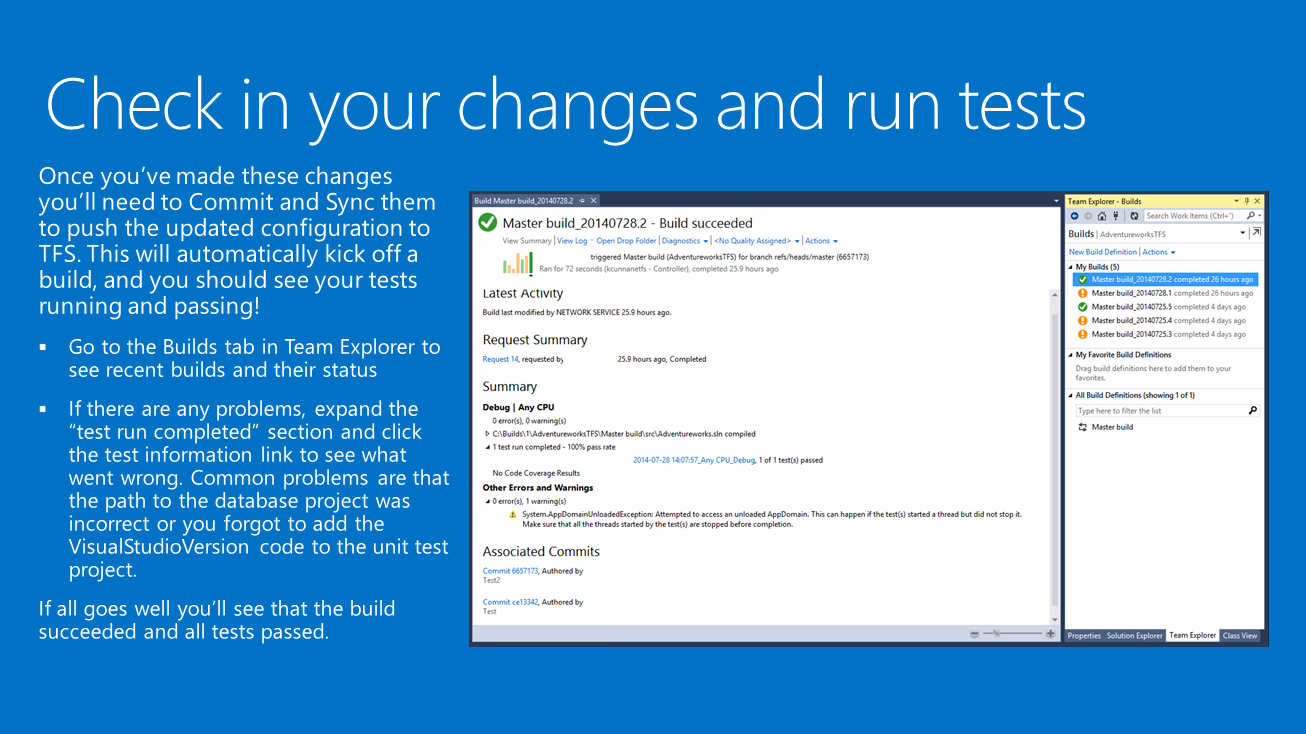

What we’ve shown so far can work great for teams of 3-5 developers. However using a fileshare makes things harder than it needs to be, and doesn’t get the benefits that come with a full featured tool such as TFS. TFS isn’t just source control, it also has automated build support using a build agent, with team-level validation, integration with your standard development processes (resolve work items on checkin, roll back changes if they break the build / break unit tests). Here we see a full development process, based on a developer checking kicking off a continuous integration build on the TFS server. This adds another layer of testing and validation, and only if it succeeds will it output a drop to the build share. Hence there’s a known quality bar to what gets output there so DBAs can have confidence when deploying to a staging server. |

Not much needs to be said about Visual Studio Online. It works the same as TFS on-prem, and in many ways works with less work than an on-prem installation. It’s great and something we encourage you to explore. Note that support for our latest SQL Tooling update, including SQL Server 2014 support, is expected in late August 2014. In the future we expect the time between when SQL Server tooling updates are released and the time these are applied to Visual Studio Online build servers to be much shorter, so you can be sure that you’re testing using the most up to date code. |