Identifying Contention in TempDB

It has been a number of years since I have seen a TempDB contention issue in a system that I support. When I came across this one, luckily during a load test and not a production environment, it was a great refresher. The offending application in this case is SharePoint 2010, but the problem was due to a customization/configuration exceeding a known limitation within SharePoint. I will start off by discussing how to diagnose the issue from a SQL perspective regardless of the offending application, proc, etc. I will then explain WHY it was causing a problem, and how it was resolved.

Calling Dr. House…

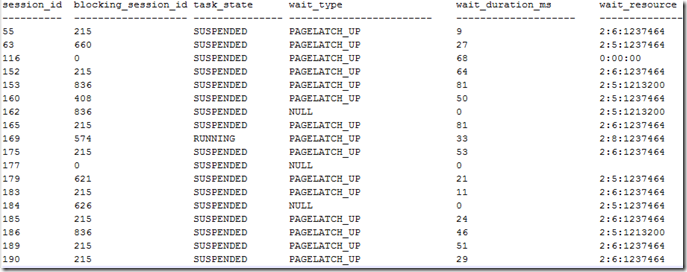

Diagnosis time! The initial complaint was that the ULS logs within SharePoint were reporting that many db queries were taking upwards of 5 to 6 seconds to complete during a load test. Knowing very well that this is a brand new system with top notch hardware and a very beefy SAN. SQL was also carefully configured specifically with a SharePoint workload in mind. At first I figured they had to be reading something wrong, so I configured PSSDIAG (SQLDIag would have worked as well) to collect data while we recreated the issue. Sure enough, a large number of queries were taking much longer than they should be while CPU, disk, etc were sitting pretty much idle. I then took a loot at blocking and waitstats…… That showed a completely different picture! In reality, all I would have needed to do is take one look at sys.dm_exec_requests, and the issue would have been found right then and there. Here are just a few columns and mocked up data rows to show you approximately what the output looked like…

There were hundreds of entries like this with the same wait type. Remember that PAGELATCH waits are waits within the buffer pool itself. Think of it as a “lock” on a physical data/index page in the buffer pool. Do not confuse this with a PAGEIOLATCH wait, which is still a “lock” on the physical data page, but PAGEIOLATCH means that the page is in the middle of an IO operation. Therefore it is not a disk io issue, which performance counters also back that up.

The other key piece of information is what is in the wait_resource column. This pointing to a particular page. The format goes dbid : filenum : pagenum. DBID 2 is tempdb. There are 8 tempdb data files in this case, and what about the page? You can use our old friend DBCC PAGE to find out what is on that particular page. This is an oldie but goodie on DBCC PAGE https://blogs.msdn.com/b/sqlserverstorageengine/archive/2006/06/10/625659.aspx

I used another method, though. I noticed that many of the page numbers were the same. With the database being TempDB, the chance of them being data pages is very slim. I suspected PFS (Page Free Space) pages. The very first page in a file is the PFS page, and a PFS interval is 8088 pages. Sure enough every page number I found was divisible by 8088. Of course, I did go back and check using DBCC PAGE to confirm that they were in fact PFS Pages (hint m_type = 11). If you want more info on PFS, GAM, SGAM, etc pages, here is another great blog post. https://blogs.msdn.com/b/sqlserverstorageengine/archive/2006/07/08/under-the-covers-gam-sgam-and-pfs-pages.aspx. Note that this and the above blog are both fairly old, but they still do apply up through SQL 2008 R2.

The PFS page contention in TempDB basically means that we are creating and dropping so many temp objects that too many spids need to modify the PFS page at once. Now, there are some ways to combat this such as Trace Flag 1118, adding more files to tempdb, etc, but what I really wanted to understand why so many objects were being created/dropped and should this really be happening? (note that I tried trace flag 1118 just for kicks and it did not help in this case)

What in the App is going on here?!

I wanted to dig deeper and find out what was causing this contention from an application side of things. Typically, when I see issues like these, the best resolution is typically a code change. For this instance, the question to ask is “what is creating and dropping so many temp objects and why? Is it necessary?” With the application being SharePoint 2010, I was fairly confident in thinking that this was probably not part of standard functionality, or it would be a major problem that would have been addressed via hotfix or service pack.

I was pretty easily able to see in the output from the blocker script (perf_stats output via SQLDiag) that the culprit was a stored procedure named dbo.proc_SecGetDomainGroupMapData that was in the main content database for the site. In viewing the code for this stored procedure, I noticed that it did an outer apply between 2 table-valued functions. That would at least require 2 temp tables per execution while more temp space may be required for sorting. In reviewing the trace, this procedure was called over 20,000 times per minute.This just did not seem right, so I brought this information up with the application support and development teams to discuss.

I will readily admit that I am far from an expert in SharePoint, but after working with other teams, we were able to alleviate the issue very easily. The issue had to do with the level of security, and we were far exceeding the Security Scope limit within SharePoint. Essentially, items in any list or document library can either inherit the permissions of the parent folder, or the list can break inheritance and every item can have its own permissions. Breaking the inheritance can be a very expensive operation, as the security for each and every item has to be checked individually. We found that inheritance was broken in this case, and we remediated that. Immediately we no longer saw any page latch contention in tempdb and overall query performance improved drastically,

This shows how not all db issues are best resolved by the DBA alone, but a team effort with DBAs, application support engineers, and development.

For more information on capacity limitations within SharePoint, this is a great article… https://technet.microsoft.com/en-us/library/cc262787.aspx