Instant Log Initialization for SQL Server in Azure

Reviewed by: John Hoang, Denzil Ribeiro, Rajesh Setlem, Mike Weiner

Introduction

Instant File Initialization (IFI) is a well-known feature of SQL Server, providing significant performance improvement for operations that require an increase in the size of data files, such as database creation, restore, and file growth. Without IFI enabled, zeroes have to be written into the newly allocated file space, which is a time-consuming size-of-data operation. With IFI enabled, space is allocated to the file, but zeroes are not written. SQL Server documentation provides details on how this feature works, and what is required to enable it.

The documentation says quite explicitly that “Log files cannot be initialized instantaneously.” Paul Randal explains the reason for this behavior in this blog. To summarize, log files need to be fully initialized, i.e. filled with zeroes (or other byte patterns), to support database crash recovery.

And yet, the title of this blog is not a mistake. There is a case where log can be initialized instantaneously and yet maintain crash recovery semantics. Specifically, this happens when database files are created directly in Azure Blob Storage.

SQL Server database files in Azure Blob Storage

As you may know, starting with SQL Server 2016, database files can be created directly in Azure Blob Storage as page blobs, rather than as files on local or UNC paths. The SQL Server Data Files in Microsoft Azure documentation topic describes this feature in detail.

Here is an example of creating a database with files directly in Azure Blob Storage, once the credential holding the Shared Access Signature for the storage container is created:

CREATE DATABASE GrowthTest

ON PRIMARY

(

NAME = N'GrowthTest',

FILENAME = N'https://example.blob.core.windows.net/mssql01/GrowthTest.mdf',

SIZE = 8192KB,

FILEGROWTH = 65536KB

)

LOG ON

(

NAME = N'GrowthTest_log',

FILENAME = N'https://example.blob.core.windows.net/mssql01/GrowthTest_log.ldf',

SIZE = 8192KB,

FILEGROWTH = 65536KB

);

Instant Initialization of SQL Server Transaction Log in Azure Blob Storage

Recently, we were working on a performance testing exercise using a SQL Server database with files in Azure Blob Storage. After creating the database using the default 8 MB size for data and log file (as in the example above), we wanted to increase the size of all files to be sufficient for the expected workload. IFI was not yet enabled for the SQL Server instance we were working with, and growing the data file from 8 MB to 1 TB took about one minute (using Premium Storage). This was expected, since the data file had to be fully initialized. We expected that the log growth to 1 TB would take about as much time, for the same reason. It was very surprising then that the same operation on the log file completed in less than one second. Here are the commands we used to grow the files:

ALTER DATABASE GrowthTest MODIFY FILE (NAME = N'GrowthTest', SIZE = 1024GB);

ALTER DATABASE GrowthTest MODIFY FILE (NAME = N'GrowthTest_log', SIZE = 1024GB);

Before anyone starts worrying, the SQL Server storage engine is not broken, and database recovery still works just like it always did. To understand what is happening, we should keep in mind that Azure Blob Storage is very different from the traditional storage systems. When database files are created as blobs in Azure Blob Storage, SQL Server can take advantage of some features that aren’t available in traditional storage systems.

The specific Azure Blob Storage feature that SQL Server is using here is the ability to clear a range of bytes in a page blob, provided by the Put Page API. The byte range specified in the Range or x-ms-range header is cleared when the value of the x-ms-page-write header is set to Clear. This operation has two important properties. One is that clearing the range is a metadata operation, so it happens nearly instantaneously. The other property is a guarantee that reading from a cleared range will always return zeroes, thus allowing SQL Server crash recovery to work in the usual way.

This means that if the log file is created directly in Azure Blob Storage (as opposed to on a disk attached to an Azure VM), SQL Server does not have to initialize the log by writing zeroes to the log blob. It can instead make a call to the storage API to clear a byte range within the blob. This call completes very fast, effectively resulting in instant log file initialization.

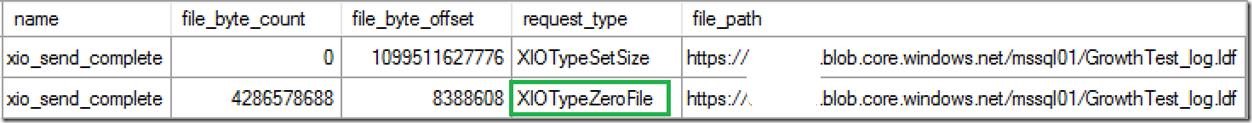

The log initialization operation, among other operations on database files in Azure Blob Storage, can be monitored using the xio_send_complete extended event. For log initialization, the file_path field will be set to the URL of the log blob, and the request_type field will be set to XIOTypeZeroFile. Here is an example. The first event fires when the blob is resized to 1 TB, and the second event fires when the 8 MB - 4 GB range is zeroed.

Conclusion

There are two outcomes worth highlighting here:

1. Some of the well-established truths about SQL Server may no longer hold as the underlying technology advances. In this case, SQL Server is taking advantage of a special capability in the storage subsystem, making instant log initialization a reality.

2. This shows how SQL Server can take advantage of functionality that is only available in Azure today. Customers using SQL Server in Azure VMs can benefit from instant log initialization if they create databases with files directly in Azure Blob Storage. This removes delays related to long log initialization from the list of operational concerns.