SQL 2014 Clustered Columnstore index rebuild and maintenance considerations

This article describes the index rebuild process as well as index maintenance for clustered Columnstore indexes and is directed towards SQL 2014. In SQL 2016, there have been several index maintenance enhancements that will be covered in a separate post.

Overview of Columnstore index build or rebuild process

Building (or rebuilding) a Columnstore index can be a resource intensive operation. Index creation time can be 1.5 times longer than a regular b-tree and resource consumption as well as duration depends on a few factors other than physical resources that include

- Number and data types of columns which determine the memory grant estimation

- Degree of Parallelism (DOP)

- Resource Governor settings

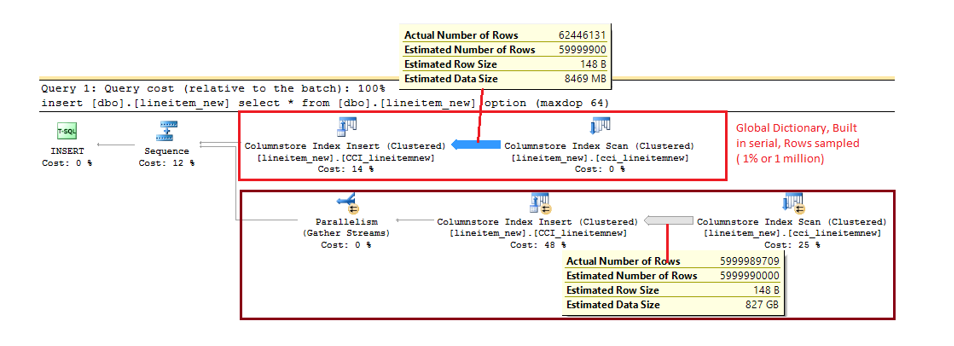

Plan for Index Rebuild of a Non-Partitioned Table with 6 billion rows:

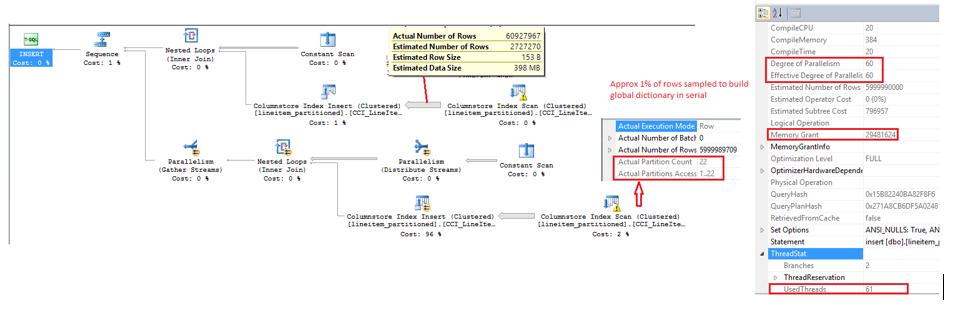

Plan for Index Rebuild of a partitioned table with 6 billion rows spread across 22 partitions

The Columnstore index is built in 2 phases indicated by the plans above:

- Primary (Global) Dictionary: This is built first and is a serial plan irrespective of MAXDOP settings and completed first and persisted. In order to build the primary dictionary we sample 1% of the rows if the table is over a million rows. Given this is a serial plan the duration taken for this step is incurred in all cases. The memory grant here is limited to 10%.

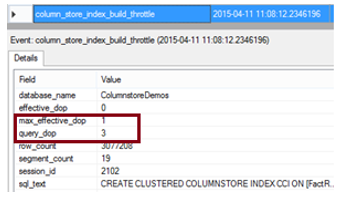

- Segments and Local Dictionaries: Segments are now built in parallel as are local dictionaries. First an estimate is made on a per-thread basis of memory consumption based on a segment size of 1 million rows. The memory grant is requested and all the threads are started but only the first one builds a segment and once it is done the actual memory grant is known per thread. While this first segment is being built, all other threads wait on it to finish and have the wait type COLUMNSTORE_BUILD_THROTTLE. Given the COLUMNSTORE_INDEX_BUILD_THROTTLE is cumulative as all remainder of the threads wait for the first segment to be built, the higher the DOP on the system, the higher you will see this value. AS you can see in the XE capture below, you see the first segment build completing and then the waits for all the remainder of the threads completing.

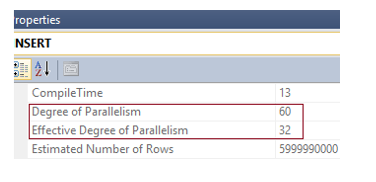

From the first segment build, we know how much memory was needed. Based on this knowledge we activate only N number of threads whose total memory grant will fit into the memory that was granted earlier. This number of threads activated is represented in the plan as “Effective Degree of Parallelism” and can be checked against the actual degree of parallelism as seen in the diagram.

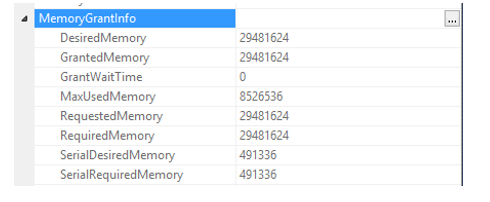

The Memory Grant information is also available from the Query Plan.

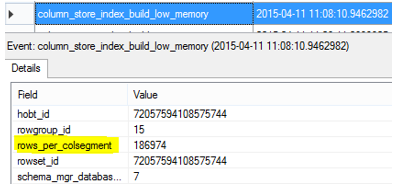

During the life of the index build, additional memory can be granted within resource governor limits and low memory conditions are checked as well. If a Low memory condition is detected, the segment will be trimmed before it reaches the 1 million row mark.

A segment can be trimmed or closed before the 1 million mark from an index build perspective if

- Low Memory condition is hit

- Dictionary is full ( Dictionary size is 16MB)

- DOP is greater than 1 and the last “N” row groups created don’t have 1 million rows

Columnstore Index Build Extended Events

There are a couple Extended Events that can help in diagnosing index build and segment quality related issues.

a. Column_store_index_build_throttle - indicates if the DOP has been throttled.

b. Column_store_index_build_low_memory – tells us if a segment is trimmed due to low memory condition

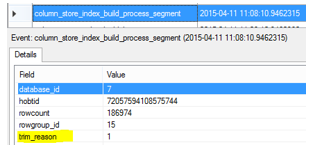

c. Column_store_index_build_process_segment – gives us the segment that was trimmed and the trim_reason. This list isn’t exhaustive, 1 = Low Memory, 2 = Dictionary full

Columnstore Index build test cases:

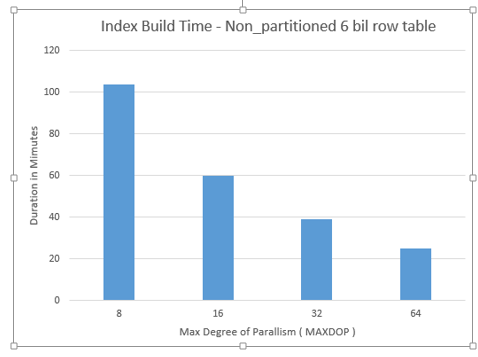

The following table depicts Index build results on a non-partitioned table with 6 billion rows. While observing the numbers we can see that the higher the DOP the higher the CPU. Also the higher the DOP, given more threads are spawned, the memory grant goes up.

Note: There wasn’t a noticeable difference when the same tests were performed on a partitioned table. The benefits of having Columnstore index on a partitioned table include being able to rebuild an index at the partition level, partition elimination in conjunction with segment elimination and ability to archive data efficiently.

This is a 6 billion row table that was tested, SQL Server has 60 cores.

StartTime

EndTime

Duration

MAXDOP

Actual DOP

max_grant_kb

CPU

4/10/15 8:02 AM

4/10/15 8:27 AM

0:24:50

64

60

28989608

85%

4/10/15 8:28 AM

4/10/15 9:07 AM

0:39:40

32

32

15461128

50%

4/10/15 9:08 AM

4/10/15 10:08 AM

1:00:13

16

16

7730568

28%

4/10/15 10:09 AM

4/10/15 11:53 AM

1:43:59

8

8

3865288

13%

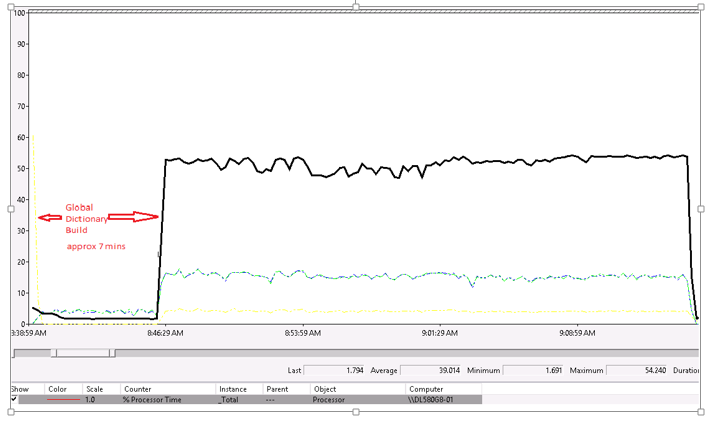

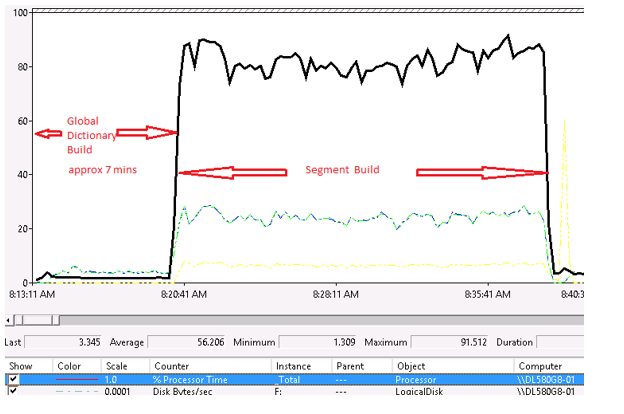

If you look at the perfmon charts below and the timings, Rebuild index for a Columnstore index does not scale linearly. This is because the Global dictionary is built in serial as discussed, and is a constant irrespective of MAXDOP for the index build. As you see in the perfmon images below, the dictionary build time takes a larger percentage of the overall time the higher the degree of parallelism.

MAXDOP 32: Building the Global dictionary takes around 20% of the total index build time with MAXDOP 32.

MAXDOP 64: Building the Global dictionary takes around 29% of the total index build time when maxdop is 64.

Columnstore Index Maintenance

There are 2 operations from a Columnstore Index maintenance perspective

INDEX REORGANIZE: This manually moves closed ROWGROUPS into compressed columnar storage and this is done online. You do not have to do this, the tuple mover will ultimately move a closed rowgroup into columnar storage but the tuple mover is single threaded so issuing an index reorganize is a way to manually invoke compression on a closed row group.

INDEX REBUILD: This can be done at a partition Level for a partitioned table, and is an offline index build and at this point there isn’t an ONLINE equivalent. The Rebuild reads and recompresses all the data in the specified partition or the entire index. In an updatable Columnstore, deletes of data that reside in a compressed rowgroup are logical deletes. You can encounter situations where majority of the data in a rowgroup could be deleted. In such cases in order to reclaim that space, you have to REBUILD the index for the partition in question. Another reason one would want to rebuild an index on the partition is to improve rowgroup quality if you have a large number of rowgroups each having a small number of rows. In this case rebuilding an index can consolidate smaller row groups into larger ones which could help both from the compression perspective as well as from the query performance perspective.

Here is a sample script which can help identify partitions that are good candidates to be rebuilt based on some thresholds defined in the script.

/*---------------------------------------------------------------------------------

The sample scripts are not supported under any Microsoft standard support program or service and are intented as a supplement to online documentation.The sample scripts are provided AS IS without warranty of any kind either expressed or implied. Microsoft further disclaims all implied warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose.

#--------------------------------------------------------------------------------- */

/*

Rebuild index statement is printed at partition level if

a. RGQualityMeasure is not met for @PercentageRGQualityPassed Rowgroups

-- this is an arbitrary number, what we are saying is that if the average is above this number, don't bother rebuilding as we consider this number to be good quality rowgroups

b. Second constraint is the Deleted rows, currently the default that is set am setting is 10% of the partition itself. If the partition is very large or small consider adjusting this

c. In SQL 2014, post index rebuild,the dmv doesn't show why the RG is trimmed to < 1 million in this case in SQL 2014.

- If the Dictionary is full ( 16MB) then no use in rebuilding this rowgroup as even after rebuild it may get trimmed

- If dictionary is full only rebuild if deleted rows falls above the threshold

*/

if object_id('tempdb..#temp') IS NOT NULL

drop table #temp

go

Declare @DeletedRowsPercent Decimal(5,2)

-- Debug = 1 if you need all rowgroup information regardless

Declare @Debug int =0

-- Percent of deleted rows for the partition

Set @DeletedRowsPercent = 10

-- RGQuality means we are saying anything over 500K compressed is good row group quality, anything less need to re-evaluate.

Declare @RGQuality int = 500000

-- means 50% of rowgroups are < @RGQUality from the rows/rowgroup perspective

Declare @PercentageRGQualityPassed smallint = 20

;WITH CSAnalysis

( object_id,TableName,index_id,partition_number,CountRGs,TotalRows,

AvgRowsPerRG,CountRGLessThanQualityMeasure,RGQualityMeasure,PercentageRGLessThanQualityMeasure

,DeletedRowsPercent,NumRowgroupsWithDeletedRows)

AS

(SELECT object_id,object_name(object_id) as TableName, index_id,

rg.partition_number,count(*) as CountRGs, sum(total_rows) as TotalRows, Avg(total_rows) as AvgRowsPerRG,

SUM(CASE WHEN rg.Total_Rows <@RGQuality THEN 1 ELSE 0 END) as CountRGLessThanQualityMeasure, @RGQuality as RGQualityMeasure,

cast((SUM(CASE WHEN rg.Total_Rows <@RGQuality THEN 1.0 ELSE 0 END)/count(*) *100) as Decimal(5,2)) as PercentageRGLessThanQualityMeasure,

Sum(rg.deleted_rows * 1.0)/sum(rg.total_rows *1.0) *100 as 'DeletedRowsPercent',

sum (case when rg.deleted_rows >0 then 1 else 0 end ) as 'NumRowgroupsWithDeletedRows'

FROM sys.column_store_row_groups rg

where rg.state = 3

group by rg.object_id, rg.partition_number,index_id

),

CSDictionaries --(maxdictionarysize int,maxdictentrycount int,[object_id] int, partition_number int)

AS

( select max(dict.on_disk_size) as maxdictionarysize, max(dict.entry_count) as maxdictionaryentrycount

,max(partition_number) as maxpartition_number,part.object_id, part.partition_number

from sys.column_store_dictionaries dict

join sys.partitions part on dict.hobt_id = part.hobt_id

group by part.object_id, part.partition_number

)

select a.*,b.maxdictionarysize,b.maxdictionaryentrycount,maxpartition_number

into #temp from CSAnalysis a

inner join CSDictionaries b

on a.object_id = b.object_id and a.partition_number = b.partition_number

-- Maxdop Hint optionally added to ensure we don't spread small amount of rows accross many threads

-- IF we do that, we may end up with smaller rowgroups anyways.

declare @maxdophint smallint, @effectivedop smallint

-- True if running from the same context that will run the rebuild index.

select @effectivedop=effective_max_dop from sys.dm_resource_governor_workload_groups

where group_id in (select group_id from sys.dm_exec_requests where session_id = @@spid)

-- Get the Alter Index Statements.

select 'Alter INDEX ' + QuoteName(IndexName) + ' ON ' + QuoteName(TableName) + ' REBUILD ' +

Case

when maxpartition_number = 1 THEN ' '

else ' PARTITION = ' + cast(partition_number as varchar(10))

End

+ ' WITH (MAXDOP =' + cast((Case WHEN (TotalRows*1.0/1048576) < 1.0 THEN 1 WHEN (TotalRows*1.0/1048576) < @effectivedop THEN FLOOR(TotalRows*1.0/1048576) ELSE 0 END) as varchar(10)) + ')'

as Command

from #temp a

inner join

( select object_id,index_id,Name as IndexName from sys.indexes

where type in ( 5,6) -- non clustered columnstore and clustered columnstore

) as b

on b.object_id = a.object_id and a.index_id = b.index_id

where ( DeletedRowsPercent >= @DeletedRowsPercent)

-- Rowgroup Quality trigger, percentage less than rowgroup quality as long as dictionary is not full

OR ( ( ( AvgRowsPerRG < @RGQuality and TotalRows > @RGQuality) AND PercentageRGLessThanQualityMeasure>= @PercentageRGQualityPassed)

AND maxdictionarysize < ( 16*1000*1000)) -- DictionaryNotFull, lower threshold than 16MB.

order by TableName,a.index_id,a.partition_number

-- Debug print if needed

if @Debug=1

Select getdate() as DiagnosticsRunTime,* from #temp

order by TableName,index_id,partition_number

else

Select getdate() as DiagnosticsRunTime,* from #temp

-- Deleted rows trigger

where ( DeletedRowsPercent >= @DeletedRowsPercent)

-- Rowgroup Quality trigger, percentage less than rowgroup quality as long as dictionary is not full

OR ( ( ( AvgRowsPerRG < @RGQuality and TotalRows > @RGQuality) AND PercentageRGLessThanQualityMeasure>= @PercentageRGQualityPassed)

AND maxdictionarysize < ( 16*1000*1000)) -- DictionaryNotFull, lower threshold than 16MB.

order by TableName,index_id,partition_number

-- Add logic to actually run those statements

Summarizing some of the best practices:

Partitioning enables rebuild of an index at the partition level as well as dictionaries created for each partition besides the other manageability benefits.

MAXDOP influences the memory grant size. If segments are getting trimmed due to low memory, reducing MAXDOP can help.

Resource Govenor settings for the respective pool can be increased from the default of 25% when rebuilding indexes if low memory causes rowgroups to be trimmed.

Massive deletions of rows that are already compressed in columnar format require a REBUILD of the index to reclaim that space.

Denzil Ribeiro

Program Manager SQL/Azure CAT

![clip_image006[7] clip_image006[7]](https://msdntnarchive.blob.core.windows.net/media/MSDNBlogsFS/prod.evol.blogs.msdn.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/00/50/01/metablogapi/4212.clip_image0067_thumb_02D3DDD7.jpg)