Optimizing CREATE SET in Cube Calculation Scripts

Author: Thomas Kejser

Reviewers: Peter Adshead, Nicholas Dritsas, Sanjay Nayyar, John Desch, Kevin Cox, Akshai Mirchandani, Anne Zorner

In this blog we will describe an important optimization that you should apply to cubes that are processed often and have CREATE SET commands in the calculation script. We will describe the measurements you can make to determine if this may affect you and also provide solutions that can make your cube be much more responsive to users.

Background

When you process a cube in Analysis Services, some expressions in the calculation scripts for connected users may get invalidated and marked as needing re-execution. This is because sets and expressions in the script my have a dependency on the new data in the cube. Examples of these dependences are: CREATE SET and SCOPE that contains expressions like: FILTER(<set>, <exp>).

The invalidated expressions are re-executed when a user issues a DISCOVER or execute a query. DISCOVER command are for example issued when Excel connects to the newly processed cube. Once the expressions are re-executed, Analysis Services caches the result of the expression and will use this cache to response to all future requests.

As you may be able to deduce – anyone issuing a DISCOVER or executing a query after a process operation will have to wait or all CREATE SET and SCOPES to be executed. If there are complex expression in the calculation script, this can take a long time and will show up as latency in the client interface.

Observing the Behavior

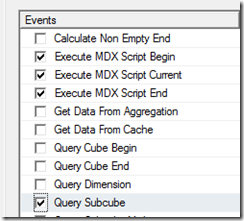

If you have a cube with a CREATE SET or SCOPE in the calculation script you should measure the time to takes for the calculation execute. To do this, set up a Analysis Services profiler trace for these events:

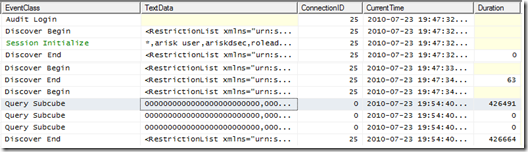

Now, process any partition in the cube and start a new connection to the cube with Excel. You will see a series of events:

In the above example, notice ConnectionID 25. We first see the Login and Initialize events, follwed by Discover events.. But note that the last DISCOVER event does not finish until ConnectionID 0 is done executing sub cubes. Those ConnectionID = 0 sub cubes are generated by the calculation script. In this case, 426 seconds are spend on retrieving the sub cubes. While this happens, no new DISCOVERand query events are being served from any connection. Note that deploying an updated cube script to a cube will exhibit a pattern similar to a process operation.

Using a trace, you can quantify the total time is takes to fetch the sub cubes required to execute the calculation script.

Optimizing the Calculation Script

If you determine that the overhead to executed the CREATE SET or SCOPE command it too large, you have several options to optimize it:

- If possible, use only block style calculations in the CREATE SET and SCOPE

- Create aggregates to support the execution of the expression

- Pre-warm the cube after processing

Ad 1) Using the MDX tuning guidance in the Analysis Services 2008 performance Guide – rewrite the CREATE SET or SCOPE commands to use block style calculations.

Ad 2) If you see Query Subcube events in the trace, you should consider adding aggregates to support those subcubes. We recently did such an optimization for a customer and brought execute time for calculation script down from 15 minutes to a few seconds.

Ad 3) Assuming you are processing during in off-peak hours or on a server not available for user queries (for example, a scale-out solution), you can run a DISCOVER command after you are done processing. This causes the calculation script to be executed and cached before the first user connects. If you are already using a cache warming script – adding the DISCOVER command to this script is a small change. However, note that the cached subcube may still be evicted from the cache under memory pressure – so it is generally preferable to use aggregations.

Conclusion

In this blog we have described how you can measure the time it takes for a calculation script to be re-executed after a processing operation has invalidated it. If this re-execute time is long – you should consider optimizing the expressions in the calculation script. Sometimes, this optimization is as simple as adding an aggregate that makes the CREATE SET or SCOPE execute faster.