Performance Comparison between Data Type Conversion Techniques in SSIS 2008

Authors: Sedat Yogurtcuoglu and Thomas Kejser

Technical Reviewers: Kevin Cox, Denny Lee, Carl Rabeler, Dana Kaufman, Jesse Fountain, Alexei Khalyako, Dana Kaufman

Overview

Data type conversion is one of the most common ETL tasks used in Microsoft® SQL Server® Integration Services packages. There are several ways to perform these conversions: you can use a Data Conversion transformation or a Derived Column transformation, or you can perform the conversion on the source component itself.

This technical note addresses the question: “What is the fastest-performing way of doing a data type conversion? For example, how can I most quickly change the length of a varchar column? What is the fastest way to changethe data type of a column from int to bigint?”

Measuring and Test Setup

Because the goal of ETL performance tuning is pure throughput, we will be measuring the run time of the competing data type conversion strategies. We will also record the CPU usage to see how the Integration Services buffers make use of the CPU cores in the machine. In our example, we used a two-core machine – as will be seen, the simple test we performed does not make full use of the cores. But the results nevertheless provide the necessary insight to make an informed choice between conversion strategies.

Depending on how you perform data type conversion, Integration Services uses different buffer layouts in the pipeline. To get an insight into this, we will be measuring the “Buffers Used” performance counter.

To illustrate the effects of multiple ways of doing the data type conversion and compare the results, the following three data type conversion operations are performed during the tests.

Int è BigInt

Varchar(50) è Varchar(25)

Varchar(50) è Varchar(75)

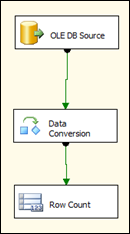

In the tests, a simple Integration Services package is used, with an OLE DB Source component to read 30 million records into the pipeline and then feed them into a Row Count component. By using a Row Count component we minimize the overhead of a destination, so that we can get a better measurement of the pure conversion performance. When reading the table, we will make sure the table resides in the buffer pool – to ensure that I/O is being taken out of the equation.

The tests were done on a dual core notebook running the 64-bit edition of SQL Server 2008 Developer on the 64-bit edition of a Windows® 7 RC. A 64-bit OLE DB Provider was used to connect to SQL Server.

This is the CREATE script of the table used as the source.

CREATE TABLE [dbo].[DataTypeConversion](

[Column 0] [int] NULL

, [Column 1] [varchar](50) NULL

, [Column 2] [varchar](50) NULL

) ON [PRIMARY]

The tests use an Integration Services package to populate the DataTypeConversion table with 30 million rows. As an alternative, you can also find the Transact-SQL based method to generate the data in Appendix A.

Test Preparation – Using Integration Services to Generate Data

Here are the steps to create the Integration Services package used to generate the test data on SQL Server.

1. Create an empty Integration Services package with a data flow.

2. In the data flow designer, add a script component as the Source component.

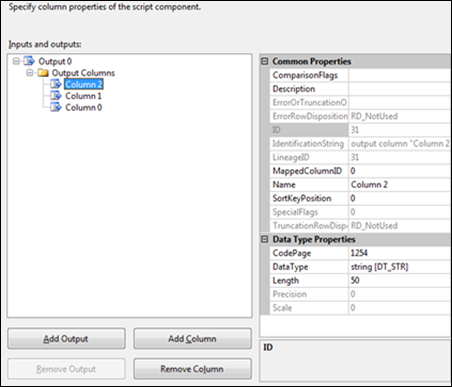

3. Edit the script component and create three columns on Inputs and Outputs page, as the following.

Column 0 : four-byte signed integer [DT_I4]

Column 1 : string [DT_STR] with length 50

Column 2 : string [DT_STR] with length 50

The Inputs and Outputs form of the script component should be similar to the following picture:

4. Edit the VBScript and paste the following code into the CreateNewOutputRows sub.

Dim i As Integer

For i = 1 To 30000000

Me.Output0Buffer.AddRow()

Me.Output0Buffer.Column0 = i

Me.Output0Buffer.Column1 = "abcde" & CStr(i)

Me.Output0Buffer.Column2 = "qtxyz" & CStr(i)

Next

Save the script, and then exit the script editor.

5. Add an OLE DB Destination task to the data flow designer and link the output of the script component to the destination.

6. Edit the OLE DB Destination task so that it accesses the DataTypeConversion table you created using the Transact-SQL code provided earlier, and then map the columns.

7. Execute the package, and then verify that the records were inserted.

Baseline

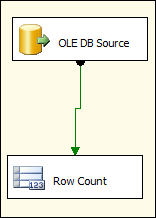

Before the data conversion tests, we measure the baseline speed of reading the rows from the table without performing any data conversions. The illustration below illustrates the simple package used in the baseline.

The execution time of the baseline package is around 35 seconds. The performance monitor shows that the CPU utilization is around 75 percent and the number of buffers in use is 1.

Transact-SQL Conversion

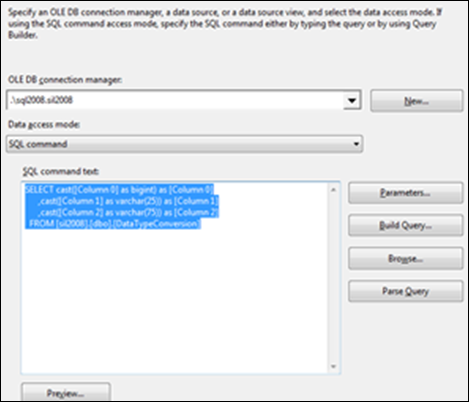

Now, let’s test the data type conversion performance of Transact-SQL. Edit the OLE DB Source component, and change the SQL statement as follows.

SELECT cast([Column 0] as bigint) as [Column 0]

, cast([Column 1] as varchar(25)) as [Column 1]

, cast([Column 2] as varchar(75)) as [Column 2]

FROM [dbo].[DataTypeConversion]

The execution time of that package is around 46 seconds. The performance monitor shows that the CPU utilization is around 75 percent and the number of buffers in use is 1.

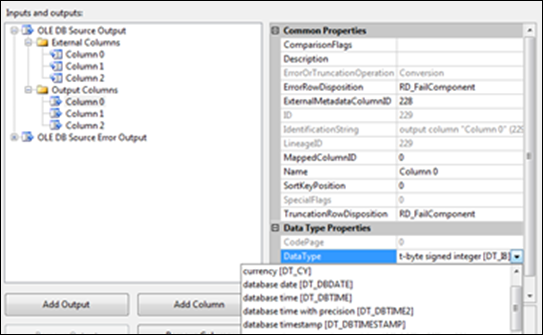

Conversion on the OLE DB Source Component

The conversion can also be done on the OLE DB Source component. To do this, right-click the OLE DB Source component, and then click Show Advanced Editor. In the Input and Output Properties tab, change the data types of the output columns as follows:

Column 0 : eight-byte signed integer

Column 1 : String [DT_STR] with length=25

Column 2 : String [DT_STR] with length=75

Important: Do not forget to change the SQL statement of the OLE DB Source component as follows (that is, be sure to remove the data conversion from the Transact-SQL statement).

SELECT [Column 0], [Column 1], [Column 2]

FROM [dbo].[DataTypeConversion]

The execution time of the package is about 45 seconds. The performance monitor shows that the CPU utilization is around 70 percent and the number of buffers in use is 1.

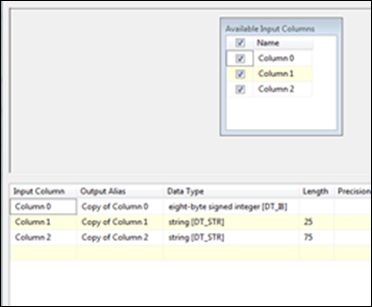

Data Conversion Transformation

Create a copy of the baseline package and then edit it by adding a Data Conversion transformation in the data flow just after the OLE DB Source component. In the Data Conversion transformation, change the data types of the data as follows:

· Convert [Column 0] from 4-byte signed integer to 8-byte signed integer

· Convert [Column 1] from string (50) to string (25)

· Convert [Column 2] from string (50) to string (75)

The execution time of the package with the Derived Column transformation is around 49 seconds. The performance monitor shows that the CPU utilization is now around 90 percent and the number of buffers in use varies between 1 and 3.

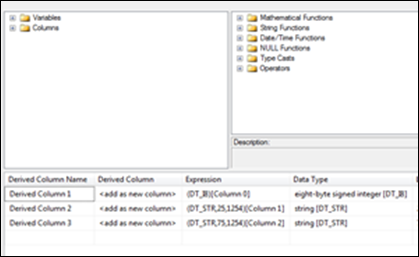

Derived Column Transformation

Create another copy of the Data Conversion package from the previous section, and then edit it by replacing the Data Conversion transformation with a Derived Column transformation. Edit the Derived Column transformation , and add three columns with the following expressions as shown in the picture.

· (DT_I8)[Column 0]

· (DT_STR,25,1254)[Column 1]

· (DT_STR,75,1254)[Column 2]

The execution time of the package with Derived Column transformation is around 71 seconds. The performance monitor shows that the CPU utilization is around 85 percent and the number of buffers in use varies between 7 and 8.

Summary

This paper described tests the SQLCAT team and MCS performed to show performance impacts of different types and methods of data conversions in Integration Services. We’ve compiled the performance results into a table that shows the values from the counters listed for each package we tested. (The tests were done on a dual-core PC running the 64-bit edition of SQL Server 2008 Developer on the 64-bit edition of the Windows 7 RC.)

Time(sec) |

CPU Utilization % |

Buffers in Use |

|

Baseline |

35 |

75 |

1 |

Transact-SQL conversion |

46 |

75 |

1 |

Conversion on the OLE DB Source component |

45 |

70 |

1 |

Data Conversion transformation |

49 |

90 |

1-3 |

Derived Column transformation |

71 |

85 |

7-8 |

As the table shows, we found significant differences in the speed of different data type conversion techniques. If you use the database to perform conversion, you should use the OLE DB Source component for maximum speed. If you use Integration Services transformations, the Data Conversion transformation is superior to Derived Column.

In terms of overall performance (elapsed time and CPU utilization), performing the data type conversion in the OLE DB layer instead of using Integration Services is the fastest option. Note that the Data Conversion transformation has a run time very similar to the run time of SQL Server’s own data conversion – although it spends more CPU to do the same work. However, you may want to consider features that Integration Services transformations offer.For example, while you may get better overall performance per CPU cycle from an OLE DB conversion, the Data Conversion transformation offers powerful row-level debugging.

If you are not using SQL Server as the RDBMS in the source systems, performing a test similar to the one described in this technical note will help you make the best choice on where to do the data type conversion.

Appendix A: Test Preparation – Using Transact-SQL to Generate Data

An alternative way to generate SQL Server data is to use Itzik Ben-Gan ’s number function:

CREATE FUNCTION [dbo].[fn_nums](@n AS BIGINT) RETURNS TABLE

AS

RETURN WITH

L0 AS(SELECT 1 AS c UNION ALL SELECT 1)

, L1 AS(SELECT 1 AS c FROM L0 AS A, L0 AS B)

, L2 AS(SELECT 1 AS c FROM L1 AS A, L1 AS B)

, L3 AS(SELECT 1 AS c FROM L2 AS A, L2 AS B)

, L4 AS(SELECT 1 AS c FROM L3 AS A, L3 AS B)

, L5 AS(SELECT 1 AS c FROM L4 AS A, L4 AS B)

, Nums AS(SELECT ROW_NUMBER() OVER(ORDER BY c) AS n FROM L5)

SELECT n FROM Nums WHERE n <= @n;

CREATE PROCEDURE [dbo].[Populate]

@scale_factor INT = 1

AS

/* Populate the DataTypeConversion table with 30 million rows */

TRUNCATE TABLE [dbo].[DataTypeConversion]

INSERT dbo.DataTypeConversion WITH (TABLOCK)

([Column 0],[Column 1],[Column 2])

SELECT (n1.n * n2.n)

,'abcde' + CAST((n1.n * n2.n) as varchar(50))

,'qtxyz' + CAST((n1.n * n2.n) as varchar(50))

FROM dbo.fn_nums(30000000) AS n1

CROSS JOIN dbo.fn_nums(@scale_factor) AS n2

After you execute the above scripts and create the necessary functions and stored procedures, execute dbo.populate.