An Approach to SQL Server Index Tuning

Well-constructed indexes can greatly improve read performance in SQL server, but they can be costly to maintain. There’s the obvious cost of additional time for your periodic index maintenance (rebuilds, reorganization and updating statistics) and the cost of additional storage, but there’s also a cost every time you make an update to indexed data.

Consider this small and poorly indexed table:

CREATE TABLE dbo.Person(

CompanyID INT IDENTITY,

NetworkId VARCHAR(20),

FirstName VARCHAR(20),

MiddleName VARCHAR(20),

LastName VARCHAR(50),

DateOfBirth DATE,

SSN CHAR(9),

EmailAddress VARCHAR(100),

BusinessPhone VARCHAR(10),

ModifiedDate DATETIME,

CONSTRAINT PK_Person PRIMARY KEY CLUSTERED (CompanyID)

);

GO

CREATE INDEX ix_LastName ON dbo.person (LastName);

CREATE INDEX ix_LastFirstMiddle ON dbo.Person (LastName, FirstName, MiddleName);

CREATE INDEX ix_LastNameFirstName ON dbo.Person (LastName, FirstName)

INCLUDE (DateOfBirth);

GO

Every time we insert or delete a row from our table we must also insert or delete a row in each of its non-clustered indexes, and if we update a value in an indexed column (e.g. , MiddleName) we must update any indexes that contain the column. You can see that we could be occurring a lot of costly I/O – and we haven’t even considered that each modification must also be written out to the transaction log.

Add to this a tendency to add every index suggested by tuning tools and wizards in hopes of (ironically) improving performance and we’ve got a mess. We clearly need a more considered, holistic approach to our index tuning, so I’d like to share my approach to this task.

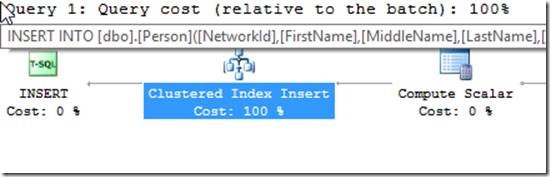

Let’s start by visualizing the index maintenance necessary when we modify our table. We’ll turn on the option to “Include the Actual Query Plan” then run an insert and an update and look at the properties of the Insert and Update operators…

INSERT INTO dbo.Person ( NetworkId, FirstName, MiddleName, LastName, DateOfBirth,

SSN, EmailAddress, BusinessPhone, ModifiedDate )

VALUES ( 'jroberts', 'Jonathan', 'Q', 'Roberts', '19700206', '123456789',

'jroberts@somecompany.com', '9195559632', GETDATE());

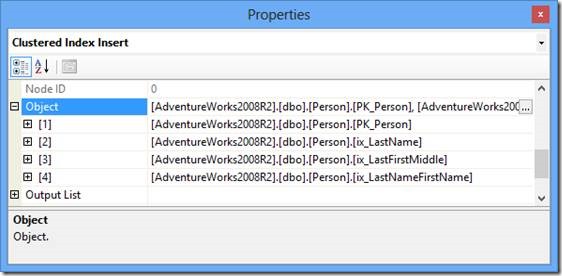

If you highlight the Clustered Index Insert operator and hit F4 you can see its properties. Scroll down to the Object node and expand it. Here you can see all that our non-clustered indexes are also being modified by the insert.

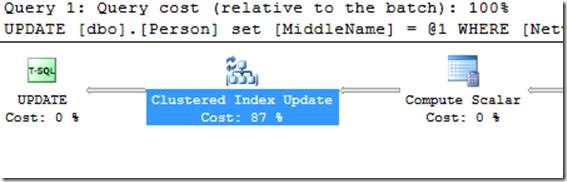

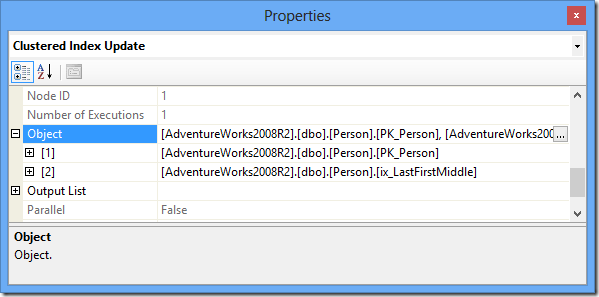

If we update MiddleName you can see there’s less work to do as it only appears in one of our indexes…

UPDATE dbo.Person

SET MiddleName = 'Quincy'

WHERE NetworkId = 'jroberts';

We can see that the number and design of our indexes will impact the performance of our server. Our goal is to get the most use from the smallest number of indexes. We’ll do this by first reviewing all of the existing indexes on a table looking for opportunities to consolidate them, then making modifications to the remaining indexes to maximize their usage and finally adding 1 or 2 thoughtfully built indexes and monitoring to see the impact of our changes. The approach laid out here approach does not absolve us from doing the preliminary bottleneck analysis and identifying our top contributing queries before we dive down into crafting indexes for the same.

Index tuning efforts may start with

1) Manually crafting an index to improve performance on a problematic SQL statement

2) Implementing “missing indexes” identified with a DMV or

3) Comprehensive workload evaluation using the Database Engine Tuning Advisor (DTA).

Wherever you start, I recommend you focus on 1 table at a time

If taking the manual approach, do a quick check for other expensive queries involving the same table. If using “missing indexes” or the DTA, make note of the various index suggestions for the targeted table, and note where they overlap. You want to discover as much as you can about your indexing needs so you can maximize the use of each index. There will be opportunities where simply adding one column to an INCLUDE clause will cover an additional query.

-- Gather missing index data for the current database

SELECT t.name AS 'table',

( avg_total_user_cost * avg_user_impact ) * ( user_seeks + user_scans )

AS 'potential_impact',

'CREATE NONCLUSTERED INDEX ix_IndexName ON ' + SCHEMA_NAME(t.schema_id)

+ '.' + t.name COLLATE DATABASE_DEFAULT + ' ('

+ ISNULL(d.equality_columns, '')

+ CASE WHEN d.inequality_columns IS NULL THEN ''

ELSE CASE WHEN d.equality_columns IS NULL THEN ''

ELSE ','

END + d.inequality_columns

END + ') ' + CASE WHEN d.included_columns IS NULL THEN ''

ELSE 'INCLUDE (' + d.included_columns + ')'

END + ';' AS 'create_index_statement'

FROM sys.dm_db_missing_index_group_stats AS s

INNER JOIN sys.dm_db_missing_index_groups AS g

ON s.group_handle = g.index_group_handle

INNER JOIN sys.dm_db_missing_index_details AS d

ON g.index_handle = d.index_handle

INNER JOIN sys.tables t WITH ( NOLOCK ) ON d.OBJECT_ID = t.OBJECT_ID

WHERE d.database_id = DB_ID()

AND s.group_handle IN (

SELECT TOP 500 group_handle

FROM sys.dm_db_missing_index_group_stats WITH ( NOLOCK )

ORDER BY ( avg_total_user_cost * avg_user_impact ) *

( user_seeks + user_scans ) DESC )

AND t.name LIKE 'Person'

ORDER BY ( avg_total_user_cost * avg_user_impact ) * ( user_seeks + user_scans ) DESC;

Run an index usage query just for the table you’re working with and save the output. We want to know which indexes are being used and which aren’t. We’ll also want to check back after our tuning session to see if usage patterns have changed. Keep in mind that the DMV counters are reset each time SQL is restarted, so the longer SQL’s up before you look for missing indexes or index usage the more accurate the values will be. Also consider cyclic usage patterns. You may want to postpone data collection until after those big end of month (quarter, year) reports have been run.

-- Index usage for tables having more than 10000 rows

SELECT t.name 'table', i.name 'index_name',

( u.user_seeks + u.user_scans + u.user_lookups ) 'reads',

u.user_updates 'writes', ( SELECT SUM(p.rows)

FROM sys.partitions p

WHERE p.index_id = u.index_id

AND u.object_id = p.object_id

) 'rows', i.type_desc, i.is_primary_key,

i.is_unique

FROM sys.dm_db_index_usage_stats u

INNER JOIN sys.indexes i ON i.index_id = u.index_id

AND u.object_id = i.object_id

INNER JOIN sys.tables t ON u.object_id = t.object_id

INNER JOIN sys.schemas s ON t.schema_id = s.schema_id

WHERE OBJECTPROPERTY(u.object_id, 'IsUserTable') = 1

AND ( SELECT SUM(p.rows)

FROM sys.partitions p

WHERE p.index_id = u.index_id

AND u.object_id = p.object_id

) > 10000

AND u.database_id = DB_ID()

AND t.name LIKE 'Person'

ORDER BY reads;

Script out the DDL for the table, including all of its indexes and keys. We need to see what we’ve already got to work with and the data types of the columns.

Before adding new indexes we always want to optimize those we’ve already got. Look for duplicate indexes we can eliminate or overlapping indexes that we can easily merge. Approach changes to Unique (including your Primary Key index) and Clustered indexes very cautiously as they have important roles in your table. Check your index usage numbers. You don’t want to spend time figuring out the best way to merge 2 similar indexes, neither of which is ever used.

Looking at our simplistic example from above, we find that we can roll the functionality of all 3 indexes into 1 by simply adding MiddleName to the 3rd index below:

-- Lots of overlap and duplication

CREATE INDEX ix_LastName ON dbo.Person (LastName);

CREATE INDEX ix_LastFirstMiddle ON Dbo.Person (LastName, FirstName, MiddleName);

CREATE INDEX ix_LastNameFirstName ON Dbo.Person (LastName, FirstName)

INCLUDE (DateOfBirth);

-- The functionality of the 3 can be combined into 1 index and

-- the other 2 can be dropped

CREATE INDEX ix_LastFirstMiddle ON Dbo.Person (LastName, FirstName, MiddleName)

INCLUDE (DateOfBirth);

5. After you’ve optimized your existing indexes, consider the indexes you’d like to add. Can you make small changes to any of the existing indexes to accommodate your new index needs?

To a point we can extend our INCLUDE list to “cover” additional queries. A covering index is one that contains all the columns needed for a query allowing us to avoid the additional IO of a Key or RID lookup in the base table.

The value of the INCLUDE clause is that it allows us to create a covering index with a smaller footprint as the included columns only are only stored at the leaf level of an index, not at the root or intermediate levels so there’s less impact to index size than if we were to add additional columns as index keys.

Index to add: Person (LastName, FirstName) INCLUDE (SSN)

-- Instead we can add SSN to the INCLUDE clause

CREATE INDEX ix_LastFirstMiddle ON Dbo.Person (LastName, FirstName, MiddleName)

INCLUDE (DateOfBirth, SSN);

Sometimes it’s more effective to add an index with some overlap to keep indexes narrow and I/O small:

Index to add: Person (LastName, FirstName, NetworkId) INCLUDE (EmailAddress)-- New index – option 1

CREATE INDEX ix_LastFirstNetworkId ON Dbo.Person (LastName, FirstName, NetworkId) INCLUDE (EmailAddress);

Or consider moving a column we aren’t using in our WHERE clause in the INCLUDE clause where it will take up less space. Try different implementations of an index and test their effectiveness.

-- New index – option 2

CREATE INDEX ix_LastFirstNetworkId ON Dbo.Person (LastName, FirstName) INCLUDE (EmailAddress, NetworkId);

6. Limit your changes to no more than a 1 or 2 indexes per table at a time, and keep a close eye on the usage statistics after implementation to see if they’re being used. Index tuning is an iterative process, so plan to do additional tuning and to check usage numbers on a periodic basis.

Useful Advice

ü Never implement a new index without careful consideration, evaluation and testing. If using the Missing Indexes DMV, read Limitations of the Missing Indexes Feature. The same applies when altering or dropping indexes. If index hints are present in code, disabling or removing an index will break the code.

ü Don’t duplicate your table by creating an index with a lengthy INCLUDE clause. Wider tables can justify wider indexes since the I/O savings can still be substantial. I try to INCLUDE no more than 1/3 of the table’s columns.

ü I like to limit the actual index keys (stuff to the left of INCLUDE) to no more than 3 columns. The key columns take up more space in an index than the INCLUDE columns, and I find 3 columns yields good selectivity.

ü I try to keep the number of indexes on tables in busy OLTP systems to no more than 5 (rule of thumb). Six is OK, 29 is not! More indexes on an OLAP system are appropriate.

ü Verify that the column order SQL’s recommending is correct. The choice of leading column drives statistics and is key to whether the optimizer chooses to use the index. Ideally it will be selective and used in the WHERE clause of multiple queries. Additional guidelines are that columns used for equality comparisons should precede those used for inequality comparisons and that columns with greater selectivity should precede those with fewer distinct values.

ü Create indexes on columns used to JOIN tables.

ü Drop unused or very seldom used indexes after verifying they aren’t used to generate a critical report for the CEO once a year. Remember that the DMV counters are reset each time SQL is restarted. Consider collecting data at intervals over a longer period of time to get a more accurate picture of index usage. It’s also a good practice to script out and save any indexes you plan to drop should you need to rebuild them in a hurry.

Additional Index design guidelines:

MSDN - Clustered index design guidelines

MSDN - General Index design Guidelines

Susan Van Eyck

SQL Server Premier Field Engineer