Implications of the SQL Server Bulk Logged recovery model on point in time restores

Choosing the database recovery model is one of the more important choices DBAs have. If the business does not need to recover a database to a point in time (PIT) then the SIMPLE recovery model is the choice for you. However, if PIT recovery is necessary, you must use the FULL recovery model. The other recovery model, BULK LOGGED exists so that you have the ability to make certain operations faster while still being able to potentially recover to a PIT. However, going the way of BULKED LOGGED is not without its potential dangers. In this blog post I want to take a look at why you shouldn’t use the BULK LOGGED operation as a full-time database recovery model and expect to be able to recover to a point in time should a failure occur.

First, I’ll create a database to use for the demos.

USE [master]

GO

IF DB_ID('MinimalLoggingRecovery') IS NOT NULL

BEGIN

ALTER DATABASE MinimalLoggingRecovery

SET SINGLE_USER WITH ROLLBACK IMMEDIATE

DROP DATABASE MinimalLoggingRecovery

END

GO

CREATE DATABASE MinimalLoggingRecovery ON PRIMARY

( NAME = N'MinimalLoggingRecovery_Data', FILENAME = N'C:\SQL\MinimalLoggingRecovery_Data.mdf' ,

SIZE = 1024MB , MAXSIZE = UNLIMITED, FILEGROWTH = 50MB )

LOG ON

( NAME = N'MinimalLoggingRecovery_Log', FILENAME = N'C:\SQL\MinimalLoggingRecovery_log.LDF' ,

SIZE = 100MB , MAXSIZE = 1024MB, FILEGROWTH = 25MB)

GO

Next, make sure the database is set to the BULK LOGGED recovery model.

ALTER DATABASE MinimalLoggingRecovery

SET RECOVERY BULK_LOGGED

GO

USE MinimalLoggingRecovery

GO

Now I am going to create two tables to use in the example. The first table, ImportantClusteredTable, will mimic an OLTP table used by a mission critical application. Imagine that records are inserted into this table on a regular basis and the table supports the day to day needs of the business. The other table, NonImportantHeapTable, will be a user table that a staff DBA creates to load some data into for maintenance operations. This table supports no business function.

CREATE TABLE ImportantClusteredTable

(

Fld1 INT NOT NULL, Fld2 INT NOT NULL, Fld3 INT NOT NULL , Fld4 INT IDENTITY(1,1) PRIMARY KEY CLUSTERED

)

GO

CREATE TABLE NonImportantHeapTable

(

Fld1 INT NOT NULL, Fld2 INT NOT NULL, Fld3 INT NOT NULL

)

GO

Create a full database backup so that the database does not behave as though it is in the SIMPLE recovery model.

BACKUP DATABASE MinimalLoggingRecovery

TO DISK = 'C:\SQL\MinimalLoggingRecoveryFull.bak' WITH INIT

GO

My first operation will be to insert 5000 records into the ImportantClusteredTable table. This will be a fully logged operation (assuming you do not have Trace Flag 610 enabled).

DECLARE @Value INT

SET @Value = 1

INSERT INTO ImportantClusteredTable(Fld1, Fld2, Fld3)

SELECT TOP 5000 @Value, SalesOrderDetailID + 100, SalesOrderDetailID + 99

FROM AdventureWorks2012.Sales.SalesOrderDetail

GO

To prove that the operation was fully logged, use the sys.fn_dblog function to return the log records generated associated with that specific table name.

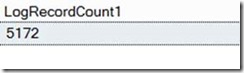

SELECT LogRecordCount1 = COUNT(*)

FROM sys.fn_dblog(null, null)

WHERE AllocUnitName LIKE '%ImportantClusteredTable%'

GO

The query above returned 5172 log records on my system.

Next, perform a checkpoint followed by a log backup. This will ensure that the log records are flushed to disk and that the fn_dblog function no longer returns information associated w/ the log records flushed to disk.

CHECKPOINT

GO

BACKUP LOG MinimalLoggingRecovery

TO DISK = 'C:\SQL\MinimalLoggingRecoveryLog1.bak' WITH INIT

GO

Perform the same INSERT operation again and view the number of log records generated. This number will likely be slightly different from the number above.

DECLARE @Value INT

SET @Value = 2

INSERT INTO ImportantClusteredTable(Fld1, Fld2, Fld3)

SELECT TOP 5000 @Value, SalesOrderDetailID + 100, SalesOrderDetailID + 99

FROM AdventureWorks2012.Sales.SalesOrderDetail

GO

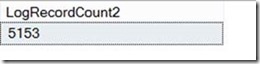

SELECT LogRecordCount2 = COUNT(*)

FROM sys.fn_dblog(null, null)

WHERE AllocUnitName LIKE '%ImportantClusteredTable%'

GO

In my case I see 5153 log records.

Perform another checkpoint and log backup.

CHECKPOINT

GO

BACKUP LOG MinimalLoggingRecovery

TO DISK = 'C:\SQL\MinimalLoggingRecoveryLog2.bak' WITH INIT

GO

Now, update all of the records from the original INSERT statement so that Fld1 is 9999. View the log records generated from this UPDATE statement. Again, this is a fully logged operation. (See link below for operations that are minimally logged)

UPDATE ImportantClusteredTable

SET Fld3 = 9999

WHERE Fld1 = 1

GO

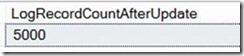

SELECT LogRecordCountAfterUpdate = COUNT(*)

FROM sys.fn_dblog(null, null)

WHERE AllocUnitName LIKE '%ImportantClusteredTable%'

GO

In this case I had 5000 log records – which is the same number as the number of records I updated.

Get the current timestamp on the system and wait 5 seconds. After waiting 5 seconds, perform a BULK LOGGED operation. In this case the NonImportantHeapTable is INSERTed into using the TABLOCK hint. This will cause the INSERT operation to be minimally logged. This is to mimic a scenario where a user or application performs a minimally logged operation while in the BULK LOGGED recovery mode.

SELECT GETDATE()

WAITFOR DELAY '0:0:5'

DECLARE @Value INT

SET @Value = 3

INSERT INTO NonImportantHeapTable WITH(TABLOCK)(Fld1, Fld2, Fld3)

select TOP 5000 @Value, SalesOrderDetailID + 100, SalesOrderDetailID + 99

FROM AdventureWorks2012.Sales.SalesOrderDetail

GO

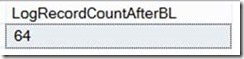

SELECT LogRecordCountAfterBL = COUNT(*)

FROM sys.fn_dblog(null, null)

WHERE AllocUnitName LIKE '%NonImportantHeapTable%'

Looking at the log records generated for the NonImportantHeapTable, I see 64 log records. Since this was a minimally logged operation, only allocation page changes were captured in the transaction log.

Capture a point in time after the BULK LOGGED operation occurs.

SELECT GETDATE() --make sure we know what time to restore to

GO

Here are the values I captured from the SELECT GETDATE() statements above.

2013-04-12 14:07:18.913 --before

2013-04-12 14:07:24.190 --after

Now, assume an unfortunate event occurs and puts the state of the business in jeopardy – maybe a user accidentally deletes all sales made in the past 45 minutes (assume it’s a LOT of sales). For the given scenario, you determine that best course of action is to restore the database to a point in time BEFORE the error occurs. No sweat – you’ve been taking log backups every hour, right?

So, the first thing you’ll want to do is take a tail log backup to ensure that you preserve all log records currently in the transaction log but not yet reflected in a log backup. You decide to use WITH NORECOVERY to ensure no new modifications can occur that modify the state of the database.

use master

go

BACKUP LOG MinimalLoggingRecovery

TO DISK = 'C:\SQL\MinimalLoggingRecoveryLog3.bak' WITH INIT, NORECOVERY

GO

Next, restore the full backup and use NORECOVERY.

RESTORE DATABASE MinimalLoggingRecovery

FROM DISK = 'c:\SQL\MinimalLoggingRecoveryFull.bak'

WITH NORECOVERY

GO

Next, restore the 2 log backups you made using NORECOVERY.

RESTORE LOG MinimalLoggingRecovery

FROM DISK = 'c:\SQL\MinimalLoggingRecoveryLog1.bak'

WITH NORECOVERY

GO

RESTORE LOG MinimalLoggingRecovery

FROM DISK = 'c:\SQL\MinimalLoggingRecoveryLog2.bak'

WITH NORECOVERY

This is the delicate part. You know the deletion occurred at around 2013-04-12 14:07:18.913 (I used the first GETDATE() statement returned from the output above). This point in time is BEFORE any BULK LOGGED operation occurred, but after our last log backup (not including the tail log) occurred.

RESTORE LOG MinimalLoggingRecovery

FROM DISK = 'c:\SQL\MinimalLoggingRecoveryLog3.bak'

WITH NORECOVERY, STOPAT = '2013-04-12 14:07:18.913'

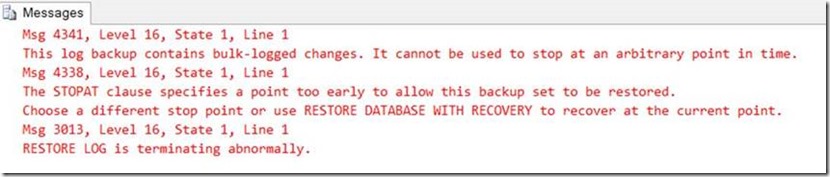

However, you receive the following error on the RESTORE statement. Why?

Well, the BULK LOGGED recovery model can be a fickle beast. You see, when the insert into the heap table above occurred, it only logged allocation page changes as it was minimally logged. When the tail log backup was taken, it contained that minimally logged operation. Since it is possible that the tail log backup contained operations that cannot be reconstituted (again – only the allocation pages were changed there – there is no record of the data page changes) it is not possible to recover to a point in time for that transaction log backup interval. I can restore the entire tail log backup, but that would put us back to where we started – the data would still be missing. So, essentially the data changes made after the last log backup that didn’t contain bulk logged operations, are gone.

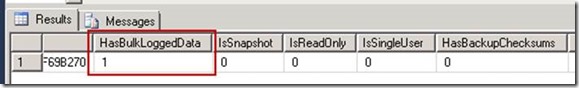

As a precaution to save yourself some time (and potentially frustration), use the RESTORE HEADERONLY command to determine if a particular backup contains minimally logged operations:

RESTORE HEADERONLY

FROM DISK = 'c:\SQL\MinimalLoggingRecoveryLog3.bak'

The moral of the story here is to ensure that you do not run your databases in the BULK LOGGED recovery mode perpetually. If you need to be able to recover to a point in time, you must stay in the FULL recovery model. It is perfectly OK to switch from FULL to BULK LOGGED to perform minimally logged operations (such as index rebuild operations), just make sure that once you do, you switch back to the FULL recovery model and take a log backup. Otherwise, if you may run into unfortunate situations like the one I described here.

Thanks,

Tim Chapman – SQL Dedicated Premier Field Engineer