SharePoint 2013: Crawl database grows because of crawl logs and degrades crawl performance.

The crawl log records the status of everything that was crawled. It lets you verify whether crawled content was added to the index successfully, whether it was excluded because of a crawl rule, or whether indexing failed because of an error. It also records additional information about the crawled content, including the time of the last successful crawl, the content source (there can be more than one), the content access account used, and whether any crawl rules were applied.

Crawl information is stored in the following tables of the CrawlStoreDB database:

•“Msscrawlhostlist” table contains each hostname with its hostid.

•“MssCrawlHostsLog” table stores the hosts of all the URLs processed in the crawl.

•“MssCrawlUrlLog” table keeps track of the history of errors encountered in the crawls.

These logs are the primary troubleshooting tool for determining the cause of crawl problems. They are the first indication of problems on a site, such as why the crawler is not accessing certain documents or certain sites. They also show whether an individual document has been crawled successfully.

Please note: crawl logs and crawl history are cleared when an index reset is performed.

Symptom:

The search database keeps growing because the crawl logs and crawl history keep growing. With a large amount of content, the crawl logs can grow to several GB. Typical symptoms are:

· Poor crawl page performance.

· Crawls take longer to complete.

· Crawl logs take longer to update.

· Search DB grows in size for the same or a similar number of indexable items.

· Crawled properties take longer to convert to managed properties.

The SharePoint ULS logs contain messages such as the following:

10/24/2013 13:36:17.32 mssdmn.exe (0x1048) 0x1E54 SharePoint Server Search PHSts dvi9 High ****** COWSSite has already been initialized, URL https://contoso/customer/test, hr=80041201

10/24/2013 13:36:17.32 mssdmn.exe (0x1048) 0x1E54 SharePoint Server Search PHSts dv63 High CSTS3Accessor::InitURLType: Return error to caller, hr=80041201

10/24/2013 13:36:17.32 mssdmn.exe (0x1048) 0x1E54 SharePoint Server Search PHSts dv3t High CSTS3Accessor::InitURLType fails, Url sts4://contoso/siteurl=customer/test/siteid={9aaac17f-88bc-45c1-8bbd-362bcaf6c0e4}/weburl=/webid={1cdedb2d-9524-4ba7-b73e-6bbe737857e0}/listid={5880f808-7af4-4974-b378-4ce2a3af9177}/folderurl=/itemid=47, hr=80041201

10/24/2013 13:36:17.32 mssdmn.exe (0x1048) 0x1E54 SharePoint Server Search PHSts dvb1 High CSTS3Accessor::Init fails, Url sts4://contoso/siteurl=customer/test/siteid={9aaac17f-88bc-45c1-8bbd-362bcaf6c0e4}/weburl=/webid={1cdedb2d-9524-4ba7-b73e-6bbe737857e0}/listid={5880f808-7af4-4974-b378-4ce2a3af9177}/folderurl=/itemid=47, hr=80041201
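To get a rough idea of how often these entries occur, the log lines can be filtered and counted per event ID. The following is a minimal sketch in plain PowerShell, not an official tool: the function name Get-CrawlErrorSummary and its parameters are hypothetical, and it assumes the lines have the same layout as the entries quoted above (category PHSts followed by the event ID, and the result code at the end of the line). The two sample lines are copied from the entries above.

```powershell
# Hypothetical helper: counts PHSts log entries that end with a given hr code,
# grouped by event ID (the token that follows the PHSts category).
function Get-CrawlErrorSummary {
    param(
        [string[]]$Line,
        [string]$ResultCode = '80041201'
    )
    # Build the pattern by concatenation so the trailing $ stays a regex anchor.
    $codePattern = 'hr=' + [regex]::Escape($ResultCode) + '\s*$'

    $Line |
        Where-Object { $_ -match 'PHSts' -and $_ -match $codePattern } |
        ForEach-Object { if ($_ -match 'PHSts\s+(\S+)\s') { $Matches[1] } } |
        Group-Object |
        Select-Object Name, Count
}

# Two of the entries shown above, used as sample input.
$sample = @(
    '10/24/2013 13:36:17.32 mssdmn.exe (0x1048) 0x1E54 SharePoint Server Search PHSts dvi9 High ****** COWSSite has already been initialized, URL https://contoso/customer/test, hr=80041201',
    '10/24/2013 13:36:17.32 mssdmn.exe (0x1048) 0x1E54 SharePoint Server Search PHSts dv63 High CSTS3Accessor::InitURLType: Return error to caller, hr=80041201'
)

Get-CrawlErrorSummary -Line $sample
```

In practice the input would come from the ULS log files themselves (for example through Get-Content); because the pattern uses \s+, it should tolerate both space-separated and tab-separated lines.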

Crawl Logs deletion:

The Search Service Application property that controls how long crawl logs are retained is “CrawlLogCleanUpIntervalInDays”. The default value for this property is 90 days.

It can be modified using the following PowerShell commands:

$ssa = Get-SPServiceApplication -Name "Search Service Application"

$ssa.CrawlLogCleanUpIntervalInDays = 30

$ssa.Update()

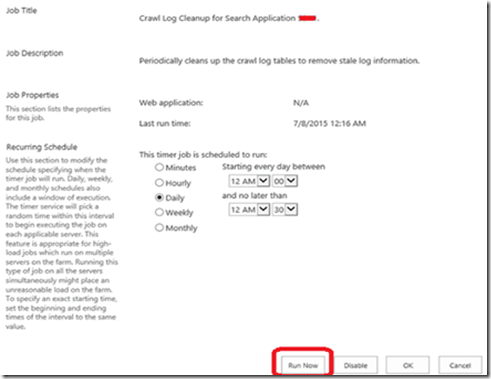
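Before and after changing the value, it can help to read the setting back. A minimal sketch, assuming it is run on a farm server in a session where the SharePoint snap-in is available; it only reads the property and changes nothing:

```powershell
# Load the SharePoint cmdlets if the session is not the SharePoint Management Shell.
Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue

# List every Search Service Application with its current crawl log retention (in days).
Get-SPEnterpriseSearchServiceApplication |
    Select-Object Name, CrawlLogCleanUpIntervalInDays
```

If the farm hosts more than one Search Service Application, each one appears as a separate row, which makes it easier to confirm that the intended one was changed.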

Once this is done, run the timer job responsible for cleaning up the crawl logs: Crawl Log Cleanup for Search Application <<Search Service Application>>, where <<Search Service Application>> is the name of your Search Service Application.

You can run this job manually from Central Administration.

It can also be run through PowerShell:

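# The name filter can match more than one job (one per Search Service Application), so start each match.
$jobs = Get-SPTimerJob | Where-Object { $_.Name -match "Crawl log" }

$jobs | ForEach-Object { $_.RunNow() }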
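To confirm that the cleanup job was actually picked up by the timer service, its last run time can be checked a few minutes later. A minimal sketch, assuming the same name filter as above and a session with the SharePoint snap-in loaded:

```powershell
# Show when each matching crawl log cleanup job last ran.
Get-SPTimerJob |
    Where-Object { $_.Name -match "Crawl log" } |
    Select-Object Name, DisplayName, LastRunTime
```

A LastRunTime that has not moved forward suggests the job has not run yet; the job history in Central Administration shows whether the run succeeded.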