Setting up SQL Server 2014 Always On Availability Groups with Azure Premium Storage using the new SQL Server 2014 Always On resource group template

With Azure premium storage offering launched, I bet many of you have been wanting to use it for setting up their SQL Server 2014 Always on Availability Group clusters.

While, one can go ahead and set it up manually, what would be ideal is to use the available SQL Server 2014 Always On resource group template. A few clicks and you have the whole SQL Server Always On Availability Group environment setup.

At the time of writing of this blog, the template uses A3 Standard instances as default and a standard RA-GRS storage account. I am writing some steps below that you can implement to edit the setup created by the template for the SQL nodes to use Azure Premium Storage disks for Data & Logs.

These steps are based out of Keith’s blog on extending SQL Server Always On Availability Group setup, I have tweaked them to use Azure premium storage.

Please do ensure you follow all the steps and in the same order.

Create the SQL Server Always On Availability Group setup from template with DS-series VMs

The Azure premium storage account can at this point in time only be attached to the DS series VM. So our first step will be creating the template using DS series VM.

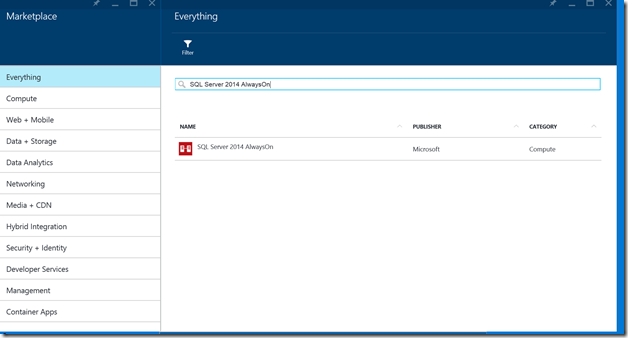

Logon to the new portal at https://portal.azure.com and in the +New page click on Azure Marketplace. Type “SQL Server Always On” and hit enter you will find the template in the list (Figure 1).

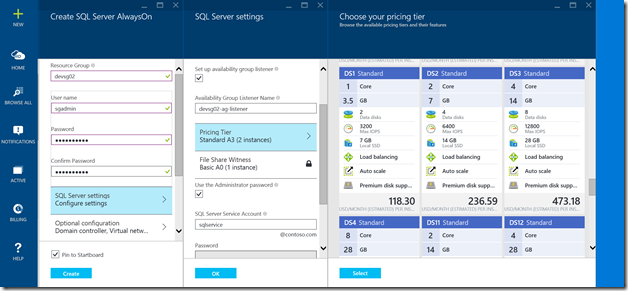

It loads the template, and you can enter a resource group name (make a note of the name as we will be creating a premium account in this resource group in next steps).

The template uses A3 instances by default. Click on the SQL Server Settings option and click on Pricing Tier. It will load the recommended pricing tier, click View All and select the DS-Series VM you want to use. Please keep in mind, the VM instance SKU matters for the IOPS you need from the premium storage. The SKUs have the maximum IOPS number so it should be easy to identify the one that you need. I selected DS2 the for this which has 6400 IOPS (Figure 2).

Create premium storage account in the same Resource Group

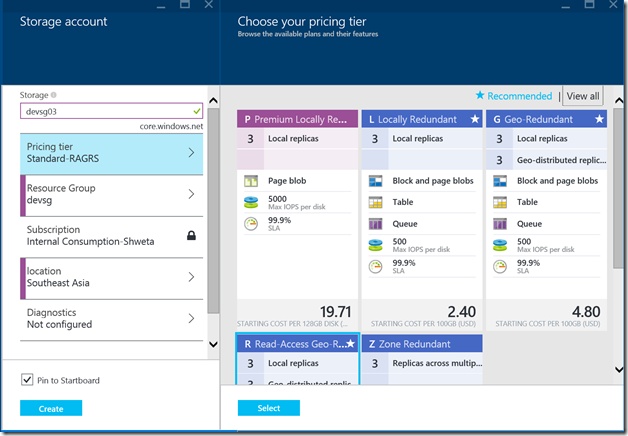

After some time elapses, while the servers are getting created we can go ahead and create a premium storage account in the same resource group. This is very important we want the account to be created as close to the VMs as possible. For doing this, please click on the +New button, Data + Storage and select Storage Account option.

This will load the Create Storage account blade. Click on Pricing Tier and you will see list of storage account tiers. Please click “View All” and you will find the new Premium Locally Redundant option. Please select that and create the storage account. (Figure 3)

Now let’s wait till the entire setup has been deployed. Once you get a confirmation notification on the dashboard.

Please repeat the following steps for every SQL Node that has been created in the template.

Disable Automatic Clustering

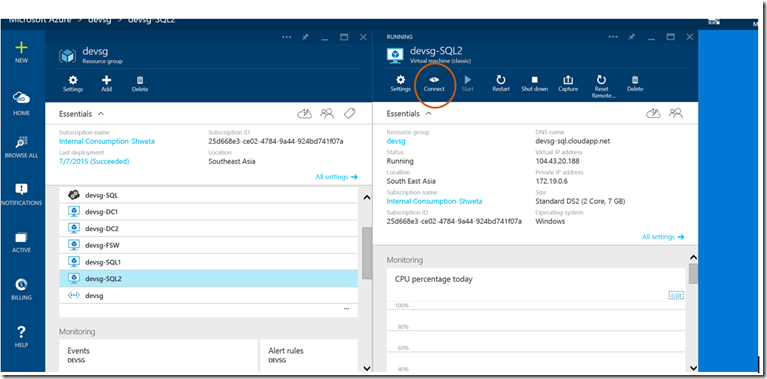

Select the SQL Server node and then logon to the SQL Server using “Connect” option (Figure 4).

Figure 4Once you are connected, run the following powershell command to disable automatic clustering. We need to do this because we don’t want SQL Server to automatically cluster each time we add/remove a disk.

Get-StorageSubSystem -FriendlyName "Clustered Storage Spaces*" | Set-StorageSubSystem -AutomaticClusteringEnabled $False

I use powershell_ise.exe as I like the intellisense feature it provides. Once you have run this command, please keep the connection running and do not disconnect.

-

Remove regular storage data disks and attach premium storage disks

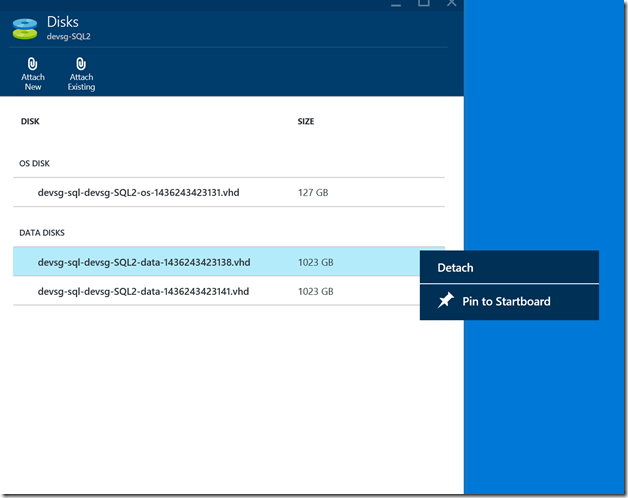

We need to remove the regular data disks that have been attached to the SQL Server node. To do that let’s go back to the portal, select the SQL server node, click on Settings which loads the settings blade and click on Disks.

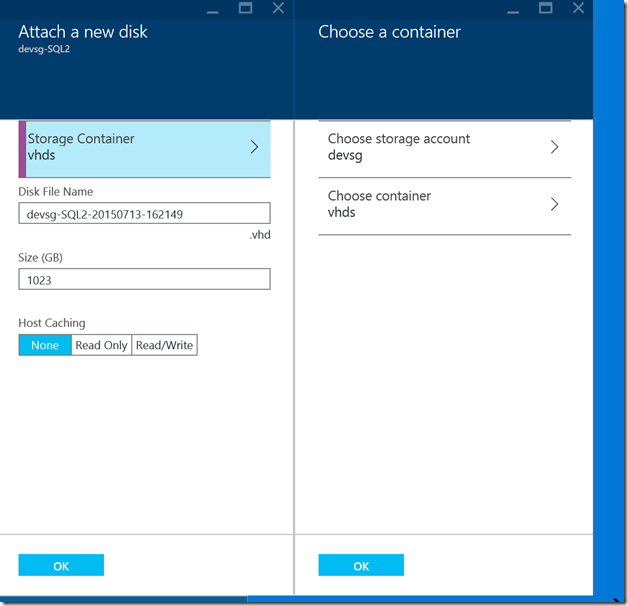

In the disks tab, select the data disks , right click and select Detach (Figure 6). Detach all the disks attached one by one (these are done sequentially so you will need to wait till previous one has been detached before you go to the next one).Once all the attached Data Disks have been detached, please click on “Attach New” disk. Click on “Select Storage Container” option, and select the new premium storage account you had created in step 2. Create a container “vhds” if you already haven't done that. Please make sure you select the premium storage account, as only this account tier has premium storage disks.

Select host caching as “None” if you are going to use it for logging and select it as Read Only if its going to be used for data. (Figure 7)

Setup disks for use

Once you have attached all the disks, let’s go back to the remote desktop connection powershell window in the SQL Server and run the following powershell commands:

$datadisks = Get-PhysicalDisk -CanPool $true

This gets the list of data disks that have been attached and can be pooled.

Note: If you plan to have both logging and data on the premium storage disks, please make sure you attached separate set of disks for each, and repeat 4 and 5 for each of them.

New-StoragePool -FriendlyName "SQLDataPool" -StorageSubSystemFriendlyName "Clustered Storage Spaces*" -PhysicalDisks $datadisks | New-VirtualDisk -FriendlyName "SQLDataDisk" -UseMaximumSize -NumberOfColumns $datadisks.Count -ResiliencySettingName "Simple" -ProvisioningType Fixed -Interleave 65536 | Initialize-Disk -Confirm:$False -PassThru | New-Partition -DriveLetter J –UseMaximumSize

This creates the drive J, please use any letter you like. Once the drive is created, please run the following command to format it for use.

Get-Partition -DriveLetter J | Format-Volume -FileSystem NTFS -NewFileSystemLabel "SQLDataVol" -AllocationUnitSize 65536 -Confirm:$False

Change the default location for data and logging in SQL Server Node

Please use the Link to change the data and log settings on SQL Server node.

You can run the SQLIO tool to get the details of IOPS and verify that you are maximizing the disk performance. After following these steps, I was getting 6450 IOPS.