Solving the “big legacy data” problem using Azure Data Factory…

I am sure as architects and systems developers we have at least one Big Data solution we are working on. Today, many enterprises and businesses are in their “revamp” stage of applications where there existing systems are no longer adequate to handle current data processing workloads. The systems have been built/updated over years, some times more than a decade. They are now moving towards SaaS based solutions preferably leveraging public cloud offerings that bring in cost efficiencies as well as scalable to the increasing workloads.

I am working with a few such enterprises, who want to adopt Azure and its services to evolve their equipment monitoring and maintenance systems (yes yes Internet of Things. This blog explains what their existing big legacy data scenario is and a possible solution that came up and is being implemented after weeks’ of deliberation and PoCs.

I have explained the problem in quite a detail, as I believe it is necessary to understand the scenario. However, if you are in a hurry it should be sufficient for you can scan through the infographics and skip to the solution.

The big legacy data problem

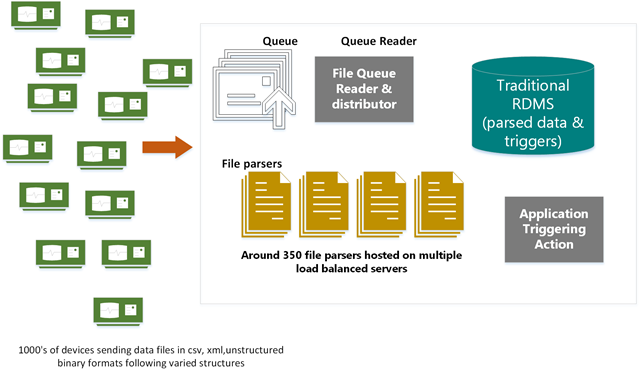

Following infographic (figure 1) describes a generalized version device monitoring and maintenance system of a large scale device manufacturer who could have 100’s of device types built and developed across different divisions. Evolved over years, typically various device types have their own data formats and structures. This eventually means their own parsers!

Figure 1: Generalized Legacy systems

Key features of the existing system:

- The system accepted and processed monitoring data from 10,000’s of devices (about 400 device types)

- Each of the device types had their own data format and structure, some were XML, CSV, Binary and unstructured.

- There were multiple parsers built to cater one or more device types (that had similar file structures).

- All of the parsers parse files extract information and create SQL insert statements that get inserted in batches on the RDMS

- Triggers run and create action inserts for the application to query and perform actions like mailing alerts

What are the pain points?

- Multiple file formats, multiple structures and 1000’s of such files being uploaded every day

- Infrastructure that hosts the parsers not adequate and getting very expensive to maintain

- Maintenance nightmare of these parsers, data

- Expensive RDMS storage

- Not scalable, adding a new device type takes a really long cycle ;

- Similarly adding new triggers/conditions to check the device also takes a long time

Desired Workflow

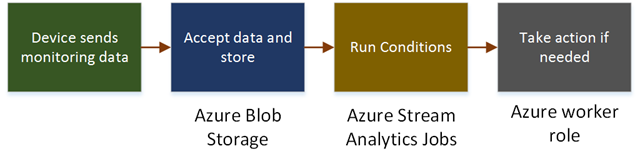

Building a solution on Azure, we could easily cater to a lot of the pain points; infrastructure scaling and costs reduction by using the services provided by Azure, replacing RDMS with Azure blob storage; deploying services like Stream Analytics that can query data directly from blob storage.

A typical desired workflow would be:

Figure 2: Ideal Workflow

But the problem persists

Our ideal solution has one caveat, which is, we still need to tackle the problem where we have various file structures, and we cannot really run conditions on the files that have been uploaded as is. For example, if a condition is:

If value1 > 0.5 for 30 minutes, and value2 > 1.5 for 30 minutes then generate an alert.

The above condition could be easily built using Stream Analytics query. However, value1 and value2 could be coming in from two different files different structures and different formats, one could be XML and one could be CSV, or both could be xml but different structures.

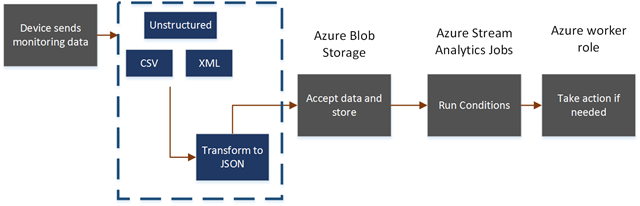

So we still need to PARSE all of them into one format & structure.

The Solution

After some weeks it struck me that we don’t need to “PARSE” but “TRANSFORM” data into one format. Simply because Stream Analytics works on a loosely defined schema unlike a custom application which expects a fixed data format.

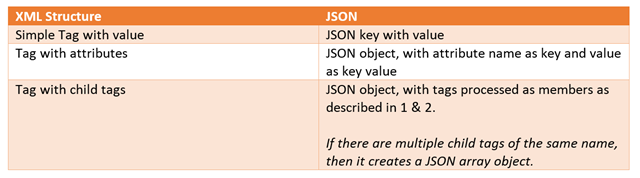

If we look at the CSV & XML format, it may vary in different ways but it still as structure to it, something that can be read and predicted. XML files, will have tags, child tags, attributes and values, CSV has headers and values or no headers only values.

So it’s possible to write a single transformation script that could transform XML & CSV into JSON (I chose JSON because it’s the most flexible structured format available right now and works beautifully with Stream Analytics). To process the unstructured data, it could be achieved by a hive job.

To modify the workflow and accommodate it I would add a step to transform data.

Figure 3: Adding file transformation

Following is the C# script that transforms any XML & CSV data into JSON, this is generic and should work on any kind of structure. Available on github. It follows a simple algorithm that runs recursively navigating through the XML tags hierarchy.

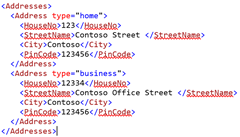

For example, lets take addresses as XML tags:

It’s JSON equivalent would be:

“Addresses”:[ “Address”:{“type”:”home”,”HouseNo”:”123,”StreetName”: “Contoso Street”, “City” : “Contoso”, “PinCode” : 123456},{“type”: “business”, “ HouseNo”: 12334, “StreetName”: “Contoso Street”, “City” : “Contoso”, “PinCode” : 123456}]]

Similarly, the script also transforms CSV files. If the CSV file has a header, it creates an array of JSON objects, each row having header key and their respective values. If there is no header, it will create a key as “key<n>” where n is the column index. The code uses a third party open sources CSVReader provided by Greene Stellman, available for download and use from this link.

Now we have a script that can transform any XML/CSV file into JSON objects. These can be directly queried by Stream Analytics. In the above, example if I want the data to be extracted only if address is home I could easily query it now:

Select * from input where address.type= “home” into output

Scaling transformation to process thousands of incoming files…

Next step is to scale this script to process thousands of incoming file. We also need to run Hive scripts on the unstructured files to extract data. I figured Azure Data Factory would be an ideal solution, it is completely managed, runs and maintains the pipelines, provides detailed audit and logging reports; real time dashboard. Otherwise managing these data processing pipelines at such a large scale would be a nightmare.

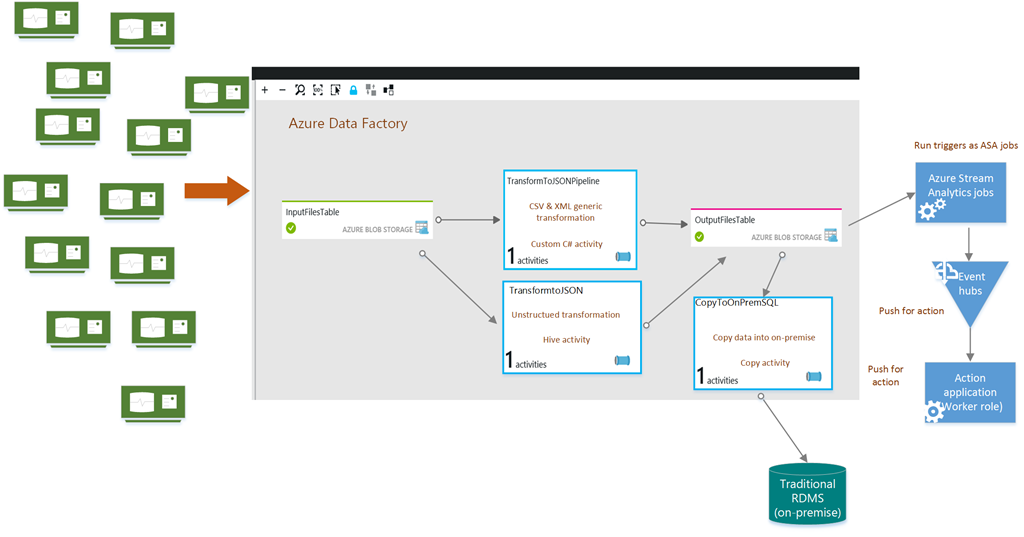

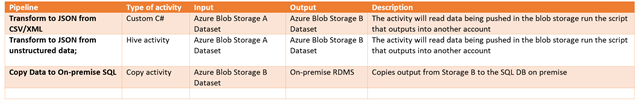

Following diagram shows the Data Factory dashboard implementing three pipelines for processing XML/CSV; Unstructured and also copying data into the on-premise SQL database (Oracle/SQL Server) for other legacy applications that depend on it.

Figure 4: Azure Data Factory Solution

In the Azure Data Factory, I created three pipelines:

All the activities will push data into blob storage, which I can use as input in the Azure Stream Analytics jobs.

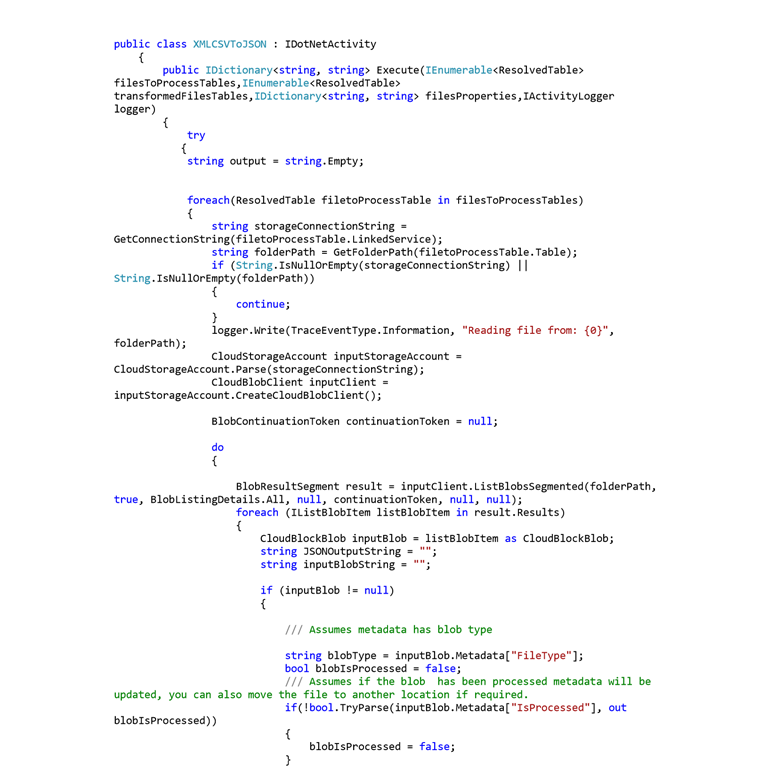

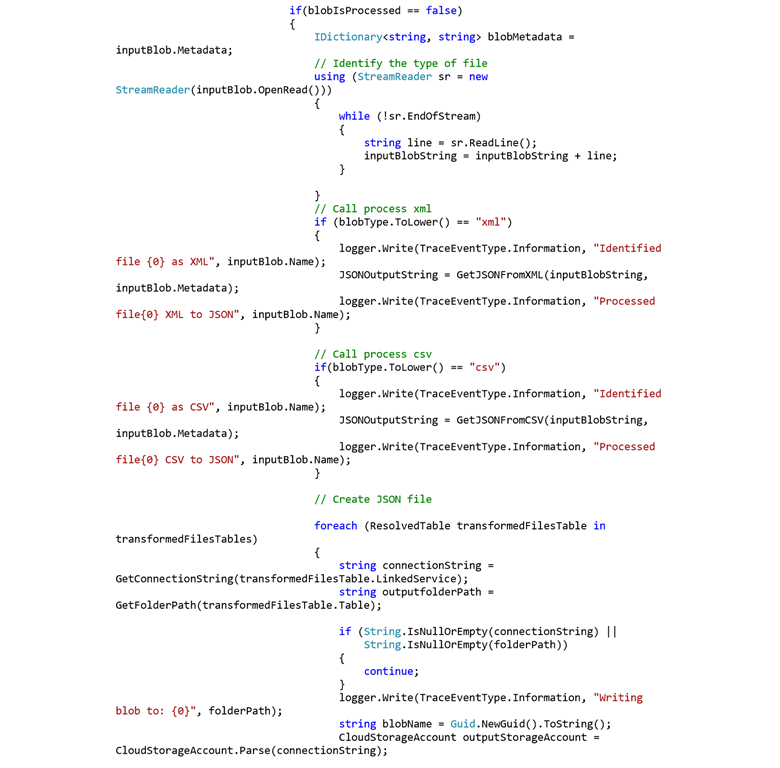

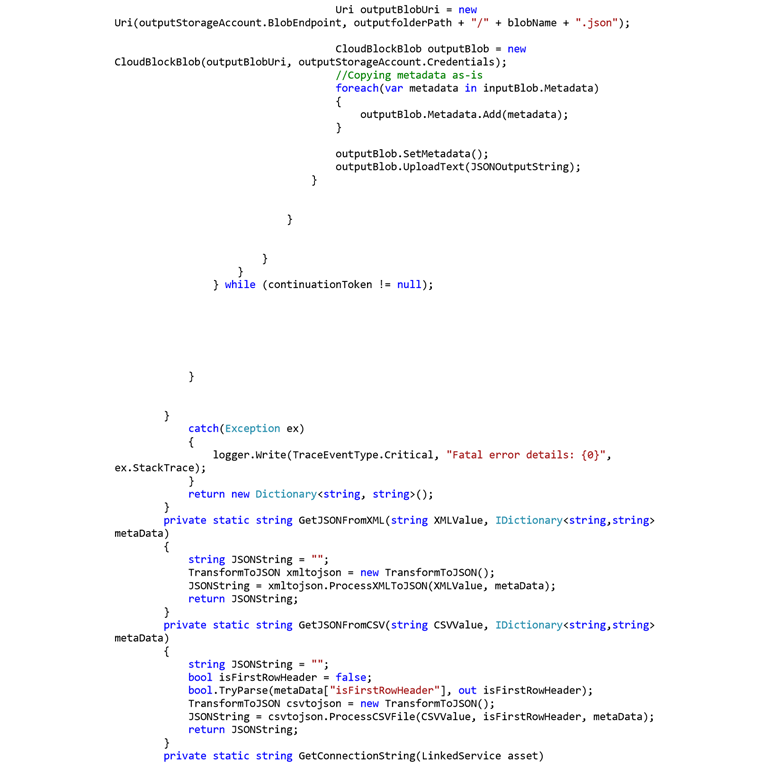

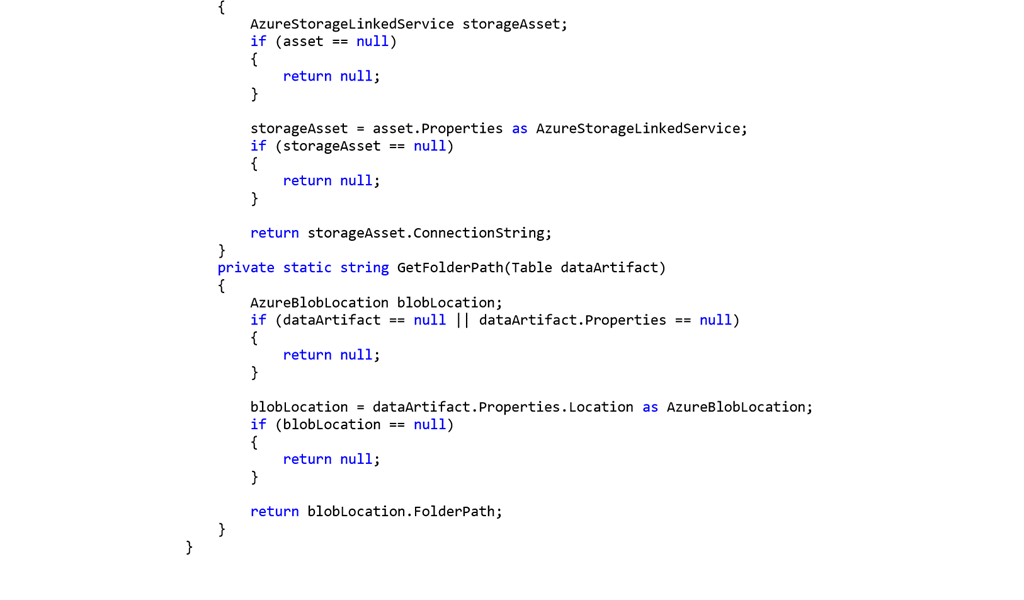

Following is the extract from the C# code that runs in the custom C# activity, you can find the complete project at github, along with scripts to setup Azure Data Factory. I have used the custom C# activity documentation in the azure portal as base, you can find the earlier steps on this link.

The base class needs to inherit the IDotNetActivity class. The function Execute needs to be implemented, it accepts Input tables which is a dataset (Explained later), and output tables.

The solution works beautifully, the output to blob storage could be easily queries by the Stream Analytics jobs running various conditions and is undergoing planned discussions for load tests. I will update the blog post should there be anything else.