PowerShell: Getting Link Counts per Host Domain

Let's consider this throwback Thursday. A couple of years ago, I posted this "PowerShell to Rebalance Crawl Store DBs in SP2013". As a side note, I mentioned the following PowerShell to leverage the CrawlLog object for viewing some Crawl Store level statistics about crawled documents, such as the "Document Count". For example:

$crawlLog = New-Object Microsoft.Office.Server.Search.Administration.CrawlLog $SSA

$dbHashtable = $crawlLog.GetCrawlDatabaseInfo()

$dbHashtable .Keys

Guid

----

2152167e-9dac-4d45-9724-4ed67f5d9643 #GUID of CrawlStoreOne

ecd49946-2bde-4ccc-a2b2-c523554bd923 #GUID of CrawlStoreTwo

The Crawl Store is perfect for any ongoing analysis and monitoring, but there are times when troubleshooting where it is incredibly helpful to capture counts directly from the MSSCrawlUrl and MSSCrawlQueue tables in the Crawl Store DB(s). In that same rebalancing Crawl Store DBspost, I demonstrated the following SQL Query (for each crawl store):

SELECT ContentSourceID, HostID, COUNT(*) AS linkCount

FROM [V5_SSA_CrawlStore].[dbo].[MSSCrawlURL] with (nolock)

Group by ContentSourceID, HostID

Order by ContentSourceID, HostID

Which generated a report such as the following:

| Crawl Store DB Name | ContentSourceID | HostID | linkCount |

|---|---|---|---|

| V5_SSA_CrawlStore | 1 | 4 | 20,558 |

| V5_SSA_CrawlStore | 6 | 2 | 14,813 |

| V5_SSA_CrawlStore | 6 | 3 | 10,818 |

| V5_SSA_CrawlStore2 | 4 | 1 | 77,836 |

| V5_SSA_CrawlStore3 | 4 | 1 | 79,835 |

...but there are times where you just don't have access to SQL and don't have a DBA handy. For this, fear not for we have PowerShell to the rescue.

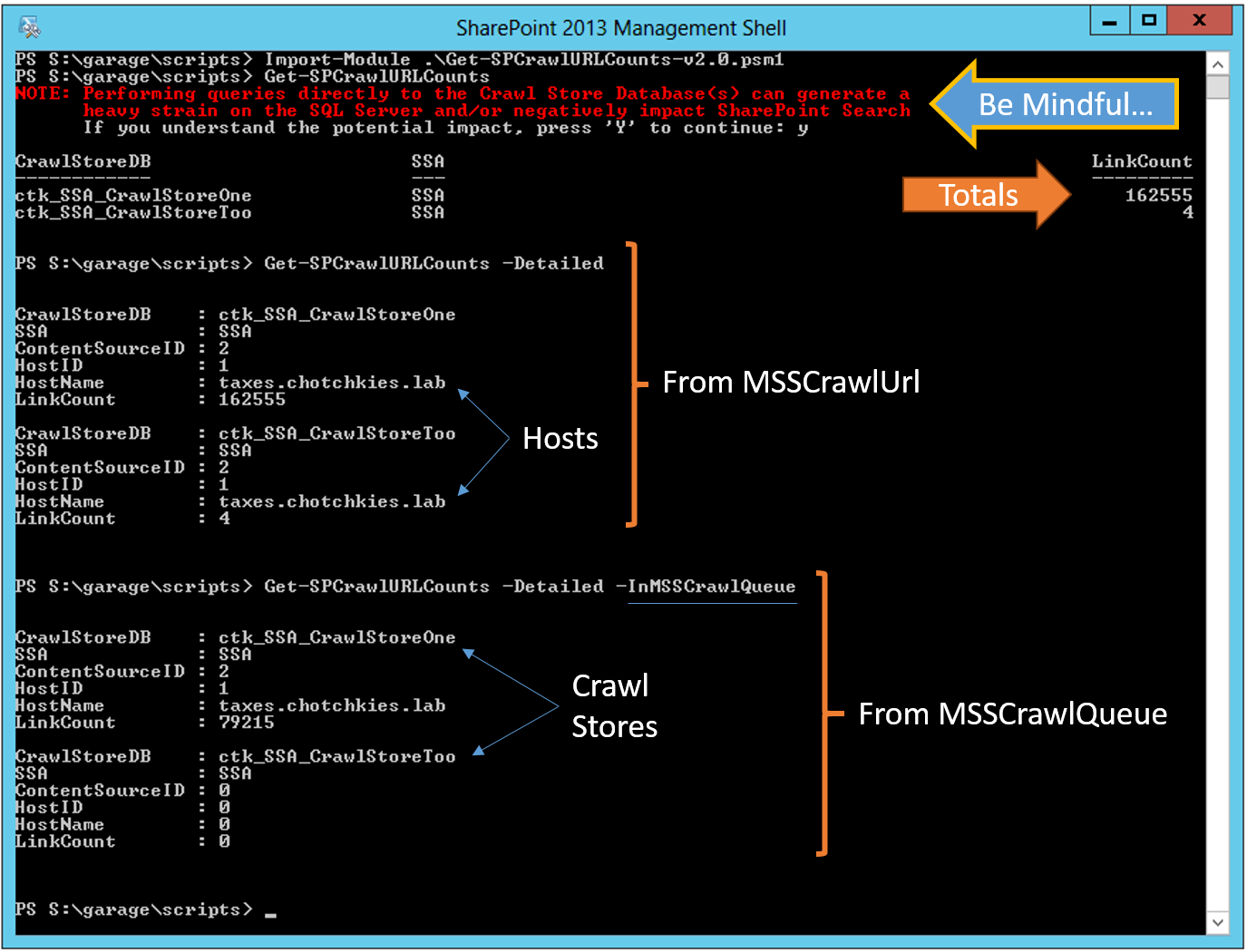

I recently developed/posted the Get-SPCrawlURLCounts-v2.0.psm1 module here (it's version 2.0 because this is a replacement to the one previously published by Brent Groom and Eric Dixon), which enables us to make the query from PowerShell.

In this first screen shot, we can see the comparable results from the CrawlLog versus the output from the custom module (and just to re-iterate, the CrawlLog is the preferred option for everyday use and creates the least impact) :

As I noted before (and as the script will prompt you the first time it runs), be mindful when running this module as it can have impact to the performance of your SSA. In other words, this script is intended for troubleshooting scenarios ...do not regularly run or schedule this query.

In fact, it's time to bring back my standard disclaimer:

ALL information in this blog is provided "AS IS" with no warranties and confers no rights. This blog does not represent the thoughts, intentions, plans or strategies of my employer. All content is solely my opinion and provided with a best effort to be based in reality. All examples, code samples, demonstrations, or anything resembling a “how-to” are provided "AS IS" without warranty of any kind, either express or implied, including but not limited to the implied warranties of merchantability and/or fitness for a particular purpose. Inappropriate comments will be deleted at the authors discretion. And yes, the spelling of strategery was intentional.

OK, being said... In the screen shot below, we see further examples of how to use this new custom module:

The benefit to crafting this into a module (versus a script) is that the cmdlet can be invoked as any of the following:

Get-SPCrawlURLCounts -ByContentSource

Get-SPCrawlURLCounts -ByHostId

#"detailed" is equivalent to: Get-SPCrawlURLCounts -ByContentSource -ByHostId

Get-SPCrawlURLCounts –Detailed

We can even pipeline an $SSA object directly to it, such as:

$SSA | Get-SPCrawlURLCounts –Detailed

Also, we can easily substitute in the MSSCrawlQueue table instead of the MSSCrawlUrl on the fly and get counts from the queue instead. For example:

Get-SPCrawlURLCounts –Detailed -inQueue

But the real reason for writing this version 2.0 was to have the end product be an array of objects that could be further manipulated (and there is still the option to export the CSV). For example:

Get-SPCrawlURLCounts -Detailed | WHERE {$_.HostName -match "chotchkies.lab"}

And exporting to CSV is easily accomplished by tacking on the flag “-toCSV” (optionally, you can specify a custom path with the “–Path c:\custom\” parameter ...otherwise the CSV file is created in the local path), such as:

Get-SPCrawlURLCounts -Detailed -toCSV

--------------------

To run this:

1. Import the module, such as:Import-Module ".\Get-SPCrawlURLCounts-v2.0.psm1"

2. Then run it as the examples above:

$SSA | Get-SPCrawlURLCounts –Detailed -toCSV

(And, for what it’s worth: I plan to add more into this crawl diagnostics module later…)

I hope this helps...