Managed object internals, Part 2. Object header layout and the cost of locking

While working on my current project, I ran into an interesting situation. For each object of a given type, I had to create a monotonically growing identifier, with a few caveats: 1) the solution should work in a multithreaded environment, 2) the number of objects is fairly large, up to 10 million instances, and 3) the identifier should be created lazily, because not every object needs one.

When I wrote the original implementation, I didn’t realize how many instances the application would have to deal with, so I came up with a very simple solution:

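    using System.Threading; // Interlocked

    public class Node
    {
        public const int InvalidId = -1;
        private static int s_idCounter;

        // Must start as InvalidId; the default value 0 would look like an assigned id
        private int m_id = InvalidId;

        public int Id
        {
            get
            {
                if (m_id == InvalidId)
                {
                    lock (this)
                    {
                        // Second check: another thread may have assigned the id
                        // while we were waiting for the lock
                        if (m_id == InvalidId)
                        {
                            m_id = Interlocked.Increment(ref s_idCounter);
                        }
                    }
                }

                return m_id;
            }
        }
    }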

The code uses the double-checked locking pattern, which allows the identity field to be initialized lazily in a multithreaded environment. During one of the profiling sessions, I noticed that the number of objects with a valid id had reached a few million instances, and the main surprise was that this didn’t cause any performance issues.

After that, I created a benchmark to measure the performance impact of the lock statement compared to a no-lock approach.

    public class NoLockNode
    {
        public const int InvalidId = -1;
        private static int s_idCounter;
        private int m_id = InvalidId;

        public int Id
        {
            get
            {
                if (m_id == InvalidId)
                {
                    // Leaving double check to have the same amount of computation here
                    if (m_id == InvalidId)
                    {
                        m_id = Interlocked.Increment(ref s_idCounter);
                    }
                }

                return m_id;
            }
        }
    }

To analyze performance differences, I’ll be using BenchmarkDotNet:

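    // 5 million instances, as noted in the benchmark methods below
    private const int Count = 5000000;

    private List<NodeWithLock.Node> m_nodeWithLocks;
    private List<NodeNoLock.NoLockNode> m_nodeWithNoLocks;

    // Rebuild the lists before every iteration, so that object construction stays
    // outside of the measured code and every iteration starts with unassigned ids.
    // (An expression-bodied property would rebuild the list inside the benchmark.)
    [IterationSetup]
    public void CreateNodes()
    {
        m_nodeWithLocks =
            Enumerable.Range(1, Count).Select(n => new NodeWithLock.Node()).ToList();
        m_nodeWithNoLocks =
            Enumerable.Range(1, Count).Select(n => new NodeNoLock.NoLockNode()).ToList();
    }

    [Benchmark]
    public long NodeWithLock()
    {
        // m_nodeWithLocks has 5 million instances
        return m_nodeWithLocks
            .AsParallel()
            .WithDegreeOfParallelism(16)
            .Select(n => (long)n.Id).Sum();
    }

    [Benchmark]
    public long NodeWithNoLock()
    {
        // m_nodeWithNoLocks has 5 million instances
        return m_nodeWithNoLocks
            .AsParallel()
            .WithDegreeOfParallelism(16)
            .Select(n => (long)n.Id).Sum();
    }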

NoLockNode is not suitable for multithreaded scenarios, but the benchmark never requests the Id of the same instance from two threads at the same time either. The benchmark mimics the real-world scenario in which contention is rare and, in most cases, the application just consumes an already created identifier.

Method                     | Mean        | StdDev    |
-------------------------- |------------ |---------- |
NodeWithLock               | 152.2947 ms | 1.4895 ms |
NodeWithNoLock             | 149.5015 ms | 2.7289 ms |

As we can see, the difference is extremely small. How can the CLR acquire 5 million locks with almost zero overhead?

To shed some light on the CLR’s behavior, let’s extend the benchmark suite with another case: a Node class that calls the non-overridden GetHashCode method in its constructor and discards the result:

    public class Node
    {
        public const int InvalidId = -1;
        private static int s_idCounter;
        private object syncRoot = new object();
        private int m_id = InvalidId;

        public Node()
        {
            GetHashCode();
        }

        public int Id
        {
            get
            {
                if (m_id == InvalidId)
                {
                    lock (this)
                    {
                        if (m_id == InvalidId)
                        {
                            m_id = Interlocked.Increment(ref s_idCounter);
                        }
                    }
                }

                return m_id;
            }
        }
    }

Method                     | Mean        | StdDev    |
-------------------------- |------------ |---------- |
NodeWithLock               | 152.2947 ms | 1.4895 ms |
NodeWithNoLock             | 149.5015 ms | 2.7289 ms |
NodeWithLockAndGetHashCode | 541.6314 ms | 4.0445 ms |

The result of the GetHashCode invocation is discarded, and the call itself does not affect the end-to-end time because the benchmark excludes construction time from the measurements. But the questions remain: why is there almost zero overhead for the lock statement in the NodeWithLock case, and why is there a significant difference when GetHashCode was called on the object instance in NodeWithLockAndGetHashCode?
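The effect can also be observed without BenchmarkDotNet. The following is only a rough sketch, not a proper benchmark (no warm-up, a single run, single-threaded); the names LockCostSketch, Create and LockAll are made up for this illustration. It locks every object in an array once and reports the elapsed time, for objects whose default GetHashCode was or was not called beforehand.

```csharp
using System;
using System.Diagnostics;

public static class LockCostSketch
{
    // Creates 'count' plain objects; optionally calls the default
    // (non-overridden) GetHashCode on each and discards the result.
    public static object[] Create(int count, bool touchHashCode)
    {
        var items = new object[count];
        for (int i = 0; i < count; i++)
        {
            items[i] = new object();
            if (touchHashCode)
            {
                items[i].GetHashCode();
            }
        }

        return items;
    }

    // Acquires and releases the monitor of every object once and
    // returns the elapsed time in milliseconds.
    public static long LockAll(object[] items)
    {
        var sw = Stopwatch.StartNew();
        foreach (object item in items)
        {
            lock (item) { }
        }

        sw.Stop();
        return sw.ElapsedMilliseconds;
    }
}
```

Comparing LockAll(Create(n, false)) with LockAll(Create(n, true)) for a large n should show the same tendency as the table above, although the absolute numbers will depend on the machine and the runtime version.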

Thin locks, inflation and object header layout

Each object in the CLR can be used to create a critical region to achieve mutually exclusive execution. You might think that, to do so, the CLR creates a kernel object for each CLR object. But this approach would make no sense, because only a very small fraction of all objects are ever used as handles for synchronization. So it makes perfect sense that the CLR creates the expensive data structures required for synchronization lazily. Moreover, if the CLR can avoid those data structures altogether, it won’t create them at all.

As you may know, every managed object has an auxiliary field called the object header. The header can be used for different purposes and can hold different information depending on the object’s current state.

The CLR may need to store the object’s hash code, domain-specific information, lock-related data, and some other things at the same time. The 4 bytes of the object header are simply not enough for all of that. In that case, the CLR creates an entry in an auxiliary data structure called the sync block table and keeps just an index into it in the header. But the CLR tries to avoid that and puts as much data as possible in the header itself.

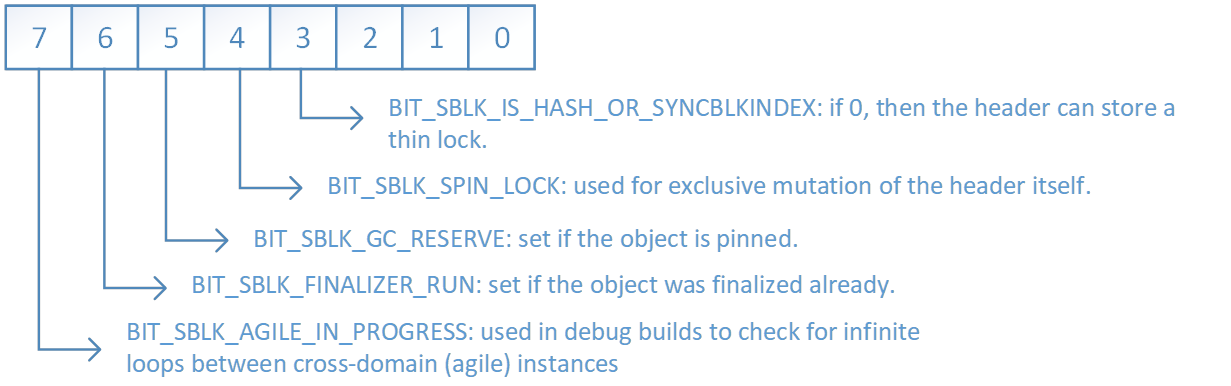

Here is the layout of the most significant byte of the object header:

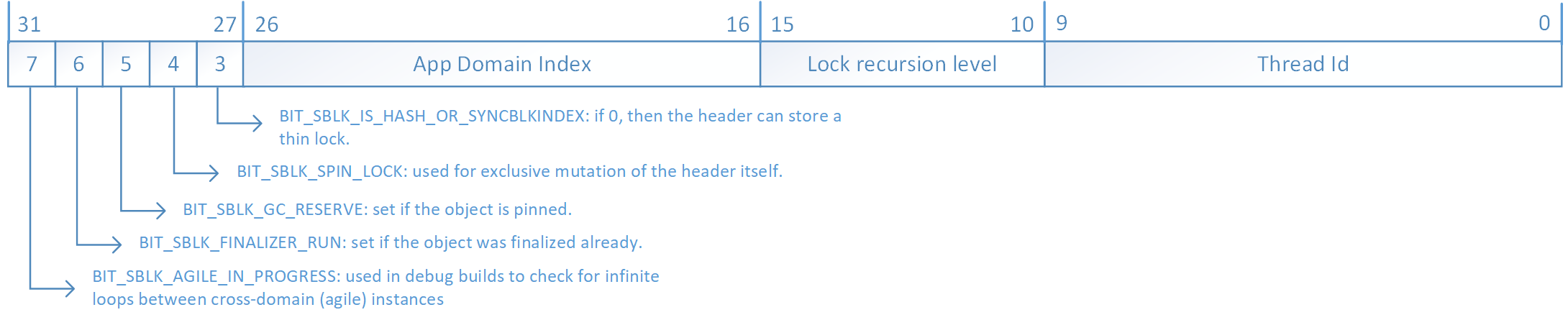

If the BIT_SBLK_IS_HASH_OR_SYNCBLKINDEX bit is 0, then the header itself keeps all lock-related information and the lock is called a “thin lock”. In this case, the overall layout of the object header is the following:

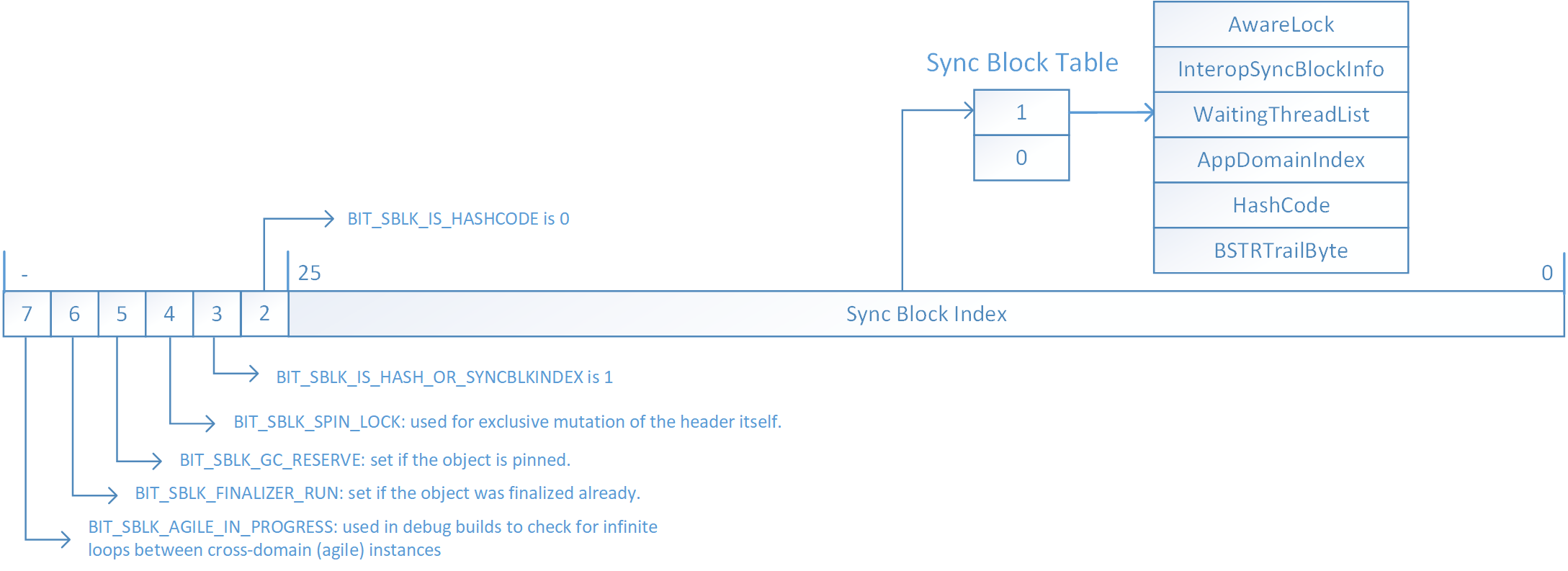

If the BIT_SBLK_IS_HASH_OR_SYNCBLKINDEX bit is 1, then either a sync block was created for the object or its hash code was computed. If BIT_SBLK_IS_HASHCODE (bit #26) is 1, then the rest of the dword (the lower 26 bits) is the object’s hash code; otherwise, the lower 26 bits represent a sync block index.
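The decision tree described above can be sketched in a few lines of C#. This is an illustration only: the class and constant names are made up, the header value is passed in as a plain uint rather than read from a real object, and the position of BIT_SBLK_IS_HASH_OR_SYNCBLKINDEX (bit 27) is an assumption here, since the text only gives the position of BIT_SBLK_IS_HASHCODE.

```csharp
using System;

public static class ObjectHeaderSketch
{
    // Assumption: BIT_SBLK_IS_HASH_OR_SYNCBLKINDEX is bit 27
    private const uint IsHashOrSyncBlockIndex = 0x08000000;

    // BIT_SBLK_IS_HASHCODE is bit 26, as stated in the text
    private const uint IsHashCode = 0x04000000;

    // The lower 26 bits hold either the hash code or the sync block index
    private const uint Low26BitsMask = 0x03FFFFFF;

    public static string Describe(uint header)
    {
        if ((header & IsHashOrSyncBlockIndex) == 0)
        {
            // The header itself carries the lock-related data (thin lock) or nothing at all
            return "thin lock data (or unused)";
        }

        uint payload = header & Low26BitsMask;
        return (header & IsHashCode) != 0
            ? "hash code " + payload
            : "sync block index " + payload;
    }
}
```

For example, ObjectHeaderSketch.Describe(0x08000005) returns "sync block index 5", and ObjectHeaderSketch.Describe(0x0C00007B) returns "hash code 123".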

We can investigate thin locks using WinDbg with the SOS extension. First, we’ll stop the execution inside a lock statement on a simple object instance for which GetHashCode was never called:

    object o = new object();
    lock (o)
    {
        Debugger.Break();
    }

Inside WinDbg, we’ll run .loadby sos clr to load the SOS debugging extension and then two commands: !DumpHeap -thinlock to see all thin locks, and !DumpObj obj to see the state of the instance used in the lock statement:

    0:000> !DumpHeap -thinlock
     Address       MT     Size
    02d223e0 725c2104       12 ThinLock owner 1 (00ea5498) Recursive 0
    Found 1 objects.
    0:000> !DumpObj /d 02d223e0
    Name:        System.Object
    MethodTable: 725c2104
    ThinLock owner 1 (00ea5498), Recursive 0

There are at least two cases in which a thin lock can be promoted to a “fat lock”: (1) contention on the sync root from another thread, which requires a kernel object to be created, or (2) the CLR’s inability to keep all the information in the object header, for instance, because of a call to GetHashCode.

The CLR monitor is implemented as a “hybrid lock” that tries to spin first before creating a real Win32 kernel object. Here is a short description of a monitor from the “Concurrent Programming on Windows” by Joe Duffy: “On a single-CPU machine, the monitor implementation will do a scaled back spin-wait: the current thread’s timeslice is yielded to the scheduler several times by calling SwitchToThread before waiting. On a multi-CPU machine, the monitor yields the thread every so often, but also busy-spins for a period of time before falling back to a yield, using an exponential back-off scheme to control the frequency at which it rereads the lock state. All of this is done to work well on Intel HyperThreaded machines. If the lock still is not available after the fixed spin wait period has been exhausted, the acquisition attempt falls back to a true wait using an underlying Win32 event. We discuss how this works in a bit.”

We can check that in both cases inflation does happen and the thin lock is promoted to a fat lock:

    object o = new object();

    // Just need to call GetHashCode and discard the result
    o.GetHashCode();

    lock (o)
    {
        Debugger.Break();
    }

    0:000> !dumpheap -thinlock
     Address       MT     Size
    Found 0 objects.
    0:000> !syncblk
    Index SyncBlock MonitorHeld Recursion Owning Thread Info  SyncBlock Owner
        1 011790a4            1         1 01155498 4ea8   0   02db23e0 System.Object

As you can see, just by calling GetHashCode on a synchronization object we get a different result. Now there are no thin locks, and the sync root has a sync block associated with it.

We get the same result if another thread contends for the lock for a long period of time:

    object o = new object();
    lock (o)
    {
        Task.Run(() =>
        {
            // This will promote a thin lock as well
            lock (o) { }
        });

        // 10 ms is not enough, the CLR spins longer than 10 ms.
        Thread.Sleep(100);
        Debugger.Break();
    }

In this case, the result would be the same: a thin lock would be promoted and a sync block entry would be created.

    0:000> !dumpheap -thinlock
     Address       MT     Size
    Found 0 objects.
    0:000> !syncblk
    Index SyncBlock MonitorHeld Recursion Owning Thread Info  SyncBlock Owner
        6 00d9b378            3         1 00d75498 1884   0   02b323ec System.Object

Conclusion

Now the benchmark output should be easier to understand. The CLR can acquire millions of locks with close to zero overhead if it can use thin locks. A thin lock is extremely efficient: to acquire it, the CLR changes a few bits in the object header to store the owner’s thread id there, and a waiting thread spins until those bits become zero again. On the other hand, if the thin lock is promoted to a “fat lock”, then the overhead becomes more noticeable, especially if the number of objects that acquired a fat lock is fairly large.
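To make the thin lock idea more concrete, here is a minimal sketch of a spin lock built on a single integer word. It is not the CLR’s implementation: the ThinLockSketch class is hypothetical, the m_owner field merely stands in for the lock bits of the object header, and the sketch is not recursive and never inflates to a kernel-backed lock.

```csharp
using System;
using System.Threading;

public sealed class ThinLockSketch
{
    // Stands in for the lock bits of the object header:
    // 0 means "free", any other value is the id of the owning thread.
    private int m_owner;

    public void Enter()
    {
        // Managed thread ids are positive, so 0 can safely mean "no owner"
        int me = Environment.CurrentManagedThreadId;
        var spinner = new SpinWait();

        // Try to swap 0 -> thread id; keep spinning while another thread owns the word
        while (Interlocked.CompareExchange(ref m_owner, me, 0) != 0)
        {
            spinner.SpinOnce();
        }
    }

    public void Exit()
    {
        // Writing 0 back releases the lock
        Volatile.Write(ref m_owner, 0);
    }
}
```

In the uncontended case, acquiring such a lock costs a single interlocked operation on memory that belongs to the object anyway, which is why millions of acquisitions can be almost free.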


1 comment

Thank you Sergey for another informative and useful article!