Microsoft Cosmos: Petabytes perfectly processed perfunctorily

![clip_image002[10] clip_image002[10]](https://msdntnarchive.blob.core.windows.net/media/MSDNBlogsFS/prod.evol.blogs.msdn.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/01/32/02/metablogapi/6242.clip_image00210_1E7964E0.jpg) I tell non-technical friends and family that I recently switched roles at Microsoft to the Bing team. Many have heard of Microsoft's new search engine https://bing.com, although most don't know all the cool features Bing offers.

But for my technical friends (allow me to include you in that group), the details are a bit more interesting. Sure, I work in the Bing org, but specifically I work for a group building and improving the Cosmos system, which in itself is not related to search. So what is it? Let me start with a usage scenario as an example.

![clip_image003[8] clip_image003[8]](https://msdntnarchive.blob.core.windows.net/media/MSDNBlogsFS/prod.evol.blogs.msdn.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/01/32/02/metablogapi/6242.clip_image0038_24540879.jpg) Say we collect data for every click made on every Bing page: not only search results, but visual search, music search including audio, street view, and Photosynth (the last two links are Bing HQ, BTW). Assume 140 million searches per day [1]. Then say that for each search Bing stores the query text, click data, some URLs of items clicked on, URLs of where the user came from, and some contextual content, for about 20 KB per search. (This webpage you are reading is about 70 KB, so 20 KB is not a lot.) So per day Bing is socking away about 2.6 TB (that's roughly 2,670 GB, counting 1,024 GB to the TB) of data! Now what do you do with that data? You mine it. You determine what people like and what they don't, what resonates, and what is helping people make decisions and satisfy their needs (realized or not).
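For anyone who wants to check the back-of-the-envelope math, here is a small Python sketch. The inputs are the assumptions stated in this post (4.1 billion searches per month from footnote [1], about 20 KB stored per search); the function and variable names are made up for illustration.

```python
# Back-of-the-envelope daily log volume, using the figures assumed in this post.
MONTHLY_SEARCHES = 4_100_000_000   # comScore figure cited in footnote [1]
SEARCHES_PER_DAY = 140_000_000     # monthly figure / 30, rounded
KB_PER_SEARCH = 20                 # assumed payload stored per search


def daily_volume(searches=SEARCHES_PER_DAY, kb_per_search=KB_PER_SEARCH):
    """Return the daily data volume in binary GB/TB and in decimal TB."""
    total_kb = searches * kb_per_search
    return {
        "binary_gb": total_kb / 1024**2,   # 1 GB = 1024 * 1024 KB
        "binary_tb": total_kb / 1024**3,   # 1 TB = 1024 GB
        "decimal_tb": total_kb / 1000**3,  # 1 TB = 10^9 KB (1 KB = 1000 bytes)
    }


if __name__ == "__main__":
    print(f"searches/day before rounding: {MONTHLY_SEARCHES / 30:,.0f}")
    for unit, value in daily_volume().items():
        print(f"{unit}: {value:,.2f}")
```

Depending on whether you count in powers of 1,024 or 1,000, the daily total comes out to roughly 2.6 TB or 2.8 TB. Either way, it is terabytes per day from this one scenario alone.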

Cosmos enables this. Cosmos wears two hats:

- It's a distributed, scalable storage system designed to reliably and efficiently store extremely large sequential files.

- It's a distributed, massively parallelized application execution engine primarily used for processing operations on the aforementioned data.

So some of the data mining folks at Bing write the code to collect the data from the live site and send it in real time to Cosmos. Cosmos stores away these terabytes of data safely, along with the data from hundreds of other jobs per day (do the math!). Then the data mining folks want to analyze their data. Cosmos enables them to use a SQL-like scripting language called SCOPE to efficiently slice and dice their data.

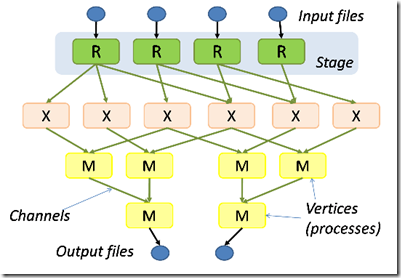

The key word here is efficient. How can Cosmos return the results of complex query, order, select, and join operations on what is essentially a huge flat file? The answer is parallelization. You may have heard of Google's MapReduce, or of Microsoft's Dryad. In the simplest terms, we can take hundreds or thousands of commodity-level machines and get them to work in parallel on the problem. In the case of Cosmos, the path from SCOPE query to final results runs through several stages of hundreds or thousands of machines. At each stage the task's processing is distributed across all the machines in that stage. Then the intermediate outputs from each machine need to be routed to the right machines in the next stage. So in summary, the data is distributed and the application execution to process the data is also distributed. It looks something like this:
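To make the stage-by-stage flow concrete, here is a toy single-process sketch in Python. It is not Cosmos or SCOPE code: the function names are made up, a thread pool stands in for the machines in each stage, and the job (counting how often each query appears) is just an assumed example. Stage 1 works on separate slices of the log, a routing step sends each intermediate result to the stage-2 worker that owns that key, and stage 2 merges what it receives.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from zlib import crc32


def stage1_count(log_partition):
    """Stage 1 'machine': count the queries in its own slice of the log."""
    return Counter(log_partition)


def route(partials, num_reducers):
    """Send each (query, count) pair to the stage-2 'machine' that owns the query."""
    inboxes = [[] for _ in range(num_reducers)]
    for partial in partials:
        for query, n in partial.items():
            # crc32 gives a stable hash, so a given query always goes to the same owner.
            owner = crc32(query.encode("utf-8")) % num_reducers
            inboxes[owner].append((query, n))
    return inboxes


def stage2_merge(inbox):
    """Stage 2 'machine': add up the partial counts it was sent."""
    total = Counter()
    for query, n in inbox:
        total[query] += n
    return total


def run_job(log_partitions, num_reducers=2):
    """Run both stages and return (query, count) pairs, most frequent first."""
    with ThreadPoolExecutor() as pool:  # threads stand in for machines
        partials = list(pool.map(stage1_count, log_partitions))
        inboxes = route(partials, num_reducers)
        merged = list(pool.map(stage2_merge, inboxes))
    combined = Counter()
    for part in merged:
        combined.update(part)  # each query lives in exactly one part
    return sorted(combined.items(), key=lambda kv: (-kv[1], kv[0]))


if __name__ == "__main__":
    partitions = [["bing", "cosmos", "bing"], ["scope", "bing"], ["cosmos"]]
    print(run_job(partitions))
```

The routing step is the part that matters: because every partial count for a given query lands on the same stage-2 worker, no worker ever needs to see the whole data set.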

![clip_image005[8] clip_image005[8]](https://msdntnarchive.blob.core.windows.net/media/MSDNBlogsFS/prod.evol.blogs.msdn.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/01/32/02/metablogapi/4760.clip_image0058_4754541C.jpg) It stands to reason that if we are relying on sheer numbers of machines to store and process this amount of data, we must have a lot of machines. Yup. The picture to the left is from our Chicago data center. This is the inside of just one of many shipping containers, each of which holds more than 2,000 nodes. The Chicago facility is one of the largest data centers in the world to use containers.

So that's Cosmos. As you probably know, my racket is software quality. For Cosmos I lead the Test Team working on the execution engine that enables the massively parallel processing. By the way ;-) I have an opening on my team for a Software Engineer in Test. So if this sounds interesting to you, and you meet the job qualifications here, then give me a shout.

Other Sources of info on Cosmos

- ZDNET: The Bing back-end: More on Cosmos, Tiger and Scope

- What is Microsoft's Cosmos service?

- SCOPE and COSMOS

- Partitioning and Parallel Plans in SCOPE and COSMOS

- The Importance of Teams in Software Engineering

[1] Bing searches per day is based on the 4.1 billion figure from comScore, divided by 30 and rounded.