SQL Server 2012 AlwaysOn – Part 2 - Quorum Detection

[Edit] You can find the summary of the complete series here.

After giving an overview on AlwaysOn in the first part of the series of articles, we now need to go a bit more in the details around Availability Groups and why we don’t need a witness instance anymore. Or how do we make sure that there is a quorum for the case of automatic failover. In order to continue this article, make sure that you read part #1.

How do we achieve quorum?

Having just one pair of instances in Database Mirroring, we required a third instance to realize automatic failover and make sure that we could achieve a quorum to eliminate split brain scenarios. As one can imagine, we needed to build a state machine which took the different states of the principal, the mirror and the witness into account and code out every single possible combination. Expanding such a state machine to multiple mirrors, some of them synchronously supplied and some others asynchronously replicated, could have led to quite a substantial number of different combinations of states. Going into SQL Server 2012 development with the goal to get a richer HA/DR experience we looked into different directions on how one can solve this complexity in coding as well as in administrating more complex HA and DR configurations. Realizing that the Windows Server Failover Cluster (WSFC) framework did resolve many of the problems we would encounter going forward with our anticipated solution, the decision was made to build our new AlwaysOn functionality for non-shared disks on the WSFC framework. In rough the distribution between the SQL Server part and the WSFC part looks like:

- Transaction Log Data replication and network handling in transfer is the part SQL Server does

- Creating the necessary cluster services and resources is done by SQL Server when creating an Availability Group or an Availability Group Listener

- Delivering logic which detects whether a replica is responding or not responding is part of SQL Server interfacing with the WSFC framework

- Administrating and defining Availability Modes or Failover modes, configure readable secondary replicas is all part of SQL Server’s responsibilities

- Providing a quorum is part of the logic and configuration WSFC needs to provide

- Reacting on the fact that a primary replica in the AlwaysOn configuration doesn’t react anymore, is part of the WSFC

- Reacting on the fact that a quorum is lost is part of WSFC responsibility

As a consequence each and every replica in an AlwaysOn configuration needs to run on a server which is in a WSFC configuration. One Availability Group can run on one Windows Cluster only and can’t be spread over server nodes of multiple clusters. However one Windows Cluster configuration can be leveraged by multiple AlwaysOn Availability Groups or even other components like e.g. SAP ASCS/SCS HA configuration.

IMPORTANT: Please note that using WSFC for AlwaysOn technology for SQL Server 2012 does not mean that SQL Server 2012 needs to be installed using shared disks for the databases. A lot of the functionality provided by AlwaysOn is intended to avoid the storage as a single point of failure. Hence installation of SQL Server 2012 can be done as normal single instance installation. The configuration on SQL Server side to leverage the cluster framework will happen manually after the installation at the point when Availability Groups get created.

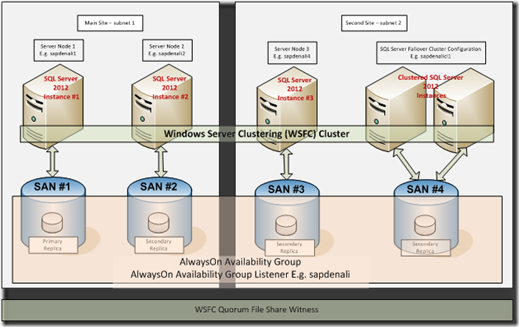

All in all the schema could look like:

Different functionalities are displayed in this overview:

- AlwaysOn functionality can span multiple subnets for non-shared as well as shared storage deployments

- One can mix shared and non-shared storage instances within one configurations. However with some restrictions in automatic failover – in the case above one could not failover automatically to the secondary replica running on shared storage as AlwaysOn Failover Cluster Instance (FCI).

- In the case above the Witness/Quorum is provided by the WSFC configuration – in this case a File Share

- An AG Listener is used to have the application(s) connect against that name which represents an Availability Group towards the applications

VERY IMPORTANT: Losing the quorum in the WSFC configuration the Availability Group is running on has the consequence that the AG will shutdown and the databases within the AG become unavailable. This is similar to losing the Mirror plus the Witness in SQL Server Database Mirroring.

Implications of using WSFC as underlying infrastructure

Working with customers on AlwaysOn for nearly one year now, feedback hit on the common themes:

Especially from customers who used Database Mirroring, it was remarked that deployment time now is a bit extended since it is not a DBA or SAP Basis team only affair anymore. The fact that a cluster configuration (even w/o shared storage) is used does involve other dedicated roles in larger IT organizations like:

- The team which is responsible for running the server and supporting the infrastructure

- Network team in the Datacenter since we will need dedicated IP addresses for the Availability Group Listener name (see Part #1)

- Eventually procurement for dedicated NICs used for cluster heartbeat

- From customers who used WSFC in a lot of scenarios, it was remarked that some of the configurations they wanted to build with AlwaysOn do need more sophisticated WSFC configurations with it. Some customers encountered the need to negotiate support of such new WSFC configurations with their datacenter teams or their infrastructure hosting services.

- Especially in large and very departmentalized IT department time to solution was expanded and more interaction needed to happen to deploy more complex AlwaysOn configurations

Why do we list this? Because we want to give you some thoughts about things to consider when planning roll-out of AlwaysOn functionality

It is all about Windows Server Failover Cluster configuration and quorum

When we start to think about deploying AlwaysOn functionality, the first thoughts and designs need to be about the configuration one needs to build with the WSFC on the infrastructure side. A good functioning AlwaysOn deployment starts right there.

Therefore the first step is to read up a bit about WSFC. A good source could be this paper:

Also other TechNet articles are more than helpful. We assume that recommendations like:

- Running the WSFC Cluster Validation Wizard is used and issues listed are getting addressed

- All node run on the same Windows Patch level and do have the same patches installed

- Best practices like having a dedicated NIC for WSFC heartbeat are in place

- In case of configurations over more than one datacenter reliable networking is in place

As you will realize there are many different configurations possible with AlwaysOn. One can mix and match AlwaysOn with different WSFC shared disk configurations, etc. However as usual, not everything which is technically possible always makes sense.

Therefore we want to limit the discussion in our series to 3-4 deployment scenarios. We will go through all of them before touching how on create an Availability Group. Might sound boring, but it does make sense to cover these basics first.

Also some words upfront before looking into the configurations. Experience in dealing with SAP installations over the last 16 years taught us one important lesson. The simpler and less complex HA and DR configurations are the more efficient those configurations usually are. The most experienced person in the staff usually is not around and not available when issues arise. Hence let’s automate as much as possible with clear architecture and design which can be understood by everybody. Restrict the number of components and technologies involved to a minimum and streamline on a very small number of HA/DR configurations. E.g. excluding SAP Solution Manager, the rest of the productive databases in Microsoft’s SAP landscape is either in a WSFC configuration with shared disk (less important systems) or uses Database Mirroring. But all systems use Log-Shipping to replicate to the DR site. All the DBMS Servers do have the same disk layout. Means two main configurations which only differ in one technology is sufficient to meet the business needs. At least that was the state before moving Microsoft’s SAP ERP database to SQL Server 2012 and AlwaysOn in November 2011. A step which will be repeated for all other systems using Database Mirroring within the next 12 months.

Scenario 1 – Supplement a SAP WSFC configuration with AlwaysOn

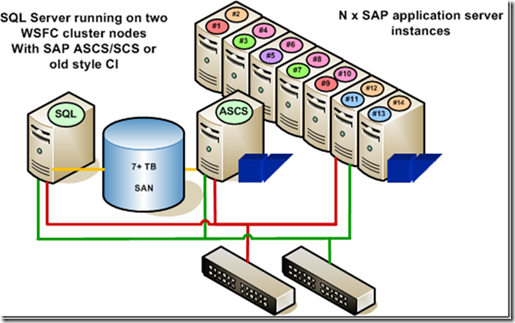

The start scenario in this case looks like:

We basically look at a configuration as it got introduced by SAP in 1998. SQL Server and the SAP Single Point of Failure in form of the old style CI or the new ASCS/SCS using Enqueue Replication Services or not is running together with SQL Server on one WSFC configuration. Both components are able to failover crosswise. Hence configuration of SQL Server as well as ASCS/SCS/CI is in a way that one could run both software components on one node as well. Since Windows Server 2008, the most common cluster configuration for such a scenario is:

- Using SAN storage which can be shared between both servers

- Quorum assembles out of the 2 nodes and a shared disk on the SAN. Means we are looking at a node and disk majority quorum

- All the components making the quorum are in one datacenter

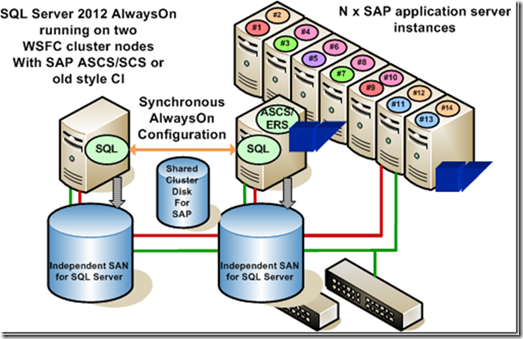

The target configuration we want to get to should look like:

On a first view, it looks a bit more confusing. But it is very easy to understand. In order to avoid the SAN as a single point of failure, we now want to get to two images of the SAP database. Each one located on a different SAN framework. Having both database images on one SAN frame would not make sense at all and is not recommended by us. Not because it wouldn’t work, but because we indeed encountered larger outages with customers when the one single SAN frame went down. Customer who had both images on one SAN where the SAN frame did stop working in orderly manner bringing down both images. Synchronization of these two images should be done with AlwaysOn by running two replicas. One replica will be in the primary role another one in the secondary role. In order to re-use existing configurations, we are not building a new cluster configuration to implement AlwaysOn on. But we use the existing configuration we had used before. Means the SAP components of ASCS/SCS and eventually Enqueue Replication services stay untouched. On the SQL Server side, the following steps would be necessary:

- Old SQL Server release will need to be de-installed

- The new LUNs of the new SAN frames need to be attached.

- SQL Server 2012 needs to be installed in a normal single instance way on both WSFC nodes

- Data needs to be copied to one of the new SANs which should be the primary to start with

Next steps then would be to work on creating an AlwaysOn Availability Group. As well as data synchronization. We will drill into this one in a later part of our blog series

You realized in this case, nothing needed to be changed in the Cluster configuration to accommodate this scenario. The quorum model will remain exactly the same way as it was before.

If we compare the configuration we started with and the configuration we ended with, there are some considerations:

- In the configuration we started with, SQL Server was running on one node only. Now it is running on two nodes and ideally has the same memory and disk configurations on both nodes

- There is increased network traffic and CPU consumption on the node which is running the secondary replica. Compared to the configuration where no SQL Server was running

- Experienced failover of SQL Server will be faster since the SQL Server instance is up and running already. Also the cache is filled with data pages already (at least the pages which experienced changes)

This was it for this part. Next part we will look at the underlying WSFC configuration of a configuration where we use two server nodes in the main site and one in a DR site far away.