SQL Server 2016 AlwaysOn for SAP

We know we are a bit late. Honestly, we were very busy, not least with exploring the great SQL Server 2016 features. That is why we have not written anything about SQL Server 2016 so far, even though we are in the last phase of the development and launch of SQL Server 2016, to which we contributed as a team as well. Over the course of the next few months we will pick SQL Server 2016 topics that relate to SAP or to the task of operating and administering SQL Server underneath SAP systems.

The first topic we want to touch on is the changes to AlwaysOn in SQL Server 2016.

By now, SQL Server AlwaysOn is used by a lot of SAP customers for local high availability, but also to provide disaster recovery capabilities. In the past 4 years we got a lot of feedback from you as customers. Fortunately, the SQL Server 2016 development cycle gave us enough opportunities to incorporate many details of the feedback we received.

Feedback #1: SQL Server AlwaysOn is not checking the health of a single database within the Availability Group

When we developed AlwaysOn, we introduced a new unit of failover, the Availability Group (AG). In contrast to Database Mirroring, where all the mechanics and the unit of failover were strictly centered on a single database, an AlwaysOn Availability Group was introduced as a unit that can hold multiple databases, which fail over together to a secondary replica in case of a SQL Server instance issue. What AlwaysOn Availability Groups did not cover at the point of introduction were failure scenarios that affect single databases within an AG. In other words, the health of each individual database within an Availability Group did not get checked. As a result, a database in an AG could close, e.g. because the LUN of one or multiple data files was no longer reachable, without actually triggering a failover of the AG. This was the reason for some customers to stay with Database Mirroring, where such an unexpected close of a database leads to a failover.

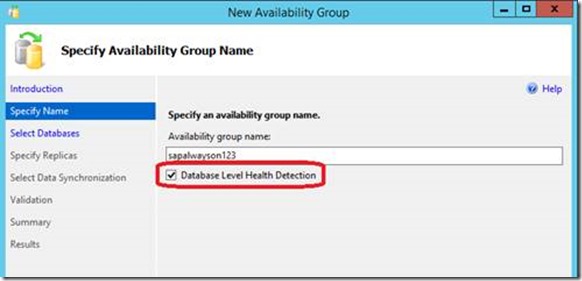

With SQL Server 2016, we introduced database-level health checks as well. These can be selected when creating the AG, as shown below:

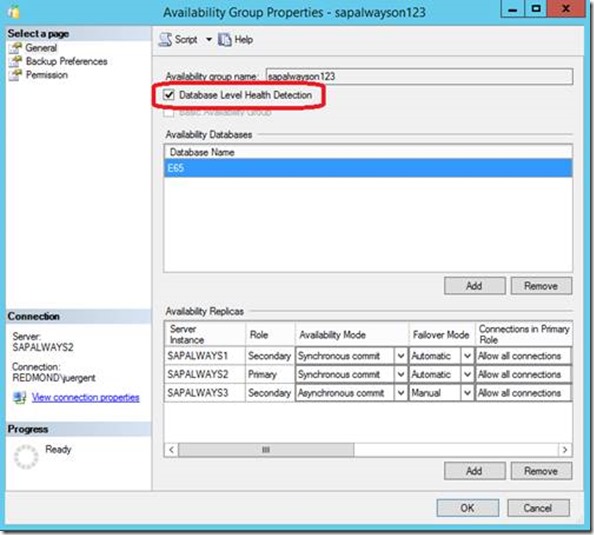

Once an AlwaysOn AG is created, the setting can still be changed and activated on the Properties page of the Availability Group.

Enabling database-level health checks makes it possible to trigger a failover of the whole AG when one of the databases within the AG encounters a problem that forces SQL Server to close the database. This means the reaction to an unexpected close of a database is similar to the behavior of Database Mirroring.
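For an existing AG, the same setting can also be changed with T-SQL instead of the Properties page. The following is a minimal sketch, assuming it is run on the primary replica; `[<Name of AG>]` is a placeholder as in the other commands in this article:

```sql
-- Enable database-level health detection on an existing AG
-- (run on the primary replica; <Name of AG> is a placeholder).
ALTER AVAILABILITY GROUP [<Name of AG>] SET (DB_FAILOVER = ON);

-- Check the setting: db_failover = 1 means database-level
-- health detection is enabled for that AG.
SELECT name, db_failover
FROM sys.availability_groups;
```

Setting `DB_FAILOVER = OFF` in the same way returns the AG to the behavior of SQL Server 2012 and 2014.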

Feedback #2: Redo can’t keep up with the changes transmitted to the secondary

Several bottlenecks can occur when using AlwaysOn. Most of those seen in typical SAP scenarios are described in these articles:

- https://blogs.msdn.microsoft.com/saponsqlserver/2013/04/21/sql-server-2012-alwayson-part-11-performance-aspects-and-performance-monitoring-i/

- https://blogs.msdn.microsoft.com/saponsqlserver/2013/04/24/sql-server-2012-alwayson-part-12-performance-aspects-and-performance-monitoring-ii/

The one issue some SAP customers did report, during workloads that modify high data volumes, was that the redo thread on secondary replicas could not keep up with the incoming stream of changes. Here is some short background on what happens in such a situation:

- AlwaysOn sends the transaction log records to the secondary replica.

- In case of the synchronous availability mode, the acknowledgement to the primary replica is sent at the point the data is persisted in the transaction log of the secondary replica. Not earlier and not later.

- Though the data can then be regarded as persisted on the secondary replica, it is not yet applied to the data or index pages of the database itself.

- Applying the changes to the data and index pages is the task of a redo thread.

- In contrast to Database Mirroring, AlwaysOn in SQL Server 2012 and 2014 uses only one (1) redo thread per database within an Availability Group to apply the incoming changes to the associated database. This was a design decision made to enable read-only capabilities when AlwaysOn was designed.

- As a result, under heavy data manipulation workload on large servers, often with more than 100 CPU threads, situations could occur where all changes got persisted in the transaction log of a database within an AG, but the single redo thread simply could not keep up with applying the changes persisted in the log.

- With that, a redo log queue builds up.

- As long as there was no read-only activity and no failover, this did not cause any impact. However, in case of a failover, the database on the secondary replica can only open after the redo log queue has been worked down and all the changes have been applied to the data. And in the case of a read-only secondary, you would simply read a slightly outdated state of the data.

- Hence it is not desirable to get into a state where a redo log queue builds up on the secondary replica.
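Whether a redo queue is building up can be checked with the existing AlwaysOn DMVs. The query below is a minimal sketch, assuming it is run on the primary replica; the catch-up estimate is a simple division we add for illustration, not a value SQL Server reports itself:

```sql
-- Redo and log send queues per secondary database.
-- Queue sizes are reported in KB, redo_rate in KB/sec.
SELECT ar.replica_server_name,
       DB_NAME(drs.database_id)        AS database_name,
       drs.synchronization_state_desc,
       drs.log_send_queue_size,        -- log not yet sent to the secondary (KB)
       drs.redo_queue_size,            -- log hardened but not yet redone (KB)
       drs.redo_rate,                  -- current redo throughput (KB/sec)
       CASE WHEN drs.redo_rate > 0
            THEN drs.redo_queue_size * 1.0 / drs.redo_rate
       END                             AS est_redo_catchup_sec  -- rough estimate only
FROM sys.dm_hadr_database_replica_states AS drs
JOIN sys.availability_replicas AS ar
  ON ar.replica_id = drs.replica_id
WHERE drs.is_primary_replica = 0
ORDER BY drs.redo_queue_size DESC;
```

A `redo_queue_size` that keeps growing while `log_send_queue_size` stays small points to the redo bottleneck described above rather than to a network or log write bottleneck.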

SQL Server 2016 solves this problem with parallel redo on the secondary replicas. The number of redo threads per database is determined as a function of the number of CPU threads available on the secondary replica. In SAP scenarios we were occasionally confronted with the situation described above. Given the relatively large servers/VMs in use with SAP customers, parallel redo engaging several threads should resolve those rare occurrences as well.

Feedback #3: Seeding the databases is a tedious business

In order to make seeding of a secondary replica easier, SQL Server 2016 introduces the same mechanism that is used by SQL Azure Databases to create secondary replicas. This means NOT creating a backup and restoring that backup. Instead, a network pipe is opened to the new secondary replica, the secondary database is created on that replica, and then the data is transferred. Here is how it basically works. The setup of automatic seeding is not supported with SQL Server Management Tools. Instead, automatic seeding needs to be applied to a replica when creating the AG, or it can be applied to a replica of the AG after the AG has been created.

This means the command to create an AG can look like:

CREATE AVAILABILITY GROUP [<Name of AG>] WITH (AUTOMATED_BACKUP_PREFERENCE = SECONDARY,

DB_FAILOVER = ON, DTC_SUPPORT = NONE)

FOR DATABASE [<database name>]

REPLICA ON N'<name of replica 1>' WITH (ENDPOINT_URL = N'TCP://<FQD name of replica 1>:5022',

FAILOVER_MODE = AUTOMATIC, AVAILABILITY_MODE = SYNCHRONOUS_COMMIT, BACKUP_PRIORITY = 50,

SECONDARY_ROLE(ALLOW_CONNECTIONS = NO), SEEDING_MODE = AUTOMATIC),

N'<name of replica 2>' WITH (ENDPOINT_URL = N'TCP://<FQD name of replica 2>:5022',

FAILOVER_MODE = AUTOMATIC, AVAILABILITY_MODE = SYNCHRONOUS_COMMIT, BACKUP_PRIORITY = 50,

SECONDARY_ROLE(ALLOW_CONNECTIONS = NO), SEEDING_MODE = AUTOMATIC);

The last option of each replica definition indicates that the seeding of the databases within the AG should be done automatically.

After executing the command above on the primary replica, go to the secondary replica and join it to the AG with a command like:

ALTER AVAILABILITY GROUP [<Name of AG>] JOIN;

As a necessary third step, you also need to grant the AG the permission to create a database on the SQL Server instance of the secondary replica. This is done with this command:

ALTER AVAILABILITY GROUP [<Name of AG>] GRANT CREATE ANY DATABASE;

When adding a new replica to an existing AG, it can happen that the creation of the database and its synchronization do not start after the commands above have been executed on the secondary replica. In such a case, go back to the primary replica and trigger the seeding with:

ALTER AVAILABILITY GROUP [<Name of AG>] MODIFY REPLICA ON '<name of replica to be seeded>'

WITH (SEEDING_MODE = AUTOMATIC);

On the primary replica you can query the DMV sys.dm_hadr_automatic_seeding to check whether an automatic seeding process is going on. This view lists all the seeding processes. The expectation is to get one record per seeding process.

In the DMV sys.dm_hadr_physical_seeding_stats you can check the status of each of the individual seeding processes. With the query:

SELECT * FROM sys.dm_hadr_physical_seeding_stats;

you can check on the progress of the seeding process to the replica.
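Instead of `SELECT *`, a narrower query makes the progress easier to read. The following is a sketch under the assumption that the column names match the SQL Server 2016 documentation of both DMVs; the percentage and MB/sec values are derived columns added here for illustration:

```sql
-- Progress of seeding operations that are currently running.
SELECT local_database_name,
       remote_machine_name,
       role_desc,
       internal_state_desc,
       transfer_rate_bytes_per_second / 1048576.0 AS transfer_rate_mb_per_sec,
       CAST(transferred_size_bytes * 100.0
            / NULLIF(database_size_bytes, 0) AS decimal(5, 2)) AS percent_complete,
       start_time_utc,
       estimate_time_complete_utc,
       is_compression_enabled
FROM sys.dm_hadr_physical_seeding_stats;

-- History and outcome of automatic seeding attempts per database.
SELECT ag.name AS ag_name,
       adc.database_name,
       s.start_time,
       s.completion_time,
       s.current_state,
       s.performed_seeding,
       s.failure_state_desc,
       s.number_of_attempts
FROM sys.dm_hadr_automatic_seeding AS s
JOIN sys.availability_groups AS ag
  ON ag.group_id = s.ag_id
JOIN sys.availability_databases_cluster AS adc
  ON adc.group_database_id = s.ag_db_id
ORDER BY s.start_time DESC;
```

The first query only returns rows while a seeding operation is active, so an empty result after a successful seeding is expected.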

Automatic seeding uses a fixed number of threads. On the primary side, the number of threads used for reading the database is the same as a backup would use (one reader thread per LUN). Then there is a fixed number of threads for transferring what was read to the secondary. On the secondary, the number of threads writing the data to the data files on disk again follows the backup rule of one thread per LUN. Because the number of threads that perform the communication and data transfer is fixed, the resulting CPU utilization depends on the overall CPU resources available on the server or VM. This means that on a VM with only 4 vCPUs, as much as half of the CPU resources can be eaten up in the process, whereas on a VM with 16 vCPUs the impact we measured is already less than 15% utilization of CPU resources. We expect that with even higher CPU counts, the impact shrinks further and becomes hardly noteworthy.

Using trace flag 9567 on the AlwaysOn primary replica enables compression of the data stream that gets sent from the primary to the secondary. The usage of this compression can reduce the transfer times by factors. However, it also increases the CPU usage. In first measurements we saw CPU utilization on the primary increase by a rough factor of 3 when using compression.
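As a minimal sketch, the trace flag can be switched on globally for the duration of a seeding operation and switched off again afterwards. We assume here that it is set with `DBCC TRACEON` on the primary replica; setting it as a startup parameter (`-T9567`) is the alternative if it should survive a restart:

```sql
-- Enable compression of the automatic seeding data stream
-- (global scope, run on the primary replica).
DBCC TRACEON (9567, -1);

-- Confirm that the trace flag is active.
DBCC TRACESTATUS (9567);

-- After seeding has finished, switch it off again to avoid
-- the additional CPU load for later seeding operations.
DBCC TRACEOFF (9567, -1);
```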

We could easily provoke situations where the existing network bandwidth was fully consumed by automatic seeding. If the disks are fast enough on the primary and the secondary, it is not a problem at all to saturate a 1Gbit Ethernet connection with this process. In tests where we ran two VMs on the same host, we could get transfer rates of up to 2Gbit. If you run workload that relies on high network bandwidth, keep in mind that automatic seeding can have some impact on the availability of that bandwidth.

Feedback #4: Since we need to run SQL Server in a Domain Account, we need to change passwords on those and then restart the SQL Server services
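To get a feeling for what a saturated link means for seeding time, a back-of-the-envelope calculation helps. The sketch below uses a hypothetical database size of 1000 GB and assumes the full nominal line rate is available without compression or protocol overhead, so real seeding will take longer:

```sql
-- Rough lower bound for seeding time at nominal line rate.
-- Both values are hypothetical inputs; adjust to your system.
DECLARE @db_size_gb    decimal(18, 2) = 1000;  -- data to transfer, in GB
DECLARE @link_gbit_sec decimal(18, 2) = 1;     -- network bandwidth, in Gbit/sec

SELECT (@db_size_gb * 8) / @link_gbit_sec          AS seconds_at_line_rate,
       (@db_size_gb * 8) / @link_gbit_sec / 3600.0 AS hours_at_line_rate;
```

For the duration of that transfer the link is largely unavailable to other traffic, which is why the timing of seeding a large SAP database deserves some planning.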

A solution to this problem can be Group Managed Service Accounts (https://technet.microsoft.com/en-us/library/hh831782.aspx). These are now supported with SQL Server 2016 and can be used with AlwaysOn configurations as well.

Feedback #5: It is very hard to run a Windows Cluster Configuration that is spread over the whole continent

A lot of our customers recognized AlwaysOn as the ideal tool to cover local High Availability AND Disaster Recovery scenarios. As a result, those customers were running a primary and a secondary replica in the main site and another secondary replica in the DR site. This is basically the scenario we already described in this article: https://blogs.msdn.microsoft.com/saponsqlserver/2012/02/20/sql-server-2012-alwayson-part-3-sap-configuration-with-two-secondary-replicas/

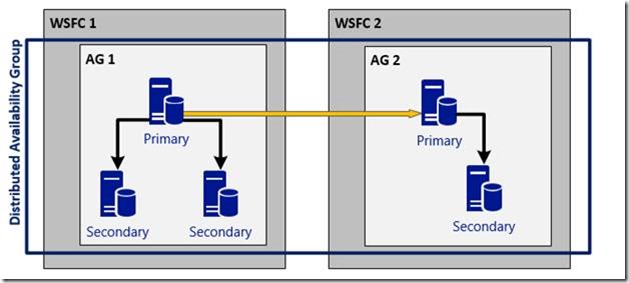

As you read that article, you will realize that we recommended a few steps to make sure that the DR site part of the Windows Cluster configuration has no vote in the quorum, plus other settings to make sure that the quorum in the main site is not impacted by what is going on in the DR site. However, the larger the distance between the sites, the more challenging it can become to have networking reliable enough for a cluster covering those two distant sites to work properly. For SQL Azure Database, which uses AlwaysOn functionality to maintain replicas, we faced another issue that led the way to a different solution, one that avoids a single Windows Cluster configuration covering both the main site and the DR site. The solution is called Distributed Availability Groups. With this solution you can run separate Windows Server Failover Cluster configurations with two separate AlwaysOn Availability Groups and then link those AGs together with a so-called Distributed Availability Group. Something that looks like this:

You can find documentation and principles here: https://msdn.microsoft.com/en-US/library/mt651673.aspx

As you certainly realized, we just copied the picture above from the documentation. How this applies to SAP configurations will be covered in a separate article.
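To give an impression of what linking two AGs looks like, here is a minimal sketch that follows the syntax in the documentation linked above. All names in angle brackets are placeholders, and the asynchronous, manual-failover settings are only one plausible choice for a DR setup, not a recommendation for SAP:

```sql
-- Step 1: run on the primary replica of the AG in the main site.
CREATE AVAILABILITY GROUP [<Name of distributed AG>]
   WITH (DISTRIBUTED)
   AVAILABILITY GROUP ON
      N'<Name of AG in main site>' WITH (
         LISTENER_URL = N'TCP://<FQD name of listener in main site>:5022',
         AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,
         FAILOVER_MODE = MANUAL,
         SEEDING_MODE = AUTOMATIC),
      N'<Name of AG in DR site>' WITH (
         LISTENER_URL = N'TCP://<FQD name of listener in DR site>:5022',
         AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,
         FAILOVER_MODE = MANUAL,
         SEEDING_MODE = AUTOMATIC);

-- Step 2: run on the primary replica of the AG in the DR site.
ALTER AVAILABILITY GROUP [<Name of distributed AG>]
   JOIN
   AVAILABILITY GROUP ON
      N'<Name of AG in main site>' WITH (
         LISTENER_URL = N'TCP://<FQD name of listener in main site>:5022',
         AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,
         FAILOVER_MODE = MANUAL,
         SEEDING_MODE = AUTOMATIC),
      N'<Name of AG in DR site>' WITH (
         LISTENER_URL = N'TCP://<FQD name of listener in DR site>:5022',
         AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,
         FAILOVER_MODE = MANUAL,
         SEEDING_MODE = AUTOMATIC);
```

Note that the listener URLs point to the port of the database mirroring endpoint (5022 in the examples of this article), not to the port the listener uses for client connections.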

Other improvements of AlwaysOn that are more generic, and that might also have been part of the feedback from SAP customers, are listed in subsequent SQL Server documentation and here: https://blogs.technet.microsoft.com/dataplatforminsider/2015/12/15/enhanced-always-on-availability-groups-in-sql-server-2016/

Have fun