World Record SAP Sales and Distribution Standard Application Benchmark for SAP cloud deployments released using Azure IaaS VMs

In this article we want to talk about four SAP 3-Tier benchmarks that SAP and Microsoft released today. As of October 5th 2015, the best of these benchmarks sets the World Record for the category of SAP Sales and Distribution Standard Application Benchmarks executed in the cloud deployment type. The benchmarks were conducted on Azure Virtual Machines, but the technical findings we discuss in this article are often applicable to private cloud deployments on Hyper-V as well. The idea of the four benchmarks was to use an SAP 3-Tier configuration with the Azure VM types GS1, GS2, GS3 and GS4 as dedicated DBMS VMs in order to see how the GS VM-Series scales with the very demanding SAP SD benchmark workload. The results and detailed data of the benchmarks can be found here:

- Dedicated DBMS VM of GS1: 7000 SAP SD benchmark users and 38850 SAPS

- Dedicated DBMS VM of GS2: 14060 SAP SD benchmark users and 78620 SAPS

- Dedicated DBMS VM of GS3: 24500 SAP SD benchmark users and 137520 SAPS

World Record for SAP SD Standard Application benchmarks executed in public cloud:

- Dedicated DBMS VM of GS4: 45100 SAP SD benchmark users and 247880 SAPS
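
To see how evenly the GS-Series scales as a dedicated DBMS VM, the four results can be put side by side. The following is a minimal Python sketch, not part of the official benchmark material. The function names are made up for illustration, and the vCPU counts are taken from the detailed benchmark data at the end of this article.

```python
# Results as listed above: DBMS VM type -> (SD benchmark users, SAPS).
RESULTS = {
    "GS1": (7000, 38850),
    "GS2": (14060, 78620),
    "GS3": (24500, 137520),
    "GS4": (45100, 247880),
}

# vCPU counts of the dedicated DBMS VMs (from the detailed benchmark data).
VCPUS = {"GS1": 2, "GS2": 4, "GS3": 8, "GS4": 16}


def saps_per_vcpu(vm: str) -> float:
    """SAPS delivered per vCPU of the dedicated DBMS VM."""
    _, saps = RESULTS[vm]
    return saps / VCPUS[vm]


def scaling_factor(smaller: str, larger: str) -> float:
    """Ratio of SAPS between two DBMS VM sizes (2.0 = perfect doubling)."""
    return RESULTS[larger][1] / RESULTS[smaller][1]


for vm, (users, saps) in RESULTS.items():
    print(f"{vm}: {users} users, {saps} SAPS, {saps_per_vcpu(vm):.0f} SAPS per vCPU")

for small, large in [("GS1", "GS2"), ("GS2", "GS3"), ("GS3", "GS4")]:
    print(f"{small} -> {large}: x{scaling_factor(small, large):.2f}")
```

Each step doubles the vCPUs of the DBMS VM, so a scaling factor of 2.0 would mean perfectly linear scaling of the benchmark throughput.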

What do these benchmarks prove?

Let’s start with the SAP Sales and Distribution Standard Application Benchmark. This is a benchmark that executes six typical SAP transactions that are necessary to receive a customer order, check the customer order, create a delivery, book a delivery and look at past orders of a specific customer. The exact order of the SAP transactions as executed in the benchmark is listed here: https://global.sap.com/campaigns/benchmark/appbm_sd.epx. For non-SAP experts: the database workload of the benchmark is closer to an OLTP benchmark than to an OLAP benchmark. All the SAP benchmarks certified and released can be found here: http://www.sap.com/benchmark.

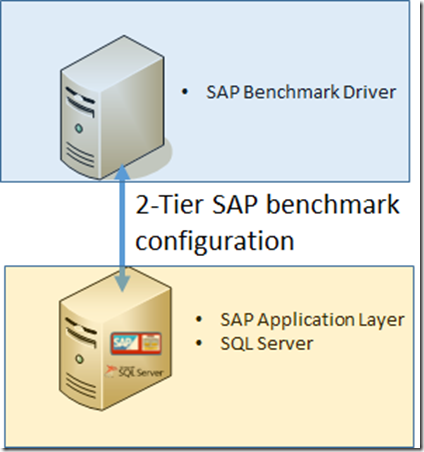

What do we call 2-Tier and 3-Tier in SAP?

We speak of an SAP 2-Tier configuration if the DBMS layer and the SAP application layer are running within the same VM or server on one operating system image. The second tier is the user interface layer or, in the case of a benchmark configuration, the benchmark driver, as shown here:

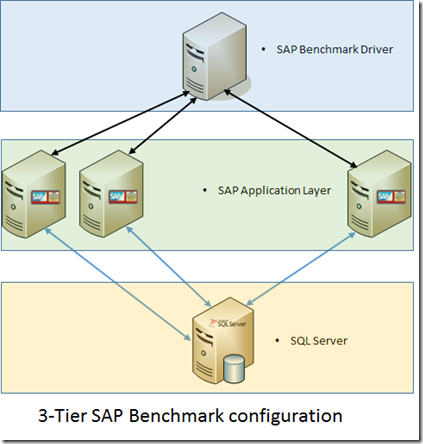

We speak of an SAP 3-Tier configuration if the DBMS and the SAP application layer are running in separate VMs/servers and the user interface layer or benchmark driver forms a third tier, as shown here:

The purpose of the benchmark is to get as much business throughput as possible out of a configuration as shown above. In the 2-Tier configuration we want to push the CPU resources as hard as we can, ideally getting as close to 100% CPU consumption as possible. On the other hand, the benchmark requires an average response time of <1sec measured on the benchmark driver. The same rules apply to the 3-Tier benchmark, except that there the component we want to drive to maximum CPU utilization is the DBMS server or VM, while we can scale out the SAP application layer to produce more workload on top of the DBMS instance. Because of this scale-out capability, we are not forced to maximize CPU utilization in the application layer VMs/servers.

In order to provide you as a customer with sizing information, SAP requires us to certify our Azure VM Series with SAP. This usually is done by conducting a SAP Standard Sales and Distribution Standard Application Benchmark with a 2-Tier SAP ERP configuration. The reason we use this particular SAP SD benchmark in a 2-Tier configuration is the fact that this is the only SAP Standard Application Benchmark that provides a particular benchmark measure which is called 'SAPS'. The 'SAPS' unit is used for SAP sizing as explained here: https://global.sap.com/campaigns/benchmark/measuring.epx.
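
To make the 'SAPS' unit more tangible: SAP defines 100 SAPS as 2,000 fully business processed order line items per hour, which corresponds to 6,000 dialog steps per hour in the SD benchmark (see the SAP page linked above). The following Python sketch simply applies that definition to a SAPS figure. The function names are hypothetical and only serve as illustration.

```python
# SAP's definition of the unit: 100 SAPS = 2,000 fully business processed
# order line items per hour = 6,000 dialog steps per hour.
ORDER_LINE_ITEMS_PER_HOUR_PER_100_SAPS = 2000
DIALOG_STEPS_PER_HOUR_PER_100_SAPS = 6000


def order_line_items_per_hour(saps: int) -> float:
    """Order line items per hour that a given SAPS value stands for."""
    return saps * ORDER_LINE_ITEMS_PER_HOUR_PER_100_SAPS / 100


def dialog_steps_per_second(saps: int) -> float:
    """Dialog steps (screen changes) per second for a given SAPS value."""
    return saps * DIALOG_STEPS_PER_HOUR_PER_100_SAPS / 100 / 3600


# SAPS figure of the GS4 based benchmark from this article.
gs4_saps = 247880
print(f"{order_line_items_per_hour(gs4_saps):,.0f} order line items per hour")
print(f"{dialog_steps_per_second(gs4_saps):,.0f} dialog steps per second")
```

For sizing, the calculation is usually read the other way round: the SAPS requirement of a planned workload is compared with the SAPS rating of a certified VM type.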

From our side, we usually don’t certify each and every Azure VM for SAP purposes. We pre-select VMs that we find capable of running SAP software. The principles we apply are meant to keep us from certifying VM types whose CPU to memory ratio is not suitable for the relatively memory hungry SAP NetWeaver applications and related databases, or where the DBMS in such a VM can’t scale up because of the Azure storage you can combine with that VM type or series.

In the end, the Azure VM types certified for SAP and supported by SAP are listed in SAP Note 1928533 – SAP Applications on Azure: Supported Products and Sizing.

If you take a closer look into the SAP note and into your SAP landscape, you will realize that the note falls short in listing 3-Tier configurations. If we compare an SAP 2-Tier configuration using a certain VM type with a 3-Tier configuration where the same VM type is used as a dedicated DBMS server, we find that in the 3-Tier configuration the stress applied by the workload differs in:

- Much higher demands in IOPS writing into the disk that contains the DBMS transaction log.

- Requirement of lower latency writing to the disk that contains the DBMS transaction log (ideally low single digit milliseconds).

- High demand on network throughput since application instances in multiple VMs send tens of thousands of network requests per second to the dedicated DBMS VM.

- Ability of the host running the VM as well as the VM to handle these tens of thousands of network requests per second.

- Ability of the Operating System to deal with the tens of thousands of network requests per second.

- High storage bandwidth requirement to write frequent checkpoints by the database to the data files of the DBMS.

All the volumes listed in the areas above are orders of magnitude higher when running an SAP Standard Sales and Distribution benchmark in a 3-Tier configuration than when running it in a 2-Tier configuration. This means that VM configurations that prove capable enough where the SAP application instances and the DBMS run in one VM do not necessarily scale to a 3-Tier configuration. Therefore, 3-Tier configuration benchmarks, in addition to the pure SAPS number derived from the 2-Tier configuration benchmarks, give you far more information about the capabilities and scalability of the offered VMs and the underlying public cloud infrastructure, like Microsoft Azure.

For that reason, we decided to publish a first series of benchmarks of the Azure VM GS-Series where we used the Azure GS1, GS2, GS3 and GS4 VMs as dedicated DBMS servers and scaled the application layer by using the Azure GS5 VM type.

Actual configurations of these benchmarks

Let’s take a closer look at the configurations we used for these four benchmarks. Let’s start with introducing the Azure GS VM Series. The detailed specifications of this VM Series can be found at the end of this article: https://azure.microsoft.com/en-us/documentation/articles/virtual-machines-size-specs/

Basic capabilities are:

- They leverage Azure Premium Storage, which is a requirement for really scaling load on these VMs. Please note that the certification of the GS VM types requires you to use Azure Premium Storage for the data and transaction log files of the SAP database(s).

- The different VM sizes of this series allow you to attach a different number of VHDs. The larger the VM, the more VHDs can be attached. See the column ‘Max. Data Disks’ in the article mentioned above.

- The larger the VM, the higher the storage throughput bandwidth to Azure Premium Storage. See the column ‘Max. Disk IOPS and Throughput’ in the article mentioned above.

- The larger the VM, the larger the read cache that can be used by Premium Storage disks on the local Azure compute node. See the column ‘Cache Size (GB)’ in the article mentioned above.

Configuration Details:

For all four configurations we used a GS2 VM (4 vCPUs and 56GB memory) to run a traditional SAP Central Instance with its Enqueue and Message services.

As building blocks for the SAP application instances we used GS5 VMs (32 vCPUs and 448GB memory). That kept the configuration manageable and small with regard to the number of VMs. Hence we could limit the number of application server VMs to:

- One GS5 VM running SAP dialog instances for the GS1 dedicated DBMS VM

- Two GS5 VMs running SAP dialog instances for the GS2 dedicated DBMS VM

- Four GS5 VMs running SAP dialog instances for the GS3 dedicated DBMS VM

- Seven GS5 VMs running SAP dialog instances for the GS4 dedicated DBMS VM

As dedicated DBMS VMs we used the Azure GS1 to GS4 VMs. The SQL Server based database that we have used for years for SAP Standard Sales and Distribution benchmarks has 16 data files plus one log file.

Disk configuration on the dedicated DBMS Server

In order to sustain enough IOPS, we chose to use Azure Premium Storage P30 disks. Each of these provides 5000 IOPS and 200MB/sec throughput. Even for the transaction log we used a P30, despite the fact that we did not expect to come anywhere close to 5000 IOPS on this drive. However, the bandwidth and capacity of the P30 drive made it easier to ‘misuse’ the disk for other purposes, like the many frequent database backups of the benchmark database.

Contrary to our guidance in this document: https://go.microsoft.com/fwlink/p/?LinkId=397965 we did not use Windows Storage Spaces at all to build stripes over the data disks. The reason is that the benchmark database is well maintained with its 16 data files and is well balanced in allocated and free space. As a result, SQL Server always allocates and distributes data evenly among the 16 data files. Hence reads from the data files are balanced, as are checkpoint writes into the data files. These are conditions we don’t meet too often with customer systems, which is why our customer guidance is to use Windows Storage Spaces.

We distributed the data files in equal numbers over two P30 data disks (GS1 and GS2) or four P30 data disks (GS3 and GS4). These disks used the Azure Premium Storage read cache.

The log file was on its own disk, which did not use any Azure Premium Storage cache.
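
The even placement of the 16 data files can be pictured as a simple round-robin assignment. The following Python sketch is only an illustration of that idea. The function name and the disk and file numbering are hypothetical and do not reflect the actual file names used in the benchmark.

```python
NUM_DATA_FILES = 16

# Number of P30 data disks per dedicated DBMS VM type, as described above.
DATA_DISKS = {"GS1": 2, "GS2": 2, "GS3": 4, "GS4": 4}


def distribute_data_files(num_files: int, num_disks: int) -> dict[int, list[int]]:
    """Assign data files 1..num_files to disks 0..num_disks-1 round-robin."""
    layout: dict[int, list[int]] = {disk: [] for disk in range(num_disks)}
    for file_no in range(1, num_files + 1):
        layout[(file_no - 1) % num_disks].append(file_no)
    return layout


for vm, disks in DATA_DISKS.items():
    layout = distribute_data_files(NUM_DATA_FILES, disks)
    per_disk = [len(files) for files in layout.values()]
    print(f"{vm}: {disks} data disks, data files per disk: {per_disk}")
```

With 16 data files, two disks hold eight files each and four disks hold four files each, so every disk sees the same share of reads and checkpoint writes as long as SQL Server fills the files evenly.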

Controlling I/O in a bandwidth limited environment

Independent of the deployment, whether bare-metal, private cloud or public cloud, you can encounter situations where hard limits of IOPS or I/O bandwidth are enforced for an OS image or VM. Looking at the specifications of our Azure GS Series, it is apparent that this is the case. This can be a problem with a DBMS, or here SQL Server specifically, because some of the data volumes SQL Server is trying to read or write either depend on the workload or are simply hard to limit. But as soon as such an I/O bandwidth limitation is hit, the impact on the workload can be severe.

One of the scenarios customers have reported for a long time in such bandwidth limited environments is the impact of SQL Server’s checkpoint writer on the writes that need to happen into the transaction log. SQL Server’s checkpoint mechanism only throttles back on issuing I/Os once the latency for writing to the data files hits a 20ms threshold. In most bandwidth limited environments using SSDs, such as Azure Premium Storage, you only get to those 20ms when you hit the bandwidth limits of the VM/server or infrastructure. If all the bandwidth to the storage is eaten up by writing checkpoints, then write operations that need to persist changes in the database transaction log will suffer high latencies, which again impacts the workload severely and instantaneously.

That problem of controlling the volume of data SQL Server’s checkpoint writer can write per second led to two solutions that can be used with SQL Server:

- Introduction of an I/O volume limit SQL Server would honor when writing checkpoints. This method is documented here: https://support.microsoft.com/en-us/kb/929240

- Introduction of indirect checkpoint as documented in this article: https://msdn.microsoft.com/en-us/library/ms189573.aspx

Both methods can be used to limit the volume that SQL Server writes during checkpoints, so that the maximum I/O bandwidth the infrastructure has available is not reached just by writing checkpoints. As a result of limiting the data volume per second that is written to the storage during a checkpoint, other read and write activities will encounter a reliable latency and can therefore serve the workload in a reliable manner.

In the case of our Azure benchmarks we decided to go with the first solution (https://support.microsoft.com/en-us/kb/929240), where we could express the limit of what the checkpoint writer could write per second in MB/sec. Knowing the limit we had with each of the VM types, we usually gave the checkpoint writer 50-75% of the I/O bandwidth of the VM. For the SAP SD benchmark workload we ran, these were appropriate settings. No doubt, for other workloads the limit could look drastically different.

Just to give you an idea: in the benchmark where we used GS4 as dedicated DBMS server running 45100 SAP SD benchmark users, the write volume into the SQL Server transaction log was around 100MB/sec with 1700-1800 I/Os per second.
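
These transaction log figures can be related to the limits of a single P30 disk with some simple arithmetic. The Python sketch below uses only numbers quoted in this article and assumes decimal units (1 MB = 1000 KB). The function names are hypothetical.

```python
# Transaction log write load of the GS4 based benchmark (from this article).
LOG_WRITE_MB_PER_SEC = 100
LOG_IOPS_LOW, LOG_IOPS_HIGH = 1700, 1800

# Limits of a single P30 disk (from this article).
P30_IOPS = 5000
P30_MB_PER_SEC = 200


def avg_write_size_kb(mb_per_sec: float, iops: float) -> float:
    """Average size of a single write in KB (assuming 1 MB = 1000 KB)."""
    return mb_per_sec * 1000 / iops


def utilization(used: float, limit: float) -> float:
    """Fraction of a limit that is consumed."""
    return used / limit


print(f"average log write: {avg_write_size_kb(LOG_WRITE_MB_PER_SEC, LOG_IOPS_HIGH):.1f}"
      f" to {avg_write_size_kb(LOG_WRITE_MB_PER_SEC, LOG_IOPS_LOW):.1f} KB")
print(f"P30 bandwidth used: {utilization(LOG_WRITE_MB_PER_SEC, P30_MB_PER_SEC):.0%}")
print(f"P30 IOPS used: {utilization(LOG_IOPS_HIGH, P30_IOPS):.0%}")
```

The log disk is therefore stressed more in bandwidth than in IOPS, which fits the statement that the benchmark did not come close to the 5000 IOPS of the P30 disk.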

By limiting the data volume per second that the checkpoint writer could issue, we could:

- Provide enough bandwidth for the writes into the transaction log

- Achieve a reliable write latency of around 3ms for writing into the transaction log

- Stay within the limit of the IOPS and data volume a single P30 disk could sustain while writing the checkpoints

- Avoid spreading the data files over more than 4 x P30 disks to sustain checkpoints
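
How such a checkpoint limit could be derived is sketched below in Python. The 50-75% share and the 200MB/sec per P30 disk come from this article. The VM bandwidth limit of 1000MB/sec is purely an illustrative assumption and not a figure from this article, and the function names are hypothetical. Look up the real limit of your VM type in the VM size specifications.

```python
import math

P30_MB_PER_SEC = 200  # throughput of a single P30 disk (from this article)

# ASSUMPTION: illustrative VM storage bandwidth limit, not from this article.
ASSUMED_VM_LIMIT_MB_PER_SEC = 1000


def checkpoint_limit_range(vm_limit_mb_per_sec: float,
                           low: float = 0.50,
                           high: float = 0.75) -> tuple[float, float]:
    """Range of MB/sec to grant the checkpoint writer (50-75% of the VM limit)."""
    return vm_limit_mb_per_sec * low, vm_limit_mb_per_sec * high


def p30_disks_needed(mb_per_sec: float) -> int:
    """Number of P30 data disks needed to absorb a given write rate."""
    return math.ceil(mb_per_sec / P30_MB_PER_SEC)


low_mb, high_mb = checkpoint_limit_range(ASSUMED_VM_LIMIT_MB_PER_SEC)
print(f"checkpoint limit: {low_mb:.0f} to {high_mb:.0f} MB/sec")
print(f"P30 data disks needed: {p30_disks_needed(low_mb)} to {p30_disks_needed(high_mb)}")
print(f"left for log writes and reads: "
      f"{ASSUMED_VM_LIMIT_MB_PER_SEC - high_mb:.0f} to "
      f"{ASSUMED_VM_LIMIT_MB_PER_SEC - low_mb:.0f} MB/sec")
```

Under that assumed limit, the remaining bandwidth still covers the roughly 100MB/sec of transaction log writes, and the checkpoint volume fits on no more than four P30 data disks.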

Dealing with Networking

The one resource in the SAP 2-Tier configuration that is not significantly stressed at all is networking. In 3-Tier configurations, in contrast, this is the most challenging part besides providing low enough disk latency. Just to give an idea: with the GS4 centered SD benchmark running 45100 SAP SD benchmark users, we are looking at around 550,000 network requests per second that the DBMS VM needs to deal with. The data volume exchanged over the network by the DBMS VM and its associated VMs running the dialog instances was around 450MB/sec or 3.6Gbit/sec.
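
The relation between these network figures can be checked with a few lines of Python. This is a small sketch that assumes decimal units (1 MB = 1,000,000 bytes, 1 Gbit = 1000 Mbit). The function names are hypothetical.

```python
# Network load on the GS4 DBMS VM (from this article).
REQUESTS_PER_SEC = 550_000
THROUGHPUT_MB_PER_SEC = 450


def mb_per_sec_to_gbit_per_sec(mb_per_sec: float) -> float:
    """Convert MB/sec to Gbit/sec (8 bits per byte, decimal prefixes)."""
    return mb_per_sec * 8 / 1000


def avg_bytes_per_request(mb_per_sec: float, requests_per_sec: float) -> float:
    """Average payload per network request in bytes."""
    return mb_per_sec * 1_000_000 / requests_per_sec


print(f"{mb_per_sec_to_gbit_per_sec(THROUGHPUT_MB_PER_SEC):.1f} Gbit/sec")
print(f"{avg_bytes_per_request(THROUGHPUT_MB_PER_SEC, REQUESTS_PER_SEC):.0f} bytes per request")
```

The average request is well below a kilobyte, so the challenge is less the raw bandwidth than the sheer number of requests the host and the Guest-OS have to process.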

Looking at those volumes, three challenges arise:

- The network quotas assigned to VMs in such scenarios need to have enough bandwidth assigned to sustain e.g. 3.6Gbit/sec network throughput.

- The Hypervisor used needs to be able to deal with around 550K network requests per second.

- The Guest-OS needs to be able to handle 550K network requests per second.

In the VMs we tested, there was no doubt that they could handle the network volume. So point #1 was a given with these VM types.

Point #2 got resolved in October 2014, when we completed deployment of the Windows Server 2012 R2 Hyper-V to our hundreds of thousands of Azure nodes. With Windows Server 2012 R2, we introduced Virtual RSS (vRSS). This feature allows distributing the handling of network requests over more than one logical CPU on the host.

Point #3 was addressed with Windows Server 2012 R2 as well, through the introduction of vRSS. Before vRSS, all the network requests arriving in the Guest-OS were usually handled on vCPU 0. In our benchmark exercise with the GS4 as dedicated DBMS server, the throughput shown could not be achieved without vRSS, since vCPU 0 of the VM went close to saturation. In the other three SAP benchmarks using GS1, GS2 and GS3 we could work without leveraging vRSS within the DBMS VM. But with GS4, the CPU resources could not be leveraged without using vRSS within the VM.

The benchmark exercises with the different VM types showed that there can be an impact on network handling if a vCPU handling the network requests is running SQL Server workload as well. If such a vCPU had to spend most of its resources on network handling while also carrying SQL Server workload, we could see that the network handling as well as the response time for SQL requests handled on this vCPU got severely affected. This issue certainly got amplified by us using affinity mask settings of <> 0 in the benchmark configuration, which blocks Windows from rescheduling requests on a different vCPU that could provide more resources.

In essence, one can state that:

- For production scenarios where you usually don’t use affinity mask settings of <> 0, you need to use vRSS at least with DBMS VMs/servers that have 16 or more logical or virtual CPUs.

- For scenarios where you are using affinity mask for SQL Server instances, because you e.g. run multiple SQL Server instances and want to restrict CPU resources for each of them, use vRSS as well. Don’t assign (v)CPUs that handle vRSS queues to the SQL Server instance(s). That is what we did in the end in the benchmark that used the GS4 as DBMS VM. This means that in our GS4 benchmark SQL Server used only 14 of the 16 vCPUs. The two vCPUs not running SQL Server were assigned to handle one vRSS queue each.
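
An affinity mask that leaves out the vCPUs reserved for vRSS is just a bitmask with one bit per vCPU. The following Python sketch shows how such a value could be computed for a 16 vCPU VM. Which two vCPUs were reserved in the benchmark is not stated in this article, so the choice of vCPU 0 and vCPU 8 below is a hypothetical example, as is the function name.

```python
def affinity_mask(total_vcpus: int, excluded_vcpus: tuple[int, ...]) -> int:
    """Bitmask with one bit set for every vCPU except the excluded ones."""
    mask = (1 << total_vcpus) - 1  # start with all vCPUs enabled
    for cpu in excluded_vcpus:
        if not 0 <= cpu < total_vcpus:
            raise ValueError(f"vCPU {cpu} does not exist in this VM")
        mask &= ~(1 << cpu)  # clear the bit of the reserved vCPU
    return mask


# HYPOTHETICAL choice: reserve vCPU 0 and vCPU 8 for the two vRSS queues.
RSS_VCPUS = (0, 8)

mask = affinity_mask(16, RSS_VCPUS)
print(f"affinity mask: {mask} ({hex(mask)})")
print(f"vCPUs left for SQL Server: {bin(mask).count('1')}")
```

With two of the 16 vCPUs excluded, 14 bits remain set, which corresponds to the 14 vCPUs SQL Server used in the GS4 benchmark. Treat the resulting number as an illustration and verify the actual setting against the SQL Server documentation before applying it.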

General remarks to vRSS

In order to use vRSS, your Guest-OS needs to be Windows Server 2012 R2 or later. Virtual RSS is off by default in VMs that are deployed on Hyper-V or Azure. You need to enable it when you want to use it. Rough recommendations can look like:

- In cases where you have the affinity mask of SQL Server set to 0, you want to use at least 4 queues and 4 CPUs. The default start vCPU to handle the RSS is usually 0. You can leave that setting. It is expected that vCPUs 0 to 3 are then used for handling the network requests.

- In cases where you exclude vCPUs to handle network requests, you might want to distribute those over the different vNUMA nodes (if there are vNUMA nodes in your VM), so that you still can use the memory in the NUMA nodes in a balanced way.

So all in all this should give you some ideas on how we performed those benchmarks and what the principal pitfalls were and how we got around them.

The remainder of this article lists some minimum data about the benchmarks that we are obligated to publish according to SAP benchmark publication rules (see also: https://global.sap.com/solutions/benchmark/PDF/benchm_publ_proc_3_8.pdf ).

Detailed Benchmark Data:

Benchmark Certification #2015042: Three-tier SAP SD standard application benchmark in Cloud Deployment type. Using SAP ERP 6.0 Enhancement Package 5, the results of 7000 SD benchmark users and 38850 SAPS were achieved using:

- Deployment type: Cloud

- 1 x Azure VM GS2 with 4 CPUs and 56 GB memory running Windows Server 2012 R2 Datacenter as Central Instance

- 1 x Azure VM GS5 with 32 CPUs and 448 GB memory running Windows Server 2012 R2 Datacenter for Dialog Instances

- 1 x Azure VM GS1 with 2 CPUs and 28 GB memory running Windows Server 2012 R2 Datacenter and SQL Server 2012 as DBMS instance

- All VMs hosted on Azure nodes with the capability of providing, dependent on configuration, a maximum of 2 processors / 32 cores / 64 threads based on Intel Xeon Processor E5-2698B v3 with 2.00 GHz

- More details: Link to SAP benchmark webpage follows as soon as SAP updated site with this benchmark result

-------------------------------------------------------------------------------------------------------------------------------------------

Benchmark Certification #2015043: Three-tier SAP SD standard application benchmark in Cloud Deployment type. Using SAP ERP 6.0 Enhancement Package 5, the results of 14060 SD benchmark users and 78620 SAPS were achieved using:

- Deployment type: Cloud

- 1 x Azure VM GS2 with 4 CPUs and 56 GB memory running Windows Server 2012 R2 Datacenter as Central Instance

- 2 x Azure VM GS5 with 32 CPUs and 448 GB memory running Windows Server 2012 R2 Datacenter for Dialog Instances

- 1 x Azure VM GS2 with 4 CPUs and 56 GB memory running Windows Server 2012 R2 Datacenter and SQL Server 2012 as DBMS instance

- All VMs hosted on Azure nodes with the capability of providing, dependent on configuration, a maximum of 2 processors / 32 cores / 64 threads based on Intel Xeon Processor E5-2698B v3 with 2.00 GHz

- More details: Link to SAP benchmark webpage follows as soon as SAP updated site with this benchmark result

-------------------------------------------------------------------------------------------------------------------------------------------

Benchmark Certification #2015044: Three-tier SAP SD standard application benchmark in Cloud Deployment type. Using SAP ERP 6.0 Enhancement Package 5, the results of 24500 SD benchmark users and 137520 SAPS were achieved using:

- Deployment type: Cloud

- 1 x Azure VM GS2 with 4 CPUs and 56 GB memory running Windows Server 2012 R2 Datacenter as Central Instance

- 4 x Azure VM GS5 with 32 CPUs and 448 GB memory running Windows Server 2012 R2 Datacenter for Dialog Instances

- 1 x Azure VM GS3 with 8 CPUs and 112 GB memory running Windows Server 2012 R2 Datacenter and SQL Server 2012 as DBMS instance

- All VMs hosted on Azure nodes with the capability of providing, dependent on configuration, a maximum of 2 processors / 32 cores / 64 threads based on Intel Xeon Processor E5-2698B v3 with 2.00 GHz

- More details: Link to SAP benchmark webpage follows as soon as SAP updated site with this benchmark result

-------------------------------------------------------------------------------------------------------------------------------------------

Benchmark Certification #2015045: Three-tier SAP SD standard application benchmark in Cloud Deployment type. Using SAP ERP 6.0 Enhancement Package 5, the results of 45100 SD benchmark users and 247880 SAPS were achieved using:

- Deployment type: Cloud

- 1 x Azure VM GS2 with 4 CPUs and 56 GB memory running Windows Server 2012 R2 Datacenter as Central Instance

- 7 x Azure VM GS5 with 32 CPUs and 448 GB memory running Windows Server 2012 R2 Datacenter for Dialog Instances

- 1 x Azure VM GS4 with 16 CPUs and 224 GB memory running Windows Server 2012 R2 Datacenter and SQL Server 2012 as DBMS instance

- All VMs hosted on Azure nodes with the capability of providing, dependent on configuration, a maximum of 2 processors / 32 cores / 64 threads based on Intel Xeon Processor E5-2698B v3 with 2.00 GHz

- More details: Link to SAP benchmark webpage follows as soon as SAP updated site with this benchmark result

-------------------------------------------------------------------------------------------------------------------------------------------

For more details see: https://global.sap.com/campaigns/benchmark/index.epx and https://global.sap.com/campaigns/benchmark/appbm_cloud_awareness.epx .