New functionality in SQL Server 2014 – Part 1- Modifiable Column Store Index

As you dear reader realized, there was not that much activity on our blog over the last few months. Reason for the lower output of blogs certainly has to do with work that the team is performing right now in different projects with SAP. One of the projects we were working on hard is SQL Server 2014. Something we want to go into details in a few articles on this blog site.

Overall SQL Server 2014 is a development which mainly focused in the following areas.

- Extending the capabilities of SQL Server’s Column Store Index as it got introduced with SQL Server 2012

- Introduction of In-memory-OLTP

- Introduction of a few functionalities which integrates SQL Server better into Windows Azure Infrastructure as a Service (IaaS) scenarios

- A new Cardinality Estimation for the SQL Server Query Optimizer

- Plus a larger list of smaller improvement which we as those apply will describe in the series of blogs

In September CTP2 of SQL Server 2014 got released. This was the last CTP before we will publish SQL Server 2014 to the public in the first half of 2014. As usual all the preparations with SAP are in place to start the evaluation process as soon as SQL Server 2014 is released to the public. Details about the supported SAP releases will be discussed in a later blog entry. But it is not planned to be massively different from what is supported with SQL Server 2012.

When looking at some of the features as Column Store Indexes or our In-Memory-OLTP one will see differences to some of our competitors in this space. Especially to some that also have Column Store and/or in-memory functionality. Background for these differences have to do with some overarching principles or facts taken into account when developing new functionality for SQL Server.

As such can be listed:

- Investment protection of millions of customers who invested into SQL Server. Features that are meaningful and open up new type of applications for RDBMS need to be integrated into the existing SQL Server RDBMS framework. Creating just another new engine is not acceptable for the most of customers and partners who invested a lot of money and resources into existing SQL Server engines.

- Ability to have SQL Server leveraging new functionality on existing commodity hardware – no hard requirement to buy new hardware or expensive appliances to leverage features like Column Store Indexes or In-Memory-OLTP

- Features of SQL Server should be usable on typical Infrastructure as a Service (IaaS) Cloud providers. Hardware used by typical IaaS Service providers, like Microsoft with Windows Azure, is commodity hardware though not with huge amounts of CPUs and Terabytes of memory as we find it for very high-end DBMS servers at customer sites. The price points which make IaaS Services with e.g. Windows Azure so attractive are achieved by using hardware with a very excellent price/performance ratio. Today (around January 2014) this usually is 2-socket hardware with moderate memory of around 128GB to 256GB. The ability for our customers to leverage new SQL Server features in Virtual machines running on such type of hardware is very important for feature design in SQL Server

- Integration of new functionality into existing HA/DR frameworks is key. In order to enable consumption of new functionality implemented in SQL Server, ideally there are no changes required in HA/DR configurations for mission critical configurations. Nor should 3rd party software which is used for operational and administrative process such as backup/restore require adaption or changes.

Given these four items as principles, solutions as they showed up in SQL Server 2012 and will show up in SQL Server 2014 might look differently than the solutions presented by some of our competitors. Some competitive products were created without any installed customer base or do have a different customer base where the main hardware used is not commodity based, but more based on proprietary hardware with a complete different price point and also no strings attached to any IaaS Service offers.

That just as background on the features we delivered and are going to deliver. Now let’s look at the first functionality which will change in SQL Server 2014

SQL Server Column Store Index

As reported in this Blog location in several articles, SQL Server 2012 introduced a Column Store Index which is fully supported by SAP BW. In the order, these are the articles published so far:

- https://blogs.msdn.com/b/saponsqlserver/archive/2012/05/23/sql-server-2012-column-store-support-for-sap-bw.aspx

- https://blogs.msdn.com/b/saponsqlserver/archive/2012/12/17/sql-server-column-store-updated-sap-bw-code.aspx

- https://blogs.msdn.com/b/saponsqlserver/archive/2013/03/19/optimizing-bw-query-performance.aspx

Summarizing and giving a bit more background on what was and is going to be delivered with our Column Store Index functionality we look at the following characteristics:

- In order to make Column Store Indexes really efficient one needed to break away from representing the Column Store Index data in the usual 8KB page format in SQL Server’s bufferpool.

- As a result Column Store Index data is represented in its own memory area (different than SQL Server’s buffer pool) which is not organized in 8KB pages, but deal with larger variable Column Store Index segments in size of several MBs. Having such an independent addressable memory space allows the algorithms for searching through Column Store Index data to be more efficient than searches of pages in the regular bufferpool. The size of a particular segment is defined by the number of rows and the compression factor one can achieve.

- Since a Column Store index segment contains values of one column only and these segments are stored independent of each other on storage, only segments which relate to columns in the select list of statements need to be loaded into memory

- The Column Store Index data is still being stored on storage broken down into 8KB pages as BLOB data. As a result there was no need for introducing a new storage type with all its related changes and eventual impact to the 3rd party software used by SQL Server customers

- As with other memory segments, like SQL Server bufferpool, the memory area used for Column Store Indexes is NUMA oriented. Additionally the memory area used to store Column Store Index structures is using ‘large pages’. This improves efficiency in virtual address to physical address mapping by the underlying hardware.

- The way how table joins work got changed and a so called ‘batch mode’ introduced which increases efficiency of joins leveraging Column Store Index structures dramatically.

- Great performance out of Column Store Index structures is achieved as well with parallel scanning of segments of the columns. Something that works extremely scalable with the implementation in SQL Server. A fact that also was considered in the integration of Column Store Indexes into SAP BW, where queries are getting hinted to be executed in parallel (configurable within SAP BW – see articles listed earlier).

- In order to follow the principles to develop functionality for SQL Server, decision was taken not to require in-memory residency of the Column Store Index data. Or in other words, in opposite to some competitors, where ALL column oriented data needs to fit into the memory available, the solution implemented in SQL Server still relies on storing Column Store Index data on storage and loading from storage if needed. As a result Column Store Indexes also are suitable in IaaS cloud environments like Azure.

An excellent graphics of how the Column Store Indexes of SQL Server are structured and stored on storage can be found in graphics #1 in this document:

https://research.microsoft.com/pubs/193599/Apollo3%20-%20Sigmod%202013%20-%20final.pdf

Since SQL Server 2012 Column Store Indexes were released with SAP, there were many customers applying it already. Many customers tested it with competitive products underneath SAP BW workload and decided that SQL Server Column Store Indexes fulfilled most of their criteria of:

- As long as the SAP BW reports were database bound (significant time of the report was spent in the DBMS layer), Column Store Indexes as supported by SAP BW on top of SQL Server improved performance of reports significantly and sufficiently by factors

- Investments into new server hardware or memory upgrades were moderate or in most cases not necessary at all. Though SQL Server will add another cache to keep Column Store Data, the overall size of a BW cube is smaller. Hence less row oriented pages need to be kept in the regular buffer pool. Means the reduction of pages that involve the SAP BW cube will more or less compensate for the memory used by Column Store data. Whereas with some competitive products significant investments into infrastructure would have been necessary.

- High-Availability and Disaster Recovery scenarios did work as before and no new investments or upgrades into 3rd party software were required.

- Especially in migrations from other platforms and older hardware, the results running SAP BW scenarios were similar to those of competitive DBMS platforms, however with a free choice of infrastructure.

- Customers leveraging SQL Server 2012 realized very substantial compression over and on top of SQL Server PAGE compression. An example is a customer where a single BW cube was 100GB uncompressed, 30GB after SQL PAGE compression and only 4.5GB when converted to Column Store. Note: Experiences in Compression do vary and are dependent on the structure of the BW Cube and more important on the data in the Cube.

As you see there are quite a bit of positive experiences that we could collect. Some of those experiences also went into further extensions to SQL Server 2014. The extensions to SQL Server 2014 in regards to Column Store Indexes can be listed like:

- In general the range of datatypes and the precision of datatypes that are able to be stored in Column Store Indexes got changed in SQL Server 2014. We encountered issues with some application based on BW which were using datatypes with a precision which was not supported in SQL Server 2012 Column Store Indexes. With SQL Server 2014, this will not be the case anymore

- We enabled the Column Store Index to become a clustered index. That means that the data will be available in a column oriented representation exclusively. This is different to the non-clustered Column Store Index we got so far, where row plus column oriented representation was stored.

- SQL Server enables modifications to the clustered Column Store Index. Unlike the non-clustered Column Store Index which is and remained read-only, the clustered Column Store Index is modifiable

These are the most important changes which will enable a wider range of scenarios to be covered with SQL Server 2014 Column Store Indexes. What remained unchanged is that the Column Store Index data has no requirement to be in-memory resident only. But as before the Column Store Index data is stored in the SQL Server data files.

How do modifications to a Column Store Index actually work?

As we meanwhile all learned, column oriented data representation have some downsides compared to row oriented representation. Not too surprising given that over the last 25 years where one spent hundreds of developer years to really tune the row oriented data representation in RDBMS systems to its absolute best. There still remain a few areas where column oriented representation lag in performance behind the row oriented in certain usage scenarios. Some of these cases are:

- Selecting single rows with all the columns in the select list. Column store constructs are optimized in scanning highly parallel through their segments in order to aggregate values of a few columns. Where column store constructs are performing slower is when just one single row is desired, but all columns of this one row. The way how most column stores are represented in memory, this requires more CPU cycles than just searching through a B-Tree index, to find the page with the row and to read the complete row stored on that page.

- Another difficult case for column stores are modifications of a single or multiple rows. The way how the compression is achieved is a bit more complex than just the page dictionary compression applied on database pages storing row oriented data. Therefore changing a row or multiple rows could require several activities within the column segment where column values need to be changed. This effort would be too massive for time critical modification operations. Therefore one needs to find another way to perform modifications.

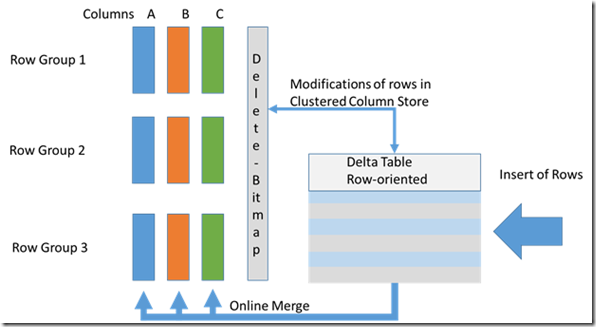

How it works can be demonstrated nicely with the simple graphics below:

Additionally to just have the clustered index structure which includes the column oriented data, there also is a row oriented table aside which includes so called ‘deltas’. As additional construct of Column Store Indexes we introduced a ‘Delete Bitmap’. The different modification operations are handled like:

- Deletes of rows in the clustered Column Store Index: Just mark the row in the ‘Delete Bitmap’ as logically deleted. A physical delete of the row will happen at a later point in time.

- Changes (Updates) to rows in the clustered Column Store Index: Is a two-step process which marks a row in the existing Column Store Index as deleted (Delete-Bitmap) and a second steps inserts the row with the changed column values into the delta store.

- Inserting a row: The row is going to be inserted into the delta store.

At a point in time the delta store is merged in the background with the column oriented structures. Means the rows move from a row oriented form into a column oriented form organized in segments and row groups. The merge usually is driven by a threshold of rows in the delta store. Specifically for SAP BW a SQL command got introduced that allows to start a merge after a Delta Loads to the F-Fact table of a BW cube.

Again more details of the implementation can be read in:

https://research.microsoft.com/pubs/193599/Apollo3%20-%20Sigmod%202013%20-%20final.pdf

A look at the space savings

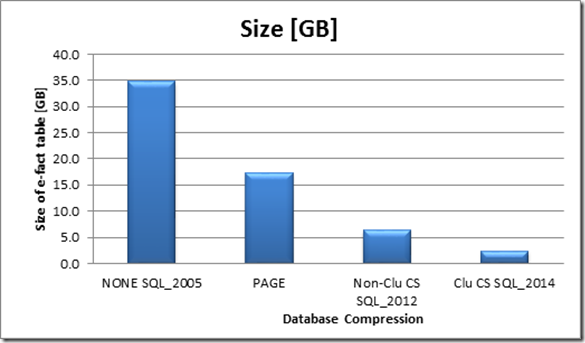

Assuming a fact table of 100 million rows, of a SAP BW cube, we made the following space measurements with the different versions of SQL Server. The BW cube implementation against the different versions of SQL Server changed over time from an indexing schema as well in order to leverage functionality like SQL Server Column Store Indexes. This also demonstrates to a great deal the progress in reduction of infrastructure investments like storage, especially for storage infrastructure. Or to keep such investments limited despite highly increasing data volumes.

As visible the Fact table occupied around 35GB with SQL Server 2005. The introduction of Page Dictionary compression and its subsequent usage by SAP NetWeaver applications provided a 50% reduction of volume already. Leveraging the read-only non-clustered Column Store Index of SQL Server reduced the space again dramatically to 6.3 GB. This reduction is also related to a drop of other non-clustered row oriented indexes against a typical Fact table. Using the same SAP BW implementation, but just using the clustered Column Store Index instead of the non-clustered one again reduces the volume. Reason is that the data is now held in the column oriented representation only. This reduces the volume down to 2.4GB.

Quite impressive steps going from SQL Server 2005 even to e.g. SQL Server 2008 R2 which provides Page Dictionary compression (including UCS2 compression). But even more impressive is what the move to Column Store Indexes can achieve in SAP BW. The effects are certainly boosted by the fact that one can rid of a lot of row oriented indexes on the SAP BW cubes.

As mentioned earlier compression rates can vary. Some of our customers observed better factors using SQL Server 2012 Column Store Index. But as with all the compression algorithms in the RDBMS systems, the factors of compression are majorly influenced by the nature of the data. This is true for the traditional PAGE dictionary compression of SQL Server (and other RDBMS) as well as the compression factors which can be achieved with storing data in a column oriented manner. In the case of SAP BW, the factor also is highly dependent on the number of SAP BW Aggregates which we usually drop when applying the Column Store Index to a fact table. Therefore numbers can vary.

This article only should give a small overview on SQL Server Column Store Indexes and its extensions in SQL Server 2014. Details about the implementation into SAP BW, releases covered, Support Packages required will, as always, be delivered by Martin in form of SAP Notes or blog articles at a time when we are coming close to release.

In the next article, we will look into the new SQL Server Cardinality Estimation logic as it will be released with SQL Server 2014 and it applies for the SAP NetWeaver architecture or not.