Infrastructure Recommendations for SAP on SQL Server: “in-memory” Performance

In recent years the capabilities of Intel and AMD commodity servers have grown exponentially. Processing power (SAPS) has increased and the cost of RAM has decreased dramatically. SQL Server leverages these developments in hardware technologies with features that reduce storage and memory footprint, such as SQL Server PAGE compression and SQL Server Column Store, to deliver “in-memory” performance to SAP customers. This blog discusses performance aspects of commodity hardware for SAP customers running SQL Server. Following the guidance in this blog will ensure customers achieve great performance today and in the future, at a reasonable cost, running on a mainstream, supportable technology platform.

1. SAP Sizing Process

In order to maximize performance we recommend applying additional factors and considerations after conducting a SAP sizing analysis. Some factors, such as the use of SSD and large amounts of RAM, are not covered by SAP sizing techniques at all. There are a number of approaches to sizing SAP systems:

Reference Based Sizing – ST03 and EarlyWatch data is analysed and compared to another known customer with similar workload and a known hardware configuration

SAP Quicksizer – SAP tool that calculates SAPS, DB size and RAM based on many inputs including business document volumes and number of users

T-Shirt – sizing based on the anticipated number of SAP users where precise information is unavailable

To deliver optimal performance the output of traditional sizing processes is the starting point for sizing SAP on SQL Server. This blog now discusses additional factors that must be included to deliver optimal performance.

2. Why Purchase More Memory than Sizing Tools Calculate?

SAP running on Windows and SQL Server can deliver substantially better performance when three additional factors are taken into consideration during a SAP sizing exercise. (A) Increased RAM (B) Ultra-Fast disk storage – Solid State Disk (SSD) or NAND and (C) 10 Gigabit networking. Microsoft and SAP resources who support SAP on SQL Server customers and who run performance labs have repeatedly identified these three areas as possible bottlenecks.

Due to advancements in commodity hardware platforms and SQL Server making more efficient use of memory through compression technologies, it is now possible in many cases to store all or almost all data of an entire SAP database in memory. Today there are hundreds of SAP on SQL Server customers that have used SQL Server PAGE compression to shrink their databases by 80-88% and run these systems on servers with large amounts of physical memory. In all cases these customers see almost zero reads to disk, near 100% cache hit ratio and dramatically improved performance. As expected, SQL Server is able to read data from SQL Server cache hundreds of times faster than from disk regardless of how much SAN cache or other technologies are deployed. A disk IO operation requires the database to issue a command to the operating system rather than containing the request within the context of the database process. External IO operations are always vastly more “expensive” than a lookup in SQL Server buffer which is an internal operation.
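The compression figures above can be turned into a quick back-of-the-envelope check. The following is a minimal sketch, not a sizing tool: the function names and the 5TB example database are assumptions made for illustration, and the check ignores the memory needed by Windows and by SQL Server outside the data cache.

```python
def compressed_size_tb(uncompressed_tb, reduction_pct):
    """Database size after PAGE compression shrinks it by reduction_pct percent."""
    if not 0 <= reduction_pct < 100:
        raise ValueError("reduction_pct must be in the range [0, 100)")
    return uncompressed_tb * (1 - reduction_pct / 100)


def fits_in_ram(db_size_tb, ram_tb):
    """Simplified check: can the whole database be held in RAM?"""
    return db_size_tb <= ram_tb


if __name__ == "__main__":
    uncompressed_tb = 5.0  # hypothetical uncompressed database size
    ram_tb = 1.0           # hypothetical server memory
    for pct in (80, 88):   # the reduction range quoted in the text
        size = compressed_size_tb(uncompressed_tb, pct)
        print(f"{pct}% reduction: {size:.2f} TB, "
              f"fits in {ram_tb:.0f} TB RAM: {fits_in_ram(size, ram_tb)}")
```

Under these assumed inputs, a 5TB uncompressed database shrinks to roughly 0.6-1.0TB, which is why a server with 1TB of RAM can hold all or almost all of it in cache.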

SQL Server 2012 introduces a new Column Store Index feature that dramatically improves performance of OLAP type queries and also dramatically increases the compression efficiency. Compression ratios on BW infocubes of over 95% are common. SQL Server 2012 Column Store index is already released and integrated into SAP BW. Column Store structures like SQL Server’s Column Store Index benefit from having the complete structures in-memory when data is being retrieved. OLAP databases that are larger than the amount of physical memory available will show similar benefits when they are running on ultra-fast SSD or NAND storage. Teradata, Vertica, HANA and other Column Store engines leverage in-memory column-store structures and in some cases mandate that these column-store structures are in-memory resident.

With large amounts of RAM, physical IO has been observed to almost cease within several hours after restarting SQL Server. The buffering mechanism will take a few hours to fully populate a 1TB data cache.

3. Ultra-Fast Solid State Disk Storage (SSD)

SQL Server, like other ACID compliant databases, performs write ahead logging and check-pointing of in-memory data. In order for a database to satisfy the ACID requirements there must always be writes to disk in case of some kind of infrastructure failure. All DBMS including all ACID “in-memory” technologies available today must still write to a persistent storage (disk) before a transaction is committed. Therefore the INSERT, UPDATE and DELETE performance of all “in-memory” databases is still dependent on disk performance. All ACID “in-memory” technologies must also periodically checkpoint or write the changed memory contents to the database persistence layer on disk. If changes to the contents of an “in-memory” database were never written back to the disk persisted copy of the database, the shutdown, abort and recovery process would take an extraordinarily long time. The DBMS would need to apply all of the changes accumulated in the transaction log to the disk based persistence layer – a busy multi-terabyte database could take some hours to recover if the checkpoint interval was too long. "In-memory" databases that are ACID compliant therefore must still write to persistent storage for at least two reasons: (1) Writing Transaction Logs (2) Regular Checkpointing of changed data.
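The interaction between logging, checkpointing and recovery time can be illustrated with a toy model. This is a sketch only and does not represent how SQL Server or any other DBMS is implemented: the class `ToyDatabase` and all of its members are hypothetical, "disk" is simulated with ordinary Python objects, and only redo of committed single-row changes is modelled (no undo, no multi-statement transactions).

```python
class ToyDatabase:
    """Toy write-ahead logging model; 'disk' structures are plain Python objects."""

    def __init__(self):
        self.log = []            # simulated persistent transaction log (append-only)
        self.data_file = {}      # simulated persistent data file
        self.cache = {}          # in-memory copy of the data, lost on a crash
        self.dirty = set()       # keys changed in memory since the last checkpoint
        self.checkpoint_pos = 0  # log records before this position are in data_file

    def commit(self, key, value):
        # Write-ahead rule: the log record is persisted before memory is changed.
        self.log.append((key, value))
        self.cache[key] = value
        self.dirty.add(key)

    def checkpoint(self):
        # Write all changed in-memory rows back to the persistent data file.
        for key in self.dirty:
            self.data_file[key] = self.cache[key]
        self.dirty.clear()
        self.checkpoint_pos = len(self.log)

    def crash_and_recover(self):
        # Memory contents are lost; rebuild them from the data file and the log.
        self.cache = dict(self.data_file)
        self.dirty = set()
        replayed = 0
        for key, value in self.log[self.checkpoint_pos:]:
            self.cache[key] = value
            self.dirty.add(key)
            replayed += 1
        return replayed  # number of log records that had to be re-applied


def recovery_work(updates_before_checkpoint, updates_after, do_checkpoint):
    """Return how many log records recovery must replay in a given scenario."""
    db = ToyDatabase()
    for i in range(updates_before_checkpoint):
        db.commit(f"row-{i}", i)
    if do_checkpoint:
        db.checkpoint()
    for i in range(updates_after):
        db.commit(f"late-row-{i}", i)
    return db.crash_and_recover()


if __name__ == "__main__":
    print("with checkpoint:   ", recovery_work(1000, 10, do_checkpoint=True))
    print("without checkpoint:", recovery_work(1000, 10, do_checkpoint=False))
```

In this model, recovery replays only the records written after the last checkpoint, so a regular checkpoint keeps recovery work small, whereas a database that never checkpoints has to replay its entire log.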

In traditional RDBMS the exchange between disk storage and memory when the size of the database exceeds that of the physical RAM can be sped up with SSD. Solid State Disk and NAND technologies change the response times of these mandatory log and checkpoint write operations from something measured in milliseconds to something measured in microseconds. As discussed in section 2 of this blog there will be little if any physical read to disk as the majority of the database is cache resident. SSD and NAND do also greatly accelerate read operations.
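To see why the move from milliseconds to microseconds matters for log writes, consider a single stream of commits in which each commit waits for its own log write. The sketch below is a simplification: the latencies of 5 ms and 50 µs are assumed values chosen for illustration, not measurements, and real systems can batch several commits into one log write.

```python
def max_serial_commits_per_second(write_latency_us):
    """Upper bound on commits per second when each commit waits for one log write."""
    if write_latency_us <= 0:
        raise ValueError("write_latency_us must be positive")
    return 1_000_000 / write_latency_us


if __name__ == "__main__":
    # Hypothetical latencies: 5 ms for a disk-based log write, 50 us for SSD/NAND.
    for label, latency_us in (("5 ms", 5_000), ("50 us", 50)):
        rate = max_serial_commits_per_second(latency_us)
        print(f"log write latency {label}: at most {rate:,.0f} serial commits/sec")
```

With these assumed numbers the ceiling for one serial commit stream rises from 200 to 20,000 commits per second.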

FusionIO and other similar technologies are significantly different from SAN based SSD solutions in terms of overall concept. FusionIO (or similar) are direct local disks and do not rely on a highly performing Fibre Channel network, MPIO software and other components. FusionIO disks plug directly into the PCIe slots on a server motherboard and are therefore in very close proximity to the CPU and RAM. Latency is reduced due to the close proximity and direct connectivity between disk, CPU and RAM; this is especially important for SQL Server transaction logs and tempdb.

Local SSDs and PCI devices cannot be shared disks. This does not present a problem however as SQL Server 2012 AlwaysOn does not depend on shared SAN storage to deliver High Availability. AlwaysOn is therefore the preferred method to achieve High Availability when SQL Server Log and/or Datafiles are stored entirely on local SSD disks such as FusionIO.

With the availability of 2TB and 3TB cards increasing numbers of customers are deploying both SQL Server datafiles and logfiles onto those cards. FusionIO cards plug directly into the PCI bus within a server and are only available for Intel/AMD based servers.

Solid State Disk solutions that are deployed include the following:

1. FusionIO – www.fusionio.com (FusionIO cards are resold by most hardware vendors such as HP, Cisco, Dell, IBM etc)

2. Violin Memory - www.violin-memory.com

BW Loads and other write intensive operations greatly benefit from SSD/NAND technologies.

4. 10 Gigabit Network

3 tier systems are generally recommended for medium and large sized customers. 3 tier systems depend on an extremely low latency, reliable and high bandwidth network connection between the database tier (1st tier) and the SAP application server tier (2nd tier). The connection between the SAP application server and the client PC (3rd tier) is not particularly performance critical.

In recent years Microsoft and network card vendors have developed a large number of technologies to offload network processing onto the network card and to better distribute network processing within Windows. These technologies are now proven and evolved on 10 Gigabit Network cards from Emulex, Intel and others. Many of these features are not available on 1 Gigabit Network cards. Serious problems such as described in Network Settings, Network Teaming, Receive Side Scaling (RSS) & Unbalanced CPU Load can occur on 1 Gigabit networks. Modern good quality 10 Gigabit Network Cards do not have these issues.

It is mandatory to deploy a 10 Gigabit network between the Database and the SAP application tier for larger systems. This is detailed in the blog How to Setup a Dedicated SAP Application Server to DB Server Network.

5. Recommended Hardware Configurations

SAP Note 1612283 - Hardware Configuration Standards and Guidance provides comprehensive details about modern Intel server platforms. As stated in Note 1612283 Intel based platforms account for 99%* of all servers shipped each year and this is driving huge R&D, resulting in dramatic gains in SAPS and in the amount of memory supported every 12-18 months.

Database Servers – Minimum Recommended Configurations:

2 Socket AMD or Intel with 384-768GB RAM, 10 Gigabit Network Card (up to ~40,000 SAPS as at May 2013)

4 Socket AMD or Intel with 1000-2000GB RAM, 10 Gigabit Network Card (up to ~70,000 SAPS as at May 2013)

8 Socket Intel with 2000-4000GB RAM, 10 Gigabit Network Card (up to ~135,000 SAPS as at May 2013)

*for full disclosure review SAP SD Benchmark site

SAP Application Server – Recommended Configuration:

2 Socket AMD or Intel with 384-768GB RAM, 10 Gigabit Network Card (up to ~40,000 SAPS as at May 2013)

SAP work processes on all operating systems are single threaded. Therefore the maximum performance of many SAP business processes is governed by the maximum “thread” performance or SAPS/thread. SAP Note 1612283 - Hardware Configuration Standards and Guidance discusses this topic in more detail, but in general it is recommended to buy servers with higher clock speeds (higher GHz). 4 socket servers have slower clock speeds than 2 socket servers and are not recommended for use as a SAP application server. Many SAP ABAP application servers can be consolidated to a single 2 socket server with or without virtualization. The attachment to Note 1612283 has more details.

*IDC Server tracker data. Gartner data.

6. What about “4GB per core” or “6GB per 1000 SAPS” Sizing Formula?

To deliver optimal performance on SAP on SQL Server systems it is recommended to disregard these old and out-dated approaches to sizing. These formulas do not take into consideration concepts such as SQL Server PAGE compression, column store and several other important concepts.

These guidelines were developed at a time when CPU (SAPS) and RAM were both scarce and expensive. Neither condition is true with modern Intel hardware (this does however remain true for proprietary systems).

Failure to have sufficient memory in a database server will significantly impact the overall performance of SAP on SQL Server.

Given the rapid innovation in Intel/AMD processors, Windows and SQL Server any static rule such as 4GB per core is invalid. As server costs decrease and new features are added to Windows and SQL Server this blog will contain the information regarding optimal hardware configuration. This is based on lab testing with various hardware vendors and experience from real customer systems.

7. How much extra does it cost to achieve “in-memory” performance?

Most hardware vendors allow customers to configure servers online and obtain an initial quotation.

In the example below www.dell.com is randomly selected to configure a SAP application server as follows:

Dell R720 2 x Intel E5 2670, 384GB RAM (24 x 16GB), Intel 10G Network Card, Power supply + 3 years support = ~USD 10,000

This configuration would support in the range of 3-8 SAP ABAP application instances/servers dependent on their size and workload and is very highly performing. Cutting the memory from 384GB to 96GB holding all other factors identical saves only $3,800 USD (as of June 2013). Attempting to run more than 2-3 SAP ABAP application instances/servers on a 96GB RAM configuration server could lead to severe performance problems. In addition the very powerful processors will be unable to reach significant utilization due to insufficient memory.

A similar situation is observed with a 4 socket Database server:

Dell R910 4 x Intel E7 4850 2Ghz, 1000GB RAM (64 x 16GB), Intel 10G NIC, HBA, Power supply + 3 years support = ~USD 32,000

Reducing the memory to 128GB lowers the cost by only $9,700 USD (as of June 2013). With only 128GB, SQL Server will be unable to fully cache the database in memory, unable to provide enough cache for features like Column Store, unable to effectively leverage the huge processing power available and unable to fully exploit additional features. A powerful 4 socket 40 core 80 thread server with only 128GB RAM will be 3-10x slower than the same server with 1TB of RAM, even though the additional memory costs less than $10,000 USD.
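The two quotations above imply a price per gigabyte for the additional memory. The short sketch below works this out from the figures quoted in this section (June 2013 list prices); the function name is an assumption made for illustration.

```python
def cost_per_extra_gb(large_gb, small_gb, price_difference_usd):
    """Price of each additional GB when moving from the small to the large configuration."""
    extra_gb = large_gb - small_gb
    if extra_gb <= 0:
        raise ValueError("large_gb must be greater than small_gb")
    return price_difference_usd / extra_gb


if __name__ == "__main__":
    # Application server example: 384GB vs 96GB, difference of $3,800 USD.
    app = cost_per_extra_gb(384, 96, 3800)
    # Database server example: 1000GB vs 128GB, difference of $9,700 USD.
    db = cost_per_extra_gb(1000, 128, 9700)
    print(f"application server: ${app:.2f} per additional GB")
    print(f"database server:    ${db:.2f} per additional GB")
```

Both examples work out to roughly $11-13 USD per additional gigabyte.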

Based on actual customer deployments we have observed:

1TB of RAM can fully cache a 1TB PAGE compressed database.

1TB PAGE compressed ECC 6 EHP 6 equates to between 4.5-6.5TB of uncompressed data. 1TB BW with Column Store could equate to well over 7-8TB uncompressed.

1TB of RAM would also be sufficient to provide near in-memory performance for compressed databases up to 2.5TB in size (as the cache size is still a very high percentage of physical DB size). Very little disk IO would occur and cache hit ratios will still be around 99.5%.

A 2.5TB PAGE compressed ECC 6.0 EHP 6 database equates to in excess of 10-12TB uncompressed (such as an Oracle 10g database), and much more for BW systems with Column Store.

As long as memory amounts to at least 25%-50% of the compressed database size, we will be able to achieve near in-memory performance, as this is usually enough to cache the active (in-use) part of the database. Large parts of SAP databases contain legacy information that is rarely or never accessed. SQL Server only needs to cache the portion of the database that is active to achieve a high level of performance, in contrast to other in-memory databases that have a hard requirement to cache the entire database or they will not operate.
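The memory-to-database ratio described above is easy to check for a given system. The following is a minimal sketch under stated assumptions: the function names and category labels are made up for illustration, and the default threshold of 0.25 is the lower end of the 25%-50% range quoted in the text (pass 0.5 for the conservative end).

```python
def cache_ratio(ram_tb, compressed_db_tb):
    """Ratio of server memory to compressed database size."""
    if compressed_db_tb <= 0:
        raise ValueError("compressed_db_tb must be positive")
    return ram_tb / compressed_db_tb


def classify(ratio, threshold=0.25):
    """Map a ratio onto the observations in this section (labels are illustrative)."""
    if ratio >= 1.0:
        return "fully cached"
    if ratio >= threshold:
        return "near in-memory"
    return "below guideline"


if __name__ == "__main__":
    # (RAM in TB, compressed DB size in TB); the 8TB case is hypothetical.
    for ram_tb, db_tb in ((1.0, 1.0), (1.0, 2.5), (1.0, 8.0)):
        ratio = cache_ratio(ram_tb, db_tb)
        print(f"{ram_tb:.1f} TB RAM / {db_tb:.1f} TB DB = {ratio:.0%}: {classify(ratio)}")
```

For the 1TB RAM and 2.5TB database case discussed above, the ratio is 40%, which falls inside the quoted range.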

8. Summary

Modern Intel based servers are benefiting from the economies of scale and momentum of Research & Development.

Intel and AMD release new generations of processors and chipsets that drive performance, scalability and reliability ever higher every 12-18 months. Simultaneously the cost of hardware is reducing.

Recommendations:

1. Memory is very inexpensive – do not purchase a server with less than 384GB

2. Leveraging the vast CPU processing power is highly dependent on sufficient memory

3. 10 Gigabit network provides many offload and balancing features in addition to extra bandwidth – always purchase 10 Gigabit network for the internal SAP network

4. Non-rotating storage in form of SSD and NAND based solutions have shown considerable performance gains – medium to large size database servers are likely to greatly benefit from SSD/NAND technologies

9. Frequently Asked Questions (FAQ)

Q1: What is meant by the term “in-memory” and what is the difference between latchless in-memory and disk block caching?

A1: The concept of a database cache in memory is nothing new and has existed for decades. On disk a database is divided into discrete pages (or “blocks”). Disk block caching is a mechanism to replicate these disk structures in memory whilst preserving consistency. Latches are a mechanism used by disk block caching functions to preserve consistency and can be a source of latency if there is an attempt to read and write to the same block simultaneously. At a very simple level a “latch” is a lock (mutual exclusion - mutex) on a DB page/block. Latchless in-memory removes the concept of a “page” or block. Removing the concept of dividing a database into blocks and storing data as an array in memory can have some performance advantages for some applications. Exactly how much additional incremental performance this provides over and above a database that is already fully cache resident using traditional disk block caching depends on many factors such as the type of queries, degree of locking/blocking and application coding.
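The "latch as a mutex on a page" idea can be sketched in a few lines. This is a conceptual illustration only and not how SQL Server implements latches: the `Page` class and function names are hypothetical, and a plain mutex is used even though real latches distinguish modes such as shared and exclusive.

```python
import threading


class Page:
    """A cached database page protected by a latch (modelled as a simple mutex)."""

    def __init__(self):
        self.rows = {}
        self.latch = threading.Lock()


def increment_row(page, key):
    # Only one thread at a time may modify the page; others wait on the latch.
    with page.latch:
        page.rows[key] = page.rows.get(key, 0) + 1


def run_concurrent_updates(workers=4, updates_each=1000):
    """Have several threads update the same row on the same page."""
    page = Page()

    def worker():
        for _ in range(updates_each):
            increment_row(page, "row-1")

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return page.rows["row-1"]


if __name__ == "__main__":
    print(run_concurrent_updates())
```

The latch keeps the page consistent, so no update is lost, but every thread that touches the same page must wait its turn. That waiting is the latency referred to above, and it only becomes significant when many sessions contend for the same page at the same time.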

SAP Business Suite and ERP systems are OLTP applications and hardly see latch contention since critical tables have index structures that prevent this type of contention. Only a small amount of CPU resources (much less than 20% of the CPU consumption of SQL Server) is spent for searching qualifying rows in the buffer pool. The effect of having the data latchless in-memory resident is therefore very unlikely to have any significant performance advantage.

SAP BW is an OLAP application and therefore greatly benefits from data presented in memory in a column-oriented structure. Whether the structure is one that is latchless or not has no measurable effect on performance.

The next version of SQL Server will include a latchless in-memory optimized feature called "In-Memory OLTP", which is fully embedded into the SQL Server RDBMS engine and integrated with all Enterprise Grade RDBMS features such as HA/DR functionality.

Microsoft are still performing testing and analysis to determine the benefit(s) of a latchless in-memory type technology on SAP applications. Future blog posts will contain updates on this technology.

Q2: What about Unicode databases? How does Unicode affect sizing?

A2: Releases since SQL Server 2008 R2 are as efficient as or even more efficient than other DBMS for some languages. Unicode does increase the CPU and memory requirements especially for the SAP application server. There is no overhead in storing Unicode data on disk relative to other DBMS and SQL Server will often be more efficient at Unicode storage for non-Latin characters.

Q3: How to calculate SAPS per core and SAPS per thread?

A3: All SAP benchmarks are publicly available at https://www.sap.com/campaigns/benchmark/index.epx. Download the certificate, find the total SAPS number and divide it by the number of threads. The number of cores is not a useful measure as SAP work processes do not execute per core. As at May 2013 SAP on SQL Server demonstrates the highest SAPS/thread of any platform on the SD 2 Tier EHP 5 Benchmark.

Q4: How can SQL Server be clustered with FusionIO cards? Isn’t shared storage always required?

A4: SQL Server 2012 and higher supports AlwaysOn. AlwaysOn does not require shared storage of any kind. See earlier posts in this blog for more information on AlwaysOn.
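The division described in A3 is shown below as a small sketch. The inputs are placeholders loosely based on the approximate SAPS figures in section 5 combined with assumed thread counts (32 threads for a 2 socket server, 80 threads for a 4 socket server); they are not taken from any benchmark certificate and should be replaced with the numbers from an actual certificate.

```python
def saps_per_thread(total_saps, threads):
    """Divide the total SAPS from a benchmark certificate by the number of threads."""
    if threads <= 0:
        raise ValueError("threads must be positive")
    return total_saps / threads


if __name__ == "__main__":
    # Placeholder inputs, NOT real benchmark results.
    examples = (
        ("2 socket server", 40_000, 32),
        ("4 socket server", 70_000, 80),
    )
    for name, total_saps, threads in examples:
        print(f"{name}: {saps_per_thread(total_saps, threads):,.0f} SAPS/thread")
```

Because SAP work processes are single threaded, the SAPS/thread value is the more relevant number when comparing servers for use as SAP application servers.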

Q5. Servers with very large amounts of physical memory are much more susceptible to memory errors and corruption. Is it true that the chances of faulty memory chips corrupting a database increase greatly on servers with 2TB, 4TB or more RAM?

A5. Yes, it is true that servers with huge amounts of physical RAM have a much higher chance of memory errors.

Such memory errors have many causes, but the majority of the time the cause is:

(A) Manufacturing faults in memory modules (DIMMs) or attached controllers and interfaces

(B) Memory contents are altered in “Single Event Upsets” – these are radiation induced changes in memory contents

Intel based servers and Windows 2012 have sophisticated tools for detecting problem (A) – manufacturing errors. Faulting memory modules can be deactivated and a warning issued in the server BIOS and Windows Event Log.

The problem with “SEU”, problem (B) is much more difficult to deal with as there is no way to prevent background radiation from corrupting memory other than to locate servers behind lead shields or utilize expensive technologies used in satellites. The solution is therefore to detect and contain such errors. The technologies to do this are not new and have existed for some years. It is only in the last 4-5 years that these features have been available on commodity hardware.

Fortunately Windows 2012 and SQL Server 2012 have functionalities that are seen on large UNIX servers and Mainframes. Videos showing this technology on the HP DL980 are below:

With Hyper-V: https://h20324.www2.hp.com/SDP/Content/ContentDetails.aspx?ID=3432

Native OS: https://h20324.www2.hp.com/SDP/Content/ContentDetails.aspx?ID=3397

It is therefore an essential criterion for any “Enterprise Grade” in-memory DBMS to fully support and integrate these technologies at every layer of the solution from the system board up to the DBMS buffer pool. Unless this condition is met and demonstrated then the DBMS is not suitable for large mission critical workloads.

Q6. Does SQL Server always require the amount of cache to be equal to or greater than the size of the database(s)?

A6. No. SQL Server disk block caching algorithms in combination with highly effective PAGE compression and large amounts of physical RAM dramatically improve database request performance. SQL Server stores database pages in memory in compressed format making the disk-block caching mechanism more efficient. Near zero physical read IO is observed whenever the amount of SQL buffer cache is greater than 25%-50% of the total DB size.

Based on extensive workload testing and analysis with lab and real customer systems it has been observed that:

(A) Many DB tables display absolutely no benefit from being latchless memory resident (B) A small proportion of DB tables will greatly benefit from being latchless memory resident.

The design approach for SQL Server is therefore based on the observable facts that only a small proportion of tables in a database benefit from latchless “in-memory” structures. Therefore the next version of SQL Server includes an analysis tool [AMAR (Analyse Migrate and Report)] to analyse a workload and determine which tables benefit from converting from latched tables into latchless in-memory structures. The analysis tool will also include a further step that allows a query to be natively compiled into a binary further improving query performance.

In conclusion it should be noted that SQL Server differs from other “in-memory” DBMS technologies quite significantly in that there will be no hard requirement to load 100% of the database into physical RAM. This is due to the fact that only a smaller number of critical tables benefit from being held as a latchless in memory structure while most of the tables in OLTP and OLAP databases do not show any benefit. Unlike other "in-memory" DBMS SQL Server is licensed by cores and not the amount of RAM. As DB sizes grow SQL Server unit cost decreases. Some in-memory solutions actually require more physical memory than the size of the database and will abort when they run out of RAM. While RAM is inexpensive, some solutions are licensed by physical RAM.

A fixed requirement to load the entire database into memory is seen as a scalability constraint as customer databases continue to grow ever larger. SQL Server will provide excellent performance for the majority of the DB tables that do not benefit from latchless in-memory technologies via highly efficient disk-block caching leveraging the vast amounts of low cost RAM available in commodity servers. The smaller number of tables in a DB that do benefit from latchless in-memory technologies can be readily identified and converted to latchless in-memory objects selectively.

Q7. How to determine how much “Latching” is happening on a SAP on SQL Server system?

A7. SQL Server has a comprehensive monitoring framework exposed in Windows Performance Monitor. Open Perfmon.exe and create a Performance Counter Log and include CPU, Memory (free memory, page in/sec and page out/sec) and these counters for Latching. Leave the monitor recording data every 15 seconds for a week or so.

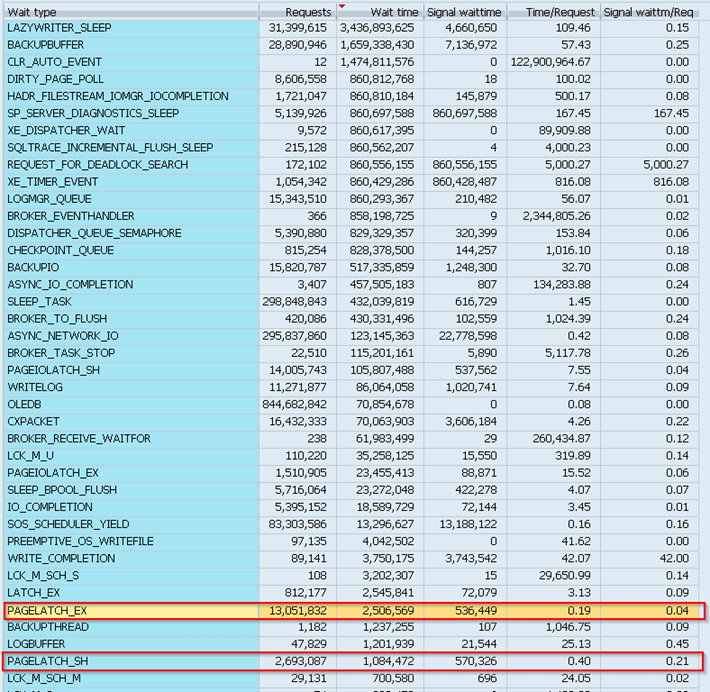

It is also possible to quickly quantify how much latching has been occurring on a system by adding up the PAGELATCH_EX and PAGELATCH_SH wait times in ST04 -> Performance -> Wait Events. Note: PAGEIOLATCH_EX/SH is a wait counter for disk performance and is not directly related to latching.

As seen in the screenshot below the “total wait” time for latching is tiny in comparison to the other causes of wait such as ASYNC_NETWORK_IO which is the time taken to transmit data from the DB server to the app server over the network. The results below would be typical of most SAP systems and show that latching is an insignificant source of latency on a SAP system.
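Once the wait times have been collected, for example from ST04 or from the SQL Server view sys.dm_os_wait_stats, the share of latch waits can be computed as follows. This is a minimal sketch: the function name is an assumption and the sample wait times are invented for illustration, not taken from a real system.

```python
# Wait types that represent page latching. PAGEIOLATCH_* is deliberately
# excluded because it measures disk IO rather than latching.
LATCH_WAIT_TYPES = ("PAGELATCH_EX", "PAGELATCH_SH")


def latch_wait_share(wait_times_ms):
    """Fraction of the total wait time that is spent on page latches."""
    total = sum(wait_times_ms.values())
    if total == 0:
        return 0.0
    latch = sum(wait_times_ms.get(name, 0) for name in LATCH_WAIT_TYPES)
    return latch / total


if __name__ == "__main__":
    # Invented sample values in milliseconds, for illustration only.
    sample_waits = {
        "PAGELATCH_EX": 1_200,
        "PAGELATCH_SH": 800,
        "PAGEIOLATCH_SH": 50_000,
        "WRITELOG": 48_000,
        "ASYNC_NETWORK_IO": 900_000,
    }
    print(f"latch waits: {latch_wait_share(sample_waits):.2%} of total wait time")
```

A very small percentage indicates that latching is not a meaningful source of latency on that system.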

Customers are welcome to post any questions about the results of their performance traces or waitstats in this blog post. This process will provide the most accurate scientific proof about the impact of disk-block caching vs. latchless in-memory performance for SAP systems.

This document contains more information: Diagnosing and Resolving Latch Contention on SQL Server

The script attached to this blog will also help pinpoint latching

Links:

More information on Intel RAS features and SEU

More information on Column Store

https://blogs.msdn.com/b/saponsqlserver/archive/2012/12/17/sql-server-column-store-updated-sap-bw-code.aspx

https://social.technet.microsoft.com/wiki/contents/articles/3540.sql-server-columnstore-index-faq.aspx

https://www.mssqltips.com/sqlservertip/2586/sql-server-2012-column-store-index-example/

https://en.wikipedia.org/wiki/Column-oriented_DBMS

https://www.databasejournal.com/features/mssql/understanding-new-column-store-index-of-sql-server-2012.html

https://blog.kejser.org/2012/07/04/how-do-column-stores-work/

https://msdn.microsoft.com/en-us/library/gg492088.aspx

More information on Latching

https://sqlserverperformance.wordpress.com/2009/12/21/great-resource-on-sql-server-wait-types/

https://www.fusionio.com/solutions/microsoft-sql-server/ -> download kit.