AlwaysOn Part 9: AlwaysOn and Logshipping

[Edit] You can find the summary of the complete series here.

In this part we want to talk about some scenarios where AlwaysOn and Log-Shipping is getting combined. The two scenarios we want to look into particularly would be:

- Despite the fact that we are using our 3-node AlwaysOn configuration (one primary and two secondary) which followed us through multiple parts of this series already, we want to use Log-Shipping to distribute the transaction log backup files over the different nodes

- Second scenario is where AlwaysOn actually is used in the main datacenter. But out of different reasons we can’t expand the required WSFC configuration into the DR site. Reasons can read like:

- Simply not possible for the Infrastructure or staffing to maintain WSFC configurations between different sites

- Can’t leverage the target server in the DR site in the WSFC configuration since it is part of another WSFC configuration already

- The SLAs on RPO, RTO force a fast recovery from manual error which only can be realized with delayed recovery restoring the transaction log backups on one instance of the HA/DR strategy

Hence the target server in the DR site needs to get supplied by Log-Shipping with the transaction log backups performed in the main site on the current primary.

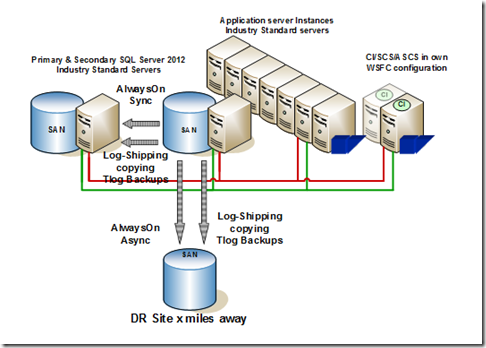

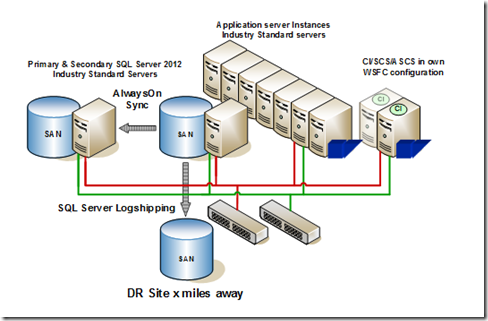

See the scenarios below graphically displayed

Graphic 1: Using Log-Shipping to distribute Tlog backups on the different nodes of the AlwaysOn configuration

Graphic 2: Using a combination of AlwaysOn and Log-Shipping. Supply DR site with Log-Shipping

Some considerations upfront

As clear cut as Scenario #2 is, there is some point to scenario #1 as well. In scenario #1, we already supply the DR site with the data of the main site with AlwaysOn. Nevertheless, even with such a configuration, there might occur scenarios, where one needs to restore an image of the production SAP database in relatively short time for a point-in-time recovery. Reasons could be manual failures where data got deleted and now needs to be restored. Since one doesn't know whether the infrastructure for such an activity is immediately available in the main site or DR site, it can be an advantage having the Tlog Backups and also full database backups available on both sides. Over the years we also encountered numerous cases of human errors (from deleting SQL Server data files instead of copying them to simply reformatting whole SAN volumes or reconfiguring SAN volumes) which suddenly demanded the need to restore the database to the latest possible point in time. Again one doesn't know which site this requirement comes up. In a lot of cases, in larger companies, Central Backup solutions also might apply quite some delays restoring single backups. Hence there is a point having quite a few days Tlog backups in the main site, so that those are accessible by the primary and secondary and to have those Tlog Backups in the DR site as well.

Another point one needs to make some compromises is the location one uses to store the Tlog Backups in the main site. No doubt, the best would be to have a location on the SAN Array used by the primary and another copy would be on the SAN Array used by the secondary plus then one location on the Array in the DR site. From an availability point of view, the best alternative. No doubt. However a scenario which opens up the possibility to break the Tlog Backup Chain. Here is why:

Log-Shipping is a 3-part process of the tasks:

- Perform the Tlog Backup on the primary server. This part runs on the primary server

- Copy the new Tlog Backup(s) from the primary to the secondary. This would be executed on the secondary server

- Restore the Tlog Backup(s) against the secondary. This task is performed on the secondary. Obviously in scenario #1 where we only are interested about spreading the Tlog Backup files when those are finished, this job would be disabled. However in scenario #2 it would be important to run on the instance on the DR site

Assuming that we established scenario #1 or #2 with:

- The primary in a Log-Shipping relationship with the local secondary (secondary copying Tlog Backups to local secondary) and DR site with the instance copying the Tlog Backups from the current primary to the DR site.

- Having the primary and local secondary using their own SAN Array to perform the Tlog Backups against. Means each of the replica stores the Tlog Backups executed locally.

- On the other side, we also setup Log-shipping from the current local secondary replica to the current primary and the secondary of the DR site. Does sound strange. But we did that at a time when the current local secondary was in the primary role and the current primary was in the secondary role.

The following scenario could occur:

- The primary server did execute a Tlog backup and stored it on its SAN Array

- The fact that a Tlog backup has been performed is noted in the transaction log which now got, dependent on scenario #1 or #2 replicated with AlwaysOn at least to the local secondary or to both secondary replicas (scenario #1)

- Before the Log-Shipping copy tasks of each of the secondary replicas kick in, the node with the primary goes down and a failover happens where the local secondary replica moves into primary role.

- We now obviously miss a successfully executed Tlog Backup since the primary is not accessible anymore and the backup has not been copied over completely or not at all

- We also start performing the next Tlog backup on the server in the primary role right now

- As this next backup is successful, it does get copied to the DR site.

- Trying to restore this backup immediately (scenario #2), we now will fail since the next backup after the failover in our scenario, does have a LSN which builds on top of the Tlog Backup which couldn't be copied anymore. Or we break the backup chain of the Tlog backups stored in the DR site.

There is another impact of the scenario above occurring. Assume we indeed ended up with the situation above and we get the former primary, which failed, up and running again. However that replica would now be the secondary replica. The way we setup Log-Shipping we now would enable the copy job for Log-Shipping to copy the Tlog backups from the new primary which created them in a local volume. First thing the copy job of the former primary, now secondary replica would detect is that the content of the local directory does not only miss Tlog backups which have been executed on the new primary meanwhile. But it also realizes that there is one file in its own local directory which doesn't exist on the new primary. This results in the side effect, that the COMPLETE Tlog Backup directory of the primary is getting copied again. Dependent on the Tlog Backup volume this could be many Gigabytes of data copied.

How to remedy this potential problem?

Measures one can use to minimize the risk of this issue of breaking the Tlog Chain coming up

- One measure would be to have the frequency scheduled for the copies of the backups at the same frequency (or even higher frequency like 1min) as the execution of the backup. It goes without saying that we don't want to leverage of the option to delay the copy of the backup when setting up Log-Shipping. Doing so, should cut the potential time where a Tlog backup finished and when it gets copied down to a small time. Disadvantage is that the time gap in which the scenario described above can’t be eliminated completely. Nice thing about every instance performing the backup against a volume of the ‘own’ SAN Array is that we got two copies of the Tlog backups in the main site.

- Another measure could be to execute the Tlog Backups against a share located on a clustered file server. Since most of the SAP landscape require a file server of some sort to e.g. save EDI orders or other data moved in some business processes, this file server in the main site could be sued to create a share where either the primary or the local secondary replica execute their backups against. Especially since Windows Server 2012 and its highly improved SMB protocol, such an option could become very attractive. Advantage is that we eliminated the possibility of backups being executed successfully and the backups not being accessible anymore by one of the AlwaysOn nodes going down. As we will see, this possibility still allows the nodes functioning as a secondary replica to keep a local copy. We even will have up to 3 copies of the Tlog backups in the main datacenter. One also will need to run SQL Server and SQL Agent processes in a context which does have write and read access to the share on the file server.

How does such a setup look from a Job point of view?

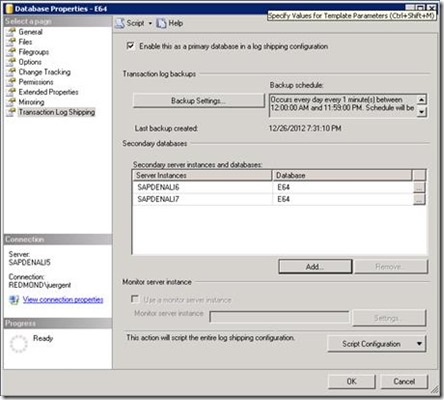

For scenario #1, the first setup step would be to go to the primary

- In Object explorer, expand User Databases, mark the SAP Database in the AG and press the right mouse key

- Choose ‘Properties’ and then chose the last item ‘Transaction Log Shipping’

- Setup the backup folders to use a file share on a file server like shown below

- Then add the secondaries and adapt the schedule of their ‘copy’ jobs accordingly to have at least the frequency of the backup job. As shown above, we scheduled to perform a backup once every minute.

- Since we want to copy, to the local secondary in the main site AND the secondary in the DR site, we add two servers as shown below in the scenario. For the two copy jobs, create local directories on the destination servers and define those as the target directory for the copy. Means the Tlog Backups are defined to be performed against the share on the file server, but then are copied locally to the secondary replica nodes.

- Pressing OK will now create the jobs on all three nodes. In this case sapdenali5 was the primary server.

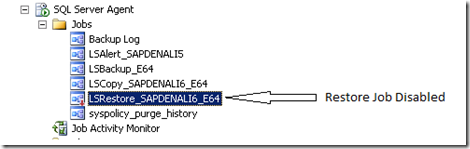

- Now go to both secondary server and DISABLE the jobs starting with LSRestore_...

- Also be aware that SQL Agent on the secondary nodes need to run in user contexts which do have read access to the file share where the backups of the Tlogs are stored by the primary

However we are only halfway done. Since we want to have the log-Shipping working as well in case of a failover between the two replicas in the main datacenter (sapdenali5 and sapdenali6 in our case), we need to setup a similar Log-Shipping configuration for the local secondary replica as well (in our case sapdenali6). This is best done in a situation where that replica is in the role of a primary. Hence a failover might be necessary. After that is done, setup a similar configuration as before.

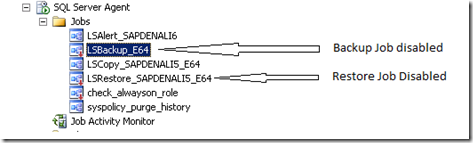

Assuming we have this all setup and our sapdenali5 replica is in the primary role, the settings of the jobs should look like:

Primary (sapdenali5):

The LSBackup_E64 job performs the Tlog backup every minute. The backups are performed against a file share (first copy of Tlog Backups).

The LSCopy_sapdenali6_E64 job which also is active, basically copies the backups which have been created back to a local share (second local copy of the Tlog backups)

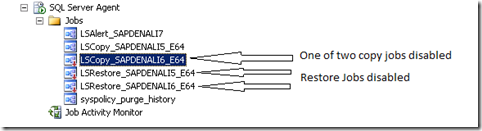

The settings of the local secondary would look like:

The only job enabled would be the Log-Shipping copy job which would copy the Tlog backups performed by the primary onto the local secondary replica.

The secondary replica of the DR site, would have these settings of jobs:

Since we got the secondary replica in the DR site supplied with AlwaysOn already, we can’t restore the Tlog Backups and hence can disable the jobs. We don't want to delete them since those could come in handy for scenarios where we eventually need to reseed that replica with a new backup from the main datacenter.

Another job which is disabled is also one of the copy jobs. In the scenario we set up, where we copy the Tlog backups in the main datacenter to a file share, it doesn't make any difference which of the nodes is running in the primary role. Both of them would create the backups on the file share. Means one of the copy jobs could be deleted as well.

As described above we basically got three copies of the Tlog backups in the main datacenter now. It is obvious that with disabling copy jobs on the primary and/or secondary, one can decide to have two or even only one copy only in the main datacenter.

Differences to scenario #2

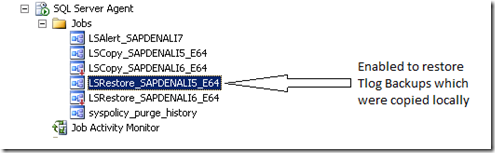

The only real difference there is to setup scenario #2 is a change in the settings of the jobs created for Log-Shipping on the node which acts as Log-Shipping destination in the DR site. Remember in scenario #2, we have no AlwaysOn to supply the secondary in DR site with the changes. This needs to be done with Log-Shipping. As a result, we need, besides copying the Tlog Backups also restore those Tlog backups on this node which is not part of the AlwaysOn configuration.

The main site settings of the jobs remain the same.

On the log-Shipping destination in the DR site the job settings would need to look like:

We only need one of the backup jobs. Hence the second backup job which got created could as well be deleted. As mentioned earlier. This scenario also allows to define a delay in restore for the Log-Shipping destination.

Now one problem stays. In case of a failover, you would need to enable or disable some scheduled tasks/jobs in a manual way. Given the fact that there often enough happened failovers which were not even noticed, this is not so nice. Hence in the next part, we’ll see how one can automate this part of the story