SAP NetWeaver and Hyper-Threading on Windows Servers : to be or not to be

Update Tue 18 Dec 2012

A small correction was made in the text below regarding the NUMA setting in WS2012 Hyper-V Manager. The topic will be explained in detail in a separate blog post focusing on NUMA.

Summary SAP NetWeaver and HT/SMT

Here are the key messages related to SAP NetWeaver and Hyper-Threading ( HT ), now called Simultaneous MultiThreading ( SMT ). They are derived from different sources as well as from internal lab tests done for the WS2012 SAP First Customer Shipment program. In addition, I incorporated very valuable input from Juergen Thomas, who has published many blogs and papers about SAP on the Microsoft platform :

1. Always keep in mind : a CPU thread is NOT equal to a core ! ( also see the walk-through section at the end )

2. Sizing based on SAPS, which hardware vendors do for certain server models, usually includes SMT. Looking at the latest published SAP SD benchmarks, one will immediately notice from the CPU information ( # processors / # cores / # threads ) that SMT was turned on. The goal is to achieve the maximum amount of SD workload. Including SMT in the sizing implicitly means that customers will turn it on.

3. To use Hyper-V on a server with more than 64 logical processors ( e.g. 40 cores with SMT turned on, i.e. 80 logical processors ), you need Windows Server 2012. Hyper-V in Windows Server 2008 ( R2 ) has a limit of 64 logical CPUs, even though Windows Server 2008 R2 itself can address 256 CPU threads in a bare-metal deployment.

4. When using the latest OS and application releases, the general recommendation regarding SMT is to always turn it on. It either helps or doesn't hurt. Turning SMT on or off requires a reboot, as it's a BIOS setting on the physical host.

5. How much SMT helps depends on the application workload. While there is a proven benefit in SAP SD benchmarks as well as in many other benchmarks, we know of customer tests where there was no difference between running a virtualized SAP application server on WS2012 Hyper-V with or without SMT. The conclusion is that the effect of SMT depends heavily on the individual customer scenario.
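The arithmetic behind items 1 and 3 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: two threads per core for Intel SMT, and the 64-logical-processor limit of Hyper-V in Windows Server 2008 ( R2 ) quoted above. The function names are hypothetical and not part of any Microsoft tool.

```python
# Limit taken from item 3 above: Hyper-V in Windows Server 2008 (R2)
# can use at most 64 logical processors on a host.
WS2008R2_HYPERV_MAX_LPS = 64


def logical_processors(cores: int, smt_enabled: bool, threads_per_core: int = 2) -> int:
    """Logical processors the OS sees: one per core, or one per SMT thread.

    Assumption: two threads per core when SMT is turned on.
    """
    return cores * (threads_per_core if smt_enabled else 1)


def needs_ws2012_for_hyperv(cores: int, smt_enabled: bool) -> bool:
    """True if the host has more LPs than WS2008 (R2) Hyper-V can use."""
    return logical_processors(cores, smt_enabled) > WS2008R2_HYPERV_MAX_LPS


# Example from item 3: a host with 40 cores.
print(logical_processors(40, smt_enabled=True))        # 80
print(needs_ws2012_for_hyperv(40, smt_enabled=True))   # True
print(needs_ws2012_for_hyperv(40, smt_enabled=False))  # False
```

Note that the 80 logical processors are still only 40 cores: doubling the LP count does not double the available CPU power.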

General Information about Hyper-Threading

Hyper-Threading ( HT ), now called Simultaneous MultiThreading ( SMT ), has been around for many years and is a well-known feature of Intel processors. A lot of information and technical descriptions can easily be found on the Internet, like this one :

https://software.intel.com/en-us/articles/performance-insights-to-intel-hyper-threading-technology/

While there were some issues in the early days, the recommendation for more recent OS and application releases is usually to have SMT turned on, as it increases the throughput achievable with an application on a single server. Nevertheless, the question about the impact of SMT on SAP NetWeaver comes up again when looking at the execution speed of a single request handled by a single CPU thread. Especially as we are pushing WS2012 Hyper-V, customers wonder what the performance characteristics are when SMT and virtualization are combined.

In this blog I try to summarize the status quo and give some guidance based on the statements, test results and experiences that are available.

Single-Thread Performance

I would personally like to separate the SMT discussion from the single-thread performance discussion. When people talk about the latter, it is usually about the trend in processor technology to increase the number of cores instead of the clock rate. In these discussions the often unspoken assumption is one thread per core. There are, of course, very good reasons for this trend. But as one can read under the following links, only applications that are able to use parallelism will fully benefit from the multi-core design. As a consequence, certain SAP batch jobs that depend on high single-thread performance might improve only a little or not at all when the underlying hardware is upgraded to the next processor version with more cores per CPU. A customer example of this effect can be found here :

https://blogs.msdn.com/b/saponsqlserver/archive/2010/01/24/performance-what-do-we-mean-in-regards-to-sap-workload.aspx

SAP NetWeaver is not a multi-threaded application. But in SAP it is of course possible to parallelize processing at the business process level. Just think of payroll parallelism in SAP.

Some basic articles about single-threaded CPU performance and multi-core processing can be found here :

https://preshing.com/20120208/a-look-back-at-single-threaded-cpu-performance

https://en.wikipedia.org/wiki/Multi-core_processor

https://iet-journals.org/archive/2012/may_vol_2_no_5/846361133715321.pdf

Could SMT hurt in some cases ?

This is, in principle, the wrong question. The correct question should be : What is the effect of SMT with different applications and different configurations or scenarios ?

Understanding these effects makes it possible to adapt and get the maximum out of an investment in a specific hardware model.

There may be some outdated messages or opinions around, based on experiences from the early days, which are no longer valid. In other cases I personally wouldn't call it an issue of SMT but rather wrong sizing or overlooking some documented restrictions. First, let's look again at a general statement :

https://software.intel.com/en-us/articles/performance-insights-to-intel-hyper-threading-technology/

"Ideal scheduling would be to place active threads on cores before scheduling on threads on the same core when maximum performance is the goal. This is best left to the operating system. All multi-threaded operating systems support Intel HT Technology, while later versions have more support for scheduling threads in the most ideal manner to maximize performance gains"

Here are some more details :

1. single-thread / single-core performance

In SAP note 1612283, section "1.1 Clock Speed", you will find the following statement :

"If you need to speed up a single transaction or report you might try to switch off Hyperthreading"

Based on some testing, I would like to differentiate this a bit further. As long as the number of running processes / threads is <= the number of cores, the OS / hypervisor should be smart enough to distribute the workload over all the cores. In this case it shouldn't make any difference whether SMT is on or off. Based on the basics of SMT as described in the Intel article named above, the expectation is that once the number of running processes / threads exceeds the number of cores, the performance/throughput of a single CPU thread decreases, depending on the load.
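The "cores first, sibling threads second" behavior described above can be illustrated with a simplified model. It is a sketch that assumes an idealized scheduler and two SMT threads per core; it does not reproduce the actual Windows or Hyper-V scheduler, and the function names are hypothetical.

```python
from typing import List


def place_threads(running: int, cores: int, threads_per_core: int = 2) -> List[int]:
    """Return the number of busy SMT threads per core under idealized scheduling.

    Assumption: every core gets one running thread before any core gets a
    second one (round-robin). Time-slicing beyond the number of logical
    processors is not modeled.
    """
    if running > cores * threads_per_core:
        raise ValueError("more running threads than logical processors")
    busy = [0] * cores
    for i in range(running):
        busy[i % cores] += 1  # fill idle cores first, then sibling threads
    return busy


def cores_sharing_threads(running: int, cores: int, threads_per_core: int = 2) -> int:
    """Count cores on which more than one SMT thread is busy."""
    return sum(1 for n in place_threads(running, cores, threads_per_core) if n > 1)


for running in (2, 4, 6, 8):
    print(running, place_threads(running, cores=4), cores_sharing_threads(running, cores=4))
```

With 4 cores, up to 4 running threads leave every core with a single busy thread, so SMT makes no difference. With 6 running threads, 2 cores have to run both of their SMT threads, which is the point at which the single-thread performance of those threads is expected to drop.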

2. parallelism

From an OS perspective, one shouldn't see any major issues with SMT anymore. It looks different, though, when it comes to the application. One potential issue could arise if an application doesn't realize that the available logical CPUs are mapped to SMT threads and not to cores. This could lead to wrong assumptions. Here is an example from SQL Server :

https://support.microsoft.com/kb/2023536

"For servers that have hyper-threading enabled, the max degree of parallelism value should not exceed the number of physical processors"

( in this article the term "processors" refers to physical cores )

This is related to the two items above. Parallelism at the SQL statement level means that the SQL Server optimizer expects all logical CPUs to be of the same type, i.e. equally powerful. The important question is whether the result is just not as fast as it would be with as many cores as logical CPUs, or whether it actually becomes slower than without SMT.
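Applied to SQL Server, the KB guidance quoted above amounts to capping max degree of parallelism at the number of physical cores rather than the number of logical CPUs. The helper below is a hypothetical sketch of that rule, not a SQL Server API. It assumes the SQL Server convention that a MAXDOP value of 0 means "use all available processors", which with SMT turned on would be every logical CPU.

```python
def capped_maxdop(requested: int, physical_cores: int) -> int:
    """Cap a requested MAXDOP value at the number of physical cores.

    Hypothetical helper illustrating the KB 2023536 guidance. A value of 0
    is treated as "all available processors", i.e. all logical CPUs.
    """
    if requested < 0 or physical_cores < 1:
        raise ValueError("invalid MAXDOP value or core count")
    if requested == 0:
        # 0 would let a query use every logical CPU, including SMT siblings
        return physical_cores
    return min(requested, physical_cores)


# Example: a host with 8 cores and, due to SMT, 16 logical processors.
print(capped_maxdop(0, physical_cores=8))   # 8
print(capped_maxdop(16, physical_cores=8))  # 8
print(capped_maxdop(4, physical_cores=8))   # 4
```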

3. virtualization

Another topic is running VMs on Hyper-V with SMT turned on for the underlying physical host. Again, this is not different from the items mentioned before. Inside a VM, an application might not be aware of the nature of a virtual CPU, and this is not just about SMT. Depending on the configuration ( e.g. over-commitment ) and the capabilities of the OS/hypervisor, a virtual processor may correspond to only a "fraction" of a real physical CPU.

Internal lab tests on WS2012 Hyper-V have confirmed the statement I quoted at the beginning of this section : as long as there are enough cores available, the workload will be optimally distributed. A good way to show this is to increase the number of virtual CPUs inside a VM step by step while monitoring the CPU load on the host.

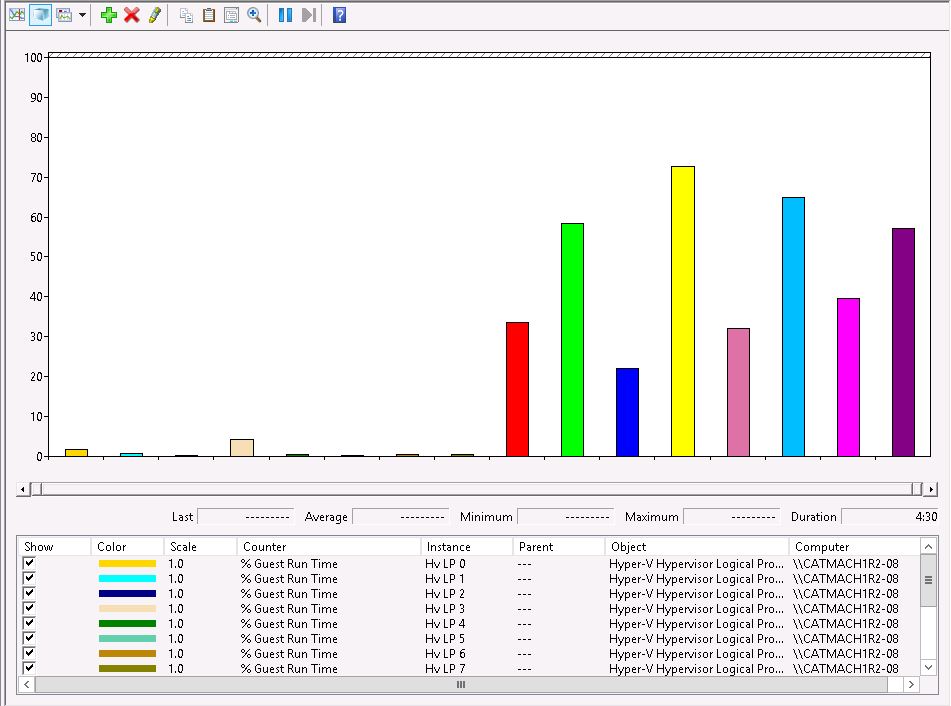

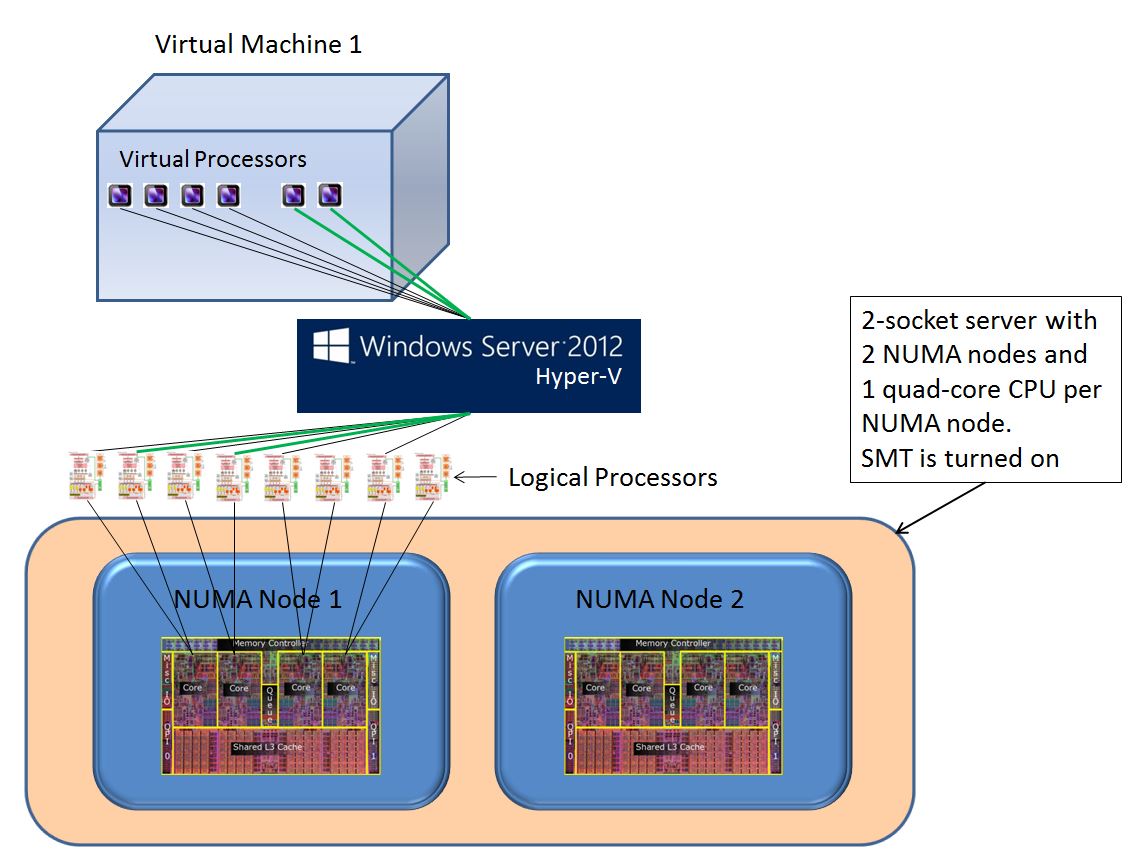

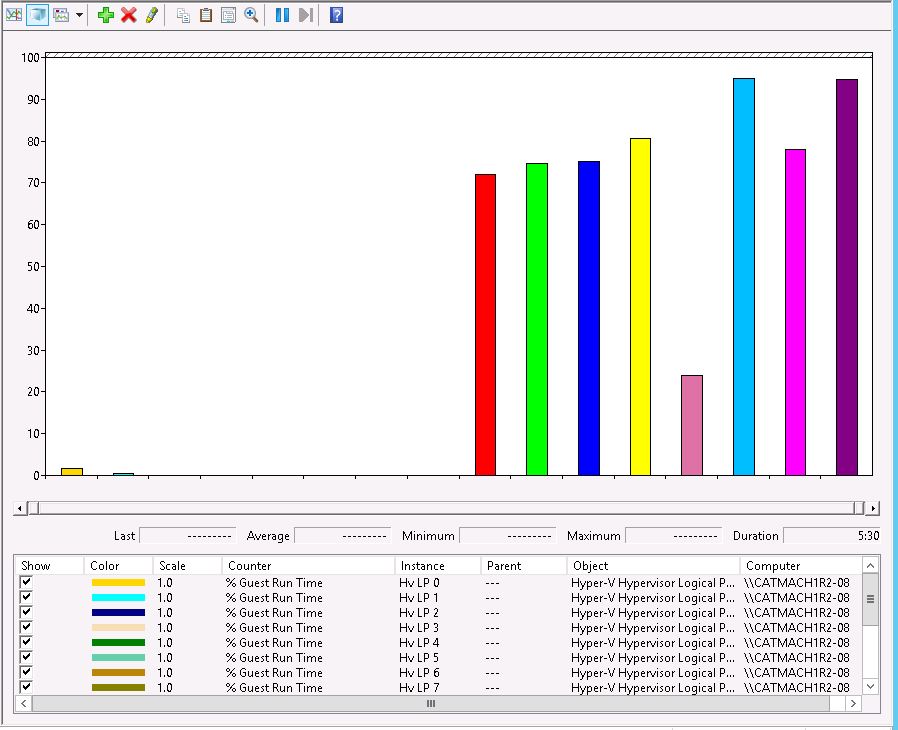

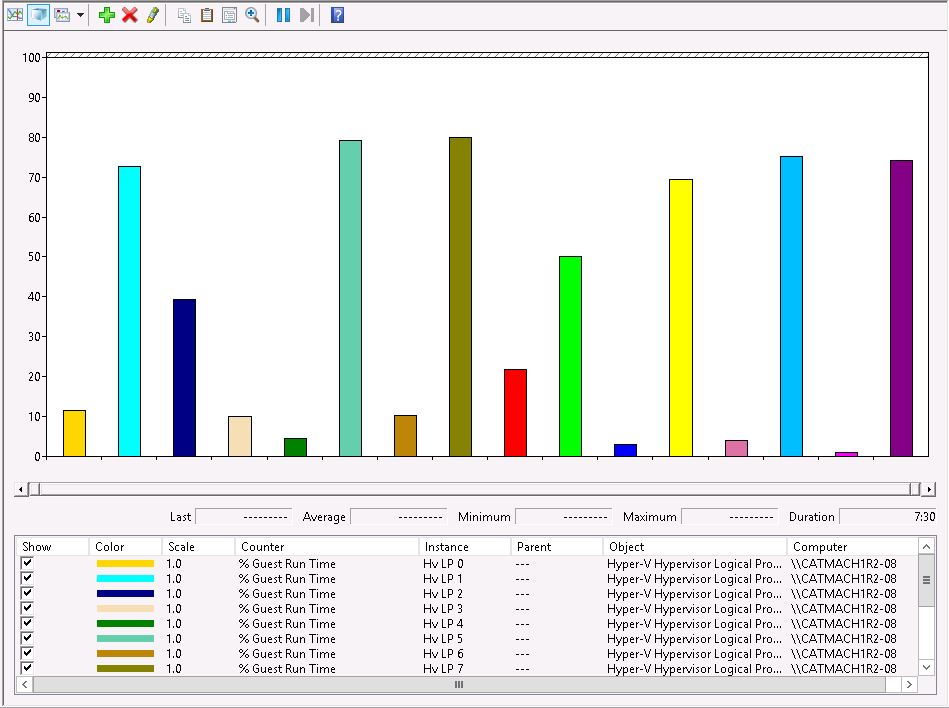

The CPU load screenshots further down were taken from perfmon on a WS2012 host with SMT turned on. The server had 8 cores and, due to SMT, 16 logical processors. It also had two NUMA nodes with 4 cores / 8 threads each. The SAP test running inside a VM ( guest OS Windows 2008 R2 ) was completely CPU-bound. The scenario looked like this :

a, the test started with two virtual processors ( VPs ), and the workload was increased until both VPs were 100% busy

b, then the number of VPs was increased to four to see whether it was possible to double the workload. Scalability was very good in this case, because the workload could still be distributed over all four cores of one single NUMA node of the host server

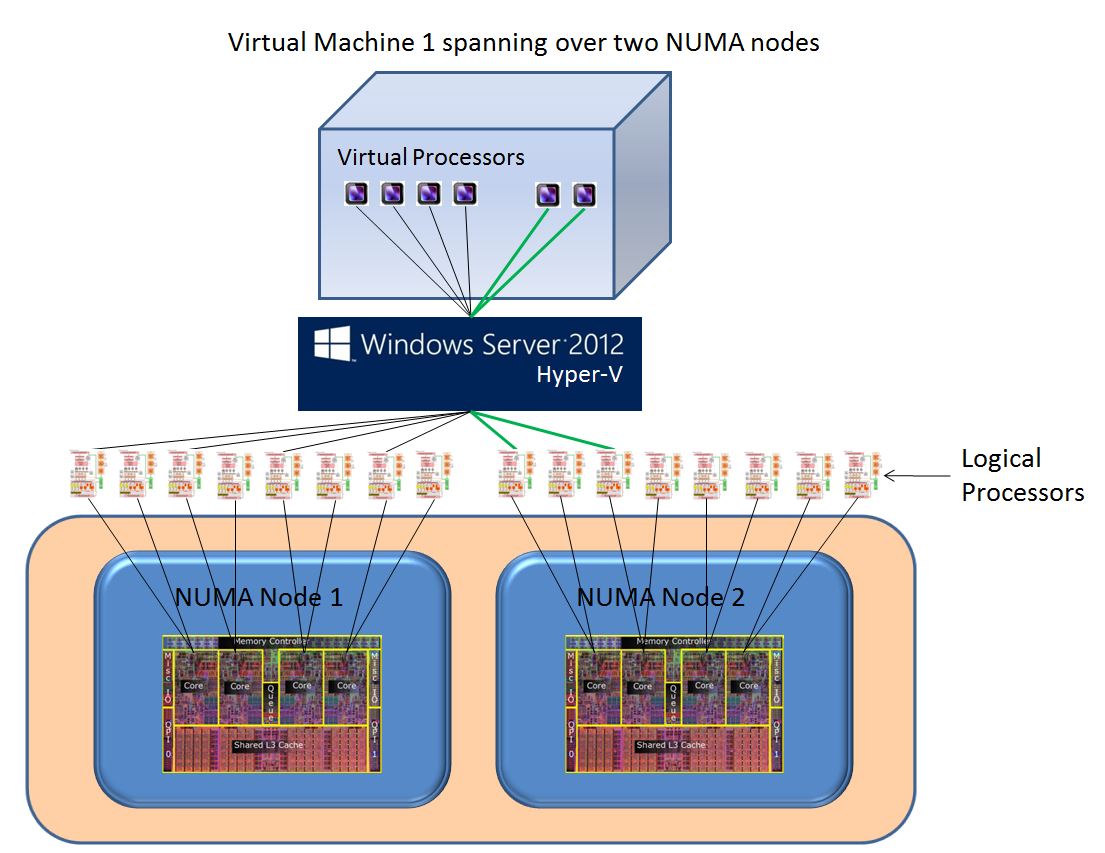

c, but going from 4 VPs to 6 VPs changed the picture. WS2012 Hyper-V has improved NUMA support and by default sets the maximum number of VPs per NUMA node to the number of logical processors ( LPs ) of the NUMA node ( 8 on the test hardware ). As 6 VPs is still < 8, the whole workload of the VM still ended up on one single NUMA node. On the other hand, due to SMT it was no longer possible to achieve an almost 1:1 VP-to-physical-core mapping. In this situation you will still see improved throughput compared to four VPs, but it's far from the linear scalability we achieved when going from 2 VPs to 4 VPs.

Keep in mind that it's NOT possible to configure processor affinity on Hyper-V to achieve a fixed VP-to-physical-core mapping. However, setting the "reserve" value in WS2012 Hyper-V Manager to 100 has basically the same effect. See also the blog from Ben Armstrong :

d, the next step was to adapt the setting for the VM in Hyper-V Manager that defines the maximum number of VPs per NUMA node. Setting this value to four forced Hyper-V to distribute the workload over two NUMA nodes when using 6 VPs in the VM. Now it was again possible to achieve basically a 1:1 VP-to-physical-core mapping. Scalability looked fine, and because the workload was completely CPU-bound, the disadvantage of potentially slower memory access didn't matter. The advantage of getting more CPU power outweighed the memory access penalty by far.

The following pictures and screenshots visualize the four items above :

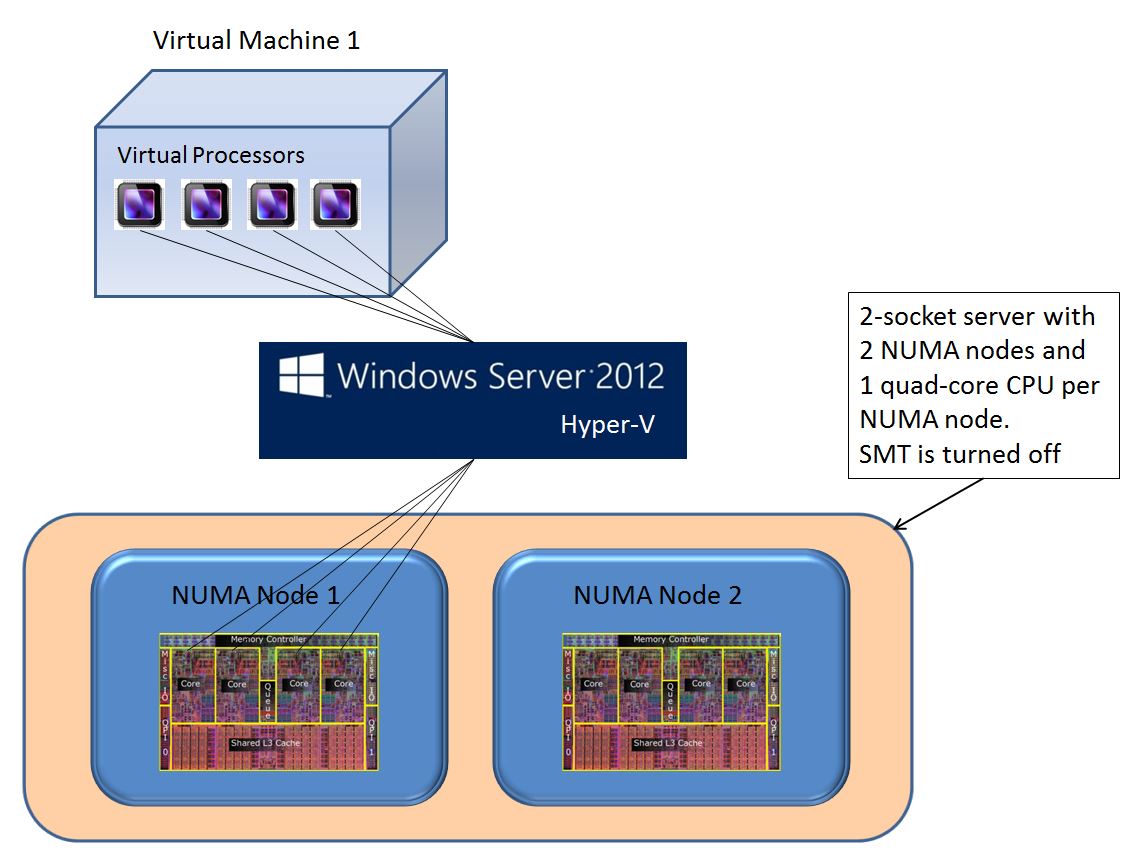

Figure 1 : as long as SMT is turned off and a VM doesn't span multiple NUMA nodes, the virtual processors will be mapped to the cores of one single NUMA node on the physical host

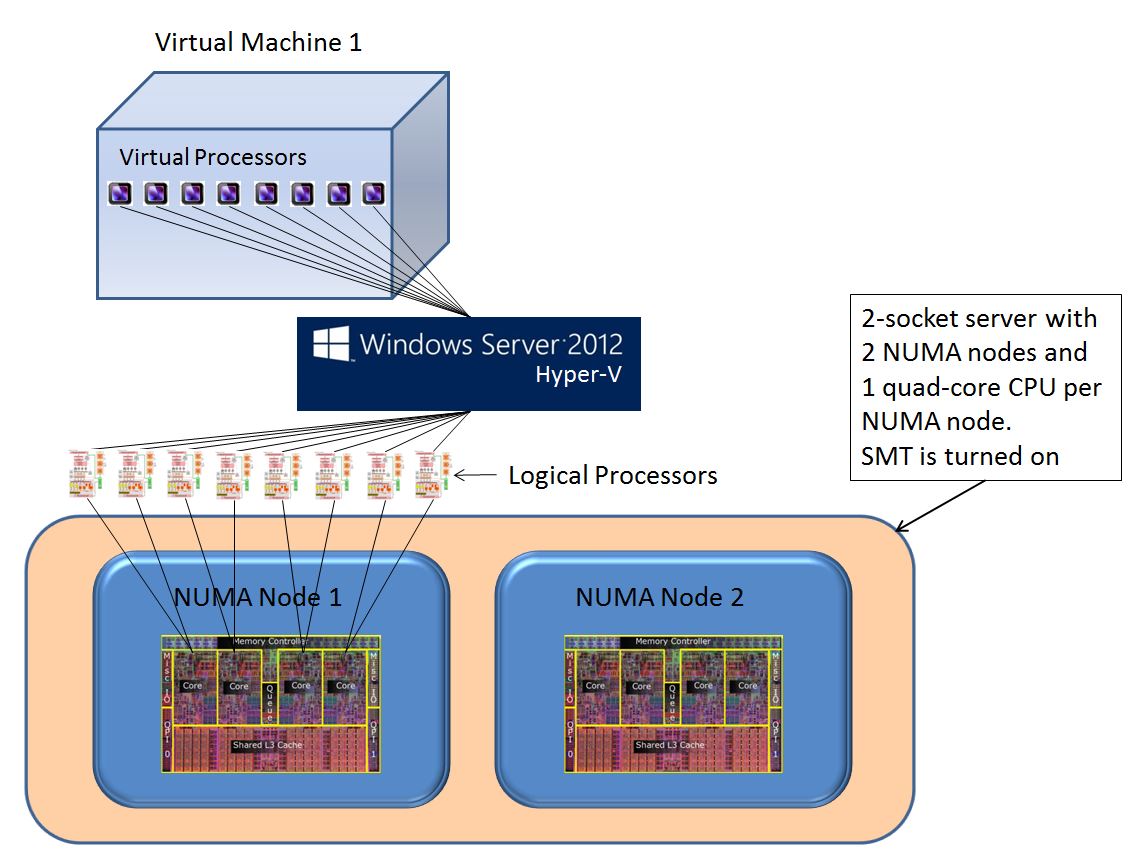

Figure 2 : once SMT is turned on, the virtual processors of a VM will be mapped to logical processors on the physical host. By default, WS2012 Hyper-V Manager sets the maximum number of virtual processors per NUMA node according to the hardware layout. In this example, that means a maximum of 8 virtual processors per NUMA node

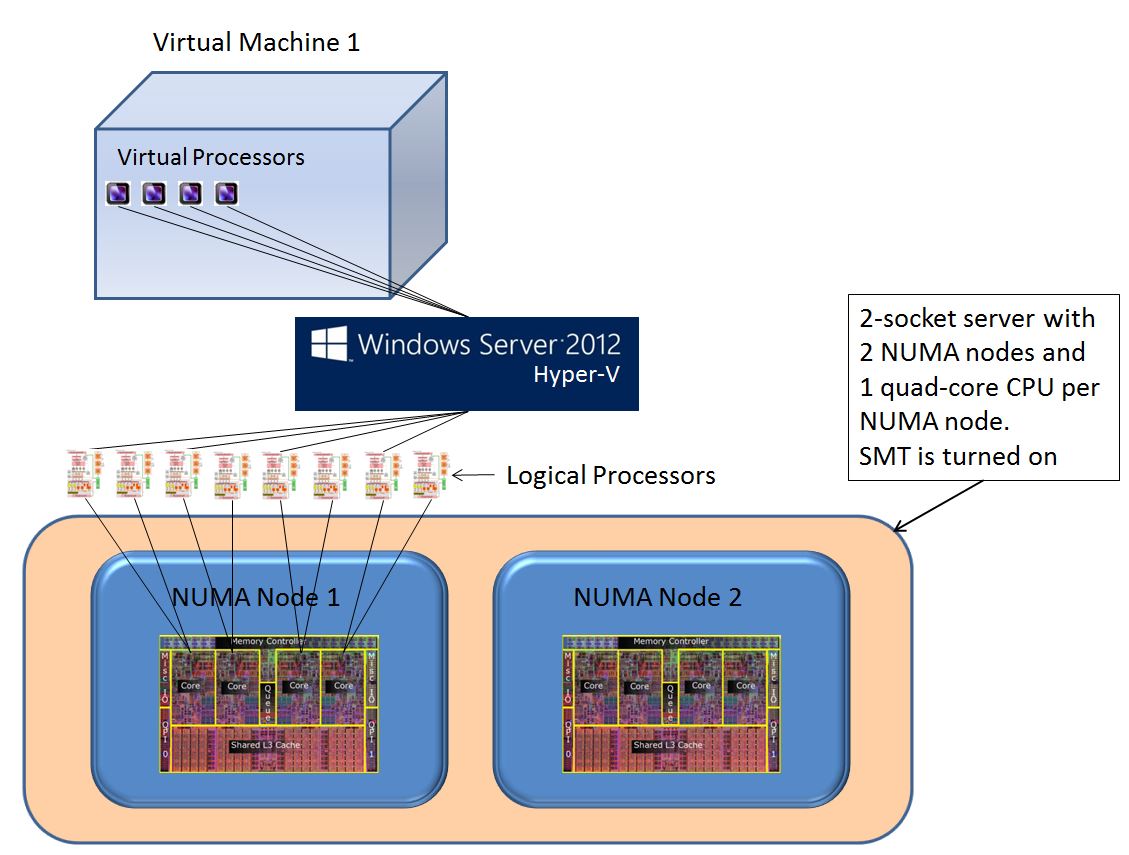

Figure 3 : configuring 4 virtual processors in a VM while SMT is turned on means that these 4 VPs will be mapped to 8 logical processors on the physical host. This allows the OS/hypervisor to make sure that the workload is distributed over all 4 physical cores in an optimal way, similar to having SMT turned off

Figure 4 : perfmon showed that the workload keeping four virtual processors busy inside a VM was distributed over eight logical processors on four cores within one NUMA node on the physical host

Figure 5 : what happens when adding two more virtual processors inside the single VM ? Because 6 VPs is still less than the default maximum of 8 VPs per NUMA node, the whole workload will still be mapped to the 8 logical processors that correspond to the 4 cores within one NUMA node

Figure 6 : increasing the workload the same way as before ( when going from two to four virtual processors ) by configuring six VPs inside the VM resulted in a super-busy single NUMA node with almost all threads at 100%. This means each physical core of this NUMA node had to make much heavier use of both SMT threads it presents to the OS/hypervisor. Therefore the scalability when going from four to six VPs looked much worse than when going from two to four VPs.

Figure 7 : the default setting for the number of virtual processors per NUMA node in WS2012 Hyper-V Manager can be changed. Setting the number low enough forces the hypervisor to use more than one NUMA node

Figure 8 : changing the "max VPs per NUMA node" setting in Hyper-V Manager ( 2012 ) to four forced Hyper-V to use the second NUMA node. This again allowed basically a 1:1 VP-to-physical-core mapping, and scalability looked fine again
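The scenarios a to d and the figures above can be reduced to a small counting model. This is a deliberately simplified sketch based only on the lab observations described here: it assumes the VM's VPs are spread over ceil( VPs / max VPs per NUMA node ) nodes, and that a 1:1 VP-to-core mapping is possible only while the VPs do not outnumber the physical cores of those nodes. It does not model the real Hyper-V scheduler, memory locality or the "reserve" setting, and all names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Host:
    """Layout of the lab server: 2 NUMA nodes x 4 cores, SMT on (2 threads/core)."""
    numa_nodes: int = 2
    cores_per_node: int = 4
    threads_per_core: int = 2

    @property
    def lps_per_node(self) -> int:
        return self.cores_per_node * self.threads_per_core


def placement(host: Host, vps: int, max_vps_per_node: Optional[int] = None) -> dict:
    """Simplified model of where a VM's virtual processors end up."""
    if max_vps_per_node is None:
        # Default described above for WS2012 Hyper-V Manager: all LPs of one node
        max_vps_per_node = host.lps_per_node
    nodes = math.ceil(vps / max_vps_per_node)
    if nodes > host.numa_nodes:
        raise ValueError("VM needs more NUMA nodes than the host has")
    if vps > nodes * host.lps_per_node:
        raise ValueError("more VPs than logical processors in the nodes used")
    cores = nodes * host.cores_per_node
    return {
        "numa_nodes": nodes,
        "physical_cores": cores,
        "one_vp_per_core": vps <= cores,
        # With 2 threads per core: cores that must run both SMT threads
        "cores_running_two_threads": max(0, vps - cores),
    }


host = Host()
scenarios = [("a", 2, None), ("b", 4, None), ("c", 6, None), ("d", 6, 4)]
for label, vps, max_per_node in scenarios:
    print(label, placement(host, vps, max_per_node))
```

For the test host, the model yields one NUMA node with a 1:1 mapping for scenarios a and b, one NUMA node with two cores running both SMT threads for scenario c, and two NUMA nodes with a 1:1 mapping again for scenario d, in line with what perfmon showed.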

Conclusion :

The references as well as the experiences shown make it clear that the effects of SMT have to be taken into account when sizing and configuring SAP deployments, and that expectations and SLAs should be set accordingly.

It has been proven that with SMT configured on a Hyper-V host or on a bare-metal SAP deployment, the overall throughput of a given server increases, and so does the ratio of throughput to power consumption. Both are usually the goals we follow when specifying hardware configurations for SAP deployments.

When using SMT on Hyper-V hosts, one clearly needs to define the goals of deploying SAP components in VMs. If the goal is again to maximize the available capacity of the servers, then enabling SMT is the way to go. This means deploying as many VPs as there are logical processors on the host server and accepting that there might be performance variations, depending on the load across all VMs or on whether a VM has more VPs than there are physical cores in one NUMA node of the host server. SLAs towards the business units would then take such variations into account.
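When following the capacity-oriented approach described above, a simple review of the VP layout can flag where performance variations are to be expected. The sketch below is hypothetical: the VM names and VP counts are made-up examples, and it only compares configured VPs with the host's logical processors and with the physical cores of one NUMA node, assuming two SMT threads per core.

```python
from typing import Dict


def review_vp_layout(vm_vps: Dict[str, int], numa_nodes: int,
                     cores_per_node: int, threads_per_core: int = 2) -> dict:
    """Compare configured VPs with the host's LPs and per-node core count."""
    lps = numa_nodes * cores_per_node * threads_per_core
    total_vps = sum(vm_vps.values())
    return {
        "logical_processors": lps,
        "total_vps": total_vps,
        # 1.0 means as many VPs as logical processors (the capacity approach)
        "vp_to_lp_ratio": total_vps / lps,
        # VMs that cannot get a 1:1 VP-to-core mapping inside one NUMA node
        "vms_wider_than_numa_node_cores": sorted(
            name for name, vps in vm_vps.items() if vps > cores_per_node
        ),
    }


# Hypothetical VMs on a host like the lab server (2 NUMA nodes x 4 cores, SMT on)
print(review_vp_layout({"sap-app1": 6, "sap-app2": 4, "sap-app3": 6},
                       numa_nodes=2, cores_per_node=4))
```

A ratio of 1.0 together with VMs listed as wider than a NUMA node's cores is exactly the situation in which the SLAs should allow for load-dependent performance variations.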

Walk-through TTHS - Tray Table Hyper-Seating

One thing that is repeated again and again in all the articles about SMT is that a CPU thread is NOT equal to a core. To visualize this point and make it easy to remember, I would like to compare SMT with TTHS - Tray Table Hyper-Seating, as shown in the following six pictures :

Figure 1 : you have four comfortable seats and four passengers. Everyone is happy.

Figure 2 : now you want to get more than four passengers into the car, and the idea is to introduce TTHS - Tray Table Hyper-Seating. This allows two passengers to share one seat. But it's pretty obvious that it's not so comfortable anymore. In particular, one of the two passengers cannot enjoy the cozy seat surface.

Figure 3 : therefore the driver should be smart enough to let passengers enjoy the cozy seat surface as long as seats are available, despite the fact that TTHS is turned on

Figure 4 : at some point, though, when you want to put six passengers into the 4-seat car, two of them have to take the TTHS spots. This is when issues might arise

Figure 5 : of course one could turn TTHS off again and share two seats the traditional way. While this might work too, it's very obvious that it's not perfect

Figure 6 : conclusion : if it's a hard requirement that every passenger has his own seat to fully enjoy the cozy seat surface, then there is no other way than to take a different car with an appropriate number of seats